Note

Access to this page requires authorization. You can try signing in or changing directories.

Access to this page requires authorization. You can try changing directories.

In this article, you can learn how to read data and metadata and evaluate measures in semantic models using the semantic link Spark native connector in Microsoft Fabric. You will also learn how to write data that semantic models can consume.

Prerequisites

Get a Microsoft Fabric subscription. Or, sign up for a free Microsoft Fabric trial.

Sign in to Microsoft Fabric.

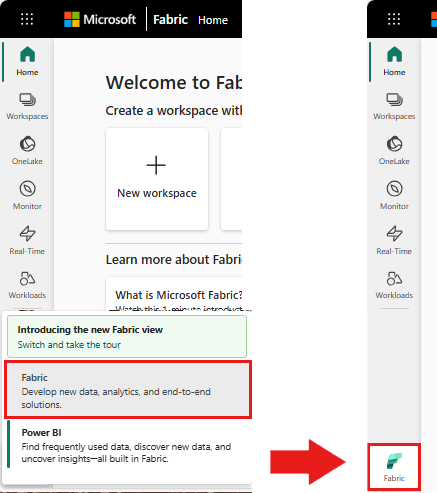

Use the experience switcher on the bottom left side of your home page to switch to Fabric.

Go to the Data Science experience in Microsoft Fabric.

- From the left pane, select Workloads.

- Select Data Science.

Create a new notebook to copy/paste code into cells.

-

For Spark 3.4 and above, Semantic link is available in the default runtime when using Fabric, and there's no need to install it. If you're using Spark 3.3 or below, or if you want to update to the most recent version of Semantic Link, you can run the command:

python %pip install -U semantic-link Download the Customer Profitability Sample.pbix semantic model from the datasets folder of the fabric-samples repository, and save the semantic model locally.

Upload the semantic model into your workspace

In this article, we use the Customer Profitability Sample.pbix semantic model. This semantic model references a company manufacturing marketing materials and contains data about products, customers, and corresponding revenue for various business units.

- From the left pane, select Workspaces and then select the name of your workspace to open it.

- Select Import > Report or Paginated Report > From this computer and select the Customer Profitability Sample.pbix semantic model.

Once the upload is done, your workspace has three new artifacts: a Power BI report, a dashboard, and a semantic model named Customer Profitability Sample. You use this semantic model for the steps in this article.

Read and write data, using Spark in Python, R, SQL, and Scala

By default, the workspace used to access semantic models is:

- the workspace of the attached Lakehouse or

- the workspace of the notebook, if no Lakehouse is attached.

Microsoft Fabric exposes all tables from all semantic models in the workspace as Spark tables. All Spark SQL commands can be executed in Python, R, and Scala. The semantic link Spark native connector supports push-down of Spark predicates to the Power BI engine.

Tip

Since Power BI tables and measures are exposed as regular Spark tables, they can be joined with other Spark data sources in a single query.

List tables of all semantic models in the workspace, using PySpark.

df = spark.sql("SHOW TABLES FROM pbi") display(df)Retrieve the data from the Customer table in the Customer Profitability Sample semantic model, using SparkR.

Note

Retrieving tables is subject to strict limitations (see Read Limitations) and the results might be incomplete. Use aggregate pushdown to reduce the amount of data transferred. The supported aggregates are: COUNT, SUM, AVG, MIN, and MAX.

%%sparkr df = sql("SELECT * FROM pbi.`Customer Profitability Sample`.Customer") display(df)Power BI measures are available through the virtual table _Metrics. The following query computes the total revenue and revenue budget by region and industry.

%%sql SELECT `Customer[Country/Region]`, `Industry[Industry]`, AVG(`Total Revenue`), AVG(`Revenue Budget`) FROM pbi.`Customer Profitability Sample`.`_Metrics` WHERE `Customer[State]` in ('CA', 'WA') GROUP BY `Customer[Country/Region]`, `Industry[Industry]`Inspect available measures and dimensions, using Spark schema.

spark.table("pbi.`Customer Profitability Sample`._Metrics").printSchema()Save the data as a delta table to your Lakehouse.

delta_table_path = "<your delta table path>" #fill in your delta table path df.write.format("delta").mode("overwrite").save(delta_table_path)

Read-access limitations

The read access APIs have the following limitations:

- Queries running longer than 10s in Analysis Service are not supported (Indication inside Spark: "java.net.SocketTimeoutException: PowerBI service comm failed ")

- Power BI table access using Spark SQL is subject to Power BI backend limitations.

- Predicate pushdown for Spark _Metrics queries is limited to a single IN expression and requires at least two elements. Extra IN expressions and unsupported predicates are evaluated in Spark after data transfer.

- Predicate pushdown for Power BI tables accessed using Spark SQL doesn't support the following expressions:

- The Spark session must be restarted to make new semantic models accessible in Spark SQL.