Hi Janarthanan S,

Thanks for the question. You can follow the steps below to create a simple Q&A chat application on private data using LangChain with Azure OpenAI Service:

Before getting started with LangChain and Azure OpenAI Service, make sure you have the following prerequisites:

• An active Azure subscription

• Access granted to Azure OpenAI in the desired Azure subscription (you can request access at https://aka.ms/oai/access).

• An Azure OpenAI resource with a model deployed.

• Python 3.8.1 or higher (the LangChain version used below, 0.0.240, does not support Python 3.7)

• LangChain library installed (you can do so via pip install langchain)

Steps to create:

First, create a .env file and add your Azure OpenAI Service details:

OPENAI_API_KEY=xxxxxx

OPENAI_API_BASE=https://xxxxxxxx.openai.azure.com/

OPENAI_API_VERSION=2023-05-15

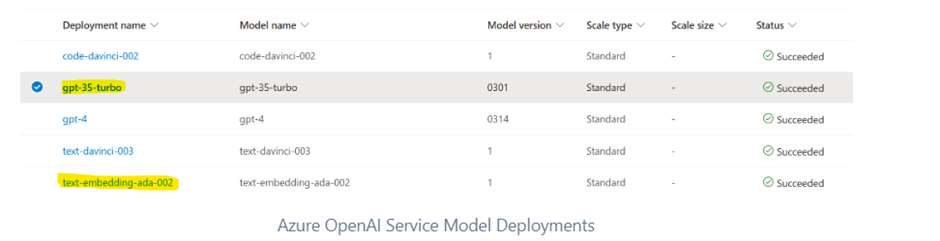
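Before going further, it can help to confirm that all three settings are actually present. The snippet below is only a minimal sketch: missing_settings is a hypothetical helper (not part of LangChain or openai), and it assumes the variable names from the .env file above.

```python
import os

# Variable names taken from the .env file above.
REQUIRED_SETTINGS = ("OPENAI_API_KEY", "OPENAI_API_BASE", "OPENAI_API_VERSION")


def missing_settings(env):
    """Return the required setting names that are absent or blank in `env`.

    Hypothetical helper for illustration; `env` is any dict-like mapping.
    """
    return [name for name in REQUIRED_SETTINGS if not env.get(name, "").strip()]


if __name__ == "__main__":
    # In the real script, call load_dotenv() first so that os.environ
    # already contains the values from .env.
    missing = missing_settings(os.environ)
    if missing:
        print("Missing settings:", ", ".join(missing))
    else:
        print("All Azure OpenAI settings are present.")
```

If anything is reported as missing, check that the .env file sits in the directory you run the script from.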

Next, make sure that you have gpt-35-turbo and text-embedding-ada-002 deployed, and that each deployment uses the same name as the model itself.

Let’s install the latest versions of openai and langchain via pip:

pip install openai --upgrade

pip install langchain --upgrade

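We’re using openai==0.27.8 and langchain==0.0.240. Later releases of both libraries changed these APIs, so pin these versions if the code below fails. The code also needs python-dotenv, faiss-cpu, and tiktoken (pip install python-dotenv faiss-cpu tiktoken).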

Now, let’s initialize our Azure OpenAI Service connection and create the LangChain objects:

import os

import openai

from dotenv import load_dotenv

from langchain.chat_models import AzureChatOpenAI

from langchain.embeddings import OpenAIEmbeddings

# Load environment variables (set OPENAI_API_KEY, OPENAI_API_BASE, and OPENAI_API_VERSION in .env)

load_dotenv()

# Configure OpenAI API

openai.api_type = "azure"

openai.api_base = os.getenv('OPENAI_API_BASE')

openai.api_key = os.getenv("OPENAI_API_KEY")

openai.api_version = os.getenv('OPENAI_API_VERSION')

# Initialize gpt-35-turbo and our embedding model

llm = AzureChatOpenAI(deployment_name="gpt-35-turbo")

embeddings = OpenAIEmbeddings(deployment_id="text-embedding-ada-002", chunk_size=1)

Next, we can load a set of text files, split them into chunks, and embed them. LangChain supports many different document loaders (https://python.langchain.com/docs/modules/data_connection/document_loaders.html), which makes it easy to adapt to other data sources and file formats. You can use your own sample data.

from langchain.document_loaders import DirectoryLoader

from langchain.document_loaders import TextLoader

from langchain.text_splitter import TokenTextSplitter

loader = DirectoryLoader('data/qna/', glob="*.txt", loader_cls=TextLoader, loader_kwargs={'autodetect_encoding': True})

documents = loader.load()

text_splitter = TokenTextSplitter(chunk_size=1000, chunk_overlap=0)

docs = text_splitter.split_documents(documents)
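To get a feel for what chunk_size and chunk_overlap do, here is a rough sketch of windowed splitting. It is not LangChain's implementation: split_tokens is a hypothetical helper, and it uses whitespace-separated words as stand-in tokens, whereas TokenTextSplitter counts tokenizer tokens.

```python
def split_tokens(tokens, chunk_size, chunk_overlap=0):
    """Split a token list into windows of at most `chunk_size` tokens.

    Consecutive windows share `chunk_overlap` tokens. Illustrative only.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        # Stop once a window has reached the end of the input.
        if start + chunk_size >= len(tokens):
            break
    return chunks


# Words stand in for tokens here (an assumption made for readability).
words = "Azure OpenAI Service provides REST API access to OpenAI models".split()
for chunk in split_tokens(words, chunk_size=4, chunk_overlap=1):
    print(" ".join(chunk))
```

With chunk_overlap=0, as in the code above, the chunks do not share any tokens. A small overlap can help when an answer spans a chunk boundary.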

Next, let’s ingest the documents into FAISS so we can efficiently query our embeddings:

from langchain.vectorstores import FAISS

db = FAISS.from_documents(documents=docs, embedding=embeddings)
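Conceptually, querying the vector store means finding the stored chunks whose embeddings are closest to the embedding of the question. The sketch below shows that idea with a brute-force search in plain Python. It assumes Euclidean (L2) distance as the metric, and the vectors, chunk texts, and top_k helper are made up for illustration; FAISS does the same job far more efficiently.

```python
import math


def l2_distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def top_k(query_vec, indexed, k=2):
    """Return the k (text, distance) pairs closest to `query_vec`."""
    scored = [(text, l2_distance(query_vec, vec)) for text, vec in indexed]
    return sorted(scored, key=lambda pair: pair[1])[:k]


# Made-up 3-dimensional vectors; real text-embedding-ada-002 vectors
# have 1536 dimensions.
indexed = [
    ("chunk about pricing", [0.9, 0.1, 0.0]),
    ("chunk about regions", [0.1, 0.9, 0.1]),
    ("chunk about quotas", [0.0, 0.2, 0.9]),
]

for text, dist in top_k([0.2, 0.8, 0.0], indexed, k=2):
    print(f"{dist:.3f}  {text}")
```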

Lastly, we can create our document question-answering chat chain. In this case, we specify the question prompt, which converts the user’s question to a standalone question, in case the user asked a follow-up question:

from langchain.chains import ConversationalRetrievalChain

from langchain.prompts import PromptTemplate

# Adapt if needed

CONDENSE_QUESTION_PROMPT = PromptTemplate.from_template("""Given the following conversation and a follow up question, rephrase the follow up question to be a standalone question.

Chat History:

{chat_history}

Follow Up Input: {question}

Standalone question:""")

qa = ConversationalRetrievalChain.from_llm(llm=llm,

retriever=db.as_retriever(),

condense_question_prompt=CONDENSE_QUESTION_PROMPT,

return_source_documents=True,

verbose=False)
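To see what the condense step sends to the model, here is a small sketch that fills the same template by hand. render_condense_prompt is a hypothetical helper, and the "Human:" / "Assistant:" labels are an assumption about how the history is rendered; the chain does its own formatting internally.

```python
CONDENSE_TEMPLATE = (
    "Given the following conversation and a follow up question, "
    "rephrase the follow up question to be a standalone question.\n\n"
    "Chat History:\n{chat_history}\n"
    "Follow Up Input: {question}\n"
    "Standalone question:"
)


def render_condense_prompt(chat_history, question):
    """Fill the template from (question, answer) tuples plus the new question."""
    lines = []
    for human, ai in chat_history:
        lines.append(f"Human: {human}")
        lines.append(f"Assistant: {ai}")
    return CONDENSE_TEMPLATE.format(
        chat_history="\n".join(lines), question=question
    )


# Placeholder answer text, used only to show the rendered prompt.
history = [("what is Azure OpenAI Service?", "(first answer)")]
print(render_condense_prompt(history, "Which regions does the service support?"))
```

The model's reply to this prompt is the standalone question that is then used for retrieval.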

Let’s ask a question:

chat_history = []

query = "what is Azure OpenAI Service?"

result = qa({"question": query, "chat_history": chat_history})

print("Question:", query)

print("Answer:", result["answer"])

From here, we can also ask follow-up questions:

chat_history = [(query, result["answer"])]

query = "Which regions does the service support?"

result = qa({"question": query, "chat_history": chat_history})

print("Question:", query)

print("Answer:", result["answer"])
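For longer conversations, you may want to append each turn to the history instead of rebuilding the list by hand. Below is a minimal sketch: ask is a hypothetical helper, and fake_chain is a stand-in so the snippet runs without calling the service. With the real chain, you would pass qa instead.

```python
def ask(chain, question, chat_history):
    """Call the chain and append the (question, answer) turn to chat_history."""
    result = chain({"question": question, "chat_history": chat_history})
    chat_history.append((question, result["answer"]))
    return result["answer"]


def fake_chain(inputs):
    """Stand-in for the qa chain; returns a dict with an "answer" key."""
    turns = len(inputs["chat_history"])
    return {"answer": f"(answer to {inputs['question']!r} after {turns} prior turns)"}


history = []
print(ask(fake_chain, "what is Azure OpenAI Service?", history))
print(ask(fake_chain, "Which regions does the service support?", history))
print("Turns stored:", len(history))
```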

That’s it! We have created a simple Q&A chat application on private data using LangChain with Azure OpenAI Service.

Please try these steps with your data and check whether they work. I hope this answer helps. Please comment below if you need any further assistance. Happy to help!

Regards,

Chakravarthi Rangarajan Bhargavi

- Please accept the answer and vote 'Yes' if you found it helpful, as this supports the community. Thanks a lot.