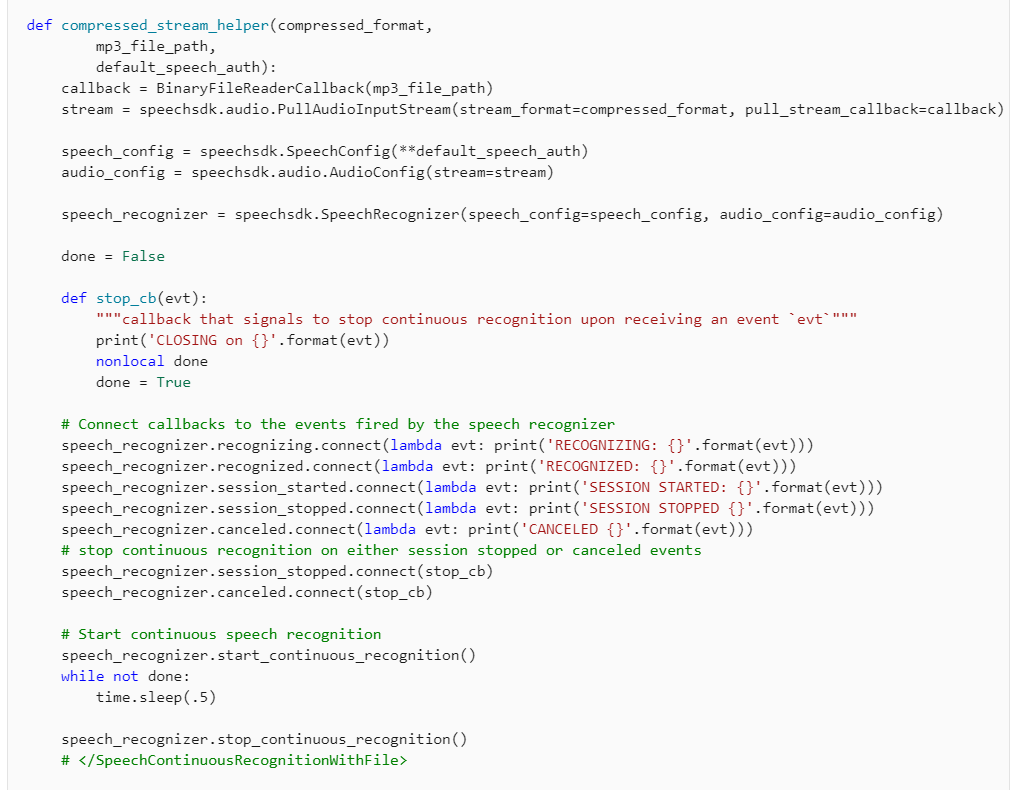

I integrated Twilio Media stream with Azure Cognitive service (Speech to Text). I inherited speechsdk.audio.PullAudioInputStreamCallback class to send audio chunks to server. ref : speech_sample.py

import azure.cognitiveservices.speech as speechsdk

import queue

class SocketReaderCallback(speechsdk.audio.PullAudioInputStreamCallback):

def __init__(self):

super().__init__()

self._q = queue.Queue()

def read(self, buffer: memoryview) -> int:

chunk = self._q.get()

buffer[:len(chunk)] = chunk

return len(chunk)

def has_bytes(self):

return True if self._q.qsize() > 0 else False

def queueup(self,chunk):

self._q.put(chunk)

def close(self):

print("AZ.Callback.Closed")

Below is code for transcriber class. Here add_request method adds audio chunks to Queue of above callback class and that method is called from Twilio's socket connection. callback class picks chunks from queue and uploads to Azure server for transcription.

import azure.cognitiveservices.speech as speechsdk

import queue

from rule_engine.medium.azure_transcribe.azure_calback import SocketReaderCallback

class AzureTranscribe:

def __init__(self, speech_config, on_response, user_id):

self._on_response = on_response

self.callback = SocketReaderCallback()

wave_format = speechsdk.audio.AudioStreamFormat(samples_per_second=8000, bits_per_sample=8, channels=1)

self._stream = speechsdk.audio.PullAudioInputStream(self.callback,wave_format)

audio_config = speechsdk.audio.AudioConfig(stream=self._stream)

self._speech_recognizer = speechsdk.SpeechRecognizer(speech_config=speech_config, language="en-IN", audio_config=audio_config)

self._ended = False

self.user_id = user_id

self.initialize_once()

self.state = None

def initialize_once(self):

# Connect callbacks to the events fired by the speech recognizer

self._speech_recognizer.recognizing.connect(lambda evt: print('AZ.RECOGNIZING: {}'.format(evt)))

self._speech_recognizer.recognized.connect(lambda evt: print('AZ.RECOGNIZED: {}'.format(evt)))

self._speech_recognizer.session_started.connect(lambda evt: print('AZ.SESSION STARTED: {}'.format(evt)))

self._speech_recognizer.session_stopped.connect(lambda evt: print('AZ.SESSION STOPPED {}'.format(evt)))

self._speech_recognizer.canceled.connect(lambda evt: print('AZ.CANCELED {}'.format(evt)))

self._speech_recognizer.start_continuous_recognition()

def add_request(self, buffer):

# buffer, self.state = audioop.ratecv(bytes(buffer), 2, 2, 8000, 16000, self.state)

self.callback.queueup(bytes(buffer))

def terminate(self):

self._ended = True

self._speech_recognizer.stop_continuous_recognition()

- If I upload audio chunks from an audio file, the transcription is accurate.

- If I upload audio chunks from twilio call, transcription is very bad.

Twilio's sample rate is 8 kHz while Azure's expected sample rate is 16 kHz. Yet Azure works with both sample rates and provides poor quality transcription for both.