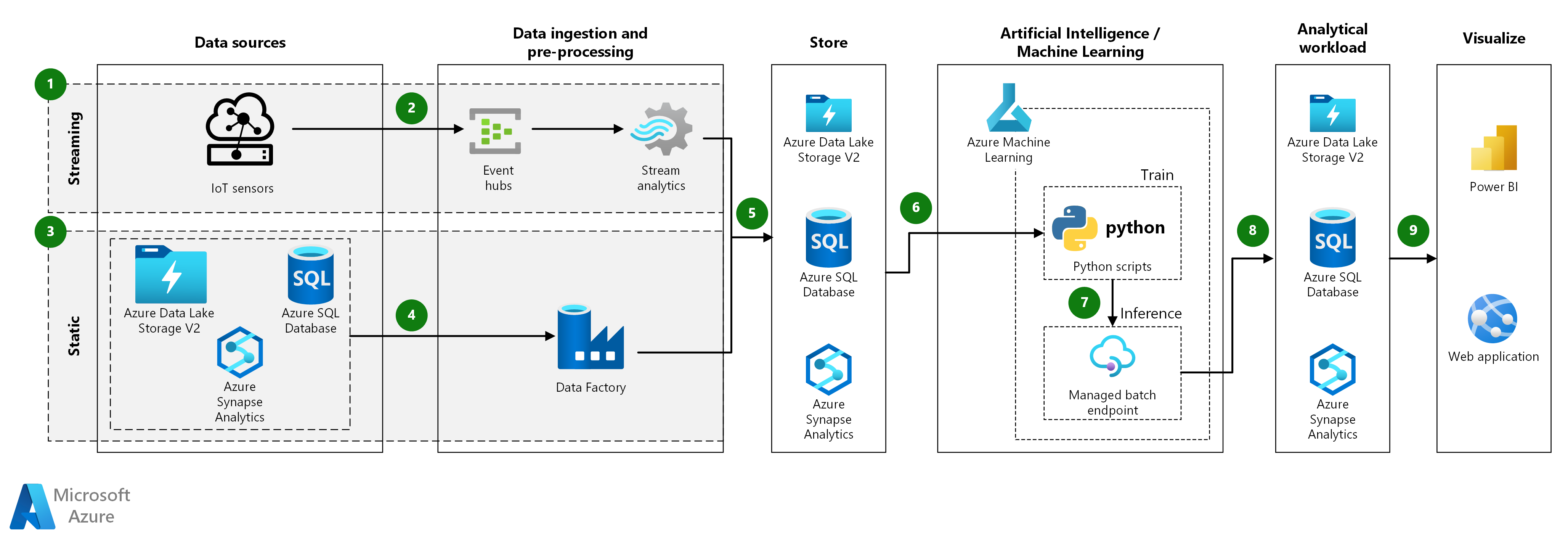

This architecture guide shows how to build a scalable solution for batch scoring models with Azure Machine Learning. The solution can be used as a template and can generalize to different problems.

Architecture

Download a Visio file of this architecture.

Workflow

This architecture guide applies to both streaming and static data, provided that the ingestion process is adapted to the data type. The following steps and components describe the ingestion of these two types of data.

Streaming data:

- Streaming data originates from IoT sensors, where new events are streamed at frequent intervals.

- Incoming streaming events are queued using Azure Event Hubs, and then pre-processed using Azure Stream Analytics.

  - Azure Event Hubs. This message ingestion service can ingest millions of event messages per second. In this architecture, sensors send a stream of data to the event hub.

  - Azure Stream Analytics. This service is an event-processing engine. A Stream Analytics job reads the data streams from the event hub and performs stream processing.

Static data:

- Static datasets can be stored as files within Azure Data Lake Storage or in tabular form in Azure Synapse or Azure SQL Database.

- Azure Data Factory can be used to aggregate or pre-process the stored dataset.

The remaining architecture, after data ingestion, is the same for both streaming and static data, and consists of the following steps and components:

- The ingested, aggregated or pre-processed data can be stored as documents within Azure Data Lake Storage or in tabular form in Azure Synapse or Azure SQL Database. This data will then be consumed by Azure Machine Learning.

- Azure Machine Learning is used for training, deploying, and managing machine learning models at scale. In the context of batch scoring, Azure Machine Learning creates a cluster of virtual machines with an automatic scaling option, where jobs run in parallel as Python scripts.

- Models are deployed as Managed Batch Endpoints, which are then used to do batch inferencing on large volumes of data over a period of time. Batch endpoints receive pointers to data and run jobs asynchronously to process the data in parallel on compute clusters.

- The inference results can be stored as documents within Azure Data Lake Storage or in tabular form in Azure Synapse or Azure SQL Database.

- Visualize: The stored model results can be consumed through user interfaces, such as Power BI dashboards, or through custom-built web applications.
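To make the batch endpoint step above more concrete, the following is a minimal sketch of the kind of scoring script that a batch deployment runs on each worker. It assumes the commonly documented `init()` and `run(mini_batch)` entry points, where `mini_batch` is a list of file paths. The `ThresholdModel` class, the `sensor_id` and `reading` column names, and the threshold value are hypothetical stand-ins for illustration and are not part of this architecture.

```python
import csv
from typing import List, Optional


class ThresholdModel:
    """Hypothetical stand-in for a trained model loaded from disk."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold

    def predict(self, value: float) -> int:
        # Flag readings at or above the threshold.
        return 1 if value >= self.threshold else 0


model: Optional[ThresholdModel] = None  # populated once by init()


def init() -> None:
    """Runs once per worker before any mini-batch is processed."""
    global model
    # Assumption: a real script would load the registered model files here.
    model = ThresholdModel(threshold=0.5)


def run(mini_batch: List[str]) -> List[str]:
    """Scores every row of every file in the mini-batch.

    Returns one comma-separated result line per input row.
    """
    if model is None:
        raise RuntimeError("init() must be called before run()")
    results = []
    for path in mini_batch:
        with open(path, newline="") as handle:
            for row in csv.DictReader(handle):
                score = model.predict(float(row["reading"]))
                results.append(f"{path},{row['sensor_id']},{score}")
    return results
```

Because each worker receives only file paths, the data stays in storage and the cluster can process many mini-batches in parallel.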

Components

- Azure Event Hubs

- Azure Stream Analytics

- Azure SQL Database

- Azure Synapse Analytics

- Azure Data Lake Storage

- Azure Data Factory

- Azure Machine Learning

- Azure Machine Learning Endpoints

- Microsoft Power BI on Azure

- Azure Web Apps

Considerations

These considerations implement the pillars of the Azure Well-Architected Framework, which is a set of guiding tenets that can be used to improve the quality of a workload. For more information, see Microsoft Azure Well-Architected Framework.

Performance

For standard Python models, it's generally accepted that CPUs are sufficient to handle the workload. This architecture uses CPUs. However, for deep learning workloads, graphics processing units (GPUs) generally outperform CPUs by a considerable amount; a sizeable cluster of CPUs is usually needed to get comparable performance.

Parallelize across VMs versus cores

When you run scoring processes of many models in batch mode, the jobs need to be parallelized across VMs. Two approaches are possible:

- Create a larger cluster using low-cost VMs.

- Create a smaller cluster using high-performing VMs with more cores available on each.

In general, scoring of standard Python models isn't as demanding as scoring of deep learning models, and a small cluster should be able to handle a large number of queued models efficiently. You can increase the number of cluster nodes as the dataset sizes increase.

For convenience in this scenario, one scoring task is submitted within a single Azure Machine Learning pipeline step. However, it can be more efficient to score multiple data chunks within the same pipeline step. In those cases, write custom code to read in multiple datasets and execute the scoring script during a single-step execution.
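As a rough illustration of scoring several data chunks within one step, the sketch below fans a set of in-memory chunks out to a thread pool and collects the results by chunk name. The `score_chunk` function, the chunk names, and the 0.5 threshold are hypothetical. A real step would read each dataset from storage and call the actual model instead.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Sequence


def score_chunk(chunk: Sequence[float]) -> List[int]:
    """Hypothetical scorer: flags readings at or above 0.5."""
    return [1 if value >= 0.5 else 0 for value in chunk]


def score_all_chunks(
    chunks: Dict[str, Sequence[float]],
    scorer: Callable[[Sequence[float]], List[int]] = score_chunk,
    max_workers: int = 4,
) -> Dict[str, List[int]]:
    """Scores every chunk in a single call and returns results keyed by chunk name.

    Threads suit I/O-bound work such as reading files. CPU-bound scoring
    would more likely use processes or more cores per node.
    """
    names = list(chunks)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so names and results line up.
        results = pool.map(scorer, (chunks[name] for name in names))
        return dict(zip(names, results))


if __name__ == "__main__":
    demo = {"device-a": [0.1, 0.7], "device-b": [0.5, 0.2, 0.9]}
    print(score_all_chunks(demo))
```

Grouping chunks this way trades a simpler pipeline definition for custom orchestration code, so it tends to pay off mainly when per-step startup overhead is large relative to the scoring time of one chunk.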

Management

- Monitor jobs. It's important to monitor the progress of running jobs. However, it can be a challenge to monitor across a cluster of active nodes. To inspect the state of the nodes in the cluster, use the Azure portal to manage the Machine Learning workspace. If a node is inactive or a job has failed, the error logs are saved to blob storage, and are also accessible in the Pipelines section. For richer monitoring, connect logs to Application Insights, or run separate processes to poll for the state of the cluster and its jobs.

- Logging. Machine Learning logs all stdout/stderr to the associated Azure Storage account. To easily view the log files, use a storage navigation tool such as Azure Storage Explorer.
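The option of running a separate process to poll for job state can be sketched as a simple loop. This is an illustration only: `get_status` is a hypothetical callable that you would back with whatever client you use to query the workspace, and the terminal state names are assumptions that should be checked against the values your jobs actually report.

```python
import time
from typing import Callable

# Assumed terminal state names; verify against your job client.
TERMINAL_STATES = {"Completed", "Failed", "Canceled"}


def wait_for_job(
    get_status: Callable[[], str],
    poll_seconds: float = 30.0,
    timeout_seconds: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> str:
    """Polls get_status() until the job reaches a terminal state.

    Returns the terminal state, or raises TimeoutError if the deadline
    passes first. The sleep and clock parameters exist so the loop can
    be tested without waiting.
    """
    deadline = clock() + timeout_seconds
    while True:
        status = get_status()
        if status in TERMINAL_STATES:
            return status
        if clock() >= deadline:
            raise TimeoutError(f"job still in state {status!r} at timeout")
        sleep(poll_seconds)
```

A caller would typically alert or collect error logs when the returned state is not `"Completed"`.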

Cost optimization

Cost optimization is about looking at ways to reduce unnecessary expenses and improve operational efficiencies. For more information, see Overview of the cost optimization pillar.

The most expensive components used in this architecture guide are the compute resources. The compute cluster size scales up and down depending on the jobs in the queue. Enable automatic scaling programmatically through the Python SDK by modifying the compute's provisioning configuration. Or, use the Azure CLI to set the automatic scaling parameters of the cluster.

For work that doesn't require immediate processing, configure the automatic scaling formula so the default state (minimum) is a cluster of zero nodes. With this configuration, the cluster starts with zero nodes and only scales up when it detects jobs in the queue. If the batch scoring process happens only a few times a day or less, this setting enables significant cost savings.

Automatic scaling might not be appropriate for batch jobs that occur too close to each other. Because the time that it takes for a cluster to spin up and spin down incurs a cost, if a batch workload begins only a few minutes after the previous job ends, it might be more cost effective to keep the cluster running between jobs. This strategy depends on whether scoring processes are scheduled to run at a high frequency (every hour, for example), or less frequently (once a month, for example).
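The trade-off described above can be approximated with a small cost model. The sketch below is a simplification under stated assumptions: every node is billed for startup time, run time, and idle time before scale-down, and all durations and prices in the usage note are hypothetical placeholders rather than Azure pricing.

```python
def billed_minutes_per_cycle(
    run_minutes: float,
    gap_minutes: float,
    startup_minutes: float,
    idle_timeout_minutes: float,
    scale_to_zero: bool,
) -> float:
    """Returns billed minutes per node for one job plus the gap that follows it.

    If the cluster stays warm, or the next job arrives before the idle
    timeout expires, nodes are billed for the whole run and gap.
    Otherwise nodes are billed for startup, the run, and the idle
    timeout before they are released.
    """
    if not scale_to_zero or gap_minutes <= idle_timeout_minutes:
        return run_minutes + gap_minutes
    return startup_minutes + run_minutes + idle_timeout_minutes


def cycle_cost(nodes: int, price_per_node_hour: float, billed_minutes: float) -> float:
    """Converts billed minutes per node into a cost for the whole cluster."""
    return nodes * price_per_node_hour * billed_minutes / 60.0
```

Under this model, scaling to zero is cheaper only when the gap between jobs exceeds the idle timeout plus the startup time. For example, with a 20-minute run, a 4-minute startup, and a 2-minute idle timeout, a 5-minute gap bills 26 minutes per node with scale-to-zero versus 25 minutes when kept warm, while a 240-minute gap bills 26 minutes versus 260.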

Contributors

This article is maintained by Microsoft. It was originally written by the following contributors.

Principal authors:

- Carlos Alexandre Santos | Senior Specialized AI Cloud Solution Architect

- Said Bleik | Principal Applied Scientist Manager


Next steps

Product documentation:

- What is Azure Blob Storage?

- Introduction to private Docker container registries in Azure

- Azure Event Hubs

- What is Azure Machine Learning?

- What is Azure SQL Database?

- Welcome to Azure Stream Analytics

Microsoft Learn modules:

- Deploy Azure SQL Database

- Enable reliable messaging for Big Data applications using Azure Event Hubs

- Explore Azure Event Hubs

- Implement a Data Streaming Solution with Azure Stream Analytics

- Introduction to machine learning

- Manage container images in Azure Container Registry