Hi,

We had this exact issue on a Windows Server 2019 (Core) S2D cluster. We logged it with Microsoft, and after a week they found a cause and a solution, which meant that in our case we didn't have to remove and then re-add the Hyper-V features.

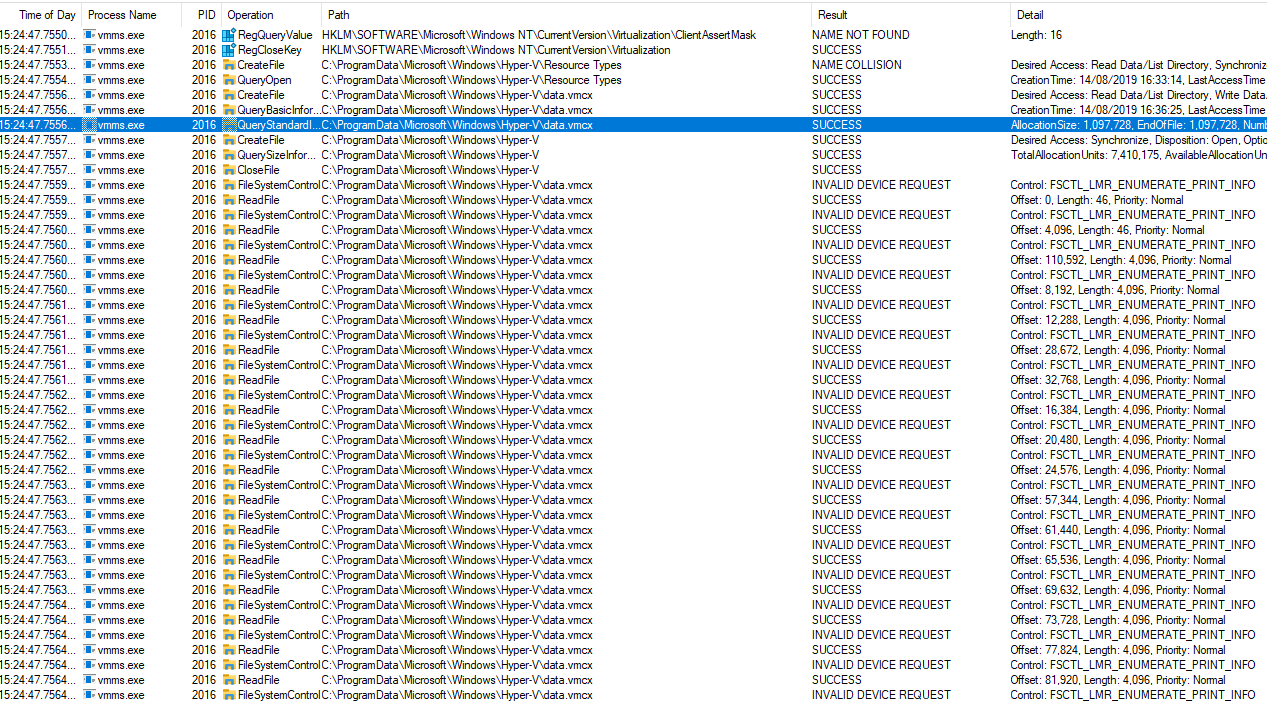

Process Monitor revealed that when the Virtual Machine Management Service tried to start, it appeared to get stuck reading the contents of the data.vmcx file located in C:\ProgramData\Microsoft\Windows\Hyper-V on the local node. The support engineer was then able to verify, using a "VML" trace, that there was indeed an issue with the data.vmcx file.

So to fix it we found we had to do the following:

- Ensure the node is paused with no roles

- Rename the data.vmcx file in C:\ProgramData\Microsoft\Windows\Hyper-V\ to dataold.vmcx (on the problem node)

- Reboot the node

- Check the Virtual Machine Management Service has started.

- Test migrate a VM to the node
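For anyone who prefers to script the rename step, here is a minimal sketch in Python. It is only an illustration, not what Microsoft support ran. It assumes Python is available on the node, that the session has administrator rights, and that the node is already paused with no roles. The function name `rename_data_vmcx` is made up for this example. It does not pause the node, reboot it, or check the service.

```python
from pathlib import Path

# Directory named in the post. Pass a different directory to try this safely.
HYPERV_DIR = Path(r"C:\ProgramData\Microsoft\Windows\Hyper-V")


def rename_data_vmcx(hyperv_dir: Path = HYPERV_DIR) -> Path:
    """Rename data.vmcx to dataold.vmcx in hyperv_dir and return the new path.

    Hypothetical helper: it only performs the rename step from the list above.
    """
    source = hyperv_dir / "data.vmcx"
    target = hyperv_dir / "dataold.vmcx"

    if not source.is_file():
        raise FileNotFoundError(f"{source} not found")
    # Refuse to clobber an earlier copy, so the original file is never lost.
    if target.exists():
        raise FileExistsError(f"{target} already exists; not overwriting")

    source.rename(target)
    return target
```

Calling `rename_data_vmcx()` with no arguments targets the path from the post. Because the file is renamed rather than deleted, it can be renamed back if this turns out not to be the cause.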

We then found that 2 VMs wouldn't migrate to the node (despite others working) because the vmcx config files for those individual virtual machines were located in C:\ProgramData\Microsoft\Windows\Hyper-V\Planned Virtual Machines on the node. Stopping the Virtual Machine Management Service, moving the vmcx files out of this location (to the desktop in my case), and then starting the service again fixed this problem too. All VMs migrated successfully after that.
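The file move for the Planned Virtual Machines folder can be sketched the same way. Again this is a rough Python illustration under assumptions: the Virtual Machine Management Service has already been stopped by hand, the session has administrator rights, and the holding folder is wherever you choose to keep the files (the desktop in my case). `move_planned_vmcx` is a hypothetical name. It only moves files and does not stop or start the service.

```python
import shutil
from pathlib import Path


def move_planned_vmcx(planned_dir: Path, holding_dir: Path) -> list[Path]:
    """Move every *.vmcx file directly inside planned_dir into holding_dir.

    Returns the new paths of the moved files. Hypothetical helper: stop the
    Virtual Machine Management Service before calling it.
    """
    holding_dir.mkdir(parents=True, exist_ok=True)
    moved = []
    for vmcx in sorted(planned_dir.glob("*.vmcx")):
        destination = holding_dir / vmcx.name
        # Never overwrite a file that is already in the holding folder.
        if destination.exists():
            raise FileExistsError(f"{destination} already exists")
        shutil.move(str(vmcx), str(destination))
        moved.append(destination)
    return moved
```

For the case in this post, `planned_dir` would be `Path(r"C:\ProgramData\Microsoft\Windows\Hyper-V\Planned Virtual Machines")`. Moving rather than deleting keeps the files available in case they need to go back.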

We don't have a root cause for why this happened, but at the time of writing the node has been stable. Hopefully this is of use to someone with the same issue. As always, take the relevant backups and precautions in case your issue isn't fixed by the above.