Hi,

The problem was solved in a different way: I read the JSON as a document per line and then, at the end of the data flow, transformed it into a standard JSON file.
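For anyone who wants to see the shape of that conversion outside Data Factory, here is a minimal sketch in Python. It is not the data flow configuration itself (the exact source and sink settings are not stated in this thread); the function names `array_to_lines` and `lines_to_array` are made up for illustration, and it assumes the file fits in memory.

```python
import json


def array_to_lines(array_text: str) -> str:
    """Turn a JSON array of objects into one compact JSON document per line."""
    records = json.loads(array_text)
    if not isinstance(records, list):
        raise ValueError("expected a top-level JSON array")
    return "\n".join(json.dumps(r, separators=(",", ":")) for r in records)


def lines_to_array(lines_text: str) -> str:
    """Turn document-per-line JSON back into a single JSON array."""
    # Blank lines are skipped so a trailing newline does not break parsing.
    records = [json.loads(line) for line in lines_text.splitlines() if line.strip()]
    return json.dumps(records, separators=(",", ":"))


if __name__ == "__main__":
    sample = (
        '[{"PeriodId":16,"FactDdn":"account.status","FactValue":"2"}\n'
        ',{"PeriodId":16,"FactDdn":"ccm.rn.clear","FactValue":"0"}\n'
        "]"
    )
    as_lines = array_to_lines(sample)
    print(as_lines)                  # one document per line
    print(lines_to_array(as_lines))  # back to a standard JSON array
```

The second function is the step that matters for the .NET Core consumer: whatever is read per line ends up as one standard array again.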

Thanks for your help!

Regards,

Jakub Ramut

Hi,

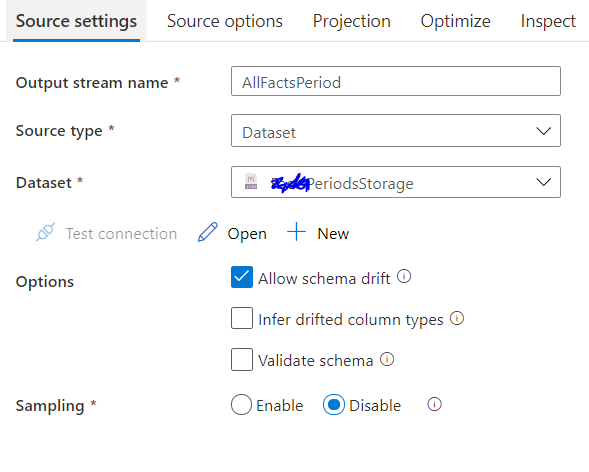

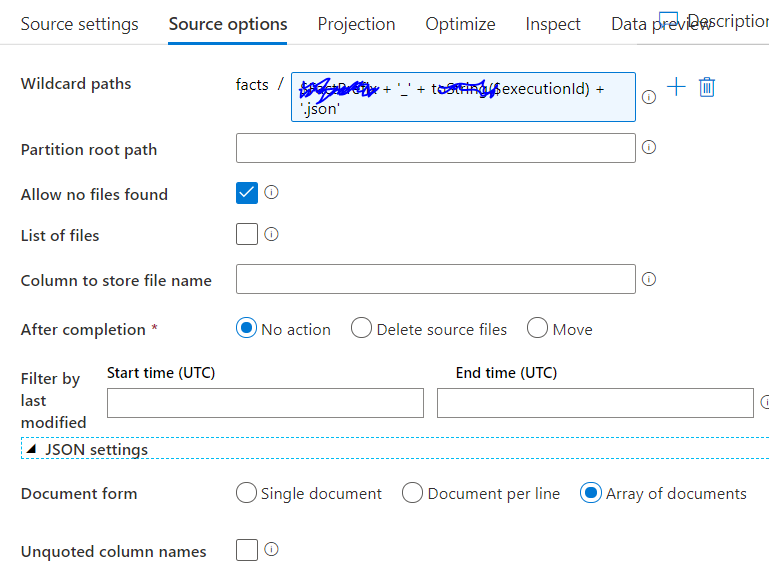

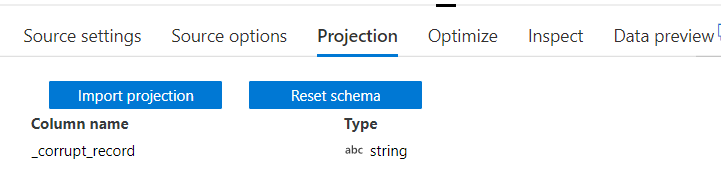

In a Data flow, I'd like to read data from JSON files in blob storage (created in a separate pipeline), but when I click "Import projection" on the "Projection" tab, the result is only one column, _corrupt_record.

The projection finishes successfully without any errors.

In the "Source options" tab, under JSON settings, we use "Array of documents", and the JSON is a valid array, e.g.:

    [
      {
        "PeriodId": 16,
        "FactDdn": "account.status",
        "FactValue": "2"
      },
      {
        "PeriodId": 16,
        "FactDdn": "ccm.rn.clear",
        "FactValue": "0"
      }
    ]

When I change the JSON file to this format:

    {
      "PeriodId": 16,
      "FactDdn": "account.status",
      "FactValue": "2"
    },
    {
      "PeriodId": 16,
      "FactDdn": "ccm.rn.clear",
      "FactValue": "0"
    }

and the setting to "Document per line", everything works. But that doesn't solve the problem, because the output of the data flow must be in the array-of-documents format (we read it from .NET Core and don't want to change anything there).

Is this a known issue, or am I missing a setting?

Hi @HarithaMaddi-MSFT ,

Thank you for your warm welcome.

The full content of the example file:

    [{"PeriodId":16,"FactDdn":"account.status","FactValue":"2"}
    ,{"PeriodId":16,"FactDdn":"ccm.rn.clear","FactValue":"0"}
    ,{"PeriodId":16,"FactDdn":"ccm.rn.clprep","FactValue":"0"}
    ,{"PeriodId":16,"FactDdn":"ccm.rn.open","FactValue":"0"}
    ,{"PeriodId":16,"FactDdn":"ccm.rn.total","FactValue":"0"}
    ,{"PeriodId":16,"FactDdn":"diag.eftotdr","FactValue":"4"}
    ,{"PeriodId":16,"FactDdn":"diag.total","FactValue":"5"}
    ,{"PeriodId":16,"FactDdn":"efilesttap1","FactValue":"Success"}
    ,{"PeriodId":17,"FactDdn":"account.status","FactValue":"2"}
    ,{"PeriodId":17,"FactDdn":"ccm.rn.clear","FactValue":"0"}
    ,{"PeriodId":17,"FactDdn":"ccm.rn.clprep","FactValue":"0"}
    ,{"PeriodId":17,"FactDdn":"ccm.rn.open","FactValue":"0"}
    ,{"PeriodId":17,"FactDdn":"ccm.rn.total","FactValue":"0"}
    ,{"PeriodId":17,"FactDdn":"diag.eftotdr","FactValue":"4"}
    ,{"PeriodId":17,"FactDdn":"diag.total","FactValue":"7"}
    ,{"PeriodId":17,"FactDdn":"efilesttap1","FactValue":"Success"}
    ]
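As a sanity check on the file itself, this layout (one record per line, each continuation line starting with a comma) still parses as a single JSON array with a strict parser. Below is a small sketch using Python's standard `json` module on the first three records only; the helper name `summarize` is made up for illustration. It only confirms that the text is valid JSON and says nothing about how the data flow source reads it.

```python
import json

# First three records of the example file, keeping its line layout
# (newline before each leading comma).
SAMPLE = (
    '[{"PeriodId":16,"FactDdn":"account.status","FactValue":"2"}\n'
    ',{"PeriodId":16,"FactDdn":"ccm.rn.clear","FactValue":"0"}\n'
    ',{"PeriodId":16,"FactDdn":"ccm.rn.clprep","FactValue":"0"}\n'
    "]"
)


def summarize(text: str) -> tuple[int, list[str]]:
    """Parse text as a JSON array and return (record count, sorted key names)."""
    records = json.loads(text)  # raises json.JSONDecodeError if the text is invalid
    if not isinstance(records, list):
        raise ValueError("expected a top-level JSON array")
    keys = sorted({key for record in records for key in record})
    return len(records), keys


if __name__ == "__main__":
    count, keys = summarize(SAMPLE)
    print(count, keys)
```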

It's a JSON file with content type "application/octet-stream"; the file should be read from blob storage.

I can't add it as an attachment because the JSON file type is not supported here.

Our dataset is a JSON dataset that points to the blob storage: