Hello and welcome to Microsoft Q&A, thank you for your question.

If I undestand correctly, the part you need help with, is not parameterizing the filepath, but making each pipeline run have a unique folder in the path, so that no two pipeline runs will copy to the same location. The difficulty with your example, is Data Factory does not have a pipeline run counter like that. I do have a solution, but first I'll offer two other alternatives.

- The easiest way to satisfy the uniqueness, is to use the pipeline run id as folder name instead of numbers. The pipeline id is not very human friendly, and does not indicate order.

Since your example uses numbers to indicate the ordinal of the run (first, second), I assume this is important.

- The next easiest way is to use pipeline or trigger start time instead of numbers.

To do exactly the way you ask, we need to store the number somewhere and increment. Since you are already using a SQL database, this is an ideal option.

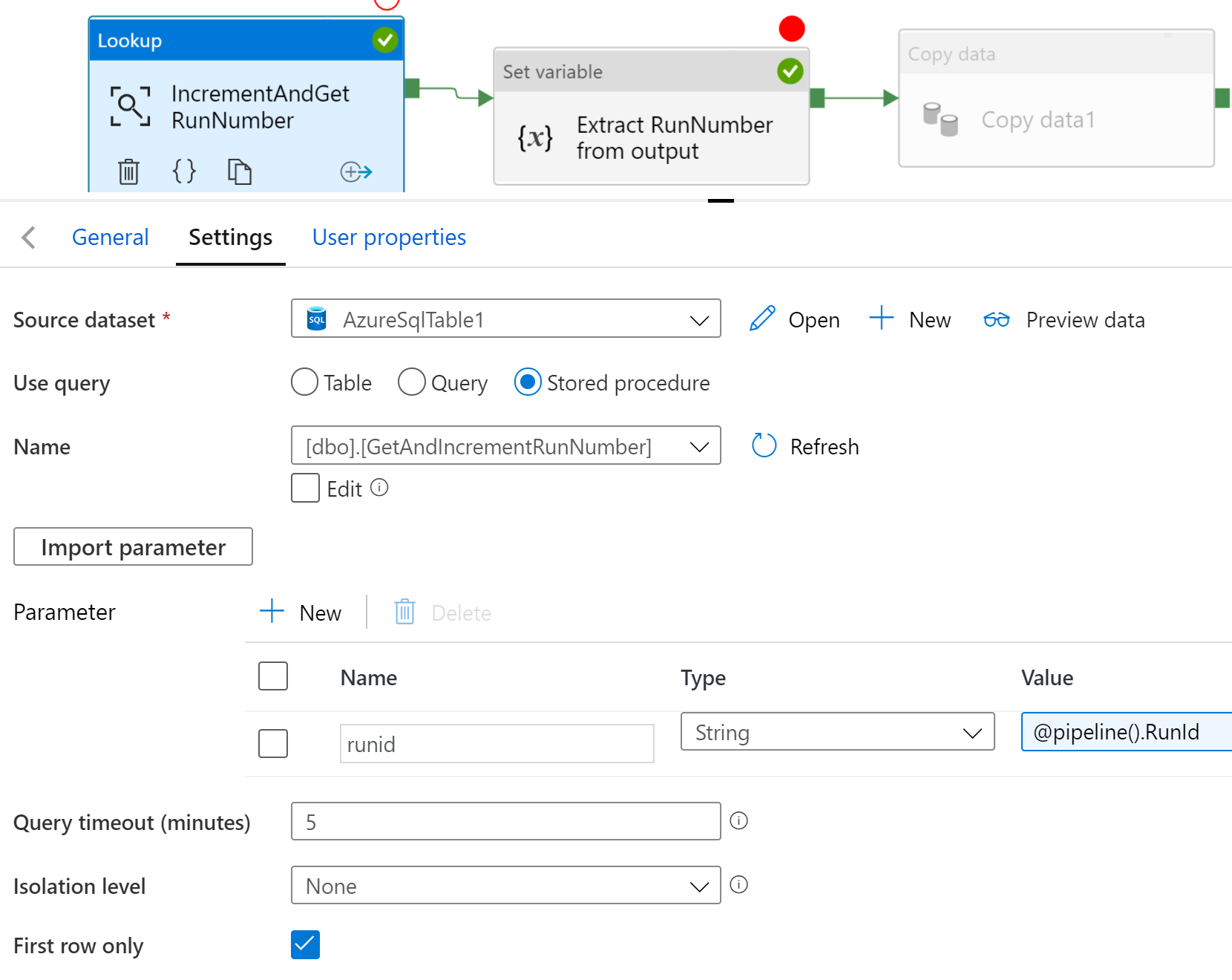

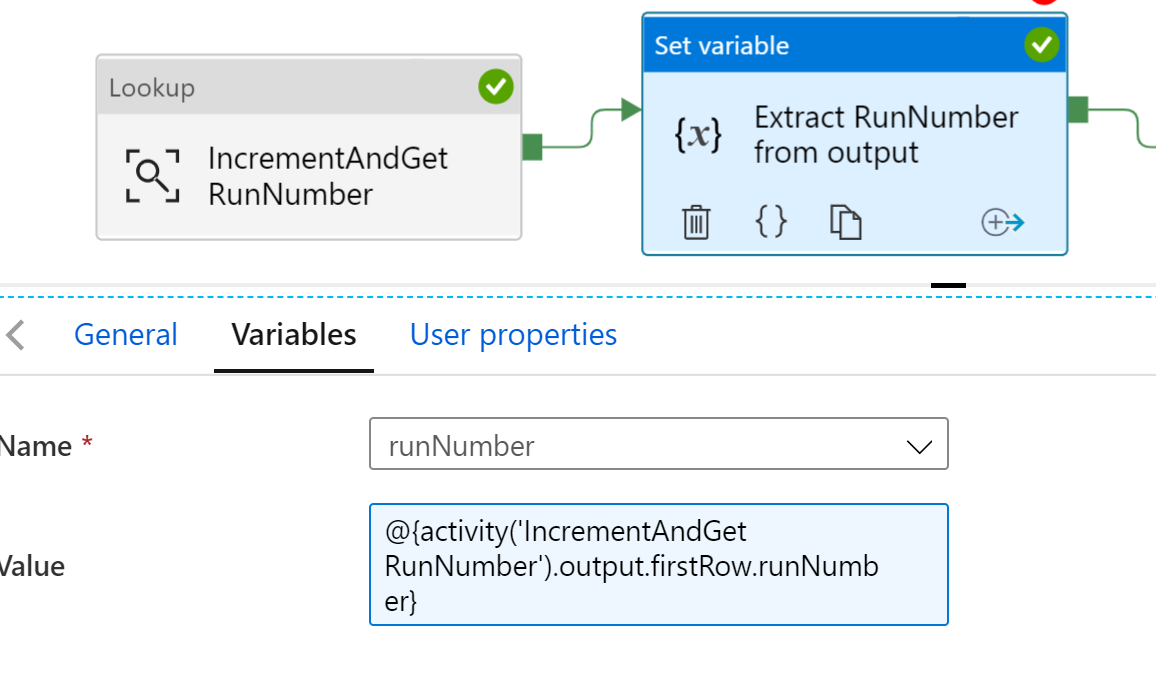

In my solution, I create a table to store the number, and a stored proc to increment and retrieve the largest value. In Data Factory, I use a Lookup activity with the stored procedure option to run the procedure and retrieve the output. The Data Factory Stored Proc activity does not retrieve output, this is why I use Lookup activity instead. Once I have this output, I use it in the folder path.

First, create a table to store the numbers.

CREATE TABLE [dbo].[runNumberTable](

[runNumber] [int] NOT NULL,

[pipelineRunId] [nchar](50) NULL,

CONSTRAINT [PK_runNumberTable] PRIMARY KEY CLUSTERED

(

[runNumber] ASC

)WITH (STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF) ON [PRIMARY]

) ON [PRIMARY]

GO

Then insert a starter row ( 0, "anytext").

Next I define a stored proc to get and insert the next number.

CREATE PROCEDURE GetAndIncrementRunNumber

(

@runid nchar(49) = "none"

)

AS

BEGIN

-- SET NOCOUNT ON added to prevent extra result sets from

-- interfering with SELECT statements.

SET NOCOUNT ON

DECLARE @runnumber int;

SELECT @runnumber = MAX(runNumber) from runNumberTable;

SET @runnumber = @runnumber + 1;

INSERT INTO runNumberTable (runNumber , pipelineRunId)

VALUES (@runnumber , @runid);

SELECT @runnumber as 'runNumber';

END

GO

As an added feature, I added pipeline run id to the table, so you can easily go find out which pipeline run did with folder.

Then in the Data Factory,

and then use the variable to fill in the path in the copy activity.