How to use video translation

Note

This feature is currently in public preview. This preview is provided without a service-level agreement, and we don't recommend it for production workloads. Certain features might not be supported or might have constrained capabilities. For more information, see Supplemental Terms of Use for Microsoft Azure Previews.

In this article, you learn how to use Azure AI Speech video translation in Speech Studio.

All it takes to get started is an original video. See if video translation supports your language and region.

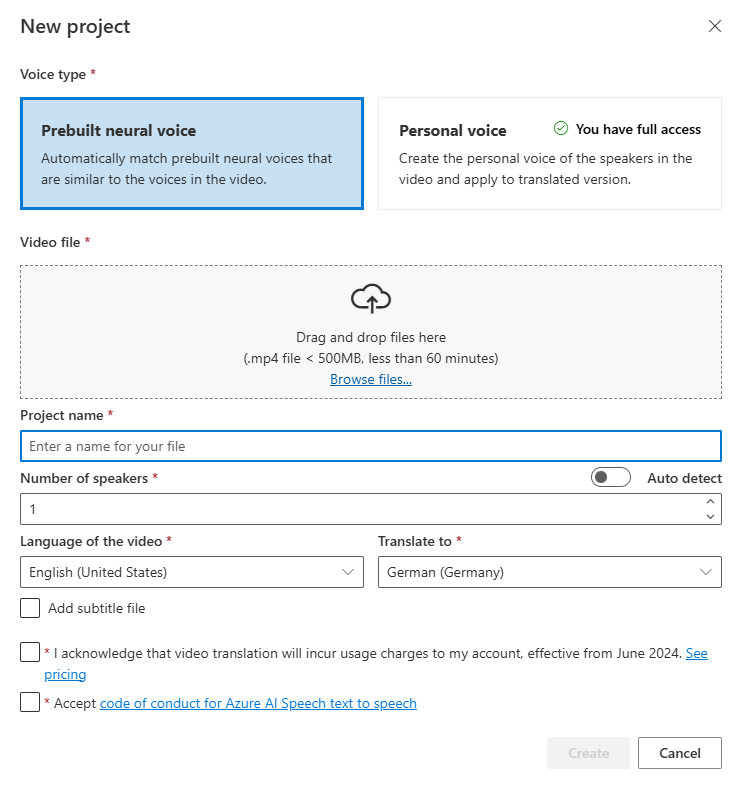

Create a video translation project

To create a video translation project, follow these steps:

Sign in to Speech Studio.

Select the subscription and Speech resource to work with.

Select Video translation.

On the Create and Manage Projects page, select Create a project.

On the New project page, select Voice type.

You can select Prebuilt neural voice or Personal voice for Voice type. For prebuilt neural voice, the system automatically selects the most suitable prebuilt voice by matching the speaker's voice in the video with prebuilt voices. For personal voice, the system offers a model that generates high-quality voice replication in a few seconds.

Note

To use personal voice, you need to apply for access.

Upload your video file by dragging and dropping it or by selecting it manually.

Make sure the video is in .mp4 format, less than 500 MB, and shorter than 60 minutes.

Enter a Project name, and select the Number of speakers, Language of the video, and Translate to language.

If you want to use your own subtitle files, select Add subtitle file. You can upload either the source subtitle file or the target subtitle file, in WebVTT or JSON format. To download a sample VTT file for reference, select Download sample VTT file.

Review the pricing information and code of conduct, and then create the project.

Once the upload is complete, you can check the processing status on the project tab.

After the project is created, select it to review its detailed settings and make any adjustments you want.
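You can check a file against the upload limits listed above (.mp4 format, less than 500 MB, shorter than 60 minutes) before you upload it. The following Python sketch is a hypothetical pre-upload helper and isn't part of Speech Studio. `check_video` is an illustrative name, and the caller supplies the file size and duration, for example from a media tool. The sketch assumes that 500 MB means 500 * 1024 * 1024 bytes.

```python
from pathlib import Path

# Limits from the upload step above. Reading "500 MB" as 500 * 1024 * 1024
# bytes is an assumption; the service might count megabytes differently.
MAX_SIZE_BYTES = 500 * 1024 * 1024
MAX_DURATION_SECONDS = 60 * 60


def check_video(filename: str, size_bytes: int, duration_seconds: float) -> list:
    """Return a list of problems; an empty list means the file passes these checks."""
    problems = []
    if Path(filename).suffix.lower() != ".mp4":
        problems.append("video must be in .mp4 format")
    if size_bytes >= MAX_SIZE_BYTES:
        problems.append("video must be less than 500 MB")
    if duration_seconds >= MAX_DURATION_SECONDS:
        problems.append("video must be shorter than 60 minutes")
    return problems


# Example with hypothetical values: a 120 MB, 15-minute .mp4 file.
print(check_video("interview.mp4", 120 * 1024 * 1024, 15 * 60))  # prints []
```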

Check and adjust voice settings

On the project details page, the Video section has two tabs, Translated and Original, so you can compare the two versions side by side.

On the right side of the video, you can view both the original script and the translated script. When you hover over part of the original script, the video jumps to the corresponding segment of the original video. When you hover over part of the translated script, the video jumps to the corresponding translated segment.

You can also add or remove segments as needed. When you add a segment, make sure that its timestamps don't overlap with the previous or next segment, that its end time is later than its start time, and that each timestamp uses the hh:mm:ss.ms format. Otherwise, you can't apply the changes.
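The segment rules above (hh:mm:ss.ms timestamps, an end time later than the start time, and no overlap with neighboring segments) can be expressed as a small check. This Python sketch is illustrative only, and `to_ms` and `can_add_segment` are hypothetical names. It assumes a three-digit millisecond field, as in 00:00:01.010. It also treats segments that only touch at a boundary as not overlapping, which Speech Studio might handle differently.

```python
import re

# Assumption: "hh:mm:ss.ms" means two-digit hours, minutes, and seconds plus a
# three-digit millisecond field, as in 00:00:01.010.
TIMESTAMP = re.compile(r"(\d{2}):([0-5]\d):([0-5]\d)\.(\d{3})")


def to_ms(ts: str) -> int:
    """Convert an hh:mm:ss.ms timestamp to milliseconds, or raise ValueError."""
    m = TIMESTAMP.fullmatch(ts)
    if m is None:
        raise ValueError(f"bad timestamp format: {ts!r}")
    h, mi, s, ms = (int(g) for g in m.groups())
    return ((h * 60 + mi) * 60 + s) * 1000 + ms


def can_add_segment(existing, start: str, end: str) -> bool:
    """Return True if a new (start, end) segment satisfies the rules above.

    `existing` is a list of (start, end) timestamp pairs for the current segments.
    Segments that only touch at a boundary count as not overlapping (assumption).
    """
    try:
        new_start, new_end = to_ms(start), to_ms(end)
    except ValueError:
        return False
    if new_end <= new_start:
        return False
    for seg_start, seg_end in existing:
        # Two intervals overlap when each one starts before the other ends.
        if new_start < to_ms(seg_end) and to_ms(seg_start) < new_end:
            return False
    return True


existing = [("00:00:01.010", "00:00:06.030"), ("00:00:07.030", "00:00:09.030")]
print(can_add_segment(existing, "00:00:06.030", "00:00:07.000"))  # True: fits the gap
print(can_add_segment(existing, "00:00:05.000", "00:00:06.500"))  # False: overlaps
```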

You can adjust the time frame of the scripts directly using the audio waveform below the video. The adjustments take effect after you select Apply changes.

If a segment has an "unidentified" voice name, the system might not have been able to detect the voice accurately, especially where speaker voices overlap. In such cases, change the voice name manually.

To adjust the voice, select Voice settings. On the Voice settings page, you can adjust the voice type, gender, and voice. Select the voice sample to the right of Voice to preview it before you decide. If a voice is missing, add the new voice name by selecting Add speaker. After changing the settings, select Update.

If you're making changes over several rounds and aren't finished yet, select Save to keep the changes you've made so far. After you've made all your changes, select Apply changes to apply them to the video. You're charged only after you select Apply changes.

You can translate the original video into a new language by selecting New language. On the Translate page, choose a new target language and voice type. After the video file is translated, a new project is created automatically.

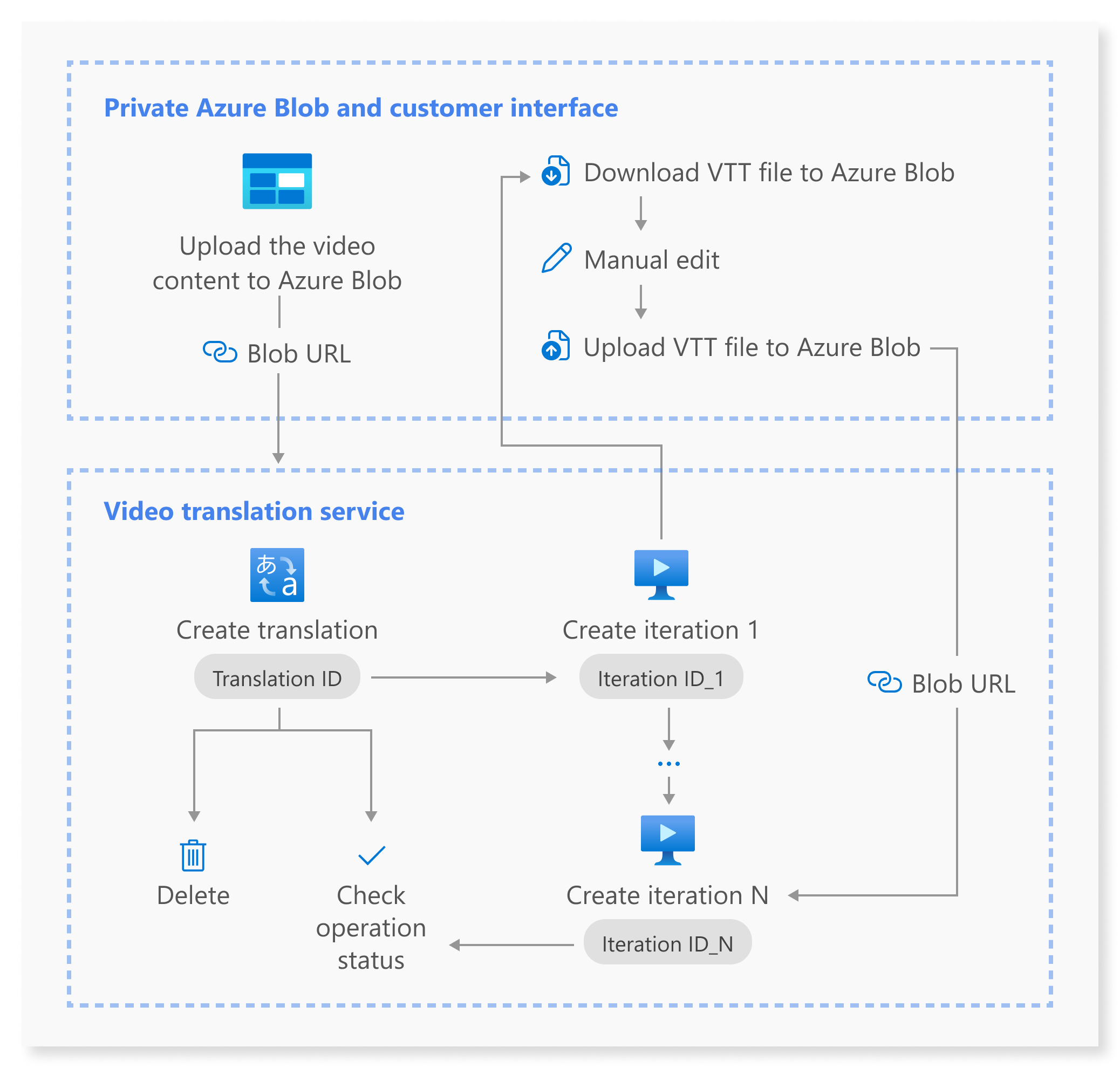

The video translation REST API lets you integrate video translation into your applications. It supports uploading, managing, and refining video translations, with multiple iterations for continuous improvement. In this article, you learn how to use video translation through the REST API.

This diagram provides a high-level overview of the workflow.

You can use the following REST API operations for video translation:

| Operation | Method | REST API call |
|---|---|---|
| Create a translation | PUT | /translations/{translationId} |
| List translations | GET | /translations |
| Get a translation by translation ID | GET | /translations/{translationId} |
| Create an iteration | PUT | /translations/{translationId}/iterations/{iterationId} |
| List iterations | GET | /translations/{translationId}/iterations |
| Get an iteration by iteration ID | GET | /translations/{translationId}/iterations/{iterationId} |
| Get operation by operation ID | GET | /operations/{operationId} |
| Delete a translation by translation ID | DELETE | /translations/{translationId} |
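Every operation in the table uses the base URL from this article's curl examples, `https://[YourSpeechRegion].api.cognitive.microsoft.com/videotranslation`, plus the `api-version=2024-05-20-preview` query parameter. As a sketch, you can turn the table into a lookup that builds the method and URL for any call. The operation keys such as `get_iteration` and the `build_call` function are hypothetical names chosen for this example, and `eastus` is only a sample region.

```python
from urllib.parse import quote, urlencode

API_VERSION = "2024-05-20-preview"  # the version used in this article's curl examples

# Method and path template for each operation in the table above (keys are hypothetical).
OPERATIONS = {
    "create_translation": ("PUT", "/translations/{translationId}"),
    "list_translations": ("GET", "/translations"),
    "get_translation": ("GET", "/translations/{translationId}"),
    "create_iteration": ("PUT", "/translations/{translationId}/iterations/{iterationId}"),
    "list_iterations": ("GET", "/translations/{translationId}/iterations"),
    "get_iteration": ("GET", "/translations/{translationId}/iterations/{iterationId}"),
    "get_operation": ("GET", "/operations/{operationId}"),
    "delete_translation": ("DELETE", "/translations/{translationId}"),
}


def build_call(region: str, operation: str, **ids: str) -> tuple:
    """Return (method, full URL); keyword arguments fill the {...} placeholders."""
    method, template = OPERATIONS[operation]
    # Percent-encode IDs so that unexpected characters can't alter the path.
    path = template.format(**{name: quote(value, safe="") for name, value in ids.items()})
    base = f"https://{region}.api.cognitive.microsoft.com/videotranslation"
    return method, f"{base}{path}?{urlencode({'api-version': API_VERSION})}"


method, url = build_call("eastus", "get_iteration",
                         translationId="mytranslation0920", iterationId="firstiteration0920")
print(method, url)
```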

For code samples, see GitHub.

This article outlines the primary steps of the API process, including creating a translation, creating an iteration, checking the status of each operation, getting an iteration by iteration ID, and deleting a translation by translation ID. For complete details, refer to the links provided for each API in the table.

Create a translation

To submit a video translation request, you need to construct an HTTP PUT request path and body according to the following instructions:

- Specify Operation-Id: The Operation-Id must be unique for each operation. It ensures that each operation is tracked separately. Replace [operationId] with an operation ID.

- Specify translationId: The translationId must be unique. Replace [translationId] with a translation ID.

- Set the required input: Include details like sourceLocale, targetLocale, voiceKind, and videoFileUrl. Ensure that you have the video URL from Azure Blob Storage. For the languages supported for video translation, refer to the supported source and target languages. You can set the voiceKind parameter to either PlatformVoice or PersonalVoice. For PlatformVoice, the system automatically selects the most suitable prebuilt voice by matching the speaker's voice in the video with prebuilt voices. For PersonalVoice, the system offers a model that generates high-quality voice replication in a few seconds.

  Note

  To use personal voice, you need to apply for access.

- Replace [YourResourceKey] with your Speech resource key and replace [YourSpeechRegion] with your Speech resource region.

Creating a translation doesn't start the translation process. To start translating the video, create an iteration. The following example is for the Windows command shell. If the URL contains &, escape each & as ^&. The sample code uses a public video URL, which you're welcome to use for your own testing.

curl -v -X PUT -H "Ocp-Apim-Subscription-Key: [YourResourceKey]" -H "Operation-Id: [operationId]" -H "Content-Type: application/json" -d "{\"displayName\": \"[YourDisplayName]\",\"description\": \"[OptionalYourDescription]\",\"input\": {\"sourceLocale\": \"[VideoSourceLocale]\",\"targetLocale\": \"[TranslationTargetLocale]\",\"voiceKind\": \"[PlatformVoice/PersonalVoice]\",\"speakerCount\": [OptionalVideoSpeakerCount],\"subtitleMaxCharCountPerSegment\": [OptionalYourPreferredSubtitleMaxCharCountPerSegment],\"exportSubtitleInVideo\": [Optional true/false],\"videoFileUrl\": \"https://speechstudioprodpublicsa.blob.core.windows.net/ttsvoice/VideoTranslation/PublicDoc/SampleData/es-ES-TryOutOriginal.mp4\"}}" "https://[YourSpeechRegion].api.cognitive.microsoft.com/videotranslation/translations/[translationId]?api-version=2024-05-20-preview"

Important

Data created through the API won't appear in Speech Studio, and the data between the API and Speech Studio isn't synchronized.
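You can also build the same request in code and skip the Windows shell quoting and `^&` escaping. The following Python sketch uses only the standard library to assemble the PUT request from the curl example without sending it. The function and parameter names are hypothetical, and the locales and IDs are example values. The `es-ES` source locale is inferred from the sample file name. The API version is the one used throughout this article.

```python
import json
import urllib.request
from typing import Optional


def build_create_translation_request(region: str, key: str, translation_id: str,
                                     operation_id: str, display_name: str,
                                     source_locale: str, target_locale: str,
                                     voice_kind: str, video_url: str,
                                     description: Optional[str] = None,
                                     **optional_input) -> urllib.request.Request:
    """Build (but don't send) the PUT request for Create a translation.

    optional_input can carry speakerCount, subtitleMaxCharCountPerSegment, or
    exportSubtitleInVideo, using the same names as the JSON body in the curl example.
    """
    if voice_kind not in ("PlatformVoice", "PersonalVoice"):
        raise ValueError("voice_kind must be PlatformVoice or PersonalVoice")
    body = {
        "displayName": display_name,
        "input": {
            "sourceLocale": source_locale,
            "targetLocale": target_locale,
            "voiceKind": voice_kind,
            "videoFileUrl": video_url,
            **optional_input,
        },
    }
    if description is not None:
        body["description"] = description
    url = (f"https://{region}.api.cognitive.microsoft.com/videotranslation/"
           f"translations/{translation_id}?api-version=2024-05-20-preview")
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Ocp-Apim-Subscription-Key": key,
            "Operation-Id": operation_id,
            "Content-Type": "application/json",
        },
        method="PUT",
    )


SAMPLE_VIDEO = ("https://speechstudioprodpublicsa.blob.core.windows.net/ttsvoice/"
                "VideoTranslation/PublicDoc/SampleData/es-ES-TryOutOriginal.mp4")
req = build_create_translation_request(
    "eastus", "<your-key>", "mytranslation0920", "createtranslation0920-1",
    "demo", "es-ES", "en-US", "PlatformVoice", SAMPLE_VIDEO,
    speakerCount=1, exportSubtitleInVideo=True)
# To send it: with urllib.request.urlopen(req) as resp: print(json.load(resp))
```

The body stays a Python dictionary until `json.dumps` serializes it, so quotes never need hand-escaping. The URL never passes through a shell, so `&` needs no `^&` escaping.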

You should receive a response body in the following format:

{
  "input": {
    "sourceLocale": "zh-CN",
    "targetLocale": "en-US",
    "voiceKind": "PlatformVoice",
    "speakerCount": 1,
    "subtitleMaxCharCountPerSegment": 30,
    "exportSubtitleInVideo": true
  },
  "status": "NotStarted",
  "lastActionDateTime": "2024-09-20T06:25:05.058Z",
  "id": "mytranslation0920",
  "displayName": "demo",
  "description": "for testing",
  "createdDateTime": "2024-09-20T06:25:05.058Z"
}

The status property progresses from NotStarted to Running, and finally to Succeeded or Failed. Call the Get operation by operation ID API periodically until the returned status is Succeeded or Failed. This lets you monitor the progress of the translation creation.

Get operation by operation ID

Check the status of a specific operation using its operation ID. The operation ID is unique for each operation, so you can track each operation separately.

Replace [YourResourceKey] with your Speech resource key, [YourSpeechRegion] with your Speech resource region, and [operationId] with the operation ID you want to check.

curl -v -X GET -H "Ocp-Apim-Subscription-Key:[YourResourceKey]" "https://[YourSpeechRegion].api.cognitive.microsoft.com/videotranslation/operations/[operationId]?api-version=2024-05-20-preview"

You should receive a response body in the following format:

{
  "id": "createtranslation0920-1",
  "status": "Running"
}
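Creating a translation and creating an iteration both run asynchronously, so a client typically polls Get operation by operation ID until the status is Succeeded or Failed. The following Python sketch shows one way to do that. The 10-second interval and one-hour timeout are arbitrary assumptions, not service guidance. `wait_for_operation` and `operation_fetcher` are hypothetical helper names. The status source is passed in as a function so that the loop can be tested without calling the service.

```python
import json
import time
import urllib.request
from typing import Callable

TERMINAL_STATUSES = {"Succeeded", "Failed"}


def wait_for_operation(fetch_status: Callable[[], str], interval_s: float = 10.0,
                       timeout_s: float = 3600.0,
                       sleep: Callable[[float], None] = time.sleep) -> str:
    """Call fetch_status() until it returns Succeeded or Failed, then return that status."""
    waited = 0.0
    while True:
        status = fetch_status()
        if status in TERMINAL_STATUSES:
            return status
        if waited >= timeout_s:
            raise TimeoutError(f"operation still {status!r} after {waited:.0f} s")
        sleep(interval_s)
        waited += interval_s


def operation_fetcher(region: str, key: str, operation_id: str) -> Callable[[], str]:
    """Return a function that calls Get operation by operation ID and returns its status."""
    url = (f"https://{region}.api.cognitive.microsoft.com/videotranslation/"
           f"operations/{operation_id}?api-version=2024-05-20-preview")

    def fetch() -> str:
        req = urllib.request.Request(url, headers={"Ocp-Apim-Subscription-Key": key})
        with urllib.request.urlopen(req) as resp:
            return json.loads(resp.read())["status"]

    return fetch
```

For example, `wait_for_operation(operation_fetcher("eastus", key, "createtranslation0920-1"))` blocks until the create-translation operation finishes and returns its final status.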

Create an iteration

To start translating your video or update an iteration for an existing translation, you need to construct an HTTP PUT request path and body according to the following instructions:

- Specify Operation-Id: The Operation-Id must be unique for each operation, such as creating each iteration. Replace [operationId] with a unique ID for this operation.

- Specify translationId: If multiple iterations are performed under a single translation, the translation ID remains unchanged.

- Specify iterationId: The iterationId must be unique for each operation. Replace [iterationId] with an iteration ID.

- Set the required input: Include details like speakerCount, subtitleMaxCharCountPerSegment, exportSubtitleInVideo, or webvttFile. No subtitles are embedded in the output video by default.

- Replace [YourResourceKey] with your Speech resource key and replace [YourSpeechRegion] with your Speech resource region.

The following example is for the Windows command shell. If the URL contains &, escape each & as ^&.

curl -v -X PUT -H "Ocp-Apim-Subscription-Key: [YourResourceKey]" -H "Operation-Id: [operationId]" -H "Content-Type: application/json" -d "{\"input\": {\"speakerCount\": [OptionalVideoSpeakerCount],\"subtitleMaxCharCountPerSegment\": [OptionalYourPreferredSubtitleMaxCharCountPerSegment],\"exportSubtitleInVideo\": [Optional true/false],\"webvttFile\": {\"Kind\": \"[SourceLocaleSubtitle/TargetLocaleSubtitle/MetadataJson]\", \"url\": \"[AzureBlobUrlWithSas]\"}}}" "https://[YourSpeechRegion].api.cognitive.microsoft.com/videotranslation/translations/[translationId]/iterations/[iterationId]?api-version=2024-05-20-preview"

Note

When creating an iteration, if you've already specified the optional parameters speakerCount, subtitleMaxCharCountPerSegment, and exportSubtitleInVideo during the creation of the translation, you don't need to specify them again. The values are inherited from the translation settings. If you do specify these parameters when creating an iteration, the new values override the original settings.

The webvttFile parameter isn't required when creating the first iteration. However, starting from the second iteration, you must specify the webvttFile parameter. Download the WebVTT file, make the necessary edits, upload it to your Azure Blob Storage, and then specify its Blob URL in the curl command.

Data created through the API won't appear in Speech Studio, and the data between the API and Speech Studio isn't synchronized.
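The inheritance rule in the note above means you can leave out any optional field you don't want to change. The following Python sketch builds the iteration request body that way. It also enforces the rule that iterations after the first must include `webvttFile`. The function and parameter names are hypothetical. The JSON field names, including the `Kind` and `url` keys, are copied from the curl example.

```python
import json
from typing import Optional

WEBVTT_KINDS = ("SourceLocaleSubtitle", "TargetLocaleSubtitle", "MetadataJson")


def build_iteration_body(first_iteration: bool,
                         speaker_count: Optional[int] = None,
                         subtitle_max_chars: Optional[int] = None,
                         export_subtitle_in_video: Optional[bool] = None,
                         webvtt_kind: Optional[str] = None,
                         webvtt_url: Optional[str] = None) -> str:
    """Return the JSON body for Create an iteration.

    Parameters left as None are omitted, so the service falls back to the
    translation's settings, as described in the note above.
    """
    fields = {
        "speakerCount": speaker_count,
        "subtitleMaxCharCountPerSegment": subtitle_max_chars,
        "exportSubtitleInVideo": export_subtitle_in_video,
    }
    body_input = {name: value for name, value in fields.items() if value is not None}
    if webvtt_url is None:
        if not first_iteration:
            raise ValueError("iterations after the first must include webvttFile")
    else:
        if webvtt_kind not in WEBVTT_KINDS:
            raise ValueError(f"webvtt_kind must be one of {WEBVTT_KINDS}")
        # Key spelling ("Kind", "url") copied from the curl example above.
        body_input["webvttFile"] = {"Kind": webvtt_kind, "url": webvtt_url}
    return json.dumps({"input": body_input})


print(build_iteration_body(first_iteration=True))  # prints {"input": {}}
# Second iteration with an edited target subtitle file (placeholder URL):
second = build_iteration_body(False, webvtt_kind="TargetLocaleSubtitle",
                              webvtt_url="[AzureBlobUrlWithSas]")
```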

The subtitle file can be in WebVTT or JSON format. If you're unsure how to prepare a WebVTT file, refer to the following sample.

WEBVTT

00:00:01.010 --> 00:00:06.030
Hello this is a sample subtitle.

00:00:07.030 --> 00:00:09.030
Hello this is a sample subtitle.
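Editing subtitles between iterations involves three steps: read the downloaded WebVTT file, change the cue text or timings, and write the file back before you upload it to Blob Storage. This minimal Python sketch handles only the simple layout shown above. It assumes hh:mm:ss.mmm timings, skips cue identifiers, and drops NOTE, STYLE, and other non-cue blocks. `Cue`, `parse_vtt`, and `write_vtt` are hypothetical names and aren't part of any Azure SDK.

```python
import re
from dataclasses import dataclass
from typing import List

# Assumption: timings use the hh:mm:ss.mmm form shown above; WebVTT also allows
# mm:ss.mmm, which this sketch doesn't handle.
TIMING = re.compile(r"(\d{2}:\d{2}:\d{2}\.\d{3}) --> (\d{2}:\d{2}:\d{2}\.\d{3})")


@dataclass
class Cue:
    start: str
    end: str
    text: str


def parse_vtt(content: str) -> List[Cue]:
    """Parse simple cues; the header, cue IDs, and non-cue blocks are skipped."""
    cues = []
    for block in re.split(r"\n\s*\n", content.replace("\r\n", "\n").strip()):
        lines = block.split("\n")
        for i, line in enumerate(lines):
            m = TIMING.match(line)
            if m:
                # Everything after the timing line is the cue text.
                cues.append(Cue(m.group(1), m.group(2), "\n".join(lines[i + 1:])))
                break
    return cues


def write_vtt(cues: List[Cue]) -> str:
    """Serialize cues back to WebVTT, starting with the required WEBVTT header."""
    parts = ["WEBVTT", ""]
    for cue in cues:
        parts += [f"{cue.start} --> {cue.end}", cue.text, ""]
    return "\n".join(parts)


sample = ("WEBVTT\n\n00:00:01.010 --> 00:00:06.030\nHello this is a sample subtitle.\n\n"
          "00:00:07.030 --> 00:00:09.030\nHello this is a sample subtitle.\n")
cues = parse_vtt(sample)
cues[1].text = "Hello, this is an edited subtitle."
edited = write_vtt(cues)  # upload this text to Blob Storage for the next iteration
```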

You should receive a response body in the following format:

{
  "input": {
    "speakerCount": 1,
    "subtitleMaxCharCountPerSegment": 30,
    "exportSubtitleInVideo": true
  },
  "status": "NotStarted",
  "lastActionDateTime": "2024-09-20T06:31:53.760Z",
  "id": "firstiteration0920",
  "createdDateTime": "2024-09-20T06:31:53.760Z"
}

Use the operationId you specified to call the Get operation by operation ID API periodically until the returned status is Succeeded or Failed. This lets you monitor the progress of the iteration.

Get an iteration by iteration ID

To retrieve details of a specific iteration by its ID, use the HTTP GET request. Replace [YourResourceKey] with your Speech resource key, [YourSpeechRegion] with your Speech resource region, [translationId] with the translation ID you want to check, and [iterationId] with the iteration ID you want to check.

curl -v -X GET -H "Ocp-Apim-Subscription-Key: [YourResourceKey]" "https://[YourSpeechRegion].api.cognitive.microsoft.com/videotranslation/translations/[translationId]/iterations/[iterationId]?api-version=2024-05-20-preview"

You should receive a response body in the following format:

{
  "input": {
    "speakerCount": 1,
    "subtitleMaxCharCountPerSegment": 30,
    "exportSubtitleInVideo": true
  },
  "result": {
    "translatedVideoFileUrl": "https://xxx.blob.core.windows.net/container1/video.mp4?sv=2023-01-03&st=2024-05-20T08%3A27%3A15Z&se=2024-05-21T08%3A27%3A15Z&sr=b&sp=r&sig=xxx",
    "sourceLocaleSubtitleWebvttFileUrl": "https://xxx.blob.core.windows.net/container1/sourceLocale.vtt?sv=2023-01-03&st=2024-05-20T08%3A27%3A15Z&se=2024-05-21T08%3A27%3A15Z&sr=b&sp=r&sig=xxx",
    "targetLocaleSubtitleWebvttFileUrl": "https://xxx.blob.core.windows.net/container1/targetLocale.vtt?sv=2023-01-03&st=2024-05-20T08%3A27%3A15Z&se=2024-05-21T08%3A27%3A15Z&sr=b&sp=r&sig=xxx",
    "metadataJsonWebvttFileUrl": "https://xxx.blob.core.windows.net/container1/metadataJsonLocale.vtt?sv=2023-01-03&st=2024-05-20T08%3A27%3A15Z&se=2024-05-21T08%3A27%3A15Z&sr=b&sp=r&sig=xxx"
  },
  "status": "Succeeded",
  "lastActionDateTime": "2024-09-20T06:32:59.933Z",
  "id": "firstiteration0920",
  "createdDateTime": "2024-09-20T06:31:53.760Z"
}
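After an iteration has Succeeded, the `result` object holds the download URLs, and the following Python sketch extracts them from a Get-iteration response. The URLs in the sample response carry Azure Storage shared access signature (SAS) query parameters. The `se` parameter is the signed expiry time, so the sketch also reads it to show when a link stops working, and you might want to download the files before that time. The function names are hypothetical.

```python
import json
from urllib.parse import parse_qs, urlparse

RESULT_KEYS = (
    "translatedVideoFileUrl",
    "sourceLocaleSubtitleWebvttFileUrl",
    "targetLocaleSubtitleWebvttFileUrl",
    "metadataJsonWebvttFileUrl",
)


def iteration_outputs(response_text: str) -> dict:
    """Return the result URLs from a Get-iteration response that has Succeeded."""
    data = json.loads(response_text)
    if data.get("status") != "Succeeded":
        raise RuntimeError(f"iteration {data.get('id')!r} has status {data.get('status')!r}")
    result = data.get("result", {})
    return {key: result[key] for key in RESULT_KEYS if key in result}


def sas_expiry(url: str):
    """Return the SAS 'se' (signed expiry) value, decoded, or None if absent."""
    values = parse_qs(urlparse(url).query).get("se")
    return values[0] if values else None


response = json.dumps({
    "status": "Succeeded",
    "id": "firstiteration0920",
    "result": {"translatedVideoFileUrl": "https://xxx.blob.core.windows.net/container1/video.mp4"
               "?sv=2023-01-03&st=2024-05-20T08%3A27%3A15Z&se=2024-05-21T08%3A27%3A15Z"
               "&sr=b&sp=r&sig=xxx"},
})
outputs = iteration_outputs(response)
print(sas_expiry(outputs["translatedVideoFileUrl"]))  # prints 2024-05-21T08:27:15Z
```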

Delete a translation by translation ID

Remove a specific translation identified by translationId. This operation also removes all iterations associated with this translation. Replace [YourResourceKey] with your Speech resource key, [YourSpeechRegion] with your Speech resource region, and [translationId] with the translation ID you want to delete. If not deleted manually, the service retains the translation history for up to 31 days.

curl -v -X DELETE -H "Ocp-Apim-Subscription-Key: [YourResourceKey]" "https://[YourSpeechRegion].api.cognitive.microsoft.com/videotranslation/translations/[translationId]?api-version=2024-05-20-preview"

The response headers include HTTP/1.1 204 No Content if the delete request was successful.

Additional information

This section provides curl commands for other API calls that aren't described in detail above. You can explore each API using the following commands.

List translations

To list all video translations that have been uploaded and processed in your resource account, make an HTTP GET request as shown in the following example. Replace [YourResourceKey] with your Speech resource key and replace [YourSpeechRegion] with your Speech resource region.

curl -v -X GET -H "Ocp-Apim-Subscription-Key: [YourResourceKey]" "https://[YourSpeechRegion].api.cognitive.microsoft.com/videotranslation/translations?api-version=2024-05-20-preview"

Get a translation by translation ID

This operation retrieves detailed information about a specific translation, identified by its unique translationId. Replace [YourResourceKey] with your Speech resource key, [YourSpeechRegion] with your Speech resource region, and [translationId] with the translation ID you want to check.

curl -v -X GET -H "Ocp-Apim-Subscription-Key: [YourResourceKey]" "https://[YourSpeechRegion].api.cognitive.microsoft.com/videotranslation/translations/[translationId]?api-version=2024-05-20-preview"

List iterations

List all iterations for a specific translation. This request lists all iterations without detailed information. Replace [YourResourceKey] with your Speech resource key, [YourSpeechRegion] with your Speech resource region, and [translationId] with the translation ID you want to check.

curl -v -X GET -H "Ocp-Apim-Subscription-Key: [YourResourceKey]" "https://[YourSpeechRegion].api.cognitive.microsoft.com/videotranslation/translations/[translationId]/iterations?api-version=2024-05-20-preview"

HTTP status codes

This section details the HTTP response codes and messages from the video translation REST API.

HTTP 200 OK

HTTP 200 OK indicates that the request was successful.

HTTP 204 No Content

HTTP 204 No Content indicates that the request was successful but no resource was returned. For example:

- You tried to get or delete a translation that doesn't exist.

- You successfully deleted a translation.

HTTP 400 error

Here are examples that can result in the 400 error:

- The source or target locale you specified isn't among the supported locales.

- You tried to use an F0 Speech resource, but the region only supports the Standard Speech resource pricing tier.

HTTP 500 error

HTTP 500 Internal Server Error indicates that the request failed. The response body contains the error message.
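When you call the API from code instead of curl, error responses need explicit handling. In Python's standard library, `urllib.request.urlopen` raises `urllib.error.HTTPError` for 4xx and 5xx responses. You can read the error body, which carries the error message, from the exception itself. The following sketch wraps that behavior. The `send` name and the `(status, body)` return shape are hypothetical choices for this example. The `opener` parameter exists only so that the function can be tested without network access.

```python
import json
import urllib.error
import urllib.request


def send(req, opener=urllib.request.urlopen):
    """Send a request and return (status_code, parsed_body).

    parsed_body is None for an empty body (for example, 204 No Content after a
    delete), parsed JSON when possible, and otherwise the raw text.
    """
    try:
        with opener(req) as resp:
            status, raw = resp.status, resp.read()
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses raise HTTPError; its body carries the error message.
        status, raw = err.code, err.read()
    if not raw:
        return status, None
    try:
        return status, json.loads(raw)
    except json.JSONDecodeError:
        return status, raw.decode("utf-8", errors="replace")
```

A 200 response returns the parsed JSON, and a successful delete returns `(204, None)`. A 400 or 500 response returns its error body for logging, and nothing is raised.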