Pricing example: Copy data from AWS S3 to Azure Blob storage hourly

APPLIES TO: Azure Data Factory, Azure Synapse Analytics


In this scenario, you want to copy data from AWS S3 to Azure Blob storage on an hourly schedule for 8 hours per day, for 30 days.

The prices used in this example are hypothetical and aren't intended to imply exact actual pricing. Read/write and monitoring costs aren't shown because they're typically negligible and don't significantly affect overall costs. Activity runs are also rounded up to the nearest 1000 in pricing calculator estimates.

Refer to the Azure Pricing Calculator for more specific scenarios and to estimate your future costs to use the service.

Configuration

To accomplish the scenario, you need to create a pipeline with the following items:

- A copy activity that moves 10 GB of data from AWS S3 to Azure Blob storage. The copy is estimated to run for 2-3 hours, with the DIU setting on Auto.

- A schedule trigger that executes the pipeline every hour for 8 hours every day. When you want to run a pipeline, you can either trigger it immediately or schedule it. In addition to the pipeline itself, each trigger instance counts as a single Activity run.
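As a rough sketch of how this schedule translates into billable Activity runs, the Python snippet below counts the runs for the scenario. The function and parameter names are illustrative assumptions and aren't part of any Data Factory API; the rounding step only mirrors the pricing calculator's increments of 1000 described in this article.

```python
import math


def activity_runs(executions_per_day: int, days: int, runs_per_execution: int = 2) -> int:
    """Count Activity runs: each execution bills one trigger run plus one copy activity run."""
    return executions_per_day * days * runs_per_execution


def calculator_quantity(runs: int, increment: int = 1000) -> int:
    """Round up to the next increment, since the calculator only accepts steps of 1000."""
    return math.ceil(runs / increment) * increment


runs = activity_runs(executions_per_day=8, days=30)
print(runs)                       # 480
print(calculator_quantity(runs))  # 1000
```

Changing `executions_per_day` or `days` shows how quickly a different schedule moves the estimate into the next block of 1000 runs.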

Cost estimation

| Operations | Types and Units |

|---|---|

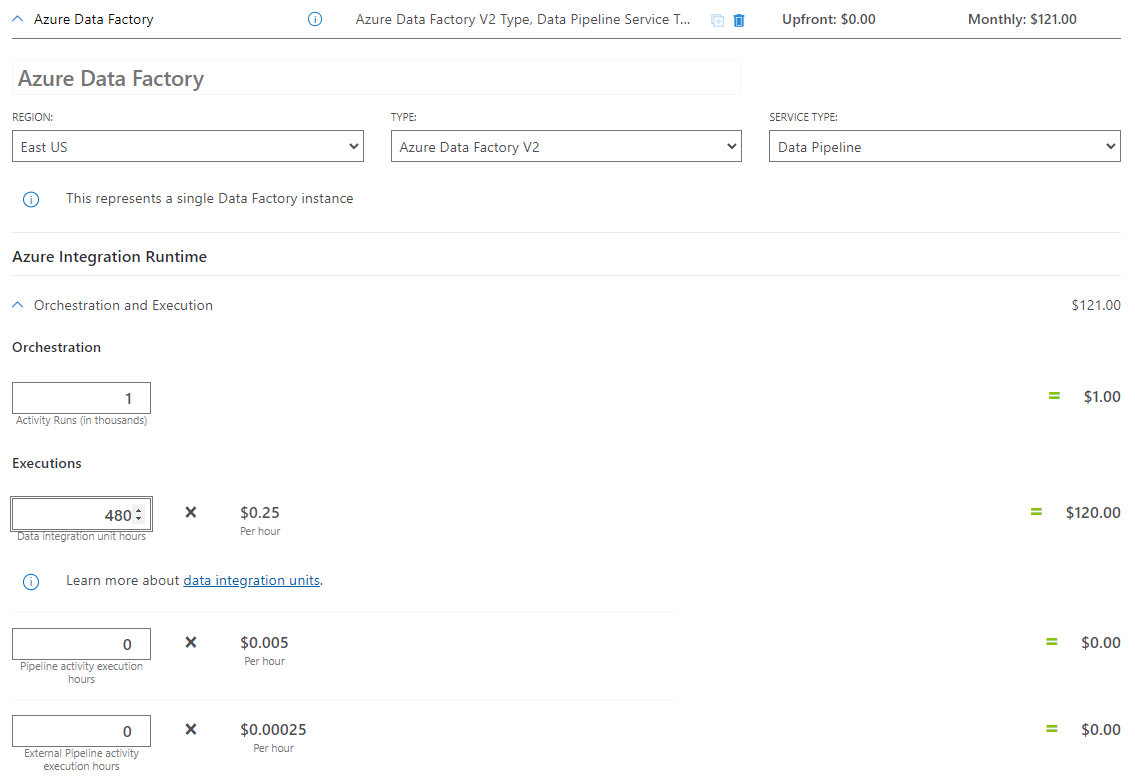

| Run Pipeline | 2 Activity runs per execution (1 for the trigger to run, 1 for activity to run) = 480 Activity runs, rounded up since the calculator only allows increments of 1000. |

| Copy Data Assumption: DIU hours per execution | 0.5 hours * 4 Azure Integration Runtime (default DIU setting = 4) For more information on data integration units and optimizing copy performance, see this article |

| Total execution hours: 8 executions per day for 30 days | 240 executions * 2 DIU/run = 480 DIUs |
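The DIU arithmetic in the table can be reproduced with a short Python sketch. The helper names are hypothetical, and the per-DIU-hour rate is deliberately left as a parameter because this article doesn't list unit prices; take the current rate from the Azure Pricing Calculator.

```python
def diu_hours_per_execution(copy_hours: float, dius: int) -> float:
    """DIU-hours consumed by one copy execution: run time multiplied by the DIU count."""
    return copy_hours * dius


def total_diu_hours(executions: int, copy_hours: float, dius: int) -> float:
    """DIU-hours across all executions in the billing period."""
    return executions * diu_hours_per_execution(copy_hours, dius)


def copy_cost(diu_hours: float, price_per_diu_hour: float) -> float:
    """Estimated copy charge; price_per_diu_hour is an input you supply, not a value from this article."""
    return diu_hours * price_per_diu_hour


executions = 8 * 30  # 8 executions per day for 30 days
print(diu_hours_per_execution(0.5, 4))      # 2.0
print(total_diu_hours(executions, 0.5, 4))  # 480.0
```

Keeping the rate as an explicit argument makes it easy to rerun the estimate when prices or the DIU setting change.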

Pricing calculator example

Total scenario pricing for 30 days: $122.00

Related content

- Pricing example: Copy data and transform with Azure Databricks hourly for 30 days

- Pricing example: Copy data and transform with dynamic parameters hourly for 30 days

- Pricing example: Run SSIS packages on Azure-SSIS integration runtime

- Pricing example: Using mapping data flow debug for a normal workday

- Pricing example: Transform data in blob store with mapping data flows

- Pricing example: Data integration in Azure Data Factory Managed VNET

- Pricing example: Get delta data from SAP ECC via SAP CDC in mapping data flows