Set daily cap on Log Analytics workspace

A daily cap on a Log Analytics workspace allows you to avoid unexpected increases in charges for data ingestion by stopping collection of billable data for the rest of the day whenever a specified threshold is reached. This article describes how the daily cap works and how to configure one in your workspace.

Important

Use care when setting a daily cap. When data collection stops, it affects your ability to observe the health of your resources and to receive alerts about them. It can also affect other Azure services and solutions whose functionality may depend on up-to-date data in the workspace. Your goal shouldn't be to hit the daily limit regularly. Instead, use it as an infrequent safeguard against unplanned charges from an unexpected increase in the volume of data collected.

For strategies to reduce your Azure Monitor costs, see Cost optimization and Azure Monitor.

Permissions required

| Action | Permissions or role needed |
|---|---|
| Set the daily cap on a Log Analytics workspace | Microsoft.OperationalInsights/workspaces/write permissions to the Log Analytics workspaces you set the daily cap on, as provided by the Log Analytics Contributor built-in role, for example. |
| Set the daily cap on a classic Application Insights resource | microsoft.insights/components/CurrentBillingFeatures/write permissions to the classic Application Insights resources you set the daily cap on, as provided by the Application Insights Component Contributor built-in role, for example. |
| Create an alert when the daily cap for a Log Analytics workspace is reached | microsoft.insights/scheduledqueryrules/write permissions, as provided by the Monitoring Contributor built-in role, for example. |
| Create an alert when the daily cap for a classic Application Insights resource is reached | microsoft.insights/activitylogalerts/write permissions, as provided by the Monitoring Contributor built-in role, for example. |
| View the effect of the daily cap | Microsoft.OperationalInsights/workspaces/query/*/read permissions to the Log Analytics workspaces you query, as provided by the Log Analytics Reader built-in role, for example. |

How the daily cap works

Each workspace has a daily cap that defines its own data volume limit. When the daily cap is reached, a warning banner appears across the top of the page for the selected Log Analytics workspace in the Azure portal, and an operation event is sent to the Operation table under the LogManagement category. You can optionally create an alert rule to send an alert when this event is created.

The data size used for the daily cap is the size after customer-defined data transformations. (Learn more about data transformations in Data Collection Rules.)

Data collection resumes at the reset time, which is a different hour of the day for each workspace. This reset hour can't be configured.
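A cap period runs from one reset to the next, not from midnight to midnight. Computing the current period's boundaries can therefore help. The following Python sketch assumes that the reset hour shown on the Daily Cap page is in UTC. The function names are hypothetical and aren't part of any Azure SDK.

```python
from datetime import datetime, timedelta, timezone


def cap_period_start(now: datetime, reset_hour: int) -> datetime:
    """Return the UTC start of the daily cap period that contains `now`.

    Assumes `reset_hour` (0-23) is the workspace's reset hour in UTC, as shown
    on the Daily Cap page, and that `now` is timezone-aware.
    """
    if not 0 <= reset_hour <= 23:
        raise ValueError("reset_hour must be between 0 and 23")
    now_utc = now.astimezone(timezone.utc)
    # The reset instant on the same UTC calendar day as `now`.
    reset_today = now_utc.replace(hour=reset_hour, minute=0, second=0, microsecond=0)
    # Before today's reset, the current period started at yesterday's reset.
    if now_utc < reset_today:
        return reset_today - timedelta(days=1)
    return reset_today


def cap_period_end(now: datetime, reset_hour: int) -> datetime:
    """Return the next reset instant, when collection resumes if the cap was reached."""
    return cap_period_start(now, reset_hour) + timedelta(days=1)


# Example: a workspace whose reset hour is 14:00 UTC.
now = datetime(2024, 5, 1, 9, 30, tzinfo=timezone.utc)
print(cap_period_start(now, 14))  # 2024-04-30 14:00:00+00:00
print(cap_period_end(now, 14))    # 2024-05-01 14:00:00+00:00
```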

Note

The daily cap can't stop data collection at exactly the specified cap level, so some excess data is expected. The amount collected beyond the daily cap can be particularly large if the workspace is receiving data at a high rate. Data collected above the cap is still billed. See View the effect of the daily cap for a query that helps you study the daily cap behavior.

When to use a daily cap

Daily caps are typically used by organizations that are particularly cost conscious. They shouldn't be used as a method to reduce costs, but rather as a preventative measure to ensure that you don't exceed a particular budget.

When data collection stops, you effectively have no monitoring of features and resources relying on that workspace. Instead of relying on the daily cap alone, you can create an alert rule to notify you when data collection reaches some level before the daily cap. Notification allows you to address any increases before data collection shuts down, or even to temporarily disable collection for less critical resources.
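An early-warning alert like this compares ingestion since the last reset against a fraction of the cap. This Python sketch is illustrative only. `cap_status` and its 80% default are hypothetical choices. In practice, you'd express the same check as a log search alert rule over your workspace's usage data.

```python
def cap_status(ingested_gb: float, daily_cap_gb: float, warn_fraction: float = 0.8) -> str:
    """Classify billable ingestion since the last reset against the daily cap.

    `warn_fraction` is a hypothetical early-warning level (80% of the cap by
    default); choose a margin that gives you time to react.
    """
    if daily_cap_gb <= 0:
        raise ValueError("daily_cap_gb must be positive")
    if not 0 < warn_fraction < 1:
        raise ValueError("warn_fraction must be between 0 and 1")
    if ingested_gb >= daily_cap_gb:
        return "cap reached"  # billable collection stops until the next reset
    if ingested_gb >= warn_fraction * daily_cap_gb:
        return "warning"      # notify now, before collection shuts down
    return "ok"


for gb in (40.0, 85.0, 101.0):
    print(gb, cap_status(gb, daily_cap_gb=100.0))
```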

Application Insights

You should configure the daily cap setting for both Application Insights and Log Analytics to limit the amount of telemetry data ingested by your service. For workspace-based Application Insights resources, the effective daily cap is the minimum of the two settings. For classic Application Insights resources, only the Application Insights daily cap applies since their data doesn’t reside in a Log Analytics workspace.
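The rule for which cap applies to Application Insights data can be written as a small function. This is a hypothetical Python sketch of the rule above, not an Azure API. Passing `workspace_cap_gb=None` represents a workspace with no daily cap set.

```python
from typing import Optional


def effective_daily_cap_gb(
    app_insights_cap_gb: float,
    workspace_cap_gb: Optional[float],
    workspace_based: bool,
) -> float:
    """Return the daily cap that effectively limits an Application Insights resource."""
    if not workspace_based:
        # Classic resources: data doesn't reside in a Log Analytics workspace,
        # so only the Application Insights cap applies.
        return app_insights_cap_gb
    if workspace_cap_gb is None:
        # No workspace cap configured, so only the Application Insights cap limits ingestion.
        return app_insights_cap_gb
    # Workspace-based resources: the lower of the two settings wins.
    return min(app_insights_cap_gb, workspace_cap_gb)


print(effective_daily_cap_gb(100.0, 10.0, workspace_based=True))   # 10.0
print(effective_daily_cap_gb(100.0, 10.0, workspace_based=False))  # 100.0
```

The workspace cap counts all billable data in the workspace, not only this application's telemetry. If other sources share the workspace, the cap can be reached before this application alone sends that much data.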

Tip

If you're concerned about the amount of billable data collected by Application Insights, you should configure sampling to tune its data volume to the level you want. Use the daily cap as a safety method in case your application unexpectedly begins to send much higher volumes of telemetry.

The maximum cap for a classic Application Insights resource is 1,000 GB/day unless you request a higher maximum for a high-traffic application. When you create a resource in the Azure portal, the daily cap is set to 100 GB/day. When you create a resource in Visual Studio, the default is small (only 32.3 MB/day). This small default is intended to facilitate testing, so raise the daily cap before you deploy the app into production.

Note

If you are using connection strings to send data to Application Insights using regional ingestion endpoints, then the Application Insights and Log Analytics daily cap settings are effective per region. If you are using only instrumentation key (ikey) to send data to Application Insights using the global ingestion endpoint, then the Application Insights daily cap setting may not be effective across regions, but the Log Analytics daily cap setting will still apply.

We've removed the restriction on some subscription types that have credit that couldn't be used for Application Insights. Previously, if the subscription had a spending limit, the daily cap dialog had instructions to remove the spending limit and enable the daily cap to be raised beyond 32.3 MB/day.

Determine your daily cap

To help you determine an appropriate daily cap for your workspace, see Azure Monitor cost and usage to understand your data ingestion trends. You can also review Analyze usage in Log Analytics workspace, which provides methods to analyze your workspace usage in more detail.

Workspaces with Microsoft Defender for Cloud

Important

Starting September 18, 2023, Azure Monitor caps all billable data types when the daily cap is met. There's no special behavior for any data types when Microsoft Defender for Servers is enabled on your workspace.

This change improves your ability to fully contain costs from higher-than-expected data ingestion.

If you have a daily cap set on a workspace that has Microsoft Defender for Servers enabled, be sure that the cap is high enough to accommodate this change.

Also, be sure to set an alert (see Alert when daily cap is reached) so that you're notified as soon as your daily cap is met.

Until September 18, 2023, if a workspace enabled the Microsoft Defender for Servers solution after June 19, 2017, some security-related data types were collected for Microsoft Defender for Cloud or Microsoft Sentinel despite any configured daily cap. The following data types were subject to this special exception from the daily cap: WindowsEvent, SecurityAlert, SecurityBaseline, SecurityBaselineSummary, SecurityDetection, SecurityEvent, WindowsFirewall, MaliciousIPCommunication, LinuxAuditLog, SysmonEvent, ProtectionStatus, Update, UpdateSummary, CommonSecurityLog, and Syslog.

Set the daily cap

Log Analytics workspace

To set or change the daily cap for a Log Analytics workspace in the Azure portal:

- From the Log Analytics workspaces menu, select your workspace, and then Usage and estimated costs.

- Select Daily Cap at the top of the page.

- Select ON and then set the data volume limit in GB/day.

Note

The reset hour for the workspace is displayed but cannot be configured.

To configure the daily cap with Azure Resource Manager, set the dailyQuotaGb parameter under WorkspaceCapping as described at Workspaces - Create Or Update.

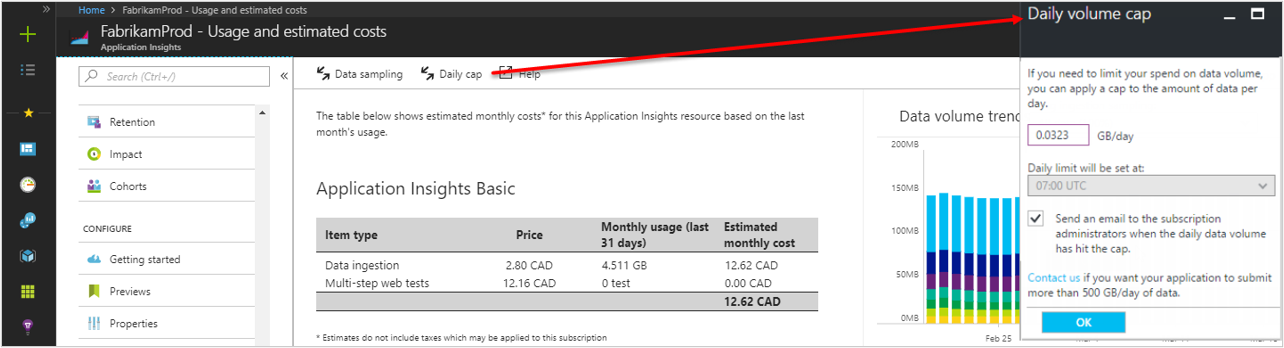
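For the Resource Manager approach, the daily cap is stored in the workspace's `properties.workspaceCapping.dailyQuotaGb` field. The Python sketch below only builds a request body and doesn't call Azure. The helper name and the location, SKU, and retention values are illustrative assumptions. The sketch starts from the existing properties so that other workspace settings aren't changed unintentionally. See Workspaces - Create Or Update for the full schema.

```python
import copy
import json


def with_daily_cap(workspace_properties: dict, daily_quota_gb: float) -> dict:
    """Return a copy of a workspace `properties` object with the daily cap set.

    Sets workspaceCapping.dailyQuotaGb and leaves every other property,
    and the caller's dict, unchanged. Hypothetical helper, not an Azure SDK call.
    """
    if daily_quota_gb <= 0:
        raise ValueError("daily_quota_gb must be positive")
    updated = copy.deepcopy(workspace_properties)
    updated.setdefault("workspaceCapping", {})["dailyQuotaGb"] = daily_quota_gb
    return updated


# Illustrative values only: start from your workspace's existing definition.
existing = {"sku": {"name": "PerGB2018"}, "retentionInDays": 30}
body = {"location": "eastus", "properties": with_daily_cap(existing, 10)}
print(json.dumps(body, indent=2))
```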

Classic Application Insights resource

To set or change the daily cap for a classic Application Insights resource in the Azure portal:

- From the Monitor menu, select Applications, your application, and then Usage and estimated costs.

- Select Data Cap at the top of the page.

- Set the data volume limit in GB/day.

- If you want an email sent to the subscription administrator when the daily limit is reached, then select that option.

- Set the daily cap warning level in percentage of the data volume limit.

- If you want an email sent to the subscription administrator when the daily cap warning level is reached, then select that option.

To configure the daily cap with Azure Resource Manager, set the dailyQuota, dailyQuotaResetTime and warningThreshold parameters as described at Workspaces - Create Or Update.

Alert when daily cap is reached

When the daily cap is reached for a Log Analytics workspace, a banner is displayed in the Azure portal and an event is written to the Operation table in the workspace. Create an alert rule to notify you proactively when this occurs.

To receive an alert when the daily cap is reached, create a log search alert rule with the following details.

| Setting | Value |
|---|---|
| Scope | |
| Target scope | Select your Log Analytics workspace. |
| Condition | |
| Signal type | Log |
| Signal name | Custom log search |
| Query | `_LogOperation \| where Category =~ "Ingestion" \| where Detail contains "OverQuota"` |
| Measurement | Measure: Table rows; Aggregation type: Count; Aggregation granularity: 5 minutes |
| Alert logic | Operator: Greater than; Threshold value: 0; Frequency of evaluation: 5 minutes |
| Actions | Select or add an action group to notify you when the threshold is exceeded. |
| Details | |
| Severity | Warning |
| Alert rule name | Daily data limit reached |

Classic Application Insights resource

When the daily cap is reached for a classic Application Insights resource, an event is created in the Azure Activity log with one of the following signal names. You can also optionally have an email sent to the subscription administrator when the cap is reached and when a specified percentage of the daily cap has been reached.

- Application Insights component daily cap warning threshold reached

- Application Insights component daily cap reached

To create an alert when the daily cap is reached, create an Activity log alert rule with the following details.

| Setting | Value |
|---|---|
| Scope | |
| Target scope | Select your application. |
| Condition | |
| Signal type | Activity Log |
| Signal name | Application Insights component daily cap reached or Application Insights component daily cap warning threshold reached |
| Severity | Warning |
| Alert rule name | Daily data limit reached |

View the effect of the daily cap

The following query tracks the data volumes that are subject to the daily cap for a Log Analytics workspace between daily cap resets. In this example, the workspace's reset hour is 14:00. Change DailyCapResetHour to match your workspace's reset hour, which is shown on the Daily Cap configuration page.

```kusto
let DailyCapResetHour=14;
Usage
| where TimeGenerated > ago(32d)
| extend StartTime=datetime_add("hour",-1*DailyCapResetHour,StartTime)
| where StartTime > startofday(ago(31d))
| where IsBillable
| summarize IngestedGbBetweenDailyCapResets=sum(Quantity)/1000. by day=bin(StartTime, 1d) // Quantity in units of MB
| render areachart
```

If you add a where DataType filter to this query, add the Update and UpdateSummary data types to it when the Update Management solution isn't running on the workspace or solution targeting is enabled.
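The following Python sketch reproduces the query's bucketing on in-memory rows, which makes the reset-hour shift easier to follow. The `(start_time, quantity_mb, is_billable)` tuple shape is a hypothetical simplification of Usage table rows. The query's time-range filters are omitted.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def gb_between_resets(usage_rows, reset_hour: int) -> dict:
    """Sum billable ingestion (GB) per daily cap period.

    `usage_rows` is an iterable of (start_time, quantity_mb, is_billable)
    tuples. Each key in the result is the date on which the cap period began.
    """
    totals = defaultdict(float)
    for start_time, quantity_mb, is_billable in usage_rows:
        if not is_billable:  # mirrors: where IsBillable
            continue
        # Mirrors datetime_add("hour", -1*DailyCapResetHour, StartTime): shifting
        # back by the reset hour lines each cap period up with one calendar day.
        shifted = start_time - timedelta(hours=reset_hour)
        # Mirrors bin(StartTime, 1d) and sum(Quantity)/1000. (Quantity is in MB).
        totals[shifted.date()] += quantity_mb / 1000.0
    return dict(sorted(totals.items()))


rows = [
    (datetime(2024, 5, 1, 13, 0), 500.0, True),   # before the 14:00 reset: Apr 30 period
    (datetime(2024, 5, 1, 15, 0), 250.0, True),   # after the reset: May 1 period
    (datetime(2024, 5, 2, 10, 0), 250.0, True),   # still the May 1 period
    (datetime(2024, 5, 2, 11, 0), 900.0, False),  # not billable, ignored
]
print(gb_between_resets(rows, reset_hour=14))
# {datetime.date(2024, 4, 30): 0.5, datetime.date(2024, 5, 1): 0.5}
```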

Next steps

- See Azure Monitor Logs pricing details for details on how charges are calculated for data in a Log Analytics workspace and different configuration options to reduce your charges.

- See Analyze usage in Log Analytics workspace for details on analyzing the data in your workspace to determine the source of any higher-than-expected usage and opportunities to reduce the amount of data collected.