Deploy Azure Machine Learning extension on AKS or Arc Kubernetes cluster

To enable your AKS or Arc Kubernetes cluster to run training jobs or inference workloads, you must first deploy the Azure Machine Learning extension on the cluster. The Azure Machine Learning extension is built on the cluster extension for AKS and the cluster extension for Arc Kubernetes, and you can manage its lifecycle with the Azure CLI k8s-extension extension.

In this article, you learn about:

- Prerequisites

- Limitations

- Review Azure Machine Learning extension config settings

- Azure Machine Learning extension deployment scenarios

- Verify Azure Machine Learning extension deployment

- Review Azure Machine Learning extension components

- Manage Azure Machine Learning extension

Prerequisites

- An AKS cluster running in Azure. If you haven't previously used cluster extensions, you need to register the KubernetesConfiguration service provider.

- Or an Arc Kubernetes cluster that is up and running. Follow the instructions in Connect an existing Kubernetes cluster to Azure Arc.

- If the cluster is an Azure Red Hat OpenShift (ARO) Service cluster or an OpenShift Container Platform (OCP) cluster, you must complete additional prerequisite steps, as documented in the Reference for configuring Kubernetes cluster article.

- For production purposes, the Kubernetes cluster must have a minimum of 4 vCPU cores and 14 GB of memory. For more information on resource details and cluster size recommendations, see Recommended resource planning.

- A cluster running behind an outbound proxy server or firewall needs extra network configuration.

- Install or upgrade Azure CLI to version 2.24.0 or higher.

- Install or upgrade the Azure CLI extension k8s-extension to version 1.2.3 or higher.
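The version and resource provider prerequisites above can be checked from a script. The following is a minimal sketch, assuming a POSIX shell that supports local (such as bash or dash), sort -V for version comparison, and an Azure CLI session already signed in with az login. The function names version_ge and check_azureml_prereqs are hypothetical; the az commands they call are standard Azure CLI commands.

```shell
# version_ge A B (hypothetical helper): succeeds when version A >= version B.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# check_azureml_prereqs (hypothetical helper): verifies the CLI versions listed
# in Prerequisites and registers the KubernetesConfiguration resource provider.
check_azureml_prereqs() {
  local cli_version ext_version
  # Azure CLI core version, for example 2.60.0.
  cli_version=$(az version --query '"azure-cli"' -o tsv) || cli_version=""
  if ! version_ge "$cli_version" 2.24.0; then
    echo "Azure CLI '$cli_version' is older than 2.24.0; upgrade it first." >&2
    return 1
  fi
  # Empty when the k8s-extension CLI extension isn't installed.
  ext_version=$(az extension show --name k8s-extension --query version -o tsv 2>/dev/null) || ext_version=""
  if ! version_ge "$ext_version" 1.2.3; then
    echo "k8s-extension '$ext_version' is missing or older than 1.2.3;" \
      "run 'az extension add --name k8s-extension' or 'az extension update --name k8s-extension'." >&2
    return 1
  fi
  # Register the resource provider behind cluster extensions; safe to repeat.
  az provider register --namespace Microsoft.KubernetesConfiguration
}
```

After sourcing the file, run check_azureml_prereqs; it returns a non-zero status with an explanation on stderr when a requirement isn't met.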

Limitations

- Using a service principal with AKS is not supported by Azure Machine Learning. The AKS cluster must use a managed identity instead. Both system-assigned managed identity and user-assigned managed identity are supported. For more information, see Use a managed identity in Azure Kubernetes Service.

- If your AKS cluster used a service principal and has been converted to use a managed identity, delete and re-create all node pools, rather than updating them in place, before installing the extension.

- Disabling local accounts for AKS isn't supported by Azure Machine Learning. Local accounts are enabled by default when an AKS cluster is deployed.

- If your AKS cluster has authorized IP ranges enabled for API server access, add the Azure Machine Learning control plane IP ranges to the cluster's authorized IP ranges. The Azure Machine Learning control plane is deployed across paired regions, so include the IP ranges for both paired regions. Without access to the API server, the machine learning pods can't be deployed.

- Azure Machine Learning doesn't support attaching an AKS cluster from a different subscription. If your AKS cluster is in a different subscription, you must first connect it to Azure Arc and place the Arc-connected cluster resource in the same subscription as your Azure Machine Learning workspace.

- Azure Machine Learning doesn't guarantee support for all preview stage features in AKS. For example, Microsoft Entra pod identity isn't supported.

- If you've followed the steps in the Azure Machine Learning AKS v1 documentation to create or attach your AKS cluster as an inference cluster, clean up the legacy azureml-fe related resources before you continue to the next step.
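Some of these limitations can be checked before you install the extension. The following is a sketch under assumptions: check_aks_for_azureml is a hypothetical helper, and it relies on the identity.type and disableLocalAccounts fields that az aks show returns for the cluster.

```shell
# check_aks_for_azureml RG NAME (hypothetical helper): fails if the AKS cluster
# uses a service principal or has local accounts disabled.
check_aks_for_azureml() {
  local rg="$1" name="$2" identity local_disabled
  # SystemAssigned or UserAssigned for a managed identity; empty for a service principal.
  identity=$(az aks show --resource-group "$rg" --name "$name" --query identity.type -o tsv)
  case "$identity" in
    SystemAssigned|UserAssigned) ;;
    *) echo "Cluster $name doesn't use a managed identity." >&2; return 1 ;;
  esac
  # Azure Machine Learning requires local accounts to stay enabled.
  local_disabled=$(az aks show --resource-group "$rg" --name "$name" --query disableLocalAccounts -o tsv)
  case "$local_disabled" in
    true|True) echo "Local accounts are disabled on $name." >&2; return 1 ;;
  esac
  echo "ok"
}
```

For the authorized IP range limitation, az aks update --resource-group <your-RG-name> --name <your-AKS-cluster-name> --api-server-authorized-ip-ranges <ranges> sets the list of allowed ranges. This article doesn't list the control plane ranges, so obtain them for both paired regions and include any ranges you already allow.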

Review Azure Machine Learning extension configuration settings

You can use the Azure CLI command az k8s-extension create to deploy the Azure Machine Learning extension. It lets you specify a set of configuration settings in key=value format using the --config or --config-protected parameter. The following tables list the configuration settings available during Azure Machine Learning extension deployment.

| Configuration Setting Key Name | Description | Training | Inference | Training and Inference |
|---|---|---|---|---|
| enableTraining | True or False, default False. Must be set to True for Azure Machine Learning extension deployment with Machine Learning model training and batch scoring support. | ✓ | N/A | ✓ |
| enableInference | True or False, default False. Must be set to True for Azure Machine Learning extension deployment with Machine Learning inference support. | N/A | ✓ | ✓ |
| allowInsecureConnections | True or False, default False. Can be set to True to use inference HTTP endpoints for development or test purposes. | N/A | Optional | Optional |
| inferenceRouterServiceType | loadBalancer, nodePort, or clusterIP. Required if enableInference=True. | N/A | ✓ | ✓ |
| internalLoadBalancerProvider | Currently applies only to Azure Kubernetes Service (AKS) clusters. Set to azure to allow the inference router to use an internal load balancer. | N/A | Optional | Optional |
| sslSecret | The name of the Kubernetes secret in the azureml namespace. The secret stores cert.pem (PEM-encoded TLS/SSL certificate) and key.pem (PEM-encoded TLS/SSL key), which are required for inference HTTPS endpoint support when allowInsecureConnections is set to False. For a sample YAML definition of sslSecret, see Configure sslSecret. Use this setting or the combination of the sslCertPemFile and sslKeyPemFile protected settings. | N/A | Optional | Optional |
| sslCname | The TLS/SSL CNAME used by the inference HTTPS endpoint. Required if allowInsecureConnections=False. | N/A | Optional | Optional |
| inferenceRouterHA | True or False, default True. By default, the Azure Machine Learning extension deploys three inference router replicas for high availability, which requires at least three worker nodes in the cluster. Set to False if your cluster has fewer than three worker nodes; in this case, only one inference router service is deployed. | N/A | Optional | Optional |
| nodeSelector | By default, the deployed Kubernetes resources and your machine learning workloads are randomly deployed to one or more nodes of the cluster, and DaemonSet resources are deployed to ALL nodes. To restrict the extension deployment and your training/inference workloads to specific nodes with labels key1=value1 and key2=value2, use nodeSelector.key1=value1 and nodeSelector.key2=value2, respectively. | Optional | Optional | Optional |
| installNvidiaDevicePlugin | True or False, default False. The NVIDIA Device Plugin is required for ML workloads on NVIDIA GPU hardware. By default, the Azure Machine Learning extension deployment doesn't install the NVIDIA Device Plugin, regardless of whether the Kubernetes cluster has GPU hardware. You can set this setting to True to install it, but make sure to fulfill the Prerequisites. | Optional | Optional | Optional |
| installPromOp | True or False, default True. The Azure Machine Learning extension needs the Prometheus operator to manage Prometheus. Set to False to reuse an existing Prometheus operator. For more information, see reusing the prometheus operator. | Optional | Optional | Optional |
| installVolcano | True or False, default True. The Azure Machine Learning extension needs the Volcano scheduler to schedule jobs. Set to False to reuse an existing Volcano scheduler. For more information, see reusing volcano scheduler. | Optional | N/A | Optional |
| installDcgmExporter | True or False, default False. dcgm-exporter exposes GPU metrics for Azure Machine Learning workloads, which can be monitored in the Azure portal. Set installDcgmExporter to True to install dcgm-exporter. To use your own dcgm-exporter instead, see DCGM exporter. | Optional | Optional | Optional |

| Configuration Protected Setting Key Name | Description | Training | Inference | Training and Inference |
|---|---|---|---|---|
| sslCertPemFile, sslKeyPemFile | Paths to the TLS/SSL certificate and key files (PEM-encoded), required for Azure Machine Learning extension deployment with inference HTTPS endpoint support when allowInsecureConnections is set to False. Passphrase-protected PEM files aren't supported. | N/A | Optional | Optional |
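If you use the sslSecret setting, the secret must exist in the azureml namespace and contain the keys cert.pem and key.pem. The following sketch creates such a secret with kubectl create secret generic; treating this as equivalent to the YAML definition in Configure sslSecret is an assumption, so compare the two before relying on it. The helper name create_azureml_ssl_secret is hypothetical, and the script assumes the azureml namespace already exists and that kubectl points at your cluster. It also rejects keys containing the ENCRYPTED marker that passphrase-protected PEM files carry, because those aren't supported.

```shell
# create_azureml_ssl_secret NAME CERT_FILE KEY_FILE (hypothetical helper).
create_azureml_ssl_secret() {
  local secret_name="$1" cert_file="$2" key_file="$3" f
  for f in "$cert_file" "$key_file"; do
    [ -r "$f" ] || { echo "Cannot read $f" >&2; return 1; }
  done
  # Passphrase-protected PEM keys are marked ENCRYPTED and aren't supported.
  if grep -q "ENCRYPTED" "$key_file"; then
    echo "$key_file is passphrase-protected; export an unencrypted key." >&2
    return 1
  fi
  # The secret exposes the data keys cert.pem and key.pem.
  kubectl create secret generic "$secret_name" \
    --namespace azureml \
    --from-file=cert.pem="$cert_file" \
    --from-file=key.pem="$key_file"
}
```

After the secret exists, pass sslSecret=<secret-name> together with sslCname=<ssl cname> in --config instead of the sslCertPemFile and sslKeyPemFile protected settings.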

As the configuration settings table shows, combinations of different configuration settings allow you to deploy the Azure Machine Learning extension for different ML workload scenarios:

- For training jobs and batch inference workloads, specify enableTraining=True.

- For inference workloads only, specify enableInference=True.

- For all kinds of ML workloads, specify both enableTraining=True and enableInference=True.

If you plan to deploy the Azure Machine Learning extension for real-time inference workloads and want to specify enableInference=True, pay attention to the following configuration settings related to real-time inference:

- The azureml-fe router service is required for real-time inference support, and you need to specify the inferenceRouterServiceType config setting for azureml-fe. azureml-fe can be deployed with one of the following inferenceRouterServiceType values:

  - Type LoadBalancer. Exposes azureml-fe externally using a cloud provider's load balancer. To specify this value, ensure that your cluster supports load balancer provisioning. Note that most on-premises Kubernetes clusters might not support an external load balancer.

  - Type NodePort. Exposes azureml-fe on each node's IP at a static port. You can reach azureml-fe from outside the cluster by requesting <NodeIP>:<NodePort>. Using NodePort also allows you to set up your own load balancing solution and TLS/SSL termination for azureml-fe.

  - Type ClusterIP. Exposes azureml-fe on a cluster-internal IP, which makes azureml-fe reachable only from within the cluster. For azureml-fe to serve inference requests coming from outside the cluster, you need to set up your own load balancing solution and TLS/SSL termination for azureml-fe.

- To ensure high availability of the azureml-fe routing service, the Azure Machine Learning extension deployment by default creates three replicas of azureml-fe for clusters with three or more nodes. If your cluster has fewer than three nodes, set inferenceRouterHA=False.

- You should also consider using HTTPS to restrict access to model endpoints and secure the data that clients submit. For this purpose, specify either the sslSecret config setting or the combination of the sslKeyPemFile and sslCertPemFile config-protected settings.

- By default, the Azure Machine Learning extension deployment expects config settings for HTTPS support. For development or testing purposes, HTTP support is provided through the config setting allowInsecureConnections=True.
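The rules above (which enable flags to set per workload, the three-node threshold for inferenceRouterHA, and HTTPS versus allowInsecureConnections) can be captured in a small helper that prints a --config value list. This is a hypothetical sketch, not part of the Azure CLI; it covers only the settings discussed in this section, and when you choose HTTPS you still need to supply sslSecret or the sslCertPemFile/sslKeyPemFile protected settings yourself.

```shell
# azureml_config_settings WORKLOAD SERVICE_TYPE NODES CNAME (hypothetical helper)
#   WORKLOAD:     training | inference | both
#   SERVICE_TYPE: LoadBalancer | NodePort | ClusterIP (ignored for training)
#   NODES:        worker node count
#   CNAME:        TLS/SSL CNAME; empty means HTTP only (development or testing)
azureml_config_settings() {
  local workload="$1" service_type="$2" nodes="$3" cname="$4" settings=""
  case "$workload" in
    training)  echo "enableTraining=True"; return 0 ;;
    inference) settings="enableInference=True" ;;
    both)      settings="enableTraining=True enableInference=True" ;;
    *) echo "unknown workload: $workload" >&2; return 1 ;;
  esac
  case "$service_type" in
    LoadBalancer|loadBalancer|NodePort|nodePort|ClusterIP|clusterIP) ;;
    *) echo "unsupported inferenceRouterServiceType: $service_type" >&2; return 1 ;;
  esac
  settings="$settings inferenceRouterServiceType=$service_type"
  # Three azureml-fe replicas need at least three nodes.
  if [ "$nodes" -lt 3 ]; then
    settings="$settings inferenceRouterHA=False"
  fi
  if [ -n "$cname" ]; then
    settings="$settings sslCname=$cname"
  else
    settings="$settings allowInsecureConnections=True"
  fi
  echo "$settings"
}

# Example (the unquoted substitution is split into separate key=value words):
#   nodes=$(kubectl get nodes --no-headers | wc -l)
#   az k8s-extension create ... --config $(azureml_config_settings both LoadBalancer "$nodes" "")
```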

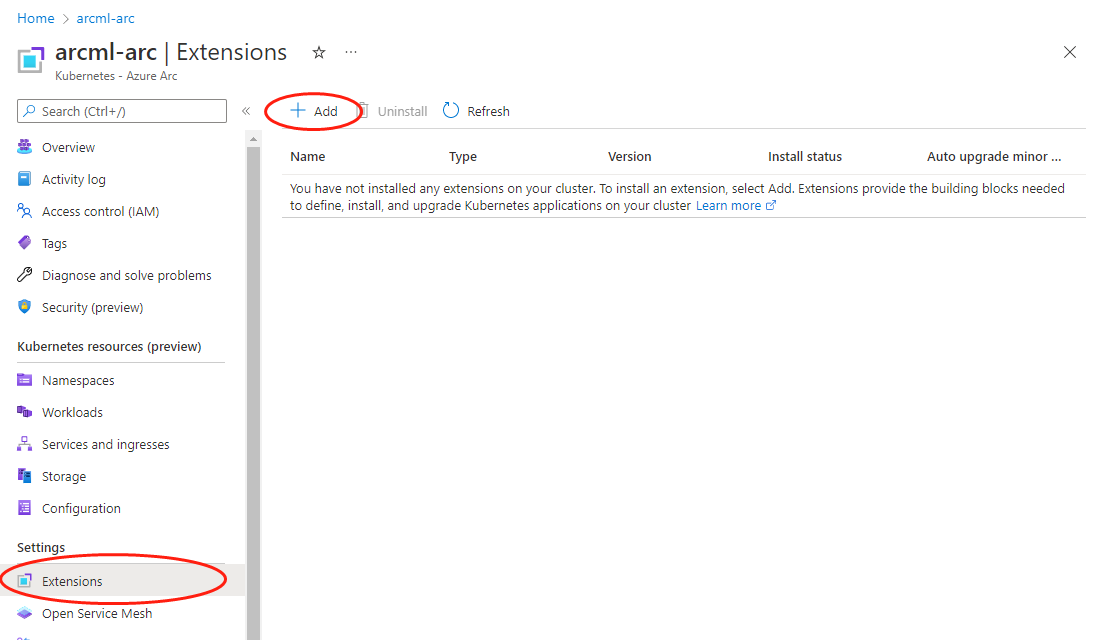

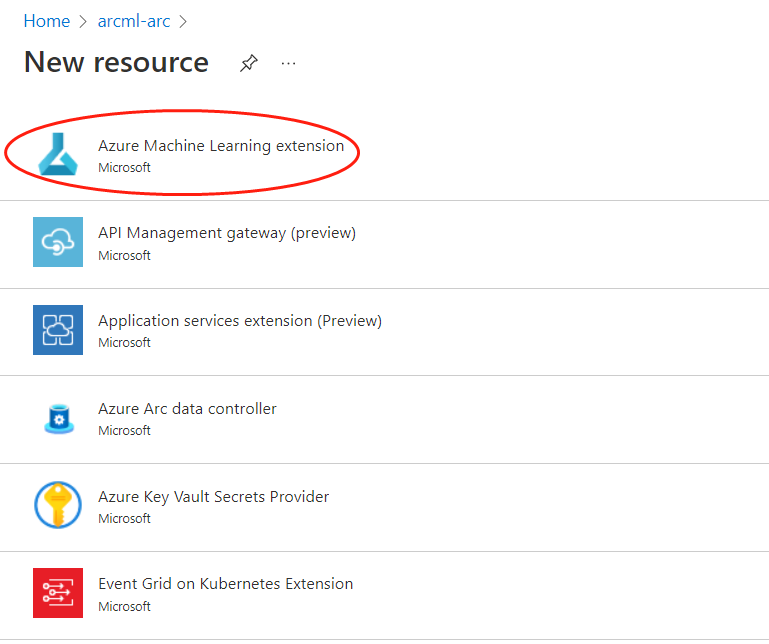

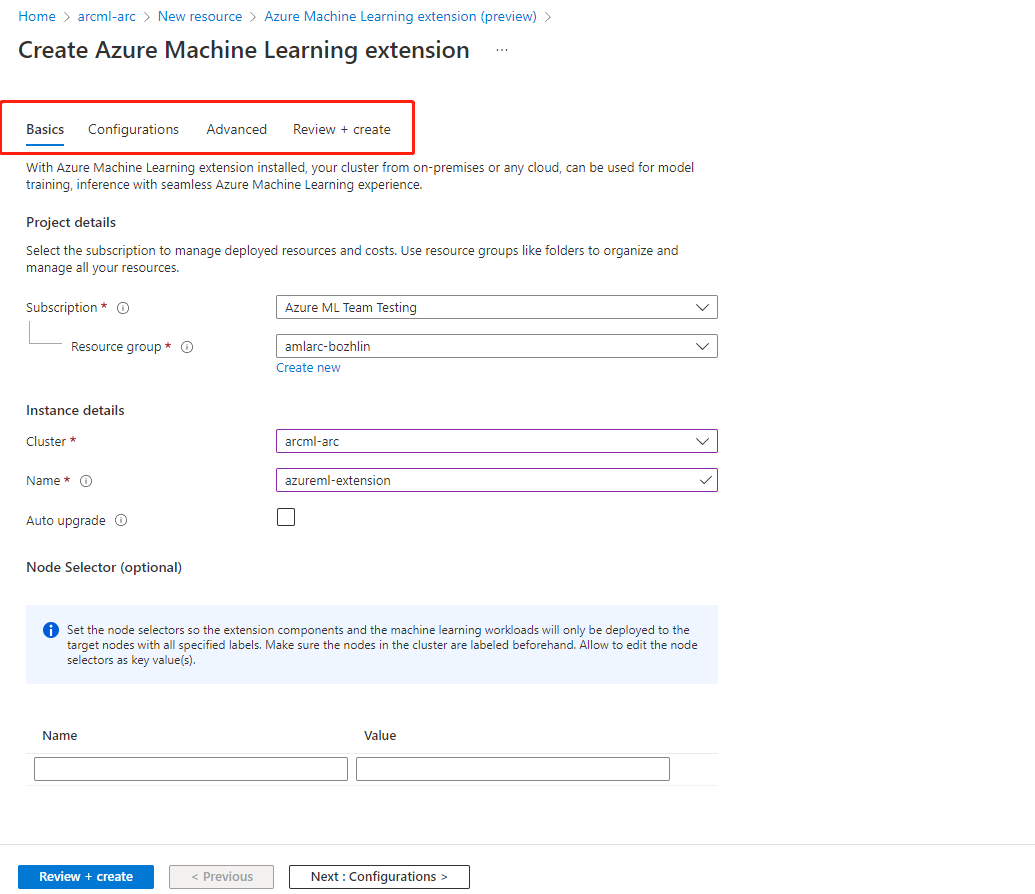

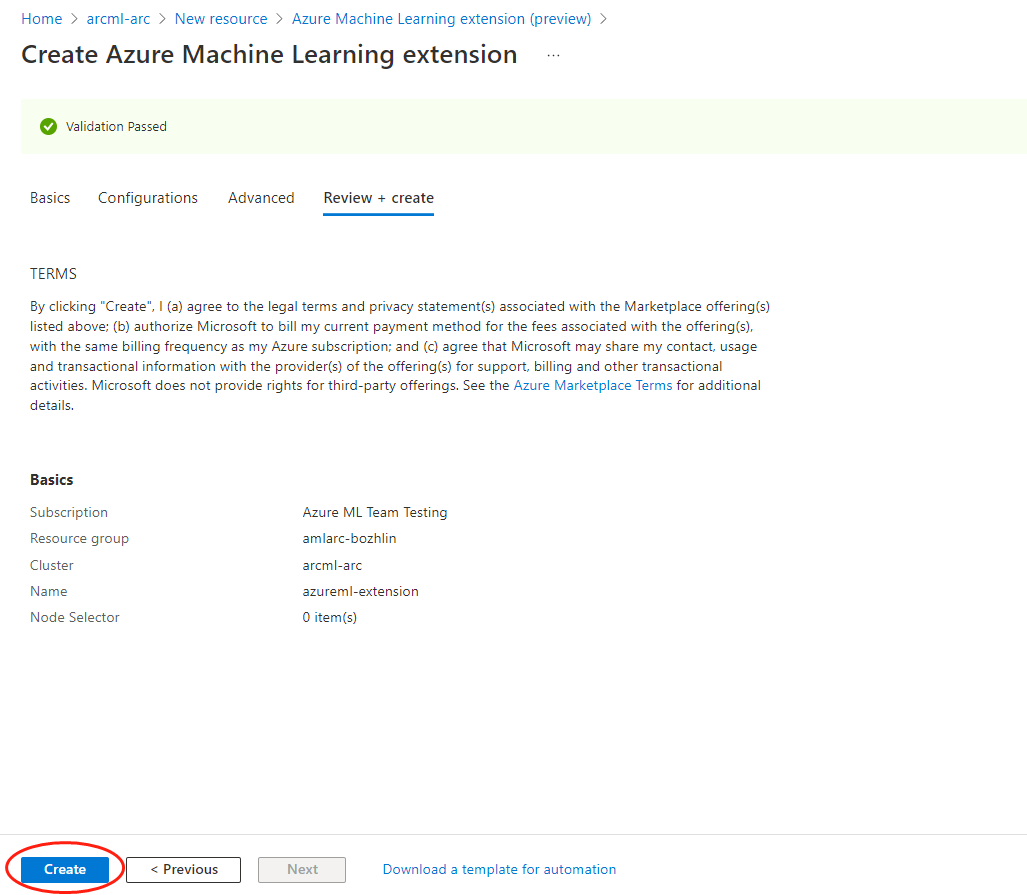

Azure Machine Learning extension deployment - CLI examples and Azure portal

To deploy the Azure Machine Learning extension with the CLI, use the az k8s-extension create command and pass in values for the mandatory parameters.

The following four typical extension deployment scenarios are listed for reference. To deploy the extension for production use, carefully read the complete list of configuration settings.

Use an AKS cluster in Azure for a quick proof of concept to run all kinds of ML workloads, that is, to run training jobs or to deploy models as online/batch endpoints

For Azure Machine Learning extension deployment on an AKS cluster, make sure to specify managedClusters as the value of the --cluster-type parameter. Run the following Azure CLI command to deploy the Azure Machine Learning extension:

az k8s-extension create --name <extension-name> --extension-type Microsoft.AzureML.Kubernetes --config enableTraining=True enableInference=True inferenceRouterServiceType=LoadBalancer allowInsecureConnections=True inferenceRouterHA=False --cluster-type managedClusters --cluster-name <your-AKS-cluster-name> --resource-group <your-RG-name> --scope cluster

Use an Arc Kubernetes cluster outside of Azure for a quick proof of concept, to run training jobs only

For Azure Machine Learning extension deployment on an Arc Kubernetes cluster, specify connectedClusters as the value of the --cluster-type parameter. Run the following Azure CLI command to deploy the Azure Machine Learning extension:

az k8s-extension create --name <extension-name> --extension-type Microsoft.AzureML.Kubernetes --config enableTraining=True --cluster-type connectedClusters --cluster-name <your-connected-cluster-name> --resource-group <your-RG-name> --scope cluster

Enable an AKS cluster in Azure for production training and inference workloads

For Azure Machine Learning extension deployment on AKS, make sure to specify managedClusters as the value of the --cluster-type parameter. The following command assumes that your cluster has more than three nodes and that you use an Azure public load balancer and HTTPS for inference workload support. Run the following Azure CLI command to deploy the Azure Machine Learning extension:

az k8s-extension create --name <extension-name> --extension-type Microsoft.AzureML.Kubernetes --config enableTraining=True enableInference=True inferenceRouterServiceType=LoadBalancer sslCname=<ssl cname> --config-protected sslCertPemFile=<file-path-to-cert-PEM> sslKeyPemFile=<file-path-to-cert-KEY> --cluster-type managedClusters --cluster-name <your-AKS-cluster-name> --resource-group <your-RG-name> --scope cluster

Enable an Arc Kubernetes cluster anywhere for production training and inference workloads using NVIDIA GPUs

For Azure Machine Learning extension deployment on an Arc Kubernetes cluster, make sure to specify connectedClusters as the value of the --cluster-type parameter. The following command assumes that your cluster has more than three nodes and that you use a NodePort service type and HTTPS for inference workload support. Run the following Azure CLI command to deploy the Azure Machine Learning extension:

az k8s-extension create --name <extension-name> --extension-type Microsoft.AzureML.Kubernetes --config enableTraining=True enableInference=True inferenceRouterServiceType=NodePort sslCname=<ssl cname> installNvidiaDevicePlugin=True installDcgmExporter=True --config-protected sslCertPemFile=<file-path-to-cert-PEM> sslKeyPemFile=<file-path-to-cert-KEY> --cluster-type connectedClusters --cluster-name <your-connected-cluster-name> --resource-group <your-RG-name> --scope cluster

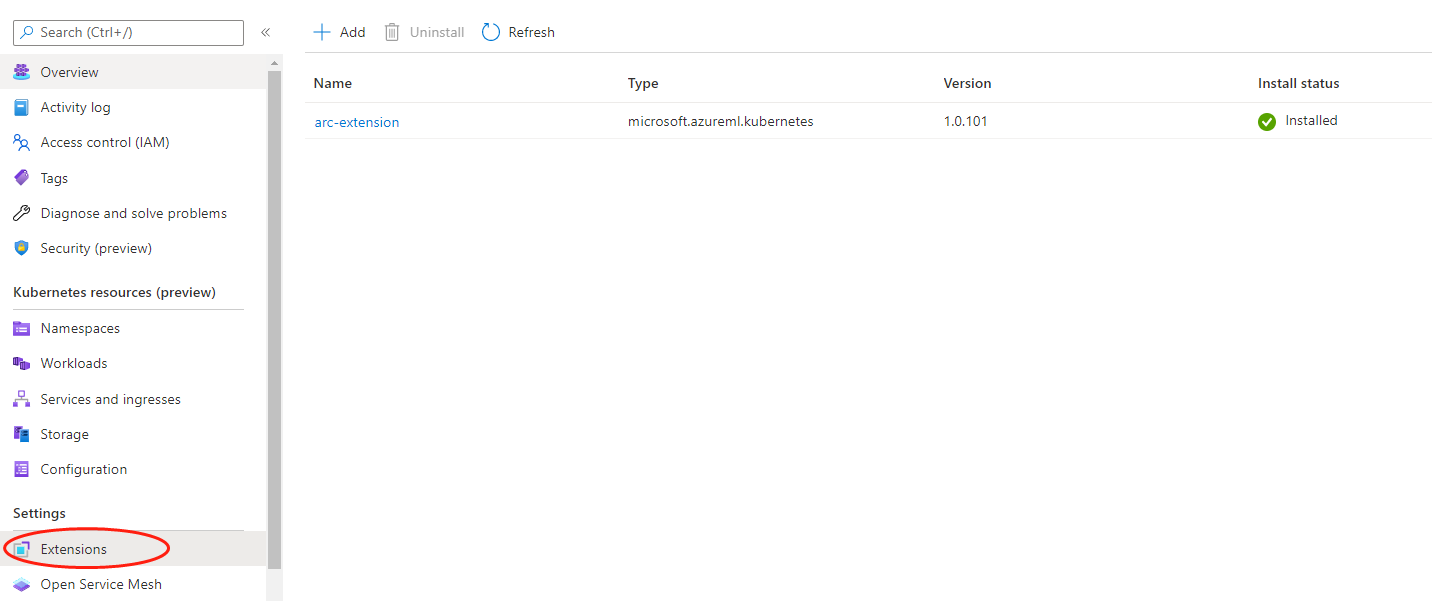

Verify Azure Machine Learning extension deployment

Run the following CLI command to check the Azure Machine Learning extension details:

az k8s-extension show --name <extension-name> --cluster-type connectedClusters --cluster-name <your-connected-cluster-name> --resource-group <resource-group>

In the response, look for "name" and "provisioningState": "Succeeded". Note that it might show "provisioningState": "Pending" for the first few minutes. For an AKS cluster, use --cluster-type managedClusters and your AKS cluster name instead.

If the provisioningState shows Succeeded, run the following command on your machine, with the kubeconfig file pointing to your cluster, to check that all pods in the azureml namespace are in the Running state:

kubectl get pods -n azureml
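The two verification steps can be combined into a script that polls provisioningState and then lists any pods that aren't Running. This is a minimal sketch, assuming a POSIX shell and awk; wait_for_azureml_extension and azureml_pods_not_running are hypothetical helper names, and the 20-second poll interval and 30-attempt default are arbitrary choices.

```shell
# wait_for_azureml_extension NAME CLUSTER_TYPE CLUSTER RG [ATTEMPTS] (hypothetical helper)
# CLUSTER_TYPE is managedClusters for AKS or connectedClusters for Arc Kubernetes.
wait_for_azureml_extension() {
  local attempts="${5:-30}" state="" i=0
  while [ "$i" -lt "$attempts" ]; do
    state=$(az k8s-extension show --name "$1" --cluster-type "$2" \
      --cluster-name "$3" --resource-group "$4" --query provisioningState -o tsv)
    case "$state" in
      Succeeded) echo "$state"; return 0 ;;
      Failed) echo "$state" >&2; return 1 ;;
    esac
    i=$((i + 1))
    sleep 20
  done
  echo "Timed out; last state: $state" >&2
  return 1
}

# Reads 'kubectl get pods -n azureml --no-headers' output on stdin, prints the
# name and STATUS of every pod that isn't Running, and fails if any were found.
azureml_pods_not_running() {
  awk '$3 != "Running" { print $1, $3; bad = 1 } END { exit bad }'
}

# Example:
#   wait_for_azureml_extension <extension-name> managedClusters <your-AKS-cluster-name> <your-RG-name> &&
#     kubectl get pods -n azureml --no-headers | azureml_pods_not_running
```

Pods that belong to finished jobs can legitimately report Completed, so review the reported pods rather than treating every entry as a failure.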

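Review Azure Machine Learning extension components

After the Azure Machine Learning extension deployment completes, you can use kubectl get deployments -n azureml to see the list of deployments created in the cluster; the daemonsets and statefulsets are listed with kubectl get daemonsets -n azureml and kubectl get statefulsets -n azureml. Depending on the configuration settings you specified, the cluster usually contains a subset of the following resources.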

| Resource name | Resource type | Training | Inference | Training and Inference | Description | Communication with cloud |
|---|---|---|---|---|---|---|
| relayserver | Kubernetes deployment | ✓ | ✓ | ✓ | Relay server is only created for Arc Kubernetes cluster, and not in AKS cluster. Relay server works with Azure Relay to communicate with the cloud services. | Receive the request of job creation, model deployment from cloud service; sync the job status with cloud service. |
| gateway | Kubernetes deployment | ✓ | ✓ | ✓ | The gateway is used to communicate and send data back and forth. | Send nodes and cluster resource information to cloud services. |
| aml-operator | Kubernetes deployment | ✓ | N/A | ✓ | Manage the lifecycle of training jobs. | Token exchange with the cloud token service for authentication and authorization of Azure Container Registry. |
| metrics-controller-manager | Kubernetes deployment | ✓ | ✓ | ✓ | Manage the configuration for Prometheus. | N/A |
| {EXTENSION-NAME}-kube-state-metrics | Kubernetes deployment | ✓ | ✓ | ✓ | Export the cluster-related metrics to Prometheus. | N/A |
| {EXTENSION-NAME}-prometheus-operator | Kubernetes deployment | Optional | Optional | Optional | Provide Kubernetes native deployment and management of Prometheus and related monitoring components. | N/A |
| amlarc-identity-controller | Kubernetes deployment | N/A | ✓ | ✓ | Request and renew Azure Blob/Azure Container Registry token through managed identity. | Token exchange with the cloud token service for authentication and authorization of Azure Container Registry and Azure Blob used by inference/model deployment. |
| amlarc-identity-proxy | Kubernetes deployment | N/A | ✓ | ✓ | Request and renew Azure Blob/Azure Container Registry token through managed identity. | Token exchange with the cloud token service for authentication and authorization of Azure Container Registry and Azure Blob used by inference/model deployment. |
| azureml-fe-v2 | Kubernetes deployment | N/A | ✓ | ✓ | The front-end component that routes incoming inference requests to deployed services. | Send service logs to Azure Blob. |
| inference-operator-controller-manager | Kubernetes deployment | N/A | ✓ | ✓ | Manage the lifecycle of inference endpoints. | N/A |
| volcano-admission | Kubernetes deployment | Optional | N/A | Optional | Volcano admission webhook. | N/A |
| volcano-controllers | Kubernetes deployment | Optional | N/A | Optional | Manage the lifecycle of Azure Machine Learning training job pods. | N/A |
| volcano-scheduler | Kubernetes deployment | Optional | N/A | Optional | Used to perform in-cluster job scheduling. | N/A |
| fluent-bit | Kubernetes daemonset | ✓ | ✓ | ✓ | Gather the components' system log. | Upload the components' system log to cloud. |
| {EXTENSION-NAME}-dcgm-exporter | Kubernetes daemonset | Optional | Optional | Optional | dcgm-exporter exposes GPU metrics for Prometheus. | N/A |
| nvidia-device-plugin-daemonset | Kubernetes daemonset | Optional | Optional | Optional | nvidia-device-plugin-daemonset exposes GPUs on each node of your cluster. | N/A |
| prometheus-prom-prometheus | Kubernetes statefulset | ✓ | ✓ | ✓ | Gather and send job metrics to cloud. | Send job metrics like CPU/GPU/memory utilization to cloud. |

Important

- The Azure Relay resource is in the same resource group as the Arc cluster resource. It's used to communicate with the Kubernetes cluster, and modifying it breaks attached compute targets.

- By default, the Kubernetes deployment resources are randomly deployed to one or more nodes of the cluster, and daemonset resources are deployed to ALL nodes. To restrict the extension deployment to specific nodes, use the nodeSelector configuration setting described in the configuration settings table.
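To use nodeSelector, the target nodes must carry the labels you reference. The following sketch labels nodes with kubectl label nodes and turns the same key=value pairs into nodeSelector.key=value settings for --config. The helper names label_azureml_nodes and nodeselector_settings, as well as the example labels and node names, are hypothetical.

```shell
# nodeselector_settings key1=value1 key2=value2
#   prints: nodeSelector.key1=value1 nodeSelector.key2=value2
nodeselector_settings() {
  local out="" label
  for label in "$@"; do
    out="${out:+$out }nodeSelector.$label"
  done
  echo "$out"
}

# label_azureml_nodes "key1=value1 key2=value2" node-a node-b
label_azureml_nodes() {
  local labels="$1" node
  shift
  for node in "$@"; do
    # $labels is intentionally unquoted so each key=value becomes its own argument.
    kubectl label nodes "$node" $labels
  done
}

# Example:
#   label_azureml_nodes "key1=value1" node-a node-b
#   az k8s-extension create ... --config enableTraining=True $(nodeselector_settings key1=value1) ...
```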

Note

- {EXTENSION-NAME} is the extension name specified with the az k8s-extension create --name CLI command.
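To compare what's running with the component table, you can check that the deployments you expect for your configuration exist. In this minimal sketch, missing_azureml_deployments is a hypothetical helper that reads deployment names with kubectl get deployments -n azureml -o jsonpath and prints each expected name that's missing. Which names to expect depends on your settings and cluster type; for example, relayserver exists only on Arc Kubernetes clusters.

```shell
# missing_azureml_deployments NAME... (hypothetical helper): prints each expected
# deployment that isn't present in the azureml namespace; fails if any is missing.
missing_azureml_deployments() {
  local actual name missing=0
  # Space-padded, space-separated deployment names for whole-word matching.
  actual=" $(kubectl get deployments -n azureml -o jsonpath='{.items[*].metadata.name}') "
  for name in "$@"; do
    case "$actual" in
      *" $name "*) ;;
      *) echo "$name"; missing=1 ;;
    esac
  done
  return "$missing"
}

# Example for an AKS cluster with training and inference enabled:
#   missing_azureml_deployments gateway aml-operator azureml-fe-v2 \
#     inference-operator-controller-manager "<extension-name>-kube-state-metrics"
```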

Manage Azure Machine Learning extension

To update, list, show, or delete an Azure Machine Learning extension, see the following articles:

- For an AKS cluster that isn't connected to Azure Arc, refer to Deploy and manage cluster extensions.

- For Azure Arc-enabled Kubernetes, refer to Deploy and manage Azure Arc-enabled Kubernetes cluster extensions.
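For scripting, these management operations map to az k8s-extension list, update, and delete. The wrappers below are hypothetical conveniences, sketched under the assumption that you pass managedClusters for AKS or connectedClusters for Arc Kubernetes as the first argument. Whether a particular setting can be changed with update after installation isn't covered in this article, so check the linked articles first.

```shell
# azureml_ext_list CLUSTER_TYPE CLUSTER RG (hypothetical wrapper)
azureml_ext_list() {
  az k8s-extension list --cluster-type "$1" --cluster-name "$2" --resource-group "$3"
}

# azureml_ext_update CLUSTER_TYPE CLUSTER RG EXTENSION key=value... (hypothetical wrapper)
azureml_ext_update() {
  local ctype="$1" cluster="$2" rg="$3" name="$4"
  shift 4
  az k8s-extension update --name "$name" --cluster-type "$ctype" \
    --cluster-name "$cluster" --resource-group "$rg" --config "$@"
}

# azureml_ext_delete CLUSTER_TYPE CLUSTER RG EXTENSION (hypothetical wrapper);
# --yes skips the confirmation prompt.
azureml_ext_delete() {
  az k8s-extension delete --name "$4" --cluster-type "$1" \
    --cluster-name "$2" --resource-group "$3" --yes
}
```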