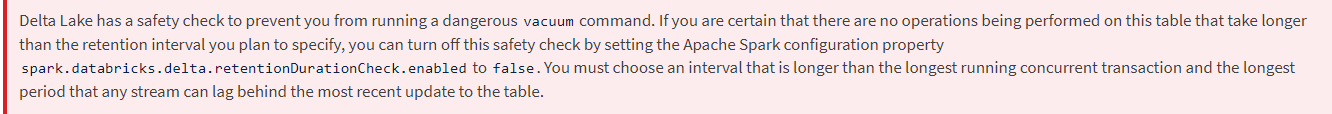

Delta Lake has a safety check to prevent you from running a dangerous VACUUM command. If you are certain that there are no operations being performed on this table that take longer than the retention interval you plan to specify, you can turn off this safety check by setting the Spark configuration property `spark.databricks.delta.retentionDurationCheck.enabled` to `false`. The default retention threshold for VACUUM is 7 days (168 hours).
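The safety check described above boils down to comparing the requested retention with a threshold. The sketch below is a plain-Python model of that rule, not Delta Lake code: `check_vacuum_retention` is a hypothetical helper, `check_enabled` stands in for `spark.databricks.delta.retentionDurationCheck.enabled`, and `threshold` stands in for the table's retention period (168 hours by default).

```python
from datetime import timedelta
from typing import Optional

# Default VACUUM retention threshold quoted above: 7 days (168 hours).
DEFAULT_RETENTION = timedelta(hours=168)


def check_vacuum_retention(retention_hours: Optional[float],
                           check_enabled: bool = True,
                           threshold: timedelta = DEFAULT_RETENTION) -> timedelta:
    """Simplified model of the VACUUM retention safety check.

    Hypothetical helper for illustration only; it is not Delta Lake code.
    `check_enabled` plays the role of
    spark.databricks.delta.retentionDurationCheck.enabled.
    """
    # No explicit retention (like calling vacuum() with no argument)
    # falls back to the default threshold.
    if retention_hours is None:
        return threshold
    retention = timedelta(hours=retention_hours)
    # With the check on, any retention shorter than the threshold is rejected.
    if check_enabled and retention < threshold:
        raise ValueError(
            f"retention of {retention_hours} hours is below the "
            f"{threshold.total_seconds() / 3600:.0f}-hour safety threshold"
        )
    return retention
```

In this model, `check_vacuum_retention(10)` raises `ValueError`, while `check_vacuum_retention(10, check_enabled=False)` returns `timedelta(hours=10)`, which mirrors why the check has to be disabled before a 10-hour or 0-hour retention is accepted.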

Synapse Analytics - Delta Lake - Vacuum is not working (Spark 3.0)

Hi all,

I tried to use VACUUM on Synapse Analytics, but it is not working for me: the files are not deleted. I am using a Spark pool (Spark 3.0). I have tried this:

```python
from delta.tables import *

spark.sql("SET spark.databricks.delta.retentionDurationCheck.enabled = false")

deltaTable = DeltaTable.forPath(spark, "abfss://bronze@xxxxxxxxxxxxx.dfs.core.windows.net/delta/atxx.db/peoplexxxx/")

deltaTable.vacuum(retentionHours=10)
```

I also tried this:

```python
deltaTable.vacuum()
```

I still have files from the 6th of July.

Thanks for your help.

Kind regards,

Anaid



Heimo Hiidenkari 1 Reputation point

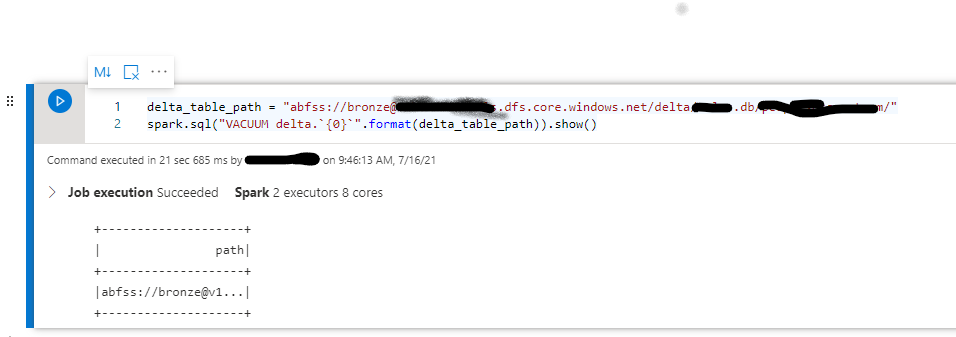

2021-08-13T07:17:06.113+00:00 I got the compressing and vacuuming to work in a Synapse pyspark notebook with this code. Hope this helps!

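```python
from pyspark.sql.functions import *
from pyspark.sql.types import *

numOfFiles = 1

deltaPath = "abfss://******@yourstorageaccountname.dfs.core.windows.net/publish/data_table_name"

# Compact the table into numOfFiles files. dataChange=false marks the
# rewrite as a rearrangement of existing data rather than new data.
(spark.read
    .format("delta")
    .load(deltaPath)
    .repartition(numOfFiles)
    .write
    .option("dataChange", "false")
    .format("delta")
    .mode("overwrite")
    .save(deltaPath))

# Disable the retention safety check so that a 0-hour retention is accepted.
spark.sql("SET spark.databricks.delta.retentionDurationCheck.enabled = false")

# Remove files no longer referenced by the table. This assumes the table is
# registered in the metastore as publish.data_table_name at deltaPath.
spark.sql("VACUUM `publish`.`data_table_name` RETAIN 0 HOURS").show()
```

Heimo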
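Compaction followed by `RETAIN 0 HOURS` works because VACUUM only deletes data files that are no longer part of the latest table version and are older than the retention threshold; files that the current version still references are never deleted, whatever the retention. The sketch below models that rule in plain Python, assuming the retention is measured from when a file was removed from the transaction log. `vacuum_candidates` and its file dictionary are hypothetical, and the model ignores details such as files Delta never tracked.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Optional, Tuple

# path -> (last_modified, removed_at); removed_at is None while the file is
# still part of the latest table version.
FileInfo = Tuple[datetime, Optional[datetime]]


def vacuum_candidates(files: Dict[str, FileInfo], now: datetime,
                      retention_hours: float) -> List[str]:
    """Return the files a simplified VACUUM model would delete.

    Hypothetical helper for illustration only, not Delta Lake code. A file
    is eligible only if it has been removed from the latest table version
    and both its removal and its last modification are older than the
    retention window.
    """
    cutoff = now - timedelta(hours=retention_hours)
    return sorted(
        path
        for path, (modified, removed_at) in files.items()
        if removed_at is not None and removed_at < cutoff and modified < cutoff
    )
```

For example, with two files written on 6 July 2021 that a compaction at 07:00 on 13 August replaced with one new file, calling the function at 07:05 with `retention_hours=168` returns an empty list (the removal is only five minutes old), while `retention_hours=0` returns both old files. Under this model, files that are still part of the current version, which may include the 6 July files in the question, stay on disk regardless of the retention setting.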
