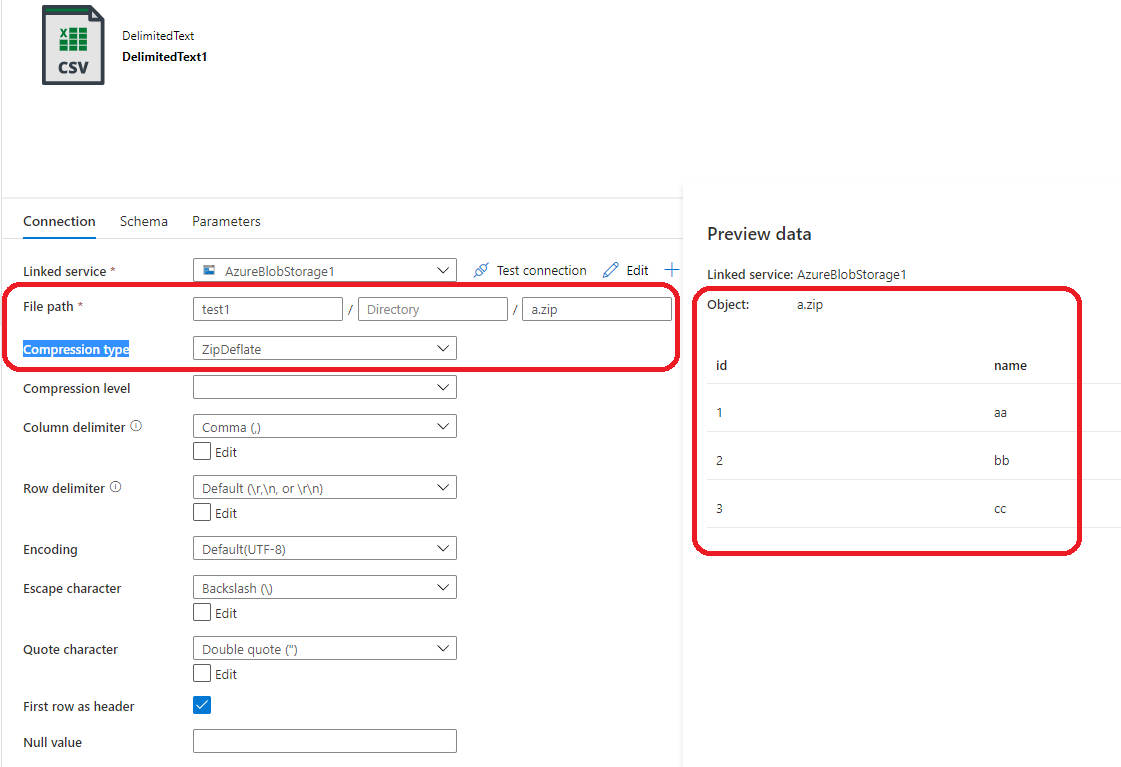

Data Factory can read files and data directly from inside a .zip file. Set the Compression type for the .zip file on the dataset, and there is no need to unzip its contents into a separate folder.
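For illustration, here is a rough sketch of what such a dataset definition might look like, built as a Python dict and printed as JSON. It assumes a CSV file zipped in Blob Storage; the dataset name, linked service name, container and file name are all made up, and the property names (`compressionCodec`, `ZipDeflate`, `AzureBlobStorageLocation`) are what I recall from the DelimitedText dataset schema, so check them against the JSON that the Data Factory UI generates for you.

```python
import json


def zip_csv_dataset(name, linked_service, container, file_name):
    """Build a DelimitedText dataset definition for a CSV stored inside a .zip."""
    return {
        "name": name,
        "properties": {
            "type": "DelimitedText",
            "linkedServiceName": {
                "referenceName": linked_service,
                "type": "LinkedServiceReference",
            },
            "typeProperties": {
                "location": {
                    "type": "AzureBlobStorageLocation",
                    "container": container,
                    "fileName": file_name,
                },
                "columnDelimiter": ",",
                "firstRowAsHeader": True,
                # This is the "Compression type" setting from the UI.
                "compressionCodec": "ZipDeflate",
            },
        },
    }


# All four values below are hypothetical placeholders.
dataset = zip_csv_dataset(
    name="ZippedCsvDataset",
    linked_service="MyBlobStorageLinkedService",
    container="input",
    file_name="data.zip",
)
print(json.dumps(dataset, indent=2))
```

The printed JSON can be compared with the dataset's code view in the Data Factory UI.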

However, you may need to unzip the files first if you want to transform their contents later, as explained here.

You can use Azure Data Factory to copy and transform the data before writing the result to Azure SQL, for example an Azure SQL Database serverless instance with auto-pause enabled so that it pauses when there is no activity. In the Mapping section of the Copy activity, remove the columns you don't want in the Azure SQL sink (the destination) by clicking the trash can next to each of them. Mapping data flows can help you create and update columns, as explained here.
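Removing columns in the Mapping tab ends up as an explicit column mapping on the Copy activity: only the columns you keep are listed. The sketch below builds that mapping in Python to show the idea. The column names are invented, and the `TabularTranslator` / `mappings` structure is what I remember of the Copy activity schema-mapping JSON, so treat it as an assumption and compare it with what the UI produces.

```python
import json


def build_translator(kept_columns):
    """Build a Copy activity translator that maps only the columns to keep.

    kept_columns maps source column name -> sink (Azure SQL) column name.
    Any source column left out is simply not copied to the sink.
    """
    return {
        "type": "TabularTranslator",
        "mappings": [
            {"source": {"name": src}, "sink": {"name": dst}}
            for src, dst in kept_columns.items()
        ],
    }


# Hypothetical columns: the source file also has other columns that are
# dropped just by not listing them here.
translator = build_translator({"Id": "CustomerId", "Name": "CustomerName"})
print(json.dumps(translator, indent=2))
```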

All the services involved are inexpensive: Azure Storage Account, Azure Data Factory and Azure SQL Database serverless.