TLDR;

My expectations of how the mapping behaves is somewhat different to what I’ve found (quite willing to be educated though 😊).

I had the following expectations when it came to mapping:

1. No need to map every source and sink property – only the one’s that you want to come through – the rest would be ignored

2. No dangerous inference for un/badly mapped property(s) – they’re just ignored.

3. No dependence on sink table column ordinality for the source property(s) to be able to find their way to their appropriate target columns.

My experience so far, suggests to me that the use of the term “mapping” is a bit misleading, due to the very exacting conditions (unless I was doing something wrong :D) required to get data to come through correctly. What is even more problematic, is that data can still come through, but be potentially mislocated, which only may become apparent after intense inspection. The observations and findings above, make me think that column ordinality is at the root of successful mapping.

Question:

Are my expectations above reasonable Or have I misunderstood a fundamental?

Is there perhaps an alternative implementation pattern to get the functional behaviour I seek?

Have I been doing something wrong? (see the following Details)

Thanks for reading, and taking the time to respond.

My Best,

Patrick Fraioli

Details

Whilst getting to grips with this aspect of ADF-related technology, there have been a few problem scenarios that have arisen, in which the mapping of source field/property(s) has affected their successful arrival in the designated data sink (usually a Kusto table).

Scenario

1. Reading some RESTFUL API output, coming as an array of JSON object(s).

2. Each object is targeted for Kusto-based Sink table.

3. Using ADF Copy Activity mapping to try and get this (both hard-coded and dynamic Tabular Translator mappings)

4. The CollectionReference is set correctly as expected data is coming through to the sink table

Functional Perspective

Mapping Conditions

It seems that for a set of properties to successfully make it to their designated target, specific conditions are required, more so than the notion of field-mapping might suggest.

Success Condition 1 – Mappings exist for each and every source and target property

If we have a mapping for all the source and target properties, then the data tends to land in its target Sink table correctly

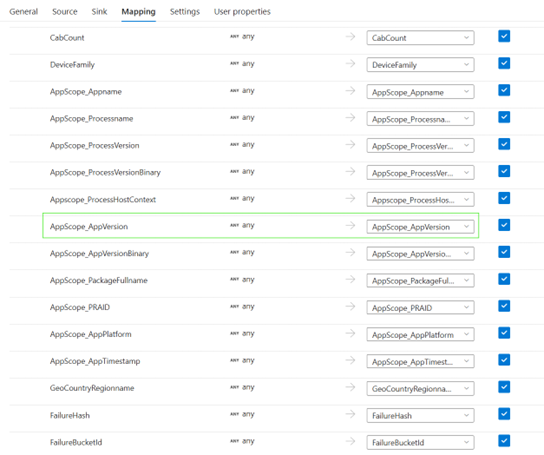

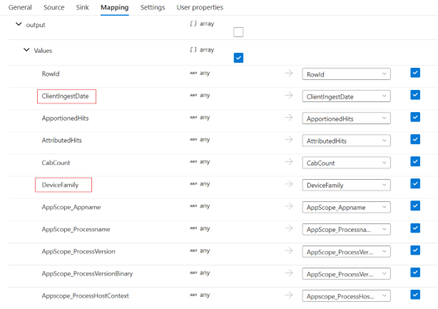

Mapping:

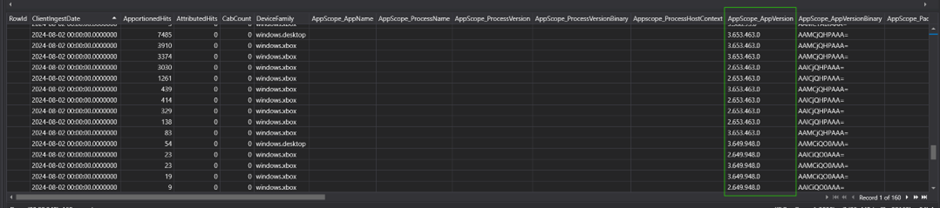

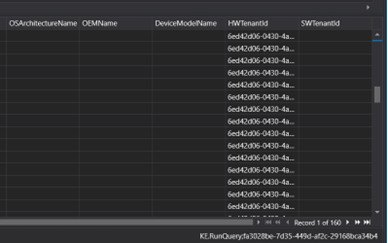

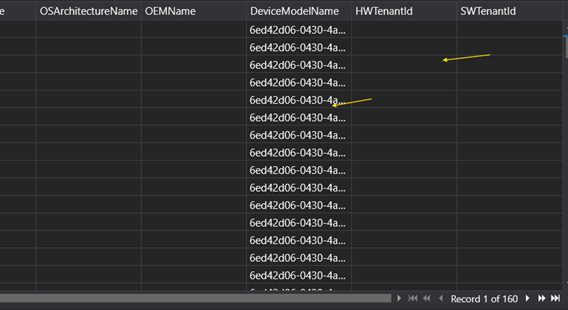

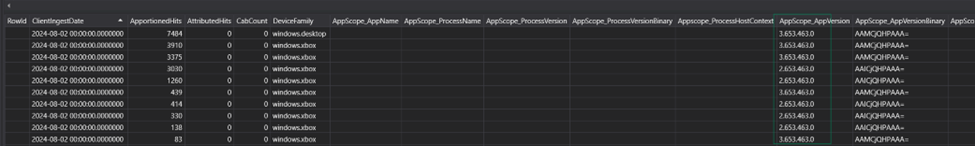

Sink Result:

Sink Result last column:

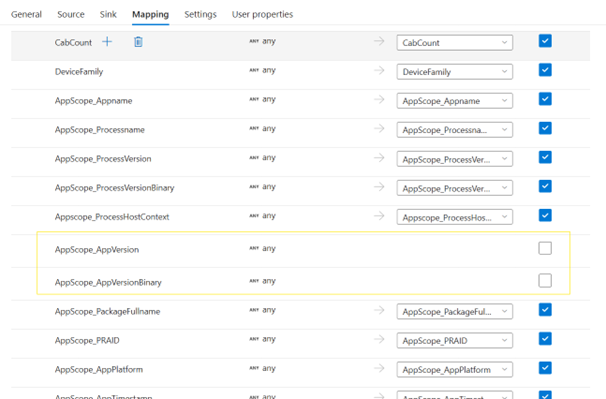

Failure Condition 1 – Removal of individual source->sink mapping definitions

If, however, we were to remove one of the mapping conditions for an expected not-null field, then we start to get column misalignment, if the surrounding columns are null-valuated.

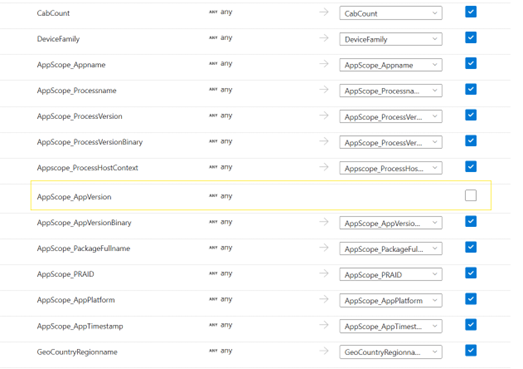

Mapping:

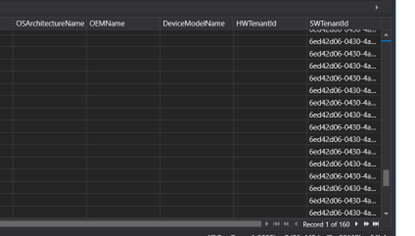

Sink Result:

Sink Result last column:

The data from the outlined column has now skipped to the previous unmapped column (presumably because the datatypes are the same).

We can exaggerate this example even further by not mapping two columns.

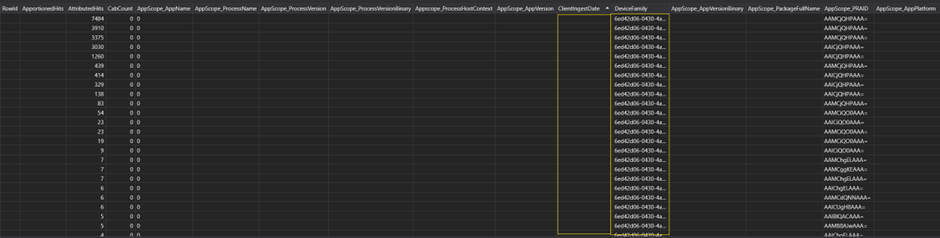

Mapping:

Sink Result last column:

Final column (SWTenantId) has now “skipped” two places to the left.

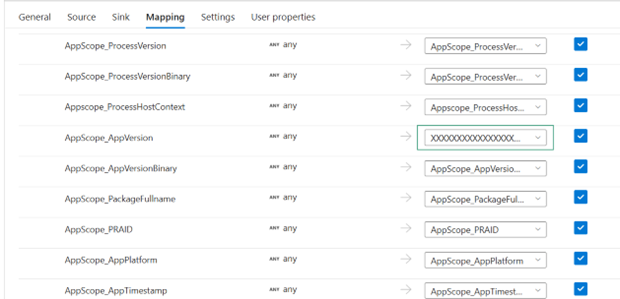

Failure Condition 2 – Mapping to a non-existing Column

Doing something like the following would suggest that the data for that particular source property will never land in its appropriate place in its target sink.

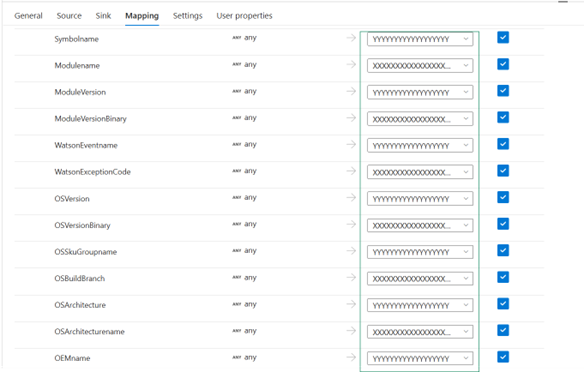

Mapping:

Sink Result:

Looks like the sink column value(s) in the malformed mapping still made it through successfully!

Sub-Investigation to see if the Sink Column Names matter

If we were to create a mapping like this, where we are mapping to the same two garbage values (XXXXX, YYYYY), then we’ll get an expected “duplicate column” error.

Mapping:

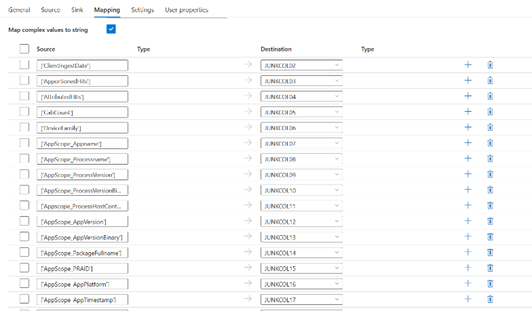

If we change the mapping to use unique garbage names for sink columns (that are non-existent in the target sink), so something like this:

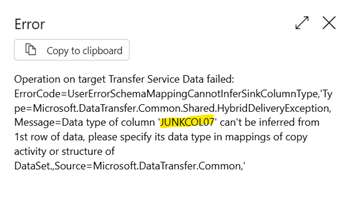

Then, we get this interesting exception:

This error suggests that the way the mapping service might work is that may not require the sink names at all. If they happen to be wrong/garbage, they will be inferred from the source property. Which could mean you could still get your data landing in the target sink table based on an optimistic best guess using matching datatypes. Looking at the image above, an error occurred in the mapping when the ADF service didn't have a type definition for COL07. Note: All previous 6 source properties were valuated, and it was COL07 that was the first null property, so no data, no data-inference, failure in mapping.

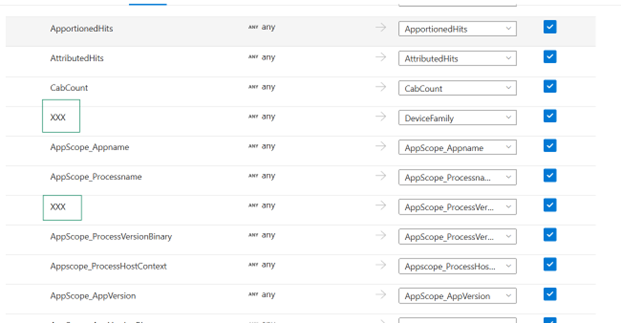

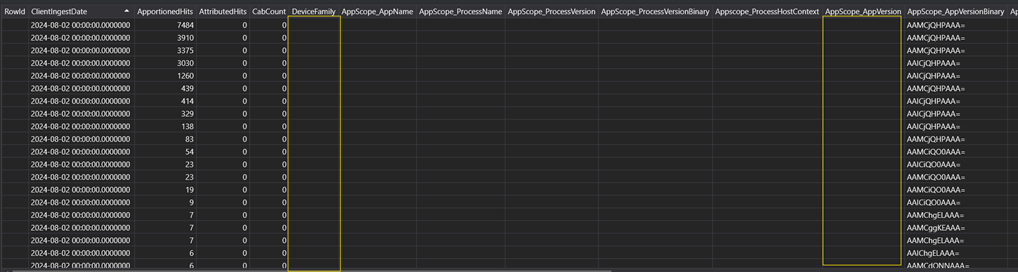

Failure Condition 3 – Full set of Mappings but Sink Table Columns have different ordinality.

If we have a full set of mappings for all the properties of both source and target, and they’re all correctly named, there is still a problem scenario, if the target sink table does not respect the ordinality of column declaration in the Mapping section.

Mapping:

Table Creation DDL (Kusto/ADX Explorer):

.create table SinkTable_Remixed

(

RowId:long,

ApportionedHits:real,

AttributedHits:real,

CabCount:real,

AppScope_AppName:string,

AppScope_ProcessName:string,

AppScope_ProcessVersion:string,

AppScope_ProcessVersionBinary:string,

Appscope_ProcessHostContext:string,

AppScope_AppVersion:string,

AppScope_AppVersionBinary:string,

AppScope_PackageFullName:string,

AppScope_PRAID:string,

AppScope_AppPlatform:string,

AppScope_AppTimestamp:string,

GeoCountryRegionName:string,

ClientIngestDate:datetime,

FailureHash:guid,

FailureBucketId:string,

SymbolName:string,

WatsonEventName:string,

WatsonExceptionCode:string,

OSVersion:string,

OSVersionBinary:string,

OSSkuGroupName:string,

OSBuildBranch:string,

OSArchitecture:int,

OSArchitectureName:string,

ModuleName:string,

ModuleVersion:string,

ModuleVersionBinary:string,

OEMName:string,

DeviceModelName:string,

HWTenantId:guid,

SWTenantId:guid,

DeviceFamily:string

)

Here, I’ve moved two properties around, ClientIngestDate and DeviceFamily.

Sink Result:

· ClientIngestDate is now just empty

· DeviceFamily has values from the wrong column in it.

Programmatic Perspective

In trying to derive a programmatic way to create dynamic mappings, I came across the following “challenge” in that the Tabular Translator mapping that can be injected as an expression into the “translator” property of the Copy Activity JSON, must have lower-case property names.

So, this is good:

"translator": {

"type": "TabularTranslator",

"mappings": [

{

"source": {

"path": "['ApportionedHits']"

},

"sink": {

"name": "ApportionedHits",

"type": "Double"

}

},

{

"source": {

"path": "['AppScope_ProcessName']"

},

"sink": {

"name": "AppScope_ProcessName",

"type": "String"

}

},

{

"source": {

"path": "['ClientIngestDate']"

},

"sink": {

"name": "ClientIngestDate",

"type": "DateTime"

}

}

],

"collectionReference": "$['output'][0]['Values']",

"mapComplexValuesToString": true

}

But something like this cannot be parsed and when in use triggers an exception: "TRANSLATOR": {

"TYPE": "TabularTranslator",

"MAPPINGS": [

{

"SOURCE": {

"PATH": "['ApportionedHits']"

},

"SINK": {

"NAME": "ApportionedHits",

"TYPE": "Double"

}

},

{

"SOURCE": {

"path": "['AppScope_ProcessName']"

},

"SINK": {

"NAME": "AppScope_ProcessName",

"TYPE": "String"

}

},

{

"SOURCE": {

"path": "['ClientIngestDate']"

},

"SINK": {

"NAME": "ClientIngestDate",

"TYPE": "DateTime"

}

}

],

"COLLECTIONREFERENCE": "$['output'][0]['Values']",

"MAPCOMPLEXVALUESTOSTRING": true

}

Not sure as to why there is a dependency/expectation for all the property names to either be lower/camelcased here. I'm guessing there is a deserialization dependency higher up the processing chain but it took a while to find out why my dynamically generated TabularTranslator was throwing exceptions (unless it's something else? !)

Use Case

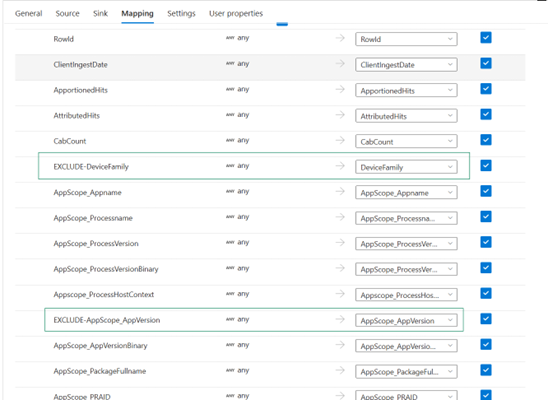

Column Masking – Prevention of certain columns from getting to Sink.

Could we try the reverse of instead of using garbage sink column names (see above), we use garbage source column names?

Mapping:

This fails with ‘duplicate column’ exception – fair enough :D

Corrected Mapping:

Sink Result:

The data associated with the two non-null source columns have not made it to their Sink destination 😊

So, by simply renaming the source property/field in some way and retaining a uniqueness with the rest of the source fields, it’s possible to mask/prevent data travel.

e.g.

AppScope_AppVersion -> EXCLUDE-AppScope_AppVersion