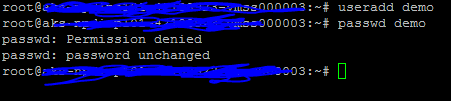

@Vignesh Murugan, thank you for sharing your concern. While we investigate the possibility of using `passwd` on AKS nodes, here are two workarounds you can use for now:

- You can add the SHA-512 password hash manually to the `/etc/shadow` file.

  i. Get the SHA-512 hash by running `openssl passwd -6 -stdin`, typing or pasting your password, pressing ENTER, and then pressing Ctrl+D ("end of file"). Because the password is read from standard input, it is not visible in the process list and is not saved in your shell history.

  ii. Copy the hash that is printed.

  - Edit the `/etc/shadow` file (as root) as follows:
    - Go to the line that looks something like `demo:!:xxxxxx:x:xx:xx:xx::`.

    - Replace only the `!` symbol with the copied hash.

- If the AKS node pool is of type `VirtualMachineScaleSets`, then:

  ```bash
  CLUSTER_RESOURCE_GROUP=$(az aks show --resource-group <Resource-Group-Name> --name <Cluster-Name> --query nodeResourceGroup -o tsv)
  # '[0].name' picks the first scale set; if the cluster has several node pools, set SCALE_SET_NAME explicitly
  SCALE_SET_NAME=$(az vmss list --resource-group "$CLUSTER_RESOURCE_GROUP" --query '[0].name' -o tsv)

  # Configure the VMAccessForLinux extension to set the password for user "demo"
  az vmss extension set \
    --resource-group "$CLUSTER_RESOURCE_GROUP" \
    --vmss-name "$SCALE_SET_NAME" \
    --name VMAccessForLinux \
    --publisher Microsoft.OSTCExtensions \
    --version 1.4 \
    --protected-settings "{\"username\":\"demo\", \"password\":\"Your-Password\"}"

  # Apply the updated scale set model to all instances
  az vmss update-instances --instance-ids '*' \
    --resource-group "$CLUSTER_RESOURCE_GROUP" \
    --name "$SCALE_SET_NAME"
  ```

  Note: This updates the user credentials on all the nodes. To update only one node, replace the `*` in `az vmss update-instances --instance-ids '*'` with that node's Virtual Machine Scale Set instance ID. Instance IDs are not guaranteed to run contiguously from 0 to N-1 (for example, after a scale-down), so check the existing IDs with `az vmss list-instances` first.
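To target a single node, listing the instance IDs and updating one instance can be wrapped in two small helpers. This is a minimal sketch, not part of the original answer: the function names `list_instance_ids` and `update_single_instance` are hypothetical, and the usage lines assume `CLUSTER_RESOURCE_GROUP` and `SCALE_SET_NAME` were set by the commands above.

```bash
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper names, not an official tool):
# apply the VMAccessForLinux change to ONE scale set instance instead of '*'.
set -euo pipefail

# Print the instance IDs of a scale set, one per line.
# IDs can have gaps after scale-in, so look them up instead of assuming 0..N-1.
list_instance_ids() {
  local rg="$1" vmss="$2"
  az vmss list-instances \
    --resource-group "$rg" \
    --name "$vmss" \
    --query '[].instanceId' -o tsv
}

# Roll the latest scale set model (including the extension) out to one instance.
update_single_instance() {
  local rg="$1" vmss="$2" instance_id="$3"
  az vmss update-instances \
    --resource-group "$rg" \
    --name "$vmss" \
    --instance-ids "$instance_id"
}

# Example usage (uncomment to run against your cluster):
# list_instance_ids "$CLUSTER_RESOURCE_GROUP" "$SCALE_SET_NAME"
# update_single_instance "$CLUSTER_RESOURCE_GROUP" "$SCALE_SET_NAME" 0
```

Keeping the lookup separate from the update lets you confirm the instance ID before any node is touched.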

- If the AKS node pool is of type `AvailabilitySet`, then:

  ```bash
  CLUSTER_RESOURCE_GROUP=$(az aks show --resource-group <Resource-Group-Name> --name <Cluster-Name> --query nodeResourceGroup -o tsv)

  az vm user update \
    --resource-group "$CLUSTER_RESOURCE_GROUP" \
    --name VirtualMachineName \
    --username demo \
    --password Your-Password
  ```

Important: In both cases, please replace `Your-Password` with a strong password.
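The `az vm user update` command changes one VM at a time. For an `AvailabilitySet` node pool with several nodes, one option is to repeat it for each VM in the node resource group. This is a hedged sketch, not from the original answer: it assumes every VM in that resource group is an AKS node you want to change, reads VM names from standard input (for example from `az vm list --resource-group "$CLUSTER_RESOURCE_GROUP" --query '[].name' -o tsv`), and uses a hypothetical function name `update_user_on_vms`.

```bash
#!/usr/bin/env bash
# Minimal sketch (hypothetical function name): run `az vm user update` for every
# VM name read from stdin, one name per line. Assumes all listed VMs are AKS nodes.
set -euo pipefail

update_user_on_vms() {
  local rg="$1" username="$2" password="$3"
  local vm
  while IFS= read -r vm; do
    # Skip blank lines in the input.
    if [[ -z "$vm" ]]; then
      continue
    fi
    # Redirect stdin so az cannot consume the rest of the VM list.
    az vm user update \
      --resource-group "$rg" \
      --name "$vm" \
      --username "$username" \
      --password "$password" </dev/null
  done
}

# Example usage (uncomment to run; prompts for the password without echoing it):
# read -rsp 'New password: ' NEW_PASSWORD; echo
# az vm list --resource-group "$CLUSTER_RESOURCE_GROUP" --query '[].name' -o tsv \
#   | update_user_on_vms "$CLUSTER_RESOURCE_GROUP" demo "$NEW_PASSWORD"
```

Reading the password with `read -s` keeps it out of your shell history, but it is still passed to `az` as a command-line argument while each update runs.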

Having said that, users added manually on AKS nodes are not persisted if the node undergoes a node image upgrade (which can also happen as part of an update operation on the AKS cluster, such as a node pool Kubernetes version upgrade, an agent pool reconciliation, a service principal profile refresh, or a certificate rotation), or if the node is deleted during a scale-down operation.
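Because these events discard the manual change, the `/etc/shadow` edit from the first workaround may have to be repeated. Below is a minimal sketch of that edit as a script, assuming the user (here `demo`) exists on the node again and the hash was already generated with `openssl passwd -6 -stdin`. The function name `set_shadow_hash` is hypothetical. Unlike the manual step, which replaces only the `!` symbol, it replaces the whole password field (the second `:`-separated field); that gives the same result when the field is just `!`, as in the example line. Try it on a copy first; on a node it has to run as root against `/etc/shadow`, and keeping a backup is wise.

```bash
#!/usr/bin/env bash
# Minimal sketch (hypothetical function name): set the password field (field 2)
# of one user's line in a shadow-format file to a precomputed hash.
set -euo pipefail

set_shadow_hash() {
  local file="$1" user="$2" hash="$3"
  local tmp
  if ! grep -q "^${user}:" "$file"; then
    echo "user '$user' not found in $file" >&2
    return 1
  fi
  tmp="$(mktemp)"
  # Rewrite field 2 of the matching user's line; all other lines print unchanged.
  # SHA-512 crypt hashes contain no backslashes, so passing the hash via -v is safe.
  awk -F: -v OFS=: -v u="$user" -v h="$hash" \
    '$1 == u { $2 = h } { print }' "$file" > "$tmp"
  # Copy back over the original so its owner and permissions are preserved.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Demo on a throwaway file with a placeholder hash (not a real password hash):
demo_file="$(mktemp)"
printf 'root:*:19000:0:99999:7:::\ndemo:!:19000:0:99999:7:::\n' > "$demo_file"
set_shadow_hash "$demo_file" demo '$6$examplesalt$examplehash'
cat "$demo_file"
```

Running the demo prints the `root` line unchanged and `demo:$6$examplesalt$examplehash:19000:0:99999:7:::` for the `demo` user.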

Hope this helps.

Please "Accept as Answer" if it helped, so that it can help others in the community looking for help on similar topics.