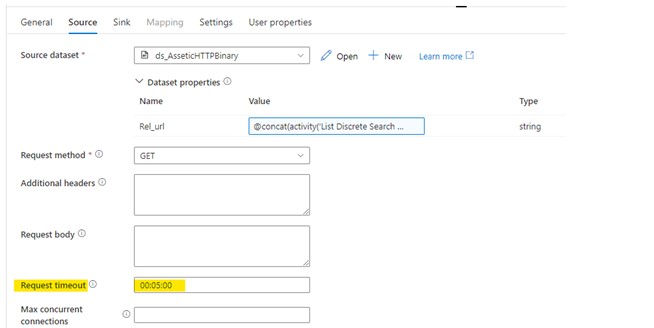

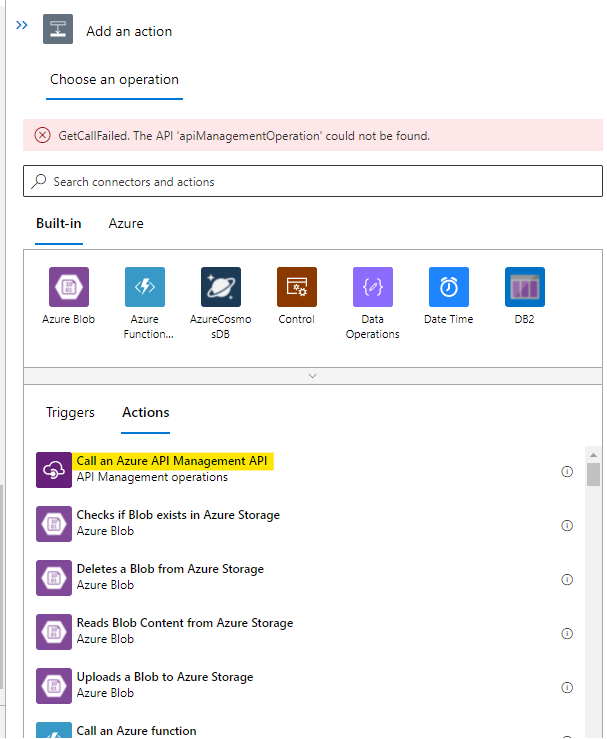

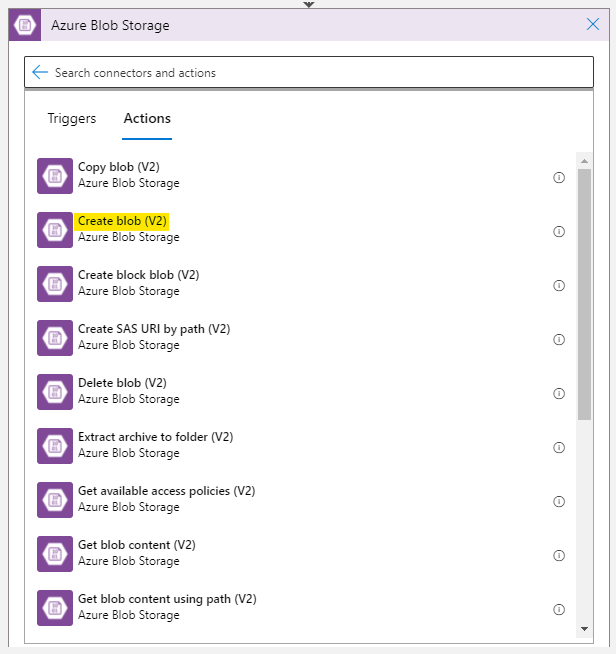

@AMJ Apologies for the delay. It looks like there are two separate issues here: one with the Logic Apps HTTP connector and the other with the Data Factory activity call. I have added the azure-data-factory tag so an expert can comment on your second issue. In general, it is best to create a separate question for each issue, so the community can more easily find issues related to different services. I will also reach out to the Azure Data Factory team to comment on your second issue.

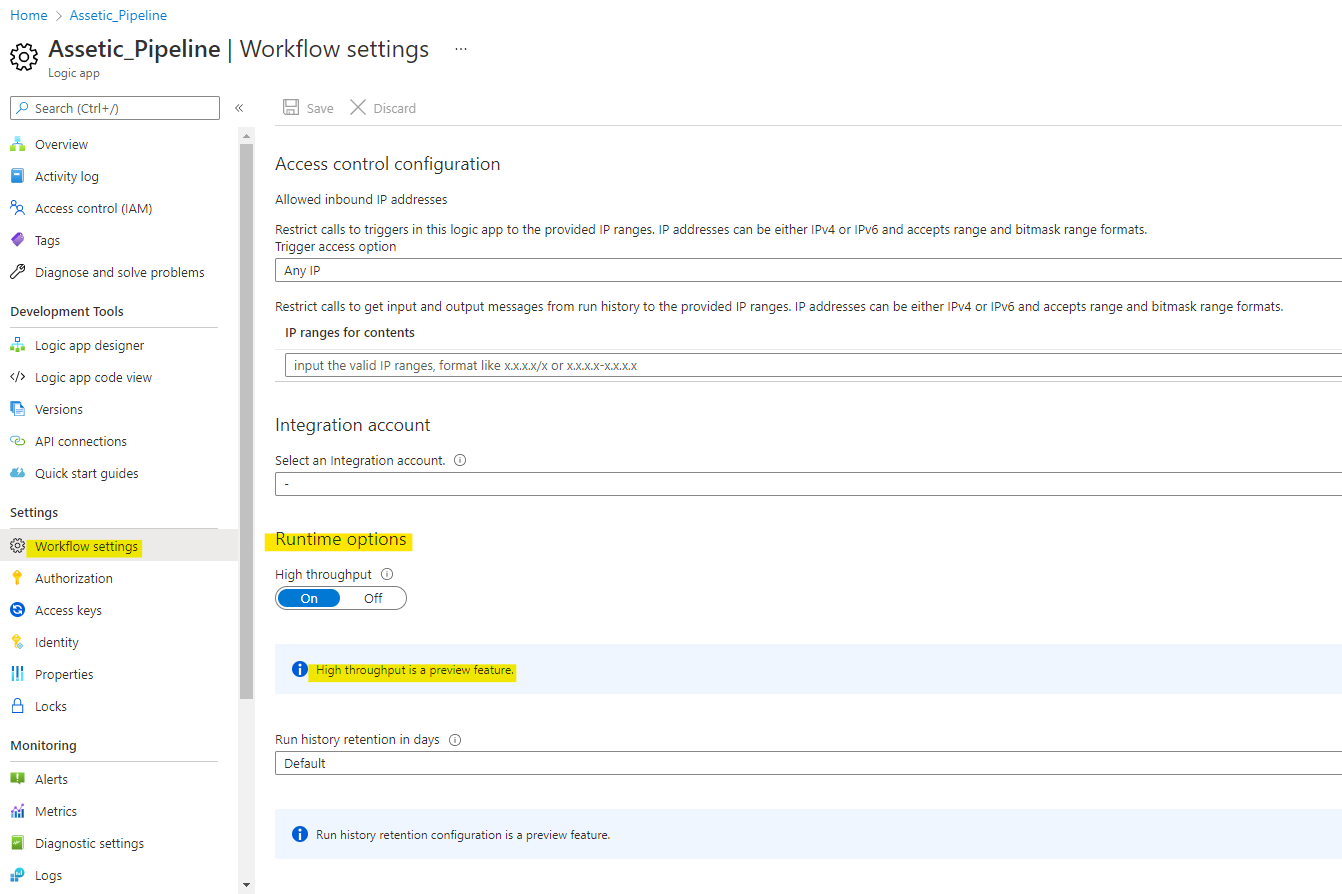

Regarding your first issue with the Logic Apps HTTP connector: this is a hard limit of the Consumption logic app, as mentioned here for the Maximum input or output size, and it applies to the HTTP trigger as well. Depending on the connector, this can go up to 1 GB.

The Maximum input or output size can be changed for a single-tenant logic app, as mentioned here. The maximum limit for a single-tenant logic app depends on several factors, such as the compute and memory size of the Workflow Standard plan you are using and how many such messages are processed in parallel.
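To illustrate why the practical limit depends on both plan memory and parallelism, here is a rough back-of-envelope sketch. It is not an official sizing formula. The function names, the `overhead_factor` (how many copies of a message might be held in memory at once) and the `headroom` fraction are all hypothetical assumptions, and the example numbers are made up rather than actual Workflow Standard plan sizes.

```python
MB = 1024 * 1024


def estimate_peak_memory_bytes(message_size_bytes: int, parallel_runs: int,
                               overhead_factor: float = 2.0) -> int:
    """Rough upper bound on memory used by in-flight messages.

    Assumption (hypothetical): each parallel run holds its message in memory
    about `overhead_factor` times (e.g. input plus a transformed copy).
    """
    if message_size_bytes < 0 or parallel_runs < 1 or overhead_factor < 1:
        raise ValueError("invalid sizing inputs")
    return int(message_size_bytes * parallel_runs * overhead_factor)


def fits_in_plan(message_size_bytes: int, parallel_runs: int,
                 plan_memory_bytes: int, overhead_factor: float = 2.0,
                 headroom: float = 0.5) -> bool:
    """True if the estimate stays within a fraction (`headroom`, an
    assumed value) of the plan's memory, leaving the rest for the runtime."""
    budget = plan_memory_bytes * headroom
    return estimate_peak_memory_bytes(
        message_size_bytes, parallel_runs, overhead_factor) <= budget


def max_parallel_runs(message_size_bytes: int, plan_memory_bytes: int,
                      overhead_factor: float = 2.0, headroom: float = 0.5) -> int:
    """How many messages of this size could be processed concurrently
    under the same hypothetical assumptions."""
    budget = plan_memory_bytes * headroom
    per_run = message_size_bytes * overhead_factor
    return int(budget // per_run)


if __name__ == "__main__":
    # Hypothetical example: 200 MB messages on a plan with 8 GB of memory.
    print(fits_in_plan(200 * MB, 10, 8 * 1024 * MB))   # True
    print(fits_in_plan(200 * MB, 11, 8 * 1024 * MB))   # False
    print(max_parallel_runs(200 * MB, 8 * 1024 * MB))  # 10
```

The point of the sketch is the trade-off: for a fixed amount of memory, doubling the message size roughly halves how many messages can safely be processed in parallel, which is why no single maximum applies to every single-tenant setup.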