Hi @Anonymous ,

Thank you for posting your query on the Microsoft Q&A platform.

Could you please clarify where exactly you are facing this error? Is it while loading data to blob storage or while loading data into the Synapse table?

If it happens while loading data into Synapse, please check whether your sink table column length is less than the source column length. Using nvarchar(max) as the sink table column data type may help.

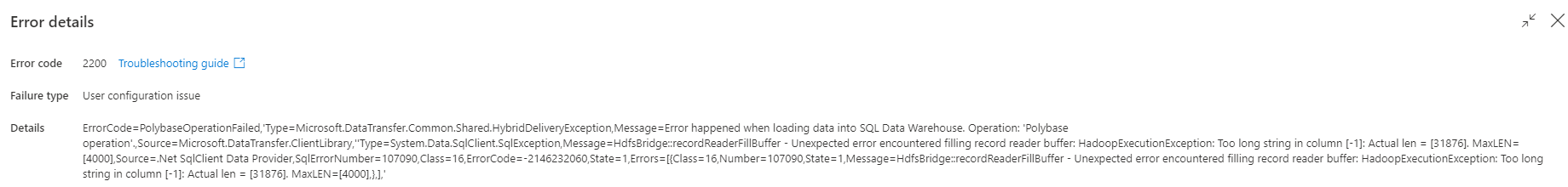

If you are using the PolyBase option, please note that PolyBase currently has a row size limitation of 1 MB, and the load fails if the column length is greater than that. The workaround is to use bulk insert in the ADF copy activity, or to chunk the source data into 8K columns and load it into a target staging table that also has 8K columns. Check this document for more details on this limitation.
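To illustrate the chunking workaround above, here is a minimal sketch in Python. It assumes "8K" means 8000 characters per column (adjust `chunk_size` for your column type, for example 4000 for nvarchar), and the function names `chunk_text` and `to_staging_row` are hypothetical helpers, not part of ADF or Synapse.

```python
from typing import List, Optional


def chunk_text(value: str, chunk_size: int = 8000) -> List[str]:
    """Split value into consecutive pieces of at most chunk_size characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [value[i:i + chunk_size] for i in range(0, len(value), chunk_size)]


def to_staging_row(value: str, num_columns: int,
                   chunk_size: int = 8000) -> List[Optional[str]]:
    """Spread one long value across a fixed number of staging columns.

    Unused trailing columns are filled with None (NULL in the staging table).
    """
    chunks = chunk_text(value, chunk_size)
    if len(chunks) > num_columns:
        raise ValueError(
            f"value needs {len(chunks)} columns, staging table has {num_columns}"
        )
    return chunks + [None] * (num_columns - len(chunks))


if __name__ == "__main__":
    long_value = "a" * 20000
    row = to_staging_row(long_value, num_columns=4)
    # Show the length of each staging column value (None for empty columns).
    print([len(c) if c is not None else None for c in row])
```

After loading, the staging columns can be concatenated back in order on the target side to rebuild the original value.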

If you're using PolyBase external tables to load your tables, the defined length of the table row can't exceed 1 MB. When a row with variable-length data exceeds 1 MB, you can load the row with BCP, but not with PolyBase.
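If you want to find out which source rows are likely to hit this limit before loading, a rough check could look like the sketch below. It is only an estimate: it assumes the text fields are UTF-8 encoded and simply sums the encoded byte length of each value, which may not match exactly how the engine measures row size. The names `row_size_bytes` and `exceeds_polybase_limit` are hypothetical.

```python
from typing import Iterable, Optional

# 1 MB limit mentioned above, expressed in bytes.
POLYBASE_ROW_LIMIT_BYTES = 1024 * 1024


def row_size_bytes(row: Iterable[Optional[object]], encoding: str = "utf-8") -> int:
    """Estimate the size of a row as the sum of its encoded field lengths."""
    return sum(len(str(v).encode(encoding)) for v in row if v is not None)


def exceeds_polybase_limit(row: Iterable[Optional[object]],
                           encoding: str = "utf-8") -> bool:
    """Return True if the estimated row size is larger than 1 MB."""
    return row_size_bytes(row, encoding) > POLYBASE_ROW_LIMIT_BYTES


if __name__ == "__main__":
    small_row = [1, "short text", None]
    large_row = [2, "x" * (POLYBASE_ROW_LIMIT_BYTES + 1)]
    print(exceeds_polybase_limit(small_row))
    print(exceeds_polybase_limit(large_row))
```

Rows flagged by this check are candidates for the bulk insert or chunking approach instead of PolyBase.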

Try using the "bulk insert" option in the ADF pipeline; it may help. Please let us know how it goes. Thank you.
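For reference, here is a rough sketch of what the copy activity sink settings might look like with PolyBase turned off, so that the copy falls back to bulk insert. The property names (`SqlDWSink`, `allowPolyBase`, `allowCopyCommand`, `writeBatchSize`) are as I recall them from the Azure Synapse Analytics connector documentation, and the batch size is just an illustrative value, so please verify against the current documentation before using it. The Python below only builds and prints the JSON fragment.

```python
import json

# Assumed sink settings for an ADF copy activity writing to Azure Synapse.
# With both PolyBase and the COPY statement disabled, the copy activity is
# expected to use bulk insert (assumption, please verify for your setup).
sink_settings = {
    "type": "SqlDWSink",
    "allowPolyBase": False,
    "allowCopyCommand": False,
    "writeBatchSize": 10000,  # illustrative value, tune for your workload
}

if __name__ == "__main__":
    # Print the fragment in the JSON form used by the pipeline definition.
    print(json.dumps({"sink": sink_settings}, indent=2))
```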