Hi @Shambhu Rai ,

Thanks for posting your question on the Microsoft Q&A platform and for using Azure services.

As I understand it, you want to know how to use filter and CASE in Databricks with PySpark.

Please correct me if I have misunderstood.

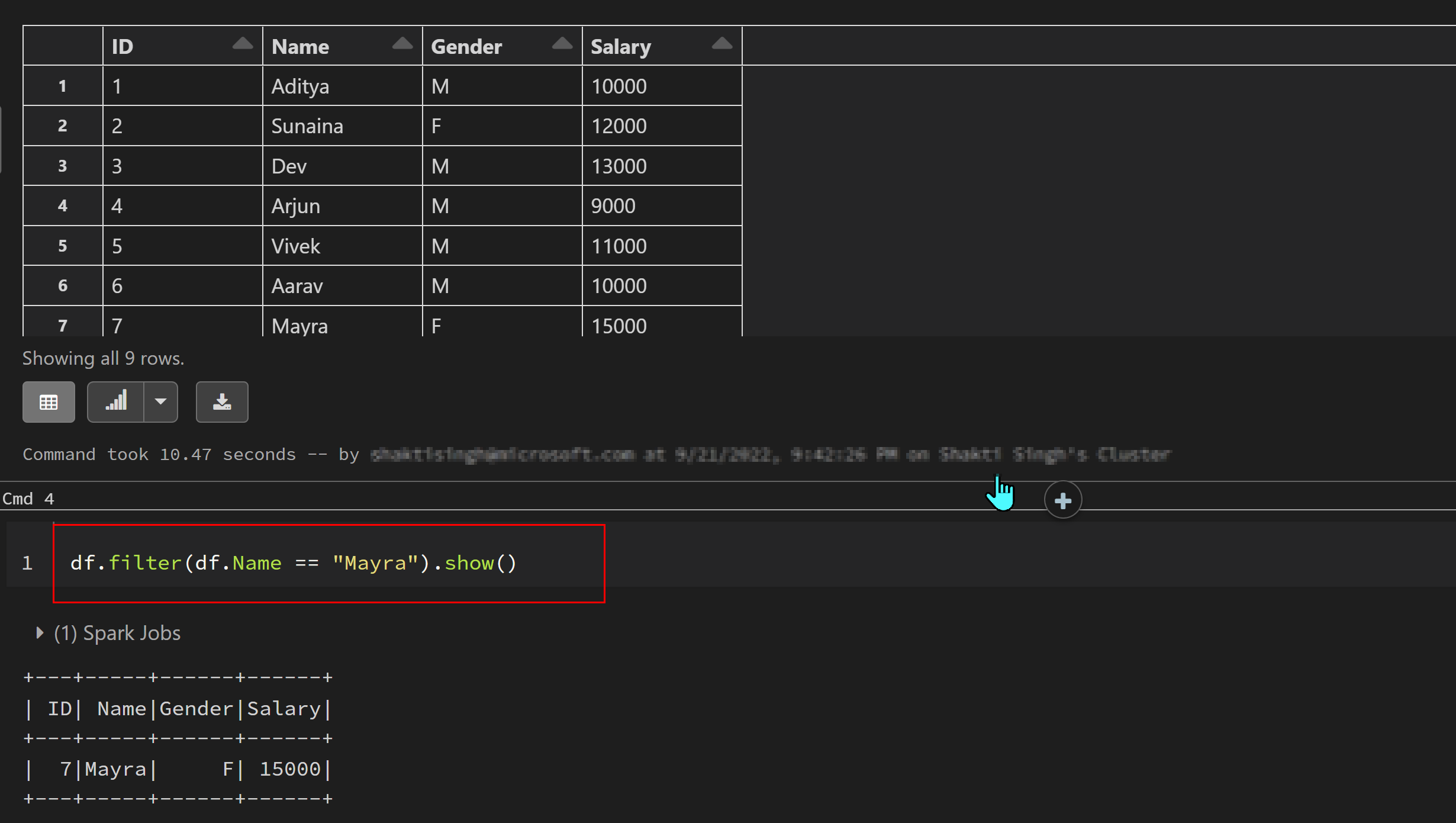

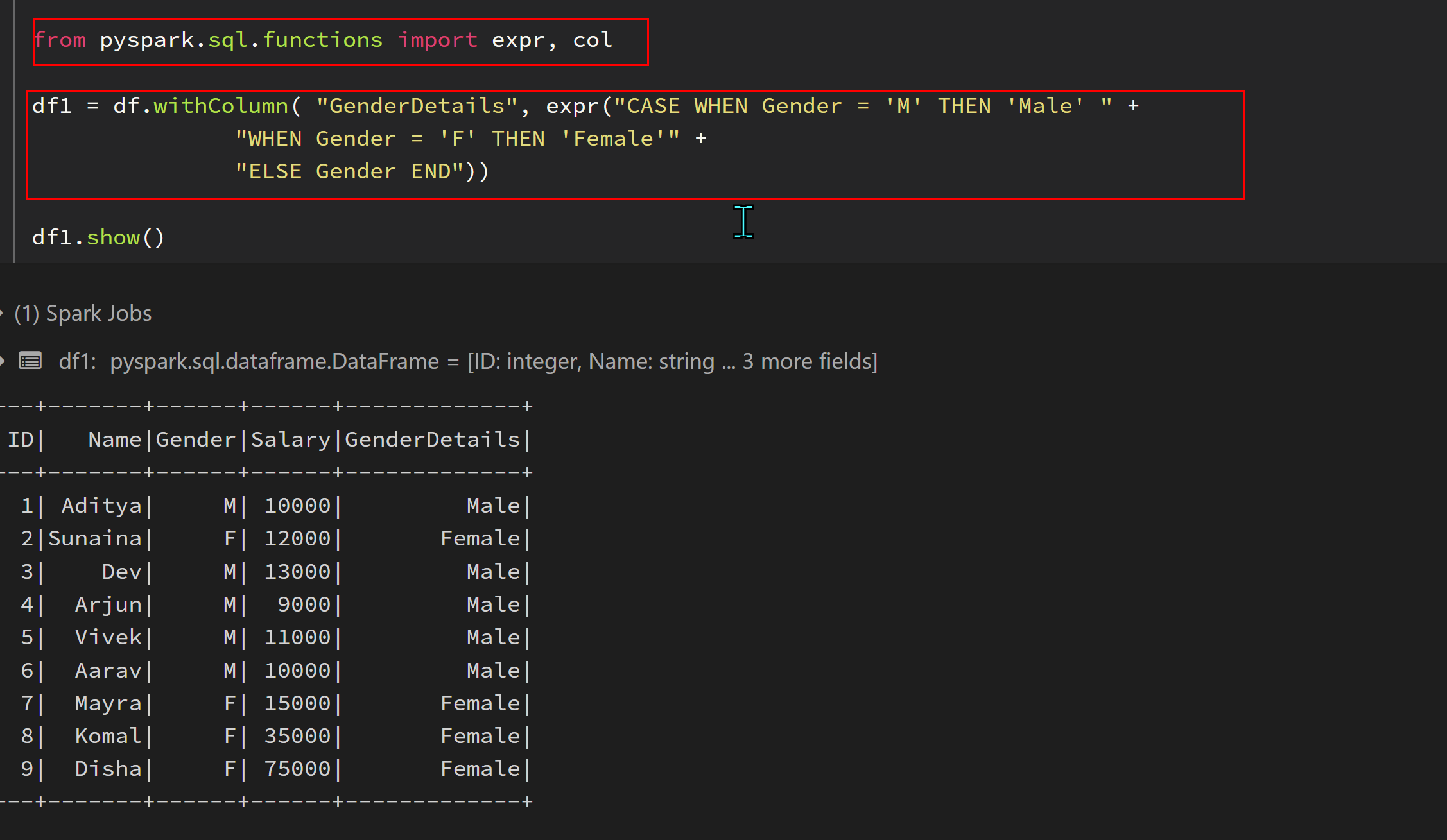

For illustration, the source data in this sample has four columns: ID, Name, Gender, and Salary.

We first filter the data for a particular person, then use CASE to create a new column with gender details.

- Filter Condition:

Use the filter function as shown below:

df.filter(df.Name == "Mayra").show()

- Case condition:

Use a CASE WHEN ... THEN expression as shown below:

    from pyspark.sql.functions import expr

    # Map Gender codes to labels with a SQL CASE expression; other values pass through unchanged
    df1 = df.withColumn("GenderDetails", expr(
        "CASE WHEN Gender = 'M' THEN 'Male' "
        "WHEN Gender = 'F' THEN 'Female' "
        "ELSE Gender END"))

For more filter examples, refer to the link pyspark-where-filter.

For a variety of CASE examples, refer to the link pyspark-when-otherwise.

Hope this helps. Please let us know if you have any further queries.

------------------------------

- Please don't forget to accept the answer or upvote whenever the information provided helps you.

- Original posters help the community find answers faster by identifying the correct answer. Here is how.
- Want a reminder to come back and check responses? Here is how to subscribe to a notification.