Azure ML Notebook: The code being run in the notebook may have caused a crash or the compute may have run out of memory

Ankit19 Gupta


I am using an Azure ML Notebook with a Python kernel to run the following code:

```python
%reload_ext rpy2.ipython

from azureml.core import Dataset, Datastore, Workspace

subscription_id = 'abc'
resource_group = 'pqr'
workspace_name = 'xyz'

workspace = Workspace(subscription_id, resource_group, workspace_name)
datastore = Datastore.get(workspace, 'mynewdatastore')

# create a tabular dataset from the Parquet file at the given path
tabular_dataset_1 = Dataset.Tabular.from_parquet_files(path=(datastore, '/RNM/CRUD_INDIFF/CrudeIndiffOutput_PRD/RW_Purchases/2022-09-05/RW_Purchases_2022-09-05T17:23:01.01.parquet'))

# load the whole dataset into memory as a pandas DataFrame
df = tabular_dataset_1.to_pandas_dataframe()
print(df)
```

After executing this code, the notebook cell shows a Cancelled message, and the following message appears at the top of the cell:

The code being run in the notebook may have caused a crash or the compute may have run out of memory.

Jupyter kernel is now idle.

Kernel restarted on the server. Your state is lost.

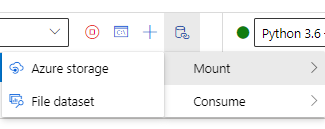

The Parquet file used in the code is 20.25 GiB, and I think its large size is causing this problem. Can anyone please help me resolve this error without splitting the file into multiple smaller files? Any help would be appreciated.
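To make the memory suspicion concrete, here is a rough back-of-the-envelope sketch. It assumes that a Parquet file grows when it is decoded into a pandas DataFrame; the `expansion_factor` of 3 and the `headroom` fraction are illustrative guesses, not measured values, and the RAM sizes in the loop are hypothetical examples rather than the actual size of my compute instance. The helper names are made up for this example.

```python
GIB = 1024 ** 3


def estimate_dataframe_bytes(parquet_bytes, expansion_factor=3.0):
    """Rough in-memory size of the decoded DataFrame.

    expansion_factor is an assumed multiplier (on-disk Parquet is compressed
    and encoded); the real ratio depends on the data and its column types.
    """
    return int(parquet_bytes * expansion_factor)


def fits_in_memory(parquet_bytes, ram_bytes, expansion_factor=3.0, headroom=0.5):
    """Return True if the estimated DataFrame fits in a fraction of RAM.

    headroom is the assumed share of RAM that can be given to the DataFrame,
    leaving the rest for the kernel, temporary copies, and the OS.
    """
    needed = estimate_dataframe_bytes(parquet_bytes, expansion_factor)
    return needed <= ram_bytes * headroom


# Size of the file from the question.
parquet_size = int(20.25 * GIB)

# Hypothetical compute sizes, for illustration only.
for ram_gib in (14, 28, 56, 112, 256):
    ok = fits_in_memory(parquet_size, ram_gib * GIB)
    needed_gib = estimate_dataframe_bytes(parquet_size) / GIB
    print(f"{ram_gib:>4} GiB RAM: need ~{needed_gib:.2f} GiB, fits={ok}")
```

Under these assumptions, `to_pandas_dataframe()` would need far more memory than the file size alone suggests, which would be consistent with the kernel being restarted.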

Azure Machine Learning
