Update: It seems we found a solution by adjusting the packet size on the SAP side, so this might not have been an ADF issue after all. So far we have been able to copy several million rows without failure.

SAP to ADF using ODP connector - Error (Source Failure, System Out of Memory)

I am setting up a connection between SAP and Azure Data Factory (ADF) using the new ODP connector (preview).

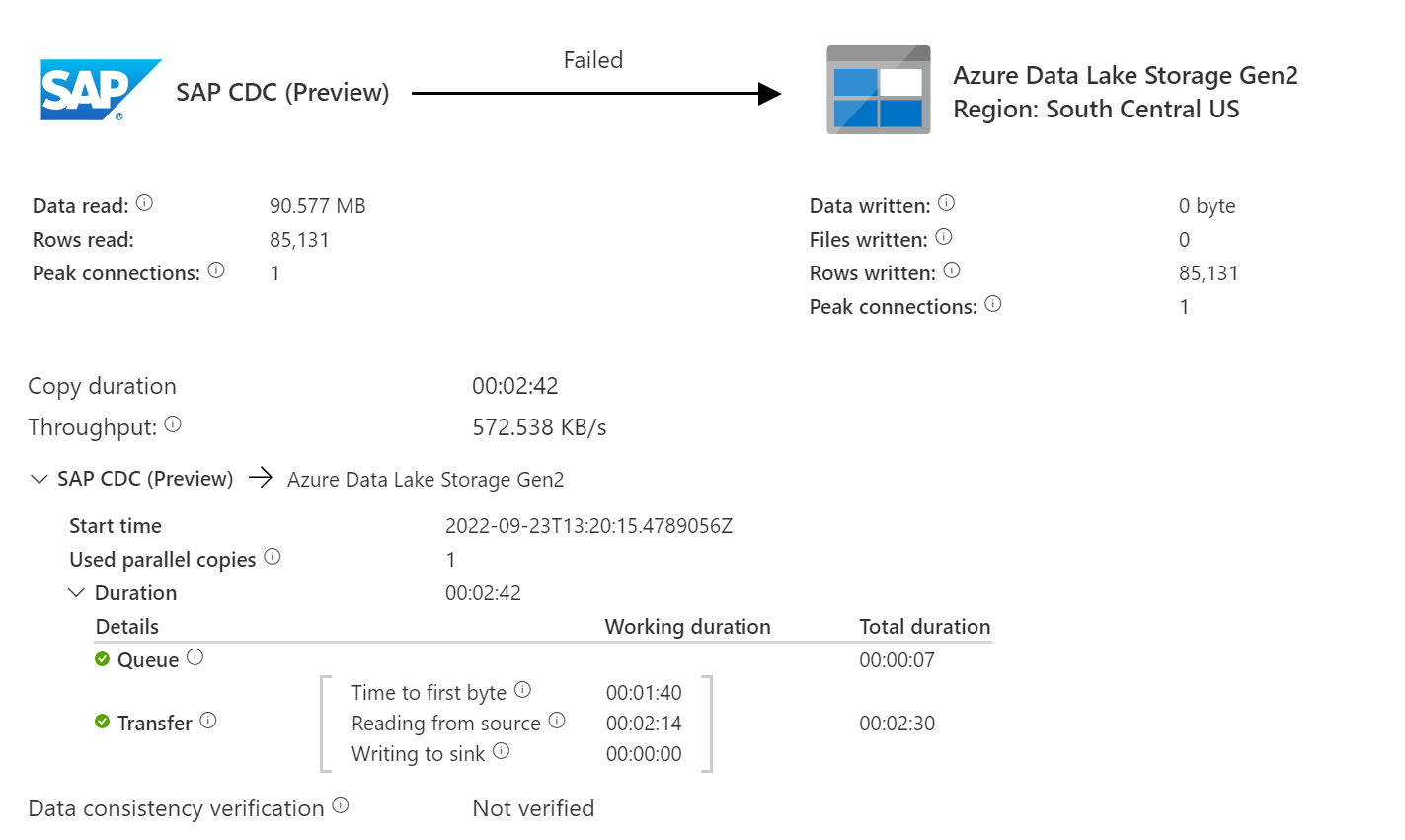

When I run a pipeline that pulls data from a CDS view in SAP into ADLS Gen2, the error below appears. It has not occurred with smaller files and only seems to show up once the files reach roughly 30k rows or more. In ADF I have tried changing the Block Size, Degree of Parallelism, and other parameters. In some cases this improved the pipeline's performance slightly, but it still hasn't been enough to prevent failure for many of the pipelines.
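A rough way to see why the SAP-side packet size could matter more than the ADF tuning: if each in-flight packet is fully buffered in memory before it is written, peak memory grows with packet size times the number of packets in flight. The sketch below is a simplified model under that assumption, not a description of how the ADF runtime or the ODP connector actually work; `packet_size`, `parallelism`, and both functions are hypothetical names chosen for illustration.

```python
from itertools import islice
from typing import Iterable, Iterator, List, Tuple


def packets(rows: Iterable, packet_size: int) -> Iterator[List]:
    """Yield successive lists of at most `packet_size` rows (hypothetical packetizer)."""
    it = iter(rows)
    while True:
        packet = list(islice(it, packet_size))
        if not packet:
            return
        yield packet


def copy_in_packets(rows: Iterable, packet_size: int, parallelism: int = 1) -> Tuple[int, int]:
    """Simulate a copy where up to `parallelism` packets are held in memory at once.

    Returns (rows_written, peak_rows_buffered). Assumes parallelism >= 1.
    """
    buffered: List[List] = []
    written = 0
    peak = 0
    for packet in packets(rows, packet_size):
        buffered.append(packet)
        # Track the largest number of rows held in memory at any one time.
        peak = max(peak, sum(len(p) for p in buffered))
        if len(buffered) == parallelism:
            # Pretend to write all in-flight packets to the sink, then free them.
            written += sum(len(p) for p in buffered)
            buffered.clear()
    # Flush any partially filled batch left at the end.
    written += sum(len(p) for p in buffered)
    return written, peak


if __name__ == "__main__":
    # Same 100k rows, two packets in flight: only the packet size changes.
    print(copy_in_packets(range(100_000), packet_size=50_000, parallelism=2))  # (100000, 100000)
    print(copy_in_packets(range(100_000), packet_size=5_000, parallelism=2))   # (100000, 10000)
```

In this model every row is still copied, but a smaller packet caps how many rows sit in memory at once, which is consistent with raising parallelism alone not preventing the out-of-memory failure.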

The answers I have found for this type of memory error did not involve the ODP connector, and in those cases the error typically occurred on the 'sink' side rather than the 'source' side.

I suspect it has to do with the integration runtime (IR), but I need some advice on what should be changed.

Error Details:

- Error code: 1000
- Error source: System Error
- Details:

Failure happened on 'Source' side. ErrorCode=SystemErrorOutOfMemory,'Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,Message=A task failed with out of memory.,Source=Microsoft.DataTransfer.TransferTask,''Type=System.OutOfMemoryException,Message=Exception of type 'System.OutOfMemoryException' was thrown.,Source=Microsoft.DataTransfer.ClientLibrary,'

Thank you.