Hello @Amar Agnihotri and welcome back to Microsoft Q&A.

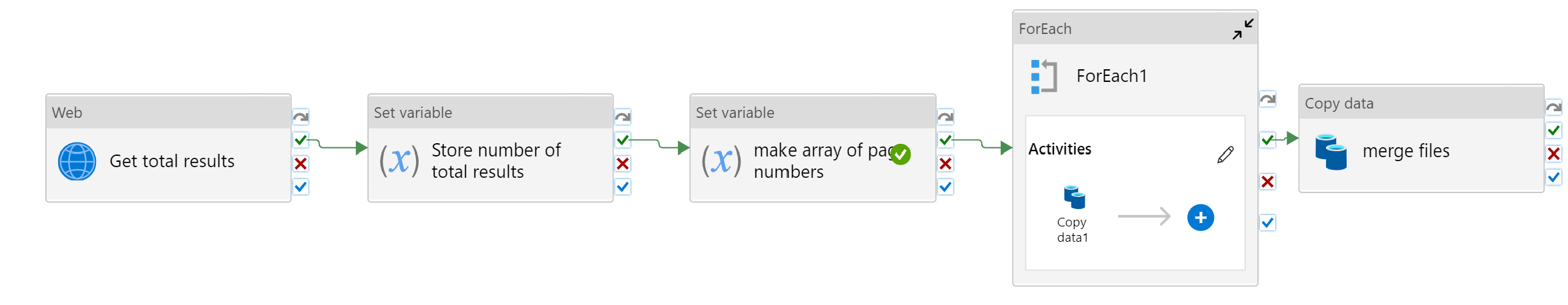

While you could get the data with the Copy activity's REST pagination feature, it looks like you want to split the work across multiple copy activities so it runs faster, because the page size is small and the result set is large. Is this correct?

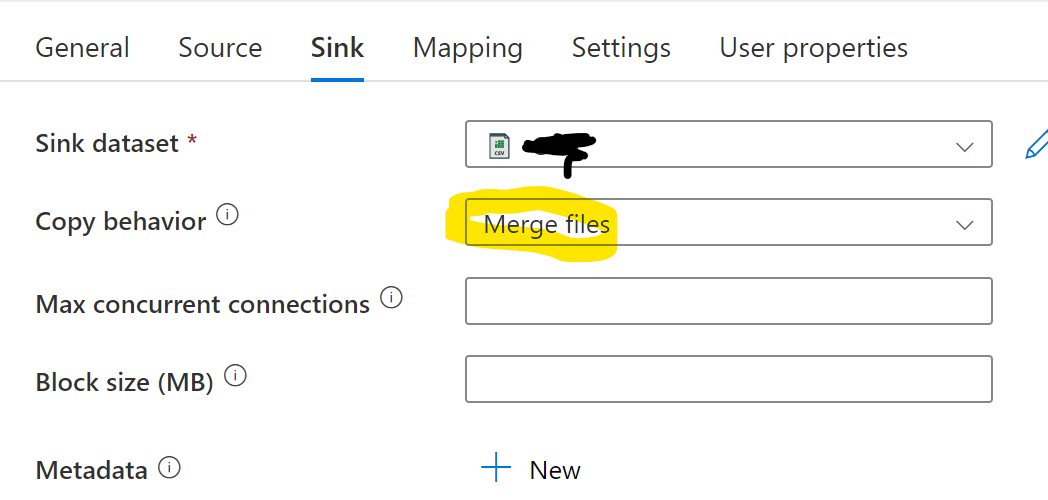

Note that splitting the work across multiple copy activities also means producing many small output files, because ADF cannot append to an existing file. To end up with a single file, add another copy activity afterwards to merge the files.

There are a few parts to doing this. I'm assuming you want to put the copy activity inside a ForEach loop and run many iterations in parallel.

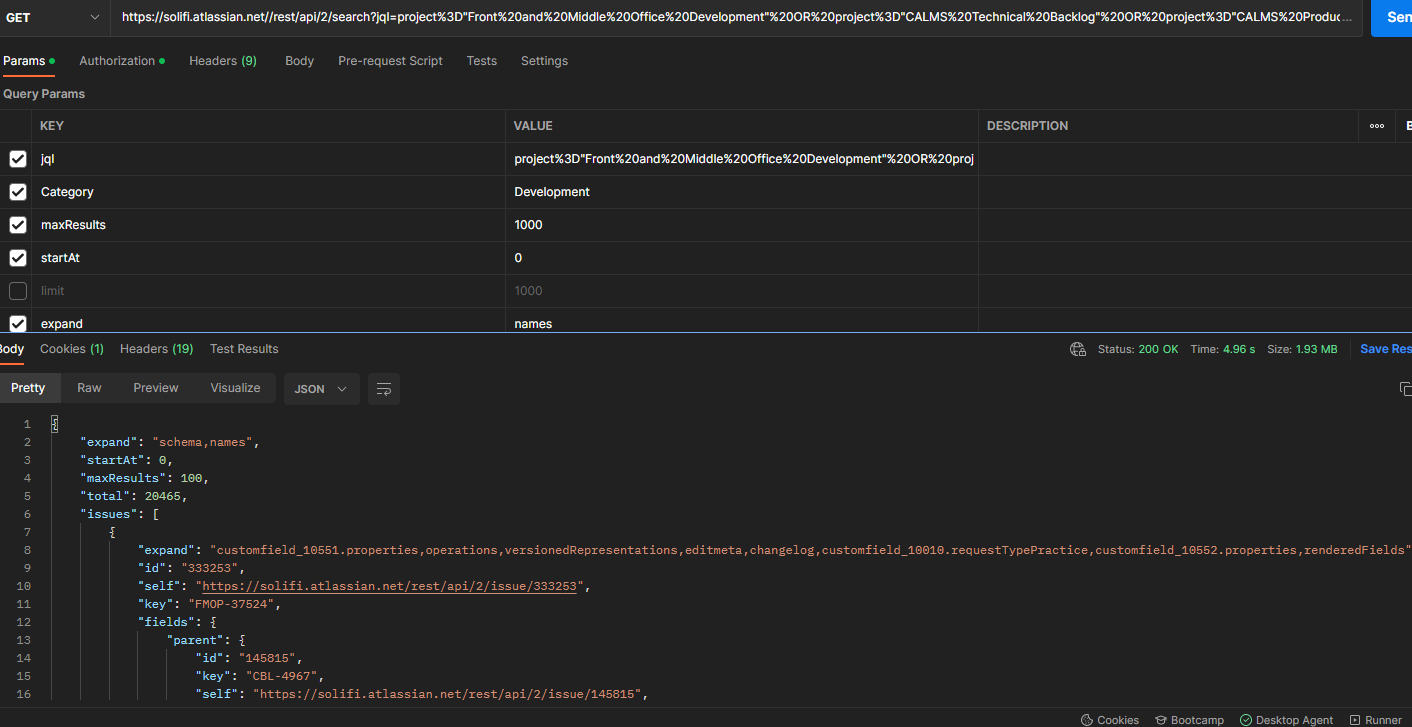

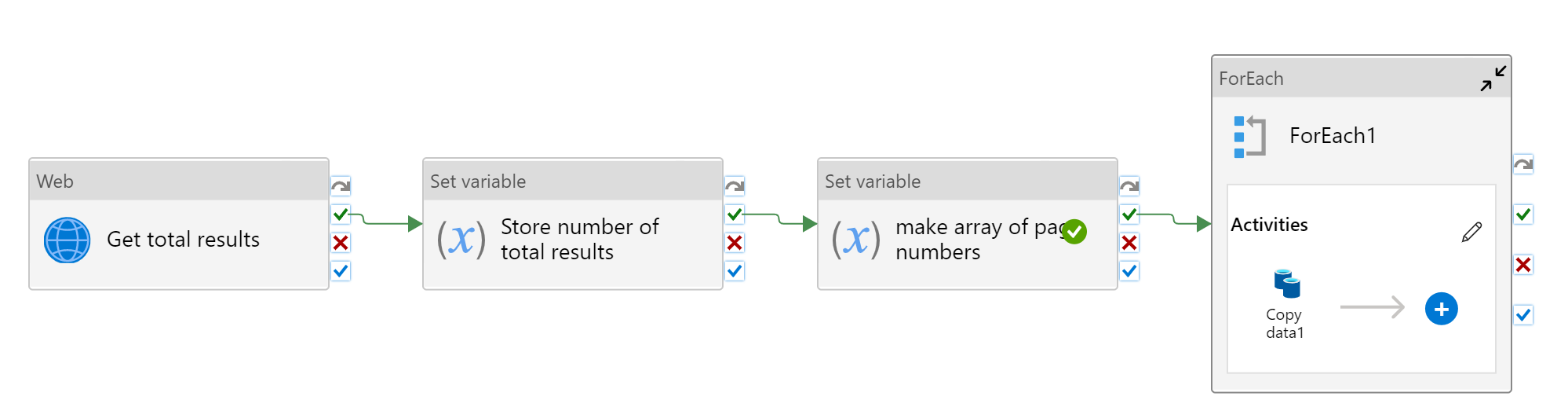

The first step is to generate the set of items to iterate over: one value per page (that is, per API call). For this we need the total number of results. Use a Web activity to get the total, as in your screenshot.

Create an Array type variable. Then use a Set Variable activity with the range function to create all the elements at once.

number of pages = total / maxResults (integer division)

if total % maxResults > 0, add one more page for the remainder
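The same page count written as a formula, followed by a worked example that assumes a hypothetical total of 20,450 results and maxResults = 100:

$$
\text{pages} = \left\lfloor \frac{\text{total}}{\text{maxResults}} \right\rfloor + \begin{cases} 1 & \text{if } \text{total} \bmod \text{maxResults} > 0 \\ 0 & \text{otherwise} \end{cases} = \left\lceil \frac{\text{total}}{\text{maxResults}} \right\rceil
$$

$$
\left\lfloor \frac{20450}{100} \right\rfloor + 1 = 204 + 1 = 205 \quad (\text{since } 20450 \bmod 100 = 50 > 0)
$$

With those numbers the page indices would run from 0 to 204.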

Put together, this takes the form of:

```
@range(0,
    if(less(0, mod(int(variables('total')), pipeline().parameters.maxResults)),
        add(1, div(int(variables('total')), pipeline().parameters.maxResults)),
        div(int(variables('total')), pipeline().parameters.maxResults))
)
```
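To sanity-check the expression outside ADF, here is a minimal Python sketch that mirrors it step by step. The function name `page_indices` is hypothetical; `total` and `max_results` stand in for `variables('total')` and `pipeline().parameters.maxResults`, and the input numbers are made up.

```python
from typing import List


def page_indices(total: int, max_results: int) -> List[int]:
    """Python mirror of the @range(...) expression (hypothetical helper name)."""
    pages = total // max_results        # div(int(variables('total')), maxResults)
    if 0 < total % max_results:         # less(0, mod(...)): a partial last page exists
        pages += 1                      # add(1, ...): one extra page for the remainder
    return list(range(0, pages))        # ADF range(startIndex, count) yields `count` integers


print(page_indices(20450, 100)[-3:])  # hypothetical total of 20450 records
```

This prints `[202, 203, 204]`: 205 pages, the last one holding the remaining 50 records.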

This outputs an array of integers, for example [0, 1, 2, 3, ..., 202, 203, 204].

Each element is a page index: the startAt value divided by maxResults (100). Pass this array to the ForEach activity. Inside the loop, the copy activity multiplies each item by maxResults (100) to get back the startAt value:

```
@mul(item(), pipeline().parameters.maxResults)
```

Use this value not only in the API call but also in the parameterized sink dataset's file name, so that each call writes its own file instead of overwriting the results of the other calls.
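A rough local simulation of the whole loop can help confirm that nothing is overwritten or lost. This is a Python sketch, not ADF: `fetch_page` is a hypothetical stand-in for the REST call, the `page_{startAt}.json` name is an assumed example of the parameterized sink file name, a thread pool stands in for a parallel (non-sequential) ForEach, with `batch_count` loosely playing the role of its Batch count setting, and `merge_pages` plays the role of the final merge copy activity. The totals are made up.

```python
import json
import os
import tempfile
from concurrent.futures import ThreadPoolExecutor
from typing import List


def fetch_page(start_at: int, max_results: int, total: int) -> List[int]:
    # Hypothetical stand-in for the REST call: returns record positions, not real data.
    return list(range(start_at, min(start_at + max_results, total)))


def copy_page(item: int, max_results: int, total: int, out_dir: str) -> str:
    start_at = item * max_results  # same as @mul(item(), pipeline().parameters.maxResults)
    records = fetch_page(start_at, max_results, total)
    # Assumed file name pattern: startAt makes each output file unique, so no overwrites.
    path = os.path.join(out_dir, f"page_{start_at}.json")
    with open(path, "w") as f:
        json.dump(records, f)
    return path


def run_foreach(items: List[int], max_results: int, total: int,
                out_dir: str, batch_count: int = 20) -> List[str]:
    # Runs iterations in parallel threads, loosely like a non-sequential ForEach.
    with ThreadPoolExecutor(max_workers=batch_count) as pool:
        return list(pool.map(lambda i: copy_page(i, max_results, total, out_dir), items))


def merge_pages(paths: List[str]) -> List[int]:
    # Stand-in for the final merge step: read the page files in startAt order.
    def start_of(p: str) -> int:
        return int(os.path.basename(p)[len("page_"):-len(".json")])

    merged: List[int] = []
    for p in sorted(paths, key=start_of):
        with open(p) as f:
            merged.extend(json.load(f))
    return merged


if __name__ == "__main__":
    total, max_results = 20450, 100  # hypothetical numbers
    items = list(range(205))         # what the range() expression would produce
    with tempfile.TemporaryDirectory() as d:
        paths = run_foreach(items, max_results, total, d)
        print(len(set(paths)), len(merge_pages(paths)))
```

Running it prints `205 20450`: one distinct file per page, and every record present exactly once after merging. If the file name did not include startAt, all iterations would write to the same path and only one page would survive.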