Disk Full Error when submitting an Azure ML Job from Azure DevOps

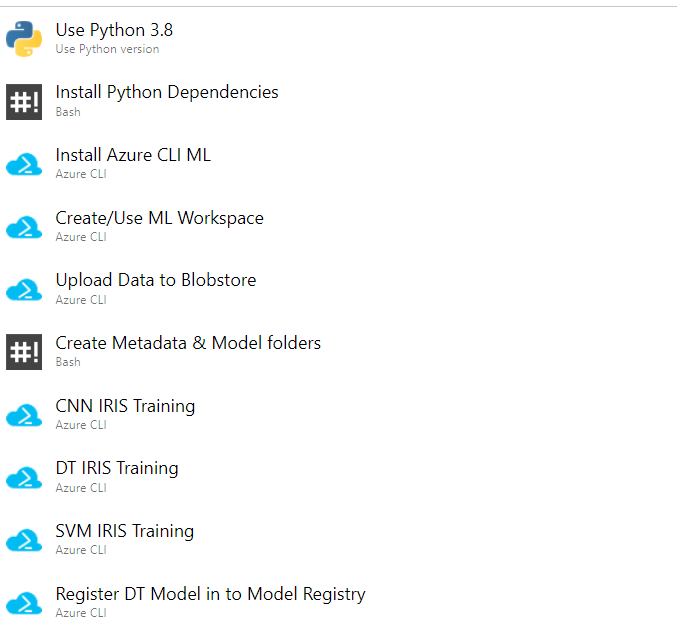

I am in the process of creating a pipeline for continuous training of several ML models using Azure DevOps.

Within the pipeline, I use the Azure CLI with the Azure ML extension to submit a training job for each of the ML models. I have attached an image below to give you an idea of how the pipeline looks.
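For readers unfamiliar with this setup, the "one job per model" step boils down to one `az ml job create --file ...` call per model. The sketch below only builds those command lines. It assumes (hypothetically) that each model has its own job YAML at `jobs/<model>.yml`, and the model, resource group and workspace names are placeholders.

```python
import shlex

# Hypothetical model names; each is assumed to have a job spec at jobs/<model>.yml.
MODELS = ["model_a", "model_b", "model_c"]


def build_submit_commands(models, resource_group, workspace, job_dir="jobs"):
    """Return one `az ml job create` argument list per model.

    The job_dir/<model>.yml layout is an assumption for illustration only.
    """
    commands = []
    for model in models:
        commands.append([
            "az", "ml", "job", "create",
            "--file", f"{job_dir}/{model}.yml",
            "--resource-group", resource_group,
            "--workspace-name", workspace,
        ])
    return commands


if __name__ == "__main__":
    # Print shell-quoted lines that an Azure CLI pipeline step could run.
    for cmd in build_submit_commands(MODELS, "my-rg", "my-ws"):
        print(shlex.join(cmd))
```

Keeping one job spec per model means each model can be submitted, retried, or debugged on its own.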

The main problem I faced was that several dependency issues came up when I tried to use TensorFlow. So I decided to use a curated base image that comes with TensorFlow 2.7.0 pre-installed and then add the rest of the libraries I need on top of it. The base image is `mcr.microsoft.com/azureml/curated/tensorflow-2.7-ubuntu20.04-py38-cuda11-gpu:23`.

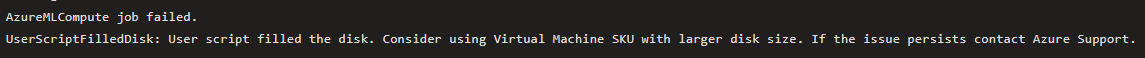
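"Base image plus extra libraries" can be expressed as a two-line Dockerfile. The sketch below generates one. It assumes the environment is built from a Dockerfile (rather than from the curated image plus a conda file), and the package list is hypothetical. `--no-cache-dir` is a standard pip flag that keeps pip's download cache out of the image layer, which trims the image size a little.

```python
# Curated base image named in the question.
BASE_IMAGE = (
    "mcr.microsoft.com/azureml/curated/"
    "tensorflow-2.7-ubuntu20.04-py38-cuda11-gpu:23"
)

# Hypothetical extra libraries; replace with what the training code needs.
EXTRA_PACKAGES = ["pandas", "scikit-learn"]


def render_dockerfile(base_image, packages):
    """Build Dockerfile text that layers extra pip packages on a base image."""
    lines = [f"FROM {base_image}"]
    if packages:
        # --no-cache-dir stops pip from keeping downloaded wheels in the layer.
        lines.append("RUN pip install --no-cache-dir " + " ".join(packages))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_dockerfile(BASE_IMAGE, EXTRA_PACKAGES), end="")
```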

But now when I run the pipeline, I get an error saying that the disk is full.

To mitigate the issue, I tried a larger compute instance: I was initially using 2 cores, then upgraded to 4, and the error still persists. FYI, the data I am using is small, since for now I am only trying to build the architecture of the pipeline.

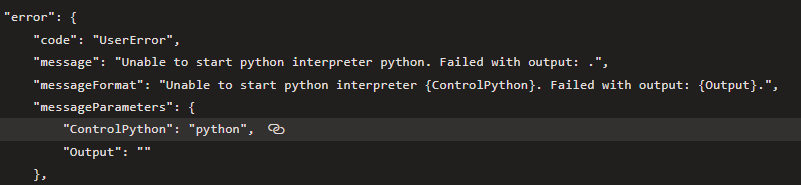
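Because the data is small, one way to narrow this down is to log how much disk the training script actually sees when it starts. The sketch below uses the standard library's `shutil.disk_usage`. It only reports space visible to the running script, so if the error comes from an earlier step (for example, building or pulling the image), this check would not show it. The choice of paths to inspect is an assumption.

```python
import shutil
import tempfile

GIB = 1024 ** 3


def disk_report(paths):
    """Return total/free space in GiB for each path that can be inspected."""
    report = {}
    for path in paths:
        try:
            usage = shutil.disk_usage(path)
        except OSError:
            # Path missing or inaccessible on this node; skip it.
            continue
        report[path] = {
            "total_gib": round(usage.total / GIB, 2),
            "free_gib": round(usage.free / GIB, 2),
        }
    return report


if __name__ == "__main__":
    # "/" is the filesystem root; gettempdir() is where many libraries write
    # scratch files. Both are only starting points for the investigation.
    for path, info in disk_report(["/", tempfile.gettempdir()]).items():
        print(f"{path}: {info['free_gib']} GiB free of {info['total_gib']} GiB")
```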

I also created an Azure virtual machine and attached it as a compute target for training the model, but then I got a different error.

What should I do now? How can I get past this issue? Is there a better way to train the models than this? My aim is to train the models in the CI pipeline and deploy the best one in a release pipeline.
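Picking "the best one" for the release pipeline needs a rule for comparing models. A minimal sketch, assuming each job's metrics have already been collected into a dict keyed by model name (this shape, the metric names, and the demo values are all hypothetical placeholders, not real results):

```python
def select_best(results, metric, higher_is_better=True):
    """Return the name of the model with the best value of `metric`.

    `results` maps model name -> dict of metrics (hypothetical shape).
    Models that did not report the metric are ignored.
    """
    candidates = {
        name: metrics[metric]
        for name, metrics in results.items()
        if metric in metrics
    }
    if not candidates:
        raise ValueError(f"no model reported metric {metric!r}")
    pick = max if higher_is_better else min
    return pick(candidates, key=candidates.get)


if __name__ == "__main__":
    # Placeholder values for illustration only.
    demo = {
        "model_a": {"accuracy": 0.81},
        "model_b": {"accuracy": 0.86},
        "model_c": {"loss": 0.40},
    }
    print(select_best(demo, "accuracy"))
```

Set `higher_is_better=False` for metrics such as loss, where lower values are better.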