Hello @Smitha Krishna Murthy,

Thanks for the question and for using the MS Q&A platform.

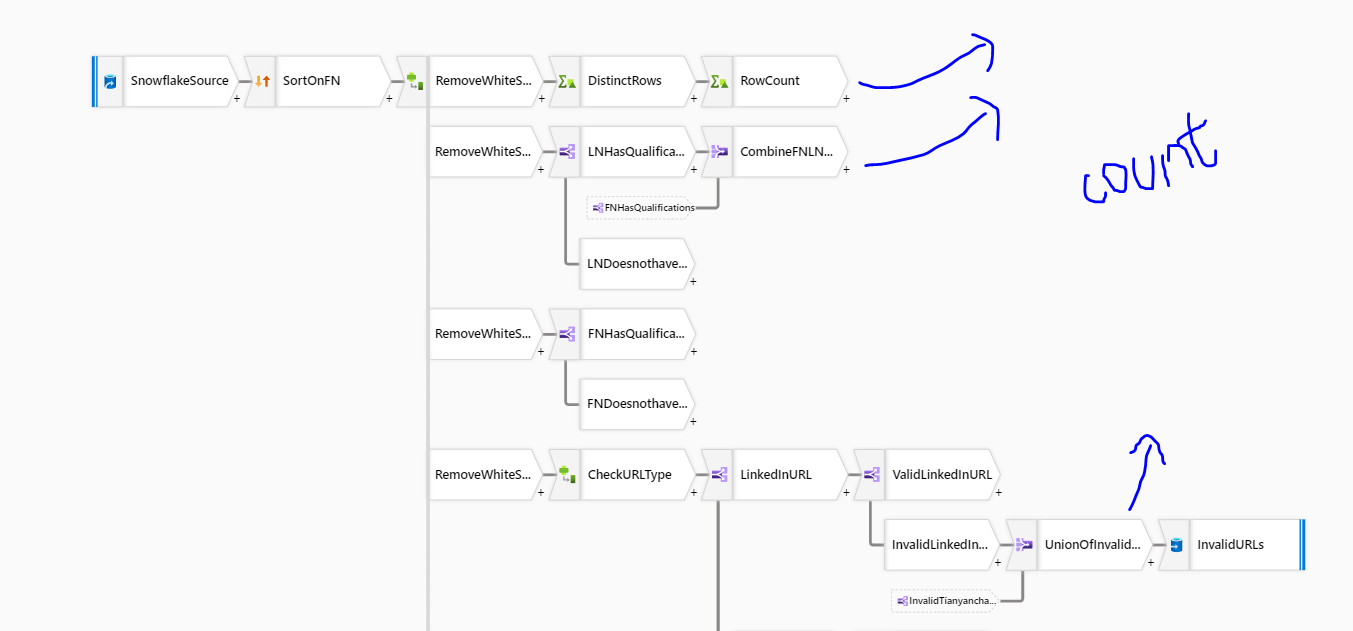

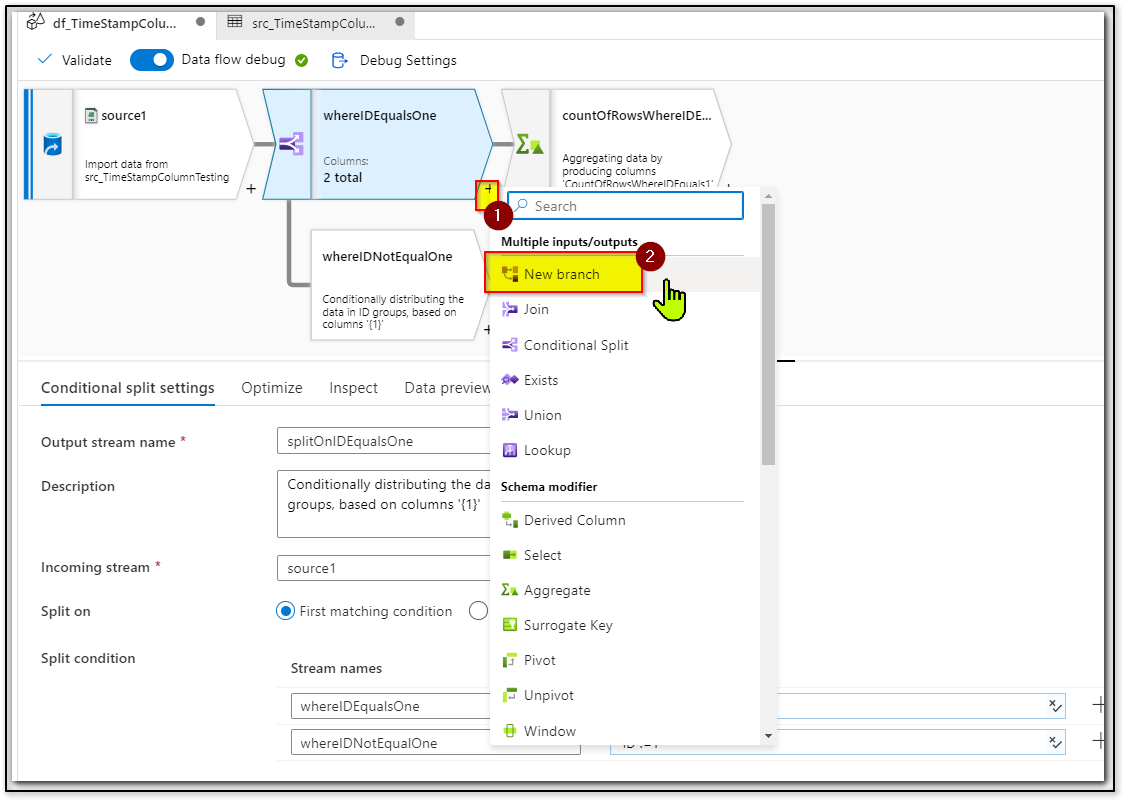

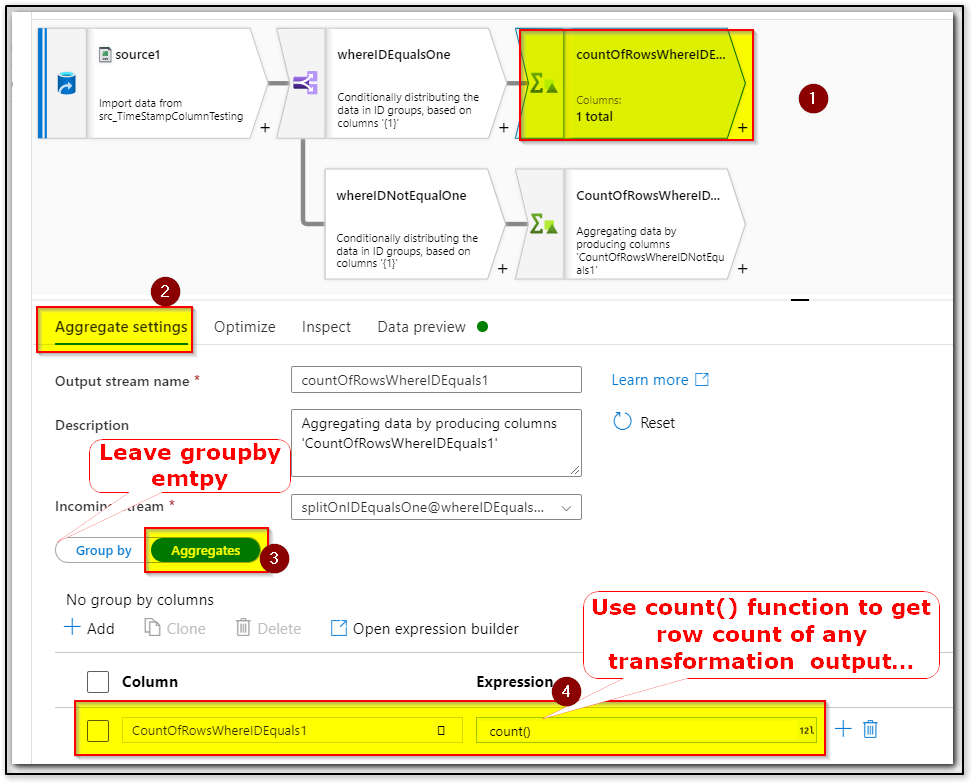

As I understand it, you would like to get the record count of selected transformations (streams) and log it to a sink of your choice. To get the row count of a transformation's output, create a new branch (which is simply a new stream) from that transformation and add an Aggregate transformation to it. Leave the Group by property empty, as it is optional. Then, in the aggregate settings, add a column named rowCountOfX (just an example name) and enter count() in the expression box. With no Group by columns, the Aggregate collapses the whole stream into a single row that holds the total count.

Please see the screenshots above for an example.
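Data flows are configured in the ADF designer, not in Python. The sketch below is only a plain-Python analogy of what the branch plus Aggregate does. The function names `transformation_x_output` and `aggregate_count` and the sample rows are hypothetical. The sketch shows that a `count()` aggregate with an empty group-by produces exactly one row, while adding group-by columns produces one count row per key.

```python
from collections import Counter


def transformation_x_output():
    # Hypothetical rows coming out of some transformation "X" in the data flow.
    return [
        {"id": 1, "region": "east"},
        {"id": 2, "region": "west"},
        {"id": 3, "region": "east"},
    ]


def aggregate_count(rows, group_by=None, column_name="rowCountOfX"):
    """Rough analogy of an Aggregate transformation with a single count() column.

    No group-by columns: the whole stream collapses into one row.
    With group-by columns: one row per distinct key, each with its count.
    """
    rows = list(rows)
    if not group_by:
        return [{column_name: len(rows)}]
    counts = Counter(tuple(r[c] for c in group_by) for r in rows)
    return [{**dict(zip(group_by, key)), column_name: n} for key, n in counts.items()]


# "New branch": the same stream feeds both the main path and the count path.
stream = transformation_x_output()
main_path = stream                    # continues to the original sink unchanged
count_path = aggregate_count(stream)  # branch used only to log the row count

print(count_path)  # [{'rowCountOfX': 3}]
print(aggregate_count(stream, group_by=["region"]))
```

Keeping Group by empty is what makes the branch yield a single summary row, which is convenient to write to a log table.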

Once you have the row count, add a Sink transformation that points to your desired data store and map the count column (rowCountOfX in this example) to the column where the row count should be stored.
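Continuing the plain-Python analogy (this is not ADF code), here is a minimal sketch of the sink step. The single count row is written to a CSV "data store", and the aggregate column is renamed on the way out. This is roughly what the Sink transformation's column mapping does. The sink column name `RowCount`, the helper `write_to_sink`, and the in-memory `io.StringIO` target are all hypothetical stand-ins for your real store.

```python
import csv
import io

# Output of the count branch: one row, as produced by an Aggregate with no group-by.
count_rows = [{"rowCountOfX": 3}]

# Hypothetical mapping from the aggregate's output column to the sink's column.
column_mapping = {"rowCountOfX": "RowCount"}


def write_to_sink(rows, mapping, target):
    """Write rows to a CSV target, renaming columns according to mapping."""
    writer = csv.DictWriter(target, fieldnames=list(mapping.values()))
    writer.writeheader()
    for row in rows:
        writer.writerow({sink_col: row[src_col] for src_col, sink_col in mapping.items()})


sink = io.StringIO()  # stands in for the real data store (file, table, etc.)
write_to_sink(count_rows, column_mapping, sink)
print(sink.getvalue())
```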

To learn more about the Aggregate transformation, please refer to this video: Aggregate Transformation in Mapping Data Flow in Azure Data Factory

Hope this helps. Please let us know if you have any further queries.
