I've found and tested a workaround. I haven't found a way to pass a map parameter from a pipeline, but I can output an array from the first data flow with `add(collect(keys), collect(values))`, use an array parameter in the inner pipeline and data flow, and then, in the inner data flow, convert the array back to a map with `keyValues(slice($array, 1, size($array)/2), slice($array, (size($array)/2)+1))`. This means I don't have to look up the map again in each data flow that uses it, but it is still wasteful because the map has to be rebuilt from the array in every data flow. I'm still interested in knowing whether there's a better solution.
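
To make the index arithmetic of the workaround concrete, here is a minimal Python sketch that mimics the round trip outside ADF. The helper names are hypothetical. It assumes ADF's `slice(array, from, length)` is 1-based and runs to the end when the length is omitted, that the array has an even length (all keys followed by all values), and that the two `collect()` calls return keys and values in matching row order, which the workaround relies on.

```python
# Hypothetical helpers that mimic the ADF data flow expressions in the workaround.
# Assumption: ADF slice() uses a 1-based start position; Python lists are 0-based,
# so the start index is shifted by one.

def adf_slice(array, start, length=None):
    """Mimic slice(array, start[, length]) with a 1-based start position."""
    begin = start - 1
    if length is None:
        return array[begin:]  # omitted length: take everything to the end
    return array[begin:begin + length]


def flatten_map(m):
    """Mimic add(collect(keys), collect(values)): all keys, then all values."""
    return list(m.keys()) + list(m.values())


def rebuild_map(array):
    """Mimic keyValues(slice($array, 1, size/2), slice($array, size/2 + 1))."""
    half = len(array) // 2  # assumes an even-length array
    keys = adf_slice(array, 1, half)
    values = adf_slice(array, half + 1)
    return dict(zip(keys, values))


original = {"key1": 1, "key2": 2}
flat = flatten_map(original)
print(flat)               # ['key1', 'key2', 1, 2]
print(rebuild_map(flat))  # {'key1': 1, 'key2': 2}
```

The first half of the flattened array supplies the keys and the second half the values, which is why the second `slice` starts at `size/2 + 1` and omits the length.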

How to pass a map that is output from a data flow activity as a parameter into another activity

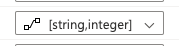

I have a pipeline structured like activity1 -> activity2. Activity1 runs a data flow that outputs a map. Oddly, in the data flow's data preview it looks like a map, `{ "key1": 1, "key2": 2 }`, but in the activity1 output and the activity2 input it looks like an array, `[{ "key": "key1", "value": 1 }, { "key": "key2", "value": 2 }]`. Activity2 is a Run Pipeline activity that runs a pipeline containing a data flow with a `Map[string, integer]` parameter. Activity2 has a parameter that I've tried with both Type = array and Type = object. In both cases I get `com.microsoft.dataflow.broker.InvalidParameterTypeException: Invalid type for parameter` for the map parameter.
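
The two shapes described above carry the same information. As a minimal Python sketch (the helper names are hypothetical, and it assumes the activity output is exactly the list of `{"key": ..., "value": ...}` objects quoted above), converting between them looks like this. It does not work around the `InvalidParameterTypeException`; it only shows that the array form is a different serialization of the same map.

```python
import json

# Assumption: the activity output is a JSON array of {"key": ..., "value": ...}
# objects, as quoted in the question. Helper names are hypothetical.


def entries_to_map(entries):
    """Turn [{"key": k, "value": v}, ...] into {k: v, ...}."""
    return {entry["key"]: entry["value"] for entry in entries}


def map_to_entries(m):
    """Turn {k: v, ...} back into [{"key": k, "value": v}, ...]."""
    return [{"key": k, "value": v} for k, v in m.items()]


activity_output = json.loads(
    '[{ "key": "key1", "value": 1 }, { "key": "key2", "value": 2 }]'
)
as_map = entries_to_map(activity_output)
print(as_map)  # {'key1': 1, 'key2': 2}

# Round-tripping back to the entry list reproduces the original output.
assert map_to_entries(as_map) == activity_output
```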

How can I pass a map that is output from a data flow activity as a parameter to a Run Pipeline activity whose pipeline runs a data flow activity? I thought about outputting two arrays from the first activity and using a data flow expression to turn them into a map, but I can't do that because data flow expressions don't have access to activity outputs or pipeline variables.

The data flow uses the `keyValues` function to create the map. My only guess at the root cause is that `keyValues` doesn't actually output a map, but an array.

Here's a rough diagram of the pipeline. I've drawn the map as it appears in the data flow output and input. As I said before, I'm using the `keyValues` function in the data flow in the outer pipeline to generate the "map".

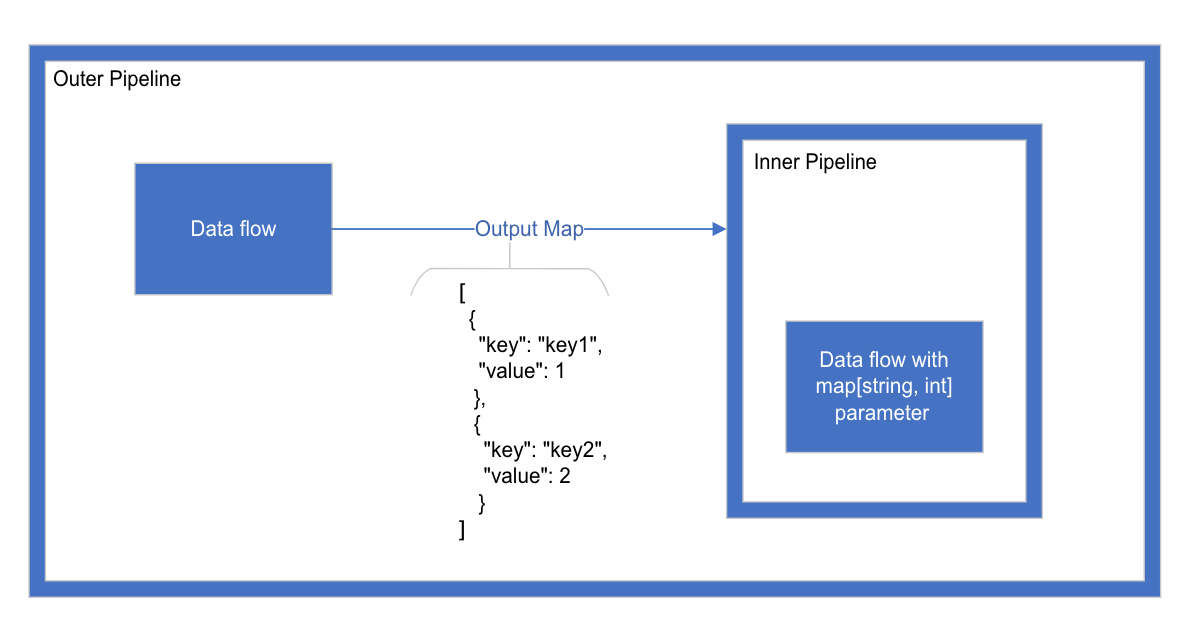

The first data flow's output does show the value as a map:

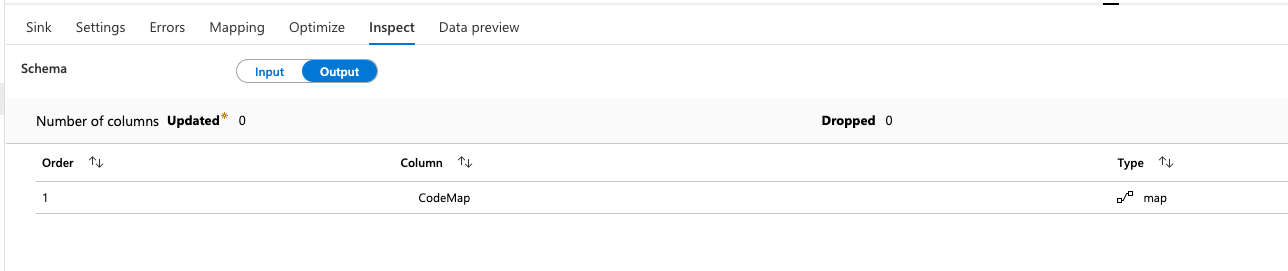

And the parameter type in the second data flow is `map[string,integer]`.