@Chand, Anupam SBOBNG-ITA/RX Thanks for reaching out to Microsoft Q&A. I understand that you want to allow access to your Azure Storage account from Azure Databricks, but Azure Databricks is not on the storage firewall's list of trusted Microsoft services.

Please refer to this similar thread: https://stackoverflow.com/questions/54018584/azure-databricks-accessing-blob-storage-behind-firewall

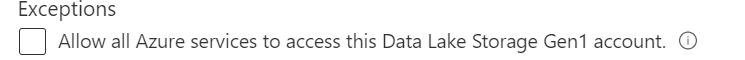

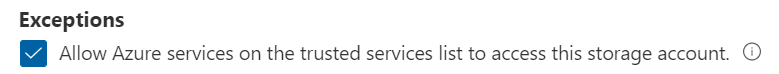

Yes, Azure Databricks does not count as a trusted Microsoft service. You can check which trusted Microsoft services are supported by the storage account firewall in the storage firewall documentation.

Here are two suggestions:

- Download the Azure datacenter IP ranges, filter them to the region where your Azure Databricks workspace is located, and add those IP ranges to the allow list in the storage account firewall.

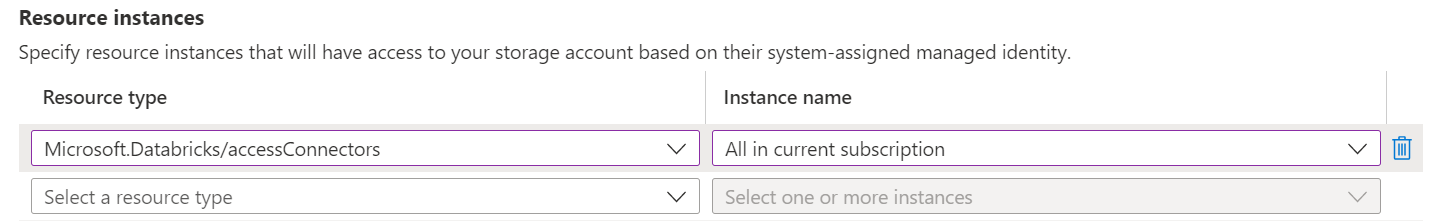
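A minimal sketch of the filtering step, assuming you have downloaded the "Azure IP Ranges and Service Tags (Public Cloud)" JSON file, where each regional tag is named `AzureCloud.<region>` and lists its prefixes under `properties.addressPrefixes`. The sample data below is hypothetical (documentation ranges, not real Azure addresses), and the function name `storage_ip_rules` is illustrative. It keeps only IPv4 prefixes and expands `/31` and `/32` prefixes into individual addresses, because the storage firewall documentation states that IP rules accept public IPv4 only and do not accept those small prefix sizes; please verify these limits against the current documentation.

```python
import ipaddress
import json

# Hypothetical excerpt of the Service Tags JSON file. The prefixes are
# documentation ranges, NOT real Azure datacenter addresses.
SAMPLE_SERVICE_TAGS = """
{
  "values": [
    {"name": "AzureCloud.westeurope",
     "properties": {"region": "westeurope",
                    "addressPrefixes": ["203.0.113.0/24", "198.51.100.7/32",
                                        "192.0.2.10/31", "2001:db8::/32"]}},
    {"name": "AzureCloud.eastus",
     "properties": {"region": "eastus",
                    "addressPrefixes": ["198.51.100.128/25"]}}
  ]
}
"""


def storage_ip_rules(service_tags: dict, region: str) -> list[str]:
    """Return storage-firewall-friendly IP rule values for AzureCloud.<region>.

    Assumption: storage IP rules take IPv4 only, and /31 or /32 prefixes
    must be entered as individual addresses.
    """
    tag_name = f"AzureCloud.{region}"
    rules: list[str] = []
    for entry in service_tags["values"]:
        if entry["name"] != tag_name:
            continue  # not the region we are looking for
        for prefix in entry["properties"]["addressPrefixes"]:
            net = ipaddress.ip_network(prefix)
            if net.version != 4:
                continue  # skip IPv6 prefixes
            if net.prefixlen >= 31:
                # Expand /31 and /32 prefixes into single-address rules.
                rules.extend(str(ip) for ip in net)
            else:
                rules.append(str(net))
    return rules


if __name__ == "__main__":
    tags = json.loads(SAMPLE_SERVICE_TAGS)
    print(storage_ip_rules(tags, "westeurope"))
```

Keep in mind that the published ranges change over time and a regional list can be long, so the firewall rules would need to be refreshed periodically.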

- Deploy Azure Databricks in your own Azure Virtual Network (Preview), then allow the Databricks subnets of that VNet in the storage account firewall using virtual network rules. See "Configure Azure Storage firewalls and virtual networks" for details. You can also use network security groups (NSGs) to restrict inbound and outbound traffic for this VNet. Note: this option requires deploying Azure Databricks into your own VNet.
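For the VNet option, a storage account's firewall settings are expressed in the `networkAcls` property of the `Microsoft.Storage/storageAccounts` resource. The sketch below builds that object so that everything is denied except traffic from the two subnets a VNet-injected Databricks workspace uses. The subscription ID, resource group, VNet and subnet names are hypothetical placeholders, and the helper functions are illustrative, not an official SDK. Also note that a virtual network rule only takes effect if the `Microsoft.Storage` service endpoint is enabled on those subnets.

```python
import json

# Hypothetical identifiers: replace with your own subscription, resource
# group, VNet, and the two subnets used by the Databricks workspace.
SUBSCRIPTION_ID = "00000000-0000-0000-0000-000000000000"
RESOURCE_GROUP = "my-network-rg"
VNET_NAME = "databricks-vnet"
DATABRICKS_SUBNETS = ["public-subnet", "private-subnet"]


def subnet_id(subscription: str, resource_group: str, vnet: str, subnet: str) -> str:
    """Build the ARM resource ID of a subnet."""
    return (
        f"/subscriptions/{subscription}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Network/virtualNetworks/{vnet}/subnets/{subnet}"
    )


def network_acls(subnet_ids: list[str], bypass: str = "AzureServices") -> dict:
    """Return a networkAcls object that denies by default and allows
    traffic only from the given subnets."""
    return {
        "bypass": bypass,  # trusted Microsoft services; Databricks is not one of them
        "defaultAction": "Deny",  # block everything not explicitly allowed
        "ipRules": [],
        "virtualNetworkRules": [
            {"id": sid, "action": "Allow"} for sid in subnet_ids
        ],
    }


if __name__ == "__main__":
    ids = [subnet_id(SUBSCRIPTION_ID, RESOURCE_GROUP, VNET_NAME, s)
           for s in DATABRICKS_SUBNETS]
    print(json.dumps(network_acls(ids), indent=2))
```

The printed JSON could be placed in an ARM template or compared with the current settings of the storage account; the same rules can also be added through the Azure portal under the storage account's networking settings.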

Hope this helps. Please let us know if you have any more questions and we will be glad to assist you further. Thank you!
