Dear All,

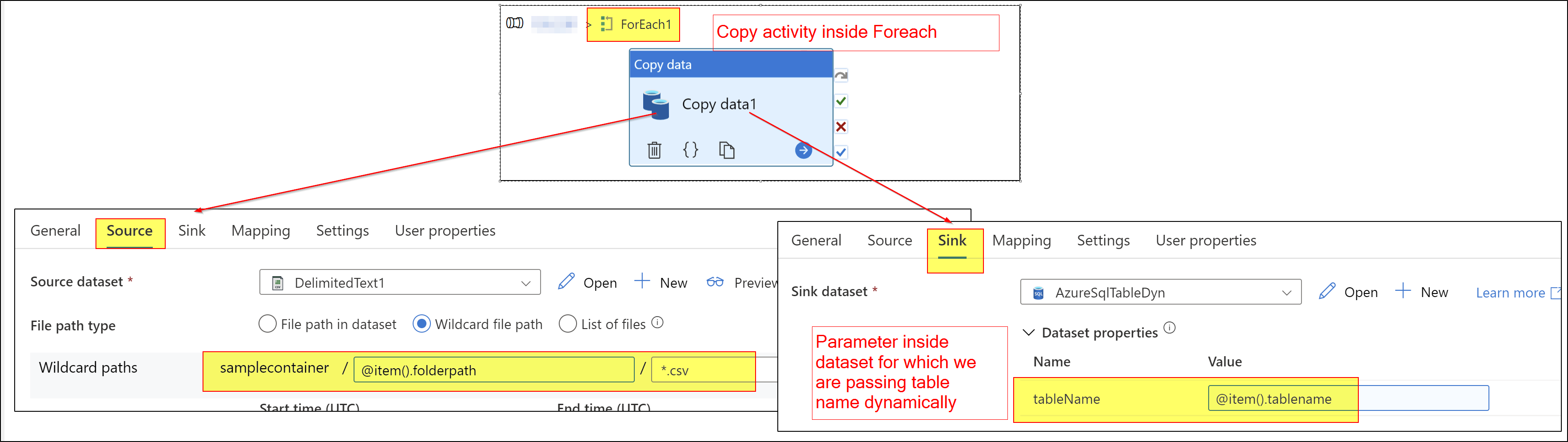

My project structure: the source system is CRM (Dataverse), and its data is loaded into ADLS Gen2 using Dataverse / Synapse Link. After that, we plan to load the data into an on-premises SQL database. Today I use one Copy activity per table, which means every table needs its own separate Copy activity. Instead, I would like to optimize this so that a single Copy activity loads all the tables reliably. Keep in mind that each table has its own folder in the source, so the source path is different for every table.

Example: source folder (testA, containing multiple partitioned CSV files) → Copy activity whose source dataset points to testA with a wildcard file path → sink: on-premises SQL, using the upsert write behavior to load the data into the SQL DB.

I have 15 tables, so I have currently created 15 Copy activities to load them. Instead, I would like to make the load dynamic, so that one pipeline loads all 15 tables, each from its own source path. How can we handle this, and what are the possible solutions? Kindly suggest.

If tomorrow I have to load another 100 tables, we do not want to create a new Copy activity and related objects for each one. Please help me with this.
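To make the "dynamic" requirement concrete: a commonly used pattern in Azure Data Factory / Synapse pipelines is a Lookup activity that returns a list of tables, a ForEach activity over that list, and one Copy activity whose source and sink datasets take parameters (folder path, table name). The Python sketch below only builds that per-table metadata list; it does not call any Azure API. The container name `dataverse-container`, the `dbo` schema, the `Id` key column and all field names are hypothetical, and it assumes the SQL table name matches the source folder name.

```python
import json

# Hypothetical control list: table name -> key column(s) used for the upsert.
# In a real pipeline this would more likely live in a SQL control table or a
# JSON config file that a Lookup activity reads.
TABLES = {
    "testA": ["Id"],
    "testB": ["Id"],
}


def build_copy_items(tables, container="dataverse-container", schema="dbo"):
    """Return one metadata item per table for a ForEach loop to iterate over."""
    items = []
    for name, keys in tables.items():
        items.append({
            "sourceContainer": container,  # hypothetical ADLS Gen2 container
            "sourceFolder": name,          # one folder per table, e.g. testA/
            "wildcardFileName": "*.csv",   # matches the partitioned CSV files
            "sinkSchema": schema,          # hypothetical target schema
            "sinkTable": name,             # assumes SQL table name == folder name
            "upsertKeys": keys,            # key columns needed for the upsert
        })
    return items


if __name__ == "__main__":
    # Adding table 16 (or 116) means adding one entry here, not a new Copy activity.
    print(json.dumps(build_copy_items(TABLES), indent=2))
```

Inside the ForEach, the parameterized datasets would then read the current entry with expressions such as `@item().sourceFolder` and `@item().sinkTable`. The key columns are kept per table because an upsert needs them, and they usually differ from table to table.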
