Hello @Yuji Masaoka,

On inspecting the requirements.txt file, I see that randit is not a valid Python library on the PyPI repository.

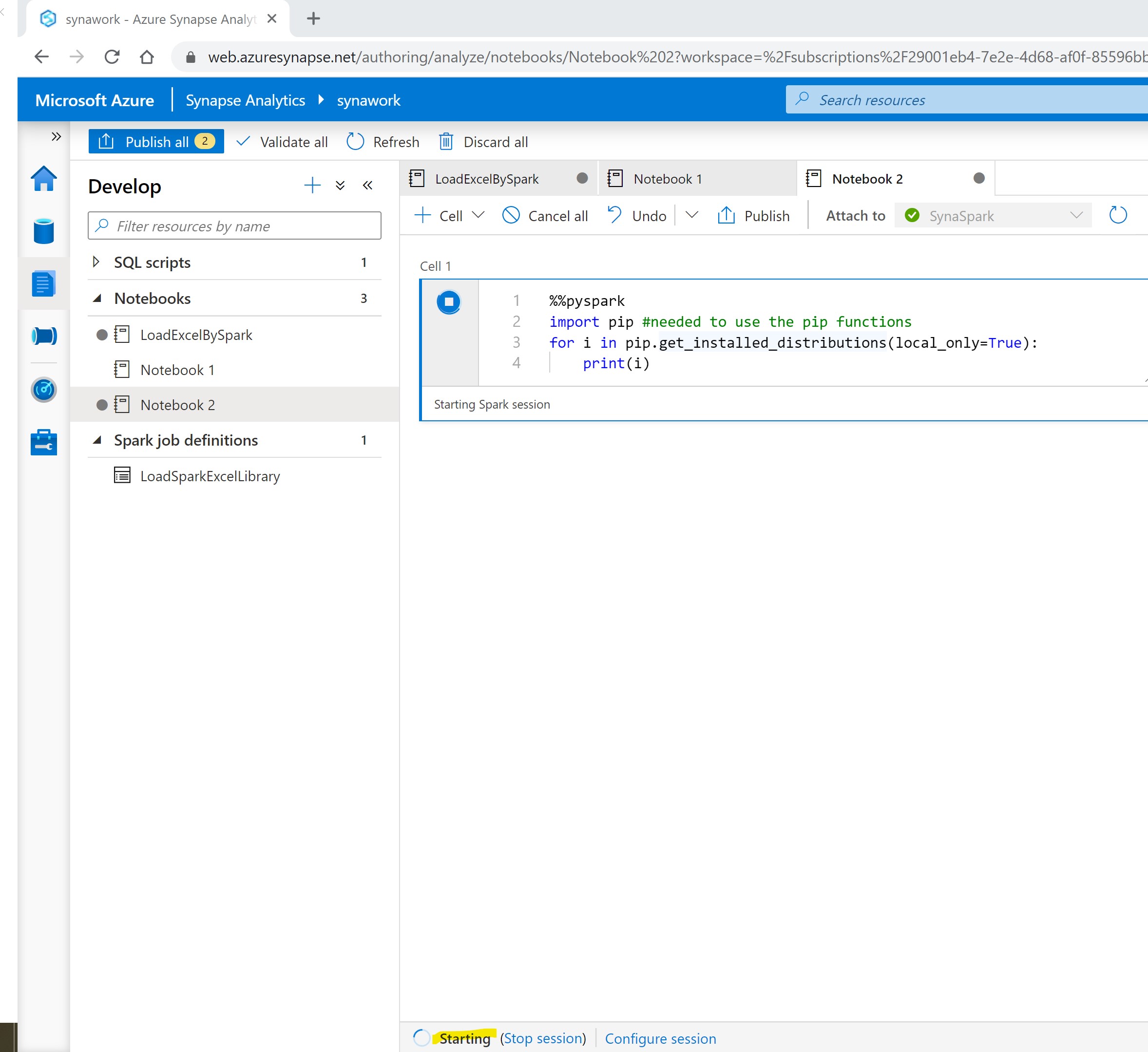

I modified the requirements.txt file as follows, and it worked without any issues.

pymongo==2.8.1

aenum==2.1.2

backports-abc==0.5

bson==0.5.10

Also note that the pip freeze format expects each line to list a valid PyPI package name along with an exact version (https://learn.microsoft.com/en-us/azure/synapse-analytics/spark/apache-spark-azure-portal-add-libraries#requirements-format).
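
If it helps, here is a minimal Python sketch for checking a requirements file locally before uploading it. It only checks that each line has the `name==version` shape; it does not confirm that the package actually exists on PyPI. The function name `find_unpinned`, the regular expression, and the sample lines (including the unpinned `randit` entry) are my own assumptions for illustration, not something taken from the Synapse documentation.

```python
import re

# Assumed pattern: a PyPI-style package name, "==", then an exact version string.
PINNED = re.compile(r"^[A-Za-z0-9][A-Za-z0-9._-]*==[A-Za-z0-9][A-Za-z0-9.!+_-]*$")


def find_unpinned(lines):
    """Return (line_number, text) pairs for entries that are not 'name==version'."""
    problems = []
    for number, raw in enumerate(lines, start=1):
        text = raw.strip()
        if not text or text.startswith("#"):
            continue  # skip blank lines and comments
        if not PINNED.match(text):
            problems.append((number, text))
    return problems


# Hypothetical sample input; replace with the lines of your own requirements.txt.
sample = ["pymongo==2.8.1", "", "aenum==2.1.2", "randit", "bson==0.5.10"]
print(find_unpinned(sample))
```

To check a real file, you could pass `open("requirements.txt").read().splitlines()` instead of `sample`. An empty list means every entry is pinned to an exact version.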

Hope this helps. Do let us know if you have any further queries.

----------------------------------------------------------------------------------------

Do click on "Accept Answer" and upvote the post that helps you; this can be beneficial to other community members.