Hi @NIKHIL KUMAR ,

Thanks for posting this question on the Microsoft Q&A platform and for using Azure services.

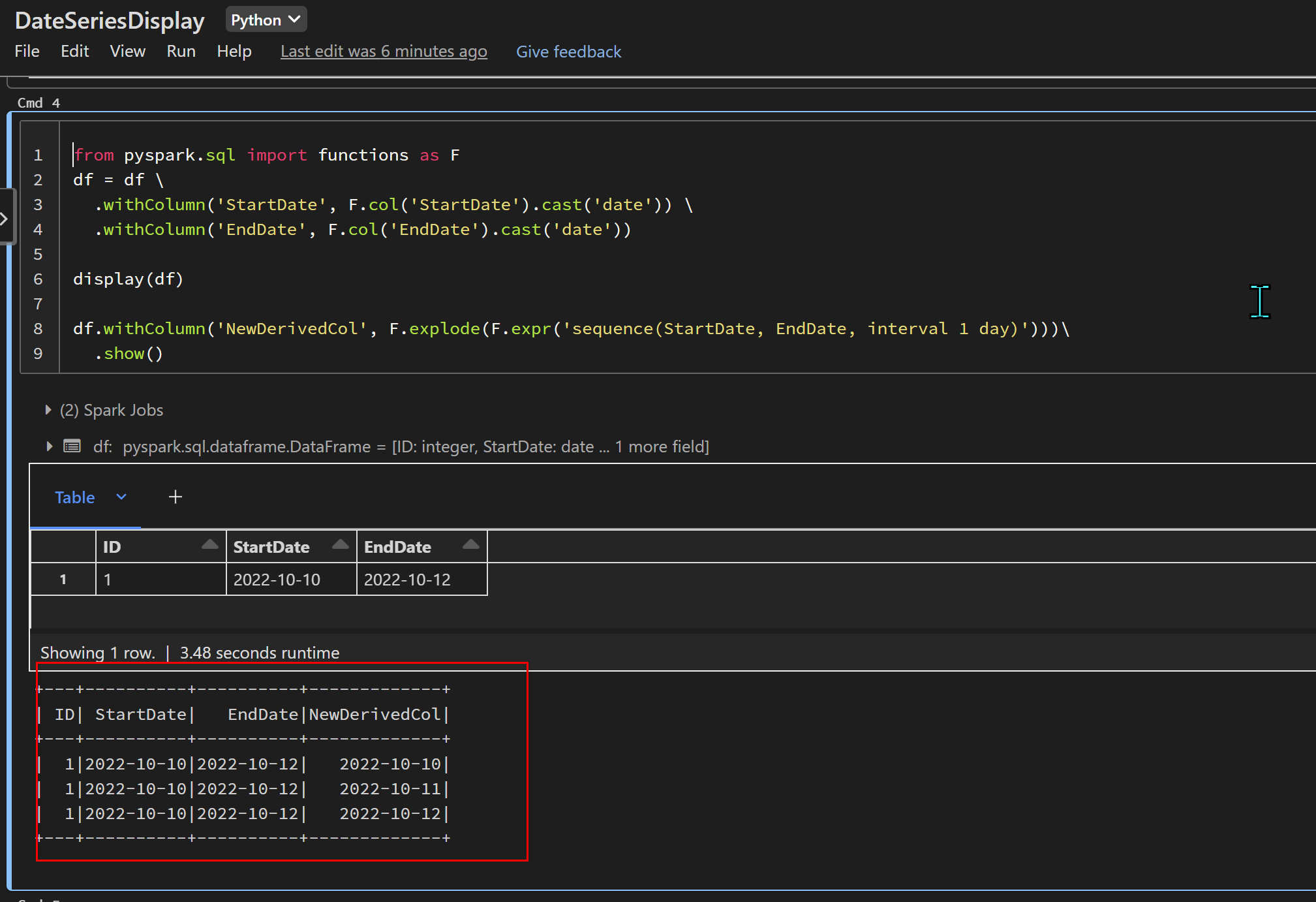

For the input dataset shown above, the required output adds a new column that holds the series of dates between StartDate and EndDate. In Databricks, you can produce it with PySpark SQL functions:

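```python
from pyspark.sql import functions as F

# df is the input DataFrame with StartDate and EndDate columns (shown in the image above).
# Cast both columns to DateType so sequence() can step through them by day.
df = (
    df
    .withColumn('StartDate', F.col('StartDate').cast('date'))
    .withColumn('EndDate', F.col('EndDate').cast('date'))
)

display(df)  # Databricks notebook helper for rendering a DataFrame

# Build an array of every date from StartDate to EndDate (inclusive),
# then explode it so each date becomes its own row in NewDerivedCol.
df.withColumn(
    'NewDerivedCol',
    F.explode(F.expr('sequence(StartDate, EndDate, interval 1 day)'))
).show()
```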

Here we use the `sequence` function, which generates an array of elements from start to stop (inclusive), incrementing by step. The returned elements have the same type as the argument expressions.

Supported types are byte, short, integer, long, date, timestamp.

Here it creates an array containing every date from StartDate to EndDate, both inclusive.
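To make the inclusive start/stop/step behaviour concrete, here is a plain-Python sketch of what `sequence` returns. It is only an illustration, not Spark's implementation; the helper name `sequence_sketch` is hypothetical, and it covers the integer and date cases by accepting an int step or a `timedelta` step.

```python
from datetime import date, timedelta


def sequence_sketch(start, stop, step):
    """Hypothetical plain-Python illustration of Spark's sequence(start, stop, step).

    Works for ints (int step) and dates (timedelta step). Both ends are inclusive.
    """
    zero = step - step  # 0 for an int step, timedelta(0) for a timedelta step
    if step == zero:
        raise ValueError("step must be non-zero")
    # The step must move start towards stop; Spark also rejects such boundaries.
    if (stop > start and step < zero) or (stop < start and step > zero):
        raise ValueError("step direction does not move start towards stop")
    out = []
    current = start
    while (step > zero and current <= stop) or (step < zero and current >= stop):
        out.append(current)
        current = current + step
    return out


print(sequence_sketch(date(2023, 1, 1), date(2023, 1, 3), timedelta(days=1)))
print(sequence_sketch(1, 10, 3))
```

The stop value is included whenever the step lands exactly on it, which is why a one-day step between two dates yields every date in the range.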

The array is then passed to the `explode` function, which returns a new row for each element in the given array.
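As a rough picture of what `explode(sequence(StartDate, EndDate, interval 1 day))` does to each row, the plain-Python sketch below copies every input row once per date and stores that date in `NewDerivedCol`. It is an illustration only, not Spark code: the function name `explode_dates` and the `Id` column in the usage are hypothetical, and it assumes StartDate <= EndDate on every row (with an explicit positive one-day step, Spark rejects rows where the start is after the end).

```python
from datetime import date, timedelta


def explode_dates(rows, start_key="StartDate", end_key="EndDate", out_key="NewDerivedCol"):
    """Hypothetical sketch of explode(sequence(StartDate, EndDate, interval 1 day)).

    Assumes row[start_key] <= row[end_key]. Each input row yields one output row
    per date in the inclusive range; all other columns are copied unchanged.
    """
    result = []
    for row in rows:
        current = row[start_key]
        while current <= row[end_key]:
            result.append({**row, out_key: current})  # copy the row, add the date
            current += timedelta(days=1)
    return result


rows = [
    {"Id": 1, "StartDate": date(2023, 1, 1), "EndDate": date(2023, 1, 3)},
    {"Id": 2, "StartDate": date(2023, 2, 1), "EndDate": date(2023, 2, 1)},
]
for r in explode_dates(rows):
    print(r)
```

Here the first row expands to three rows (1, 2 and 3 January) and the second to a single row, for four output rows in total.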

Reference links:

- pyspark.sql.functions.explode
- pyspark.sql.functions.sequence

Hope this helps. Please let us know if you have any further queries.
