Hi, I thought I would follow up.

Mapping Data Flow inline Delta sources and sinks now read from and write to Delta tables that have the following properties:

| Key | Value |
|---|---|
| delta.minReaderVersion | 1 |
| delta.minWriterVersion | 3 |

This was not the case in mid-August, so it is great to see it finally resolved.

This means Delta tables with table constraints (CHECK constraints, which require writer version 3) now work.
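The compatibility rule from this testing can be written down as a small check. This is only a sketch: the limits (reader version 1, writer version 3) are inferred from the results described in this post, not from any documented limit, and `inline_delta_compatible` is a hypothetical helper that takes table properties as string key/value pairs, like those in the table above.

```python
# Hypothetical limits inferred from the testing in this thread, not from
# documentation: reader version 1 and writer version 3 work, writer 4 does not.
MAX_READER_VERSION = 1
MAX_WRITER_VERSION = 3


def inline_delta_compatible(props: dict) -> bool:
    """Return True if the table's protocol versions are within the observed limits.

    `props` maps Delta table property names to string values.
    Missing keys fall back to the Delta defaults (reader 1, writer 2).
    """
    reader = int(props.get("delta.minReaderVersion", "1"))
    writer = int(props.get("delta.minWriterVersion", "2"))
    return reader <= MAX_READER_VERSION and writer <= MAX_WRITER_VERSION
```

For example, `{"delta.minReaderVersion": "1", "delta.minWriterVersion": "3"}` passes the check, while the same table with `"delta.minWriterVersion": "4"` does not.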

Operation on target Data flow1 failed: {"StatusCode":"DFExecutorUserError","Message":"Job failed due to reason: at Sink 'sink1': Cannot write to table with delta.enableChangeDataFeed set. Change data feed from Delta is not yet available.","Details":"org.apache.spark.sql.AnalysisException: Cannot write to table with delta.enableChangeDataFeed set. Change data feed from Delta is not yet available.\n\tat org.apache.spark.sql.delta.DeltaErrors$.cdcWriteNotAllowedInThisVersion(DeltaErrors.scala:407)\n\tat org.apache.spark.sql.delta.files.TransactionalWrite.writeFiles(TransactionalWrite.scala:156)\n\tat org.apache.spark.sql.delta.files.TransactionalWrite.writeFiles$(TransactionalWrite.scala:150)\n\tat org.apache.spark.sql.delta.OptimisticTransaction.writeFiles(OptimisticTransaction.scala:84)\n\tat org.apache.spark.sql.delta.files.TransactionalWrite.writeFiles(TransactionalWrite.scala:143)\n\tat org.apache.spark.sql.delta.files.TransactionalWrite.writeFiles$(TransactionalWrite.scala:142)\n\tat org.apache.spark.sql.delta.OptimisticTransaction.writeFiles(OptimisticTransaction.scala:84)\n\tat org.apache.spark.sql.delta.commands.WriteIntoDelta.write(WriteIntoDelta.scala:107)\n\tat org.apache.spark.sql.delta.commands.WriteIntoDelta.$anonfun$run$1(WriteIntoDelta.scala:66)\n\tat org.apac"}

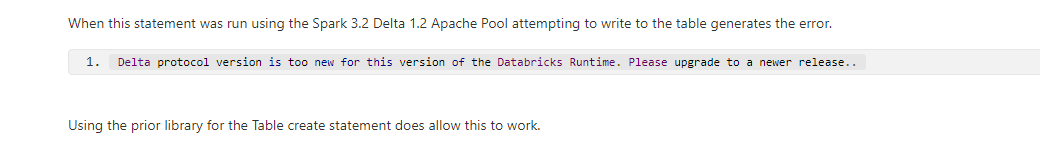

In further testing, I found that enabling change data feed (CDC) on a table, which raises delta.minWriterVersion to 4, does not work yet. However, there is definite progress.
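Before pointing a sink at an existing table, it may help to check whether change data feed is already enabled, since that is what triggers the error above. The sketch below reads the Delta transaction log directly, taking the last `protocol` and `metaData` actions from the JSON commit files. It assumes the table folder is reachable as a local path, and it ignores checkpoint files, so it only works while every commit file is still present. `replay_log` and `cdf_enabled` are hypothetical helper names.

```python
import json
import re
from pathlib import Path

# Commit files are named with a 20-digit, zero-padded version number.
COMMIT_FILE = re.compile(r"^\d{20}\.json$")


def replay_log(table_path):
    """Return (protocol, configuration) from the last protocol/metaData actions.

    Simplified sketch: only JSON commit files are replayed; checkpoints
    (.parquet) and other files in _delta_log are ignored.
    """
    log_dir = Path(table_path) / "_delta_log"
    protocol, configuration = None, {}
    # Zero-padded names sort lexically in version order.
    commits = sorted(p for p in log_dir.iterdir() if COMMIT_FILE.match(p.name))
    for commit in commits:
        # Each non-empty line in a commit file is one JSON action.
        for line in commit.read_text().splitlines():
            if not line.strip():
                continue
            action = json.loads(line)
            if "protocol" in action:
                protocol = action["protocol"]
            elif "metaData" in action:
                configuration = action["metaData"].get("configuration", {})
    return protocol, configuration


def cdf_enabled(table_path):
    """Return True if the latest metadata sets delta.enableChangeDataFeed to true."""
    _, configuration = replay_log(table_path)
    # Table property values are stored as strings in the log.
    return configuration.get("delta.enableChangeDataFeed", "false").lower() == "true"
```

If `cdf_enabled` returns True, the protocol returned by `replay_log` would typically show `minWriterVersion` of 4, matching the failure described above.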