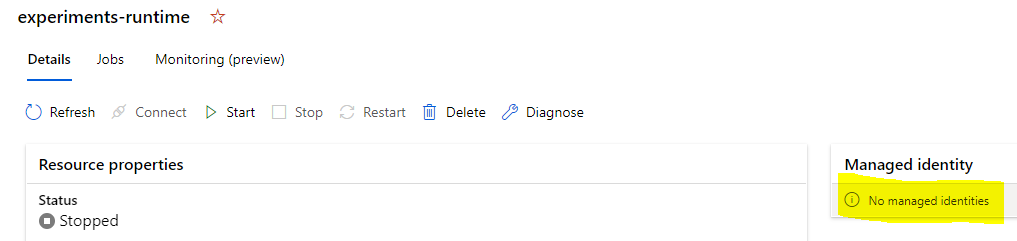

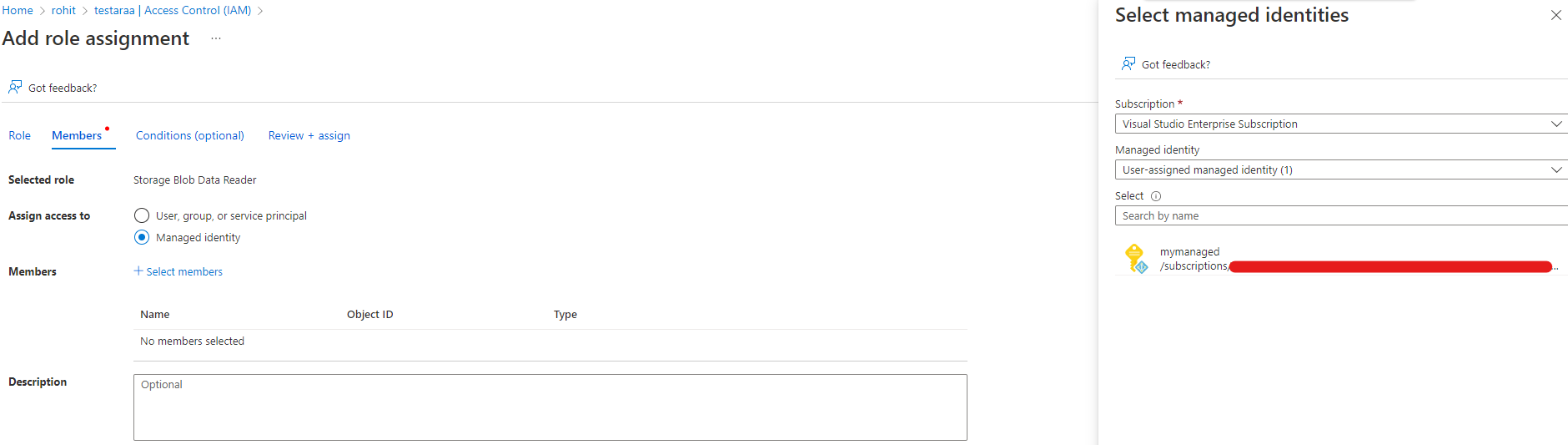

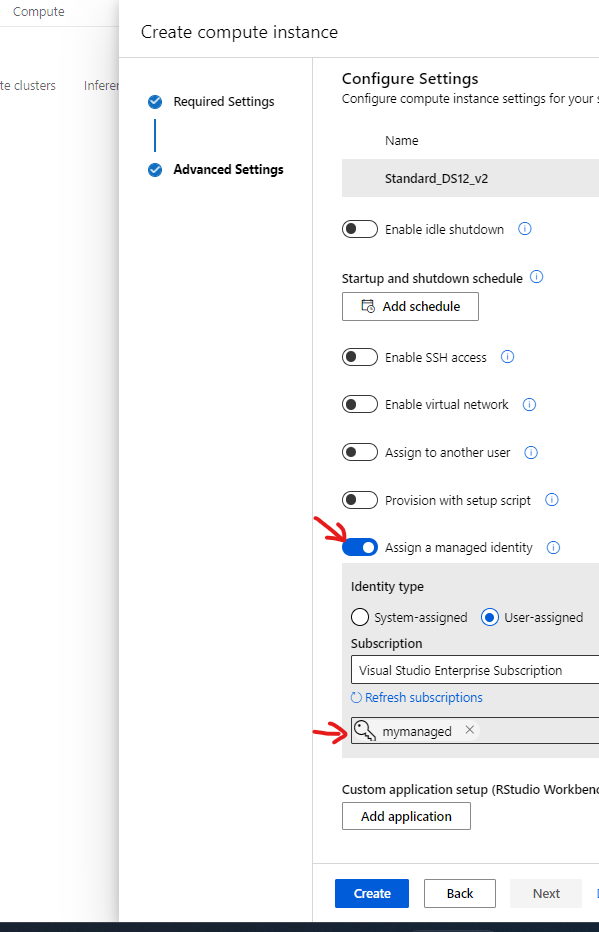

It's possible that you defined the data lake as a datastore with identity-based access and also created your compute instance/cluster with managed identity enabled. In that case, you'll need to grant the compute cluster's managed identity access to the data lake through RBAC. See this link for more details: https://learn.microsoft.com/en-us/azure/machine-learning/how-to-identity-based-service-authentication
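As a rough illustration of the RBAC grant mentioned above, here is a minimal sketch that only builds the `az role assignment create` command for the cluster's managed identity. The helper names, all the placeholder IDs, and the choice of the `Storage Blob Data Reader` role are my assumptions, not something taken from your setup. Check which role your scenario actually needs before running anything.

```python
import shlex


def storage_account_scope(subscription_id, resource_group, account_name):
    """Build the ARM resource ID of a storage account, used as the RBAC scope."""
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Storage/storageAccounts/{account_name}"
    )


def role_assignment_command(principal_id, scope, role="Storage Blob Data Reader"):
    """Return the argv list for `az role assignment create`.

    principal_id is the object ID of the compute cluster's managed identity.
    The default role is an assumption (read-only access to blob data).
    """
    return [
        "az", "role", "assignment", "create",
        "--assignee-object-id", principal_id,
        "--assignee-principal-type", "ServicePrincipal",
        "--role", role,
        "--scope", scope,
    ]


if __name__ == "__main__":
    # All values below are hypothetical placeholders.
    scope = storage_account_scope(
        "<subscription-id>", "<resource-group>", "<storage-account>"
    )
    cmd = role_assignment_command("<managed-identity-principal-id>", scope)
    # Print the command for review instead of executing it.
    print(shlex.join(cmd))
```

The sketch prints the command rather than running it, so you can review the scope and role first. Role assignments can take a few minutes to propagate before a mount succeeds.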

Access to Azure ML named datasets

We have a computer vision pipeline that fine-tunes a vision model.

The data for training the vision model is a large collection of images that lands in our data lake.

The problem is that we are not able to mount this dataset to our training job.

In the Azure ML portal, we defined a named dataset for the image folder in our data lake (not the default workspace datastore).

But when our job control script references the dataset by name and tries to mount it to the training job:

    from azureml.core import Dataset, ScriptRunConfig
    from azureml.core.runconfig import DockerConfiguration

    # ws, compute_target and keras_env are defined elsewhere in the control script (not shown)
    docker_config = DockerConfiguration(use_docker=True)

    # Access the dataset
    dataset = Dataset.get_by_name(ws, 'the name of our named dataset')

    # Run the experiment
    args = ['--data-folder', dataset.as_mount()]
    print("Mounted dataset")
    src = ScriptRunConfig(source_directory='./src',
                          script='balearms_cnn_training.py',
                          arguments=args,
                          compute_target=compute_target,
                          environment=keras_env,
                          docker_runtime_config=docker_config)

The job will fail with the error:

{"NonCompliant":"UserErrorException:\n\tMessage: Cannot mount Dataset(id='389fb088-59eb-4288-8225-aa9fb55f14c0', name='balearms', version=1). Error Message: DataAccessError(PermissionDenied(Some(This request is not authorized to perform this operation using this permission.)))\n\tInnerException None\n\tErrorResponse \n{\n \"error\": {\n \"code\": \"UserError\",\n \"message\": \"Cannot mount Dataset(id='389fb088-59eb-4288-8225-aa9fb55f14c0', name='balearms', version=1). Error Message: DataAccessError(PermissionDenied(Some(This request is not authorized to perform this operation using this permission.)))\"\n }\n}"}
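For anyone triaging the same failure, the message above already shows which dataset failed and that the storage layer rejected the request (`PermissionDenied`), as opposed to the dataset name not being found. A minimal sketch of pulling those fields out of the message text follows. The helper name and the regular expression are my own, written against the message format shown above, which may differ in other SDK versions.

```python
import re

# Matches the "Dataset(id='...', name='...', version=N)" fragment of the message.
_DATASET_RE = re.compile(r"Dataset\(id='([^']+)', name='([^']+)', version=(\d+)\)")


def summarize_mount_error(message):
    """Extract the dataset identity and an authorization flag from a mount error."""
    match = _DATASET_RE.search(message)
    return {
        "dataset_id": match.group(1) if match else None,
        "dataset_name": match.group(2) if match else None,
        "version": int(match.group(3)) if match else None,
        # True when the storage service rejected the request for lack of permission.
        "permission_denied": "PermissionDenied" in message,
    }
```

A `permission_denied` value of `True` points at the credentials or identity the datastore uses, not at the dataset definition itself.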

…

To work around this problem, we use the default workspace datastore.

    datastore = ws.get_default_datastore()
    dataset = Dataset.File.from_files(path=(datastore, 'datasets/balearms/ExtractedImages/'))

    # Run the experiment
    args = ['--data-folder', dataset.as_mount()]
    print("Mounted dataset")
    src = ScriptRunConfig(source_directory='./src',
                          script='balearms_cnn_training.py',
                          arguments=args,
                          compute_target=compute_target,
                          environment=keras_env,
                          docker_runtime_config=docker_config)

It works, but it also means that we have to copy the files to a different storage account (the one behind the default workspace datastore), and that creates complexities.

There is no doubt that we should be able to mount a named dataset. I believe we are missing just a small detail…

Could you help us figure out how to use named datasets and mount them to our training jobs?

Thanks

Manu

Azure Machine Learning

2 answers


Manu Cohen-Yashar 71 Reputation points 2022-11-17T21:05:10.023+00:00

The dataset that I needed to mount is a folder in my data lake (gen2).

As you would imagine, the Azure ML data store I use is of the data lake type, and it is configured with a service principal that has all the permissions needed to access the data lake. Still, I cannot access the data. However, it is possible to create a blob data store (which uses the access key) that references the same data.

Yes, it's not RBAC, but when I create a dataset from that blob-based data store, I am able to access the dataset, mount it, etc. Something is broken in my data lake gen2 data store. I do not know what it is, but the blob data store is a reasonable workaround.
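For readers who want to try the same workaround, here is a minimal sketch using the v1 SDK (`azureml-core`). The helper names and every argument value are hypothetical. It assumes the data lake gen2 filesystem is reachable as a blob container of the same storage account, and that you are willing to use the account key. The `azureml` import is deferred into the function, so the block can be loaded without the SDK installed, but calling `mount_from_blob_datastore` does require it.

```python
def blob_datastore_kwargs(datastore_name, account_name, container_name, account_key):
    """Collect the settings for a key-based blob datastore (all values are yours to fill in)."""
    return {
        "datastore_name": datastore_name,
        "account_name": account_name,
        "container_name": container_name,
        "account_key": account_key,
    }


def mount_from_blob_datastore(ws, settings, folder):
    """Register a blob datastore on the workspace and return a mountable dataset input.

    ws is an azureml.core.Workspace; folder is the path of the image folder
    inside the container.
    """
    # Imported lazily so this module loads even where azureml-core is absent.
    from azureml.core import Dataset, Datastore

    datastore = Datastore.register_azure_blob_container(workspace=ws, **settings)
    dataset = Dataset.File.from_files(path=(datastore, folder))
    return dataset.as_mount()
```

The returned object can be passed in the `arguments` list of `ScriptRunConfig` in the same way as `dataset.as_mount()` in the question. Keep the account key out of source control, for example by reading it from an environment variable or a key vault.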