Hi Team,

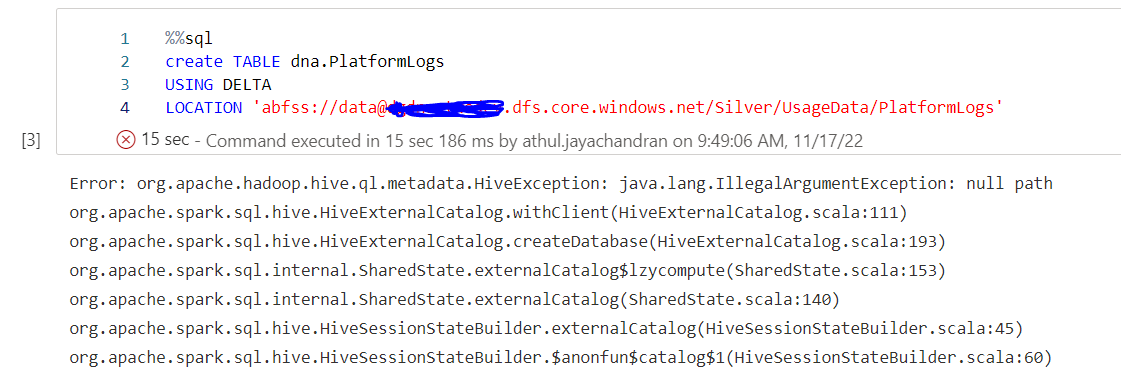

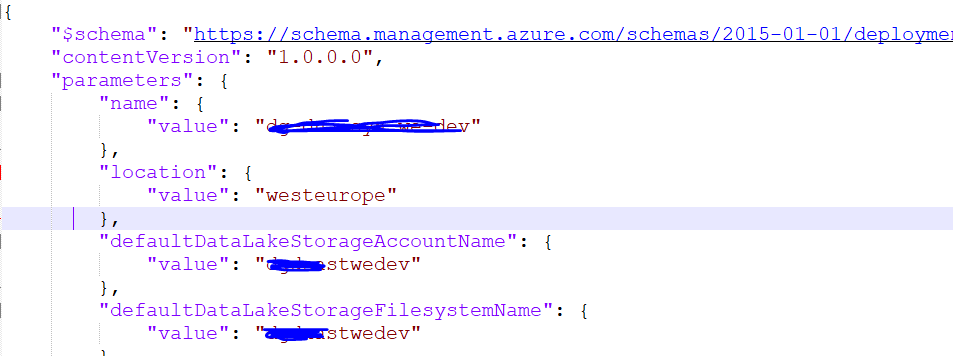

We figured out the problem: the default container specified when the Synapse workspace was created no longer existed in the storage account.

We had used the same name for the storage account and the storage file system (container) when we created the workspace. Please find the screenshots below.

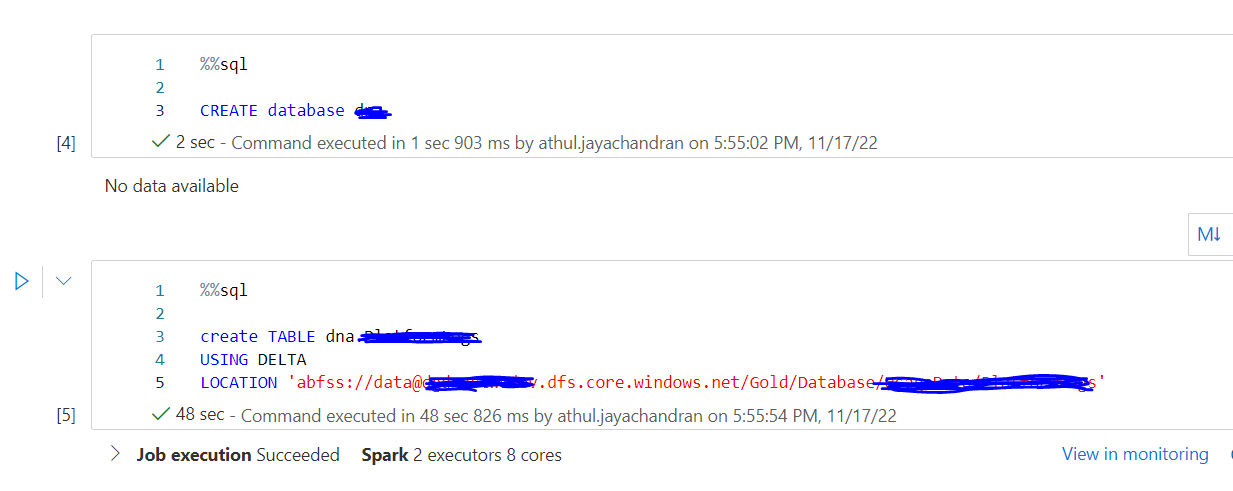

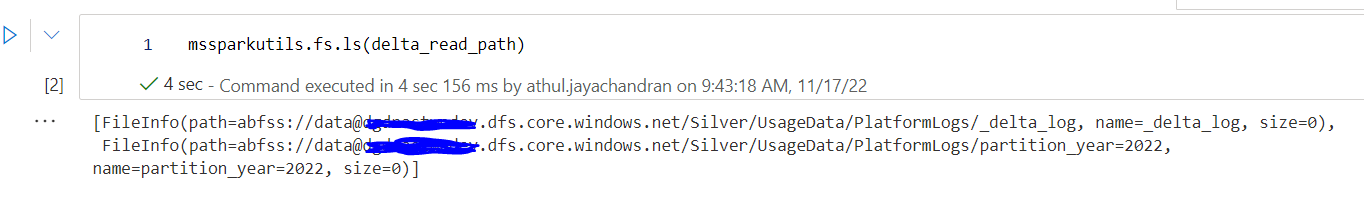

Once we recreated the default container in the Data Lake storage account, we were able to run our code and create the Delta Lake table successfully.
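For anyone hitting the same issue, here is a minimal sketch of checking for the workspace's default container and recreating it if it is missing. It is not necessarily how we did it. It assumes the Python `azure-storage-file-datalake` SDK (plus `azure-identity` for the credential). The helper name `ensure_default_file_system` and the angle-bracket placeholders are hypothetical. The container name you pass must match the default file system name the workspace was created with.

```python
def ensure_default_file_system(service_client, file_system_name):
    """Recreate the workspace's default ADLS Gen2 container (file system) if missing.

    Hypothetical helper. `service_client` is assumed to behave like
    azure.storage.filedatalake.DataLakeServiceClient.
    Returns True if the container had to be created, False if it already existed.
    """
    fs_client = service_client.get_file_system_client(file_system_name)
    if fs_client.exists():
        return False  # default container is present; nothing to do
    fs_client.create_file_system()  # recreate the container with the same name
    return True


# Example usage (assumes azure-storage-file-datalake and azure-identity are installed):
# from azure.identity import DefaultAzureCredential
# from azure.storage.filedatalake import DataLakeServiceClient
#
# service = DataLakeServiceClient(
#     account_url="https://<storage-account-name>.dfs.core.windows.net",
#     credential=DefaultAzureCredential(),
# )
# ensure_default_file_system(service, "<default-file-system-name>")
```

Taking the client as a parameter keeps the check independent of how you authenticate.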

A quick follow-up question: is it possible to change the default container name in the storage account after the Synapse workspace has been created? We would love to have this as a feature.