Hi @Omar Navarro, thank you for posting this question.

Apologies for the delayed response. After looking further into how the Data Collection Rule is implemented, I found that a single rule can push data from both text files to the same Log Analytics endpoint. Please refer to the "Data collection rule for text log" section of the article, which provides a custom template sample showing multiple data sources bound to one endpoint. Part of the deployment snippet is shown below:

    "dataSources": {
        "logFiles": [
            {
                "streams": [
                    "Custom-MyLogFileFormat"
                ],
                "filePatterns": [
                    "C:\\JavaLogs\\*.log"
                ],
                "format": "text",
                "settings": {
                    "text": {
                        "recordStartTimestampFormat": "ISO 8601"
                    }
                },
                "name": "myLogFileFormat-Windows"
            },
            {
                "streams": [
                    "Custom-MyLogFileFormat"
                ],
                "filePatterns": [
                    "//var//*.log"
                ],
                "format": "text",
                "settings": {
                    "text": {
                        "recordStartTimestampFormat": "ISO 8601"
                    }
                },
                "name": "myLogFileFormat-Linux"
            }
        ]
    },
    "destinations": {
        "logAnalytics": [
            {
                "workspaceResourceId": "[parameters('workspaceResourceId')]",
                "name": "[parameters('workspaceName')]"
            }
        ]
    },
    "dataFlows": [
        {
            "streams": [
                "Custom-MyLogFileFormat"
            ],
            "destinations": [
                "[parameters('workspaceName')]"
            ],
            "transformKql": "source",
            "outputStream": "Custom-MyTable_CL"
        }
    ]

Kindly note that, for this to work, the log files must share a similar structure, so that a single "transformKql" can transform all of them before the data is bound to the endpoint.

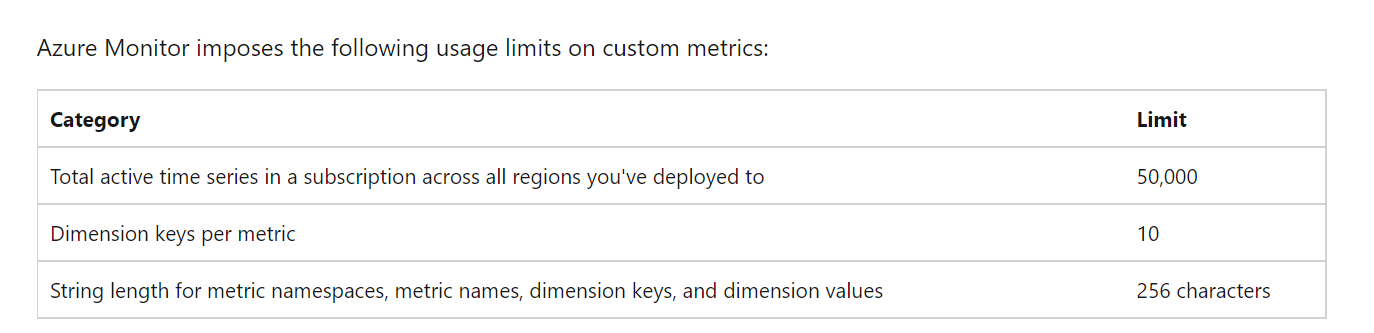
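If it helps to sanity-check a rule like this before deploying it, here is a minimal Python sketch. It is only an illustration, not an Azure tool: the helper names `build_log_file_sources` and `unrouted_streams` are made up for this example, and the stream, file pattern and table names are copied from the snippet above. It builds one `logFiles` entry per file pattern, all sharing the same stream, and then checks that every stream declared by a data source is picked up by a data flow.

```python
import json


def build_log_file_sources(stream, patterns_by_name):
    """Build one logFiles entry per (name, pattern) pair, all sharing one stream.

    Hypothetical helper for illustration; the field names mirror the snippet above.
    """
    return [
        {
            "streams": [stream],
            "filePatterns": [pattern],
            "format": "text",
            "settings": {"text": {"recordStartTimestampFormat": "ISO 8601"}},
            "name": name,
        }
        for name, pattern in patterns_by_name.items()
    ]


def unrouted_streams(rule):
    """Return the streams declared by logFiles sources that no dataFlow consumes."""
    declared = {s for src in rule["dataSources"]["logFiles"] for s in src["streams"]}
    routed = {s for flow in rule["dataFlows"] for s in flow["streams"]}
    return sorted(declared - routed)


# Values copied from the snippet above; adjust them to your own environment.
rule = {
    "dataSources": {
        "logFiles": build_log_file_sources(
            "Custom-MyLogFileFormat",
            {
                "myLogFileFormat-Windows": "C:\\JavaLogs\\*.log",
                "myLogFileFormat-Linux": "//var//*.log",
            },
        )
    },
    "dataFlows": [
        {
            "streams": ["Custom-MyLogFileFormat"],
            "destinations": ["[parameters('workspaceName')]"],
            "transformKql": "source",
            "outputStream": "Custom-MyTable_CL",
        }
    ],
}

# Print the generated fragment so it can be compared against the template.
print(json.dumps(rule, indent=2))
```

Calling `unrouted_streams(rule)` on the rule above returns an empty list, since both data sources use the one stream that the data flow consumes. If you later add a source with a different stream and forget the matching data flow, that stream name would show up in the list.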

Please take the "Quotas and Limits" and the "Design limitations and considerations" into account as you create and use the data collection rule.

Please reach out to us if you have any further questions or need clarification on the information posted.

----------

Kindly accept the answer or upvote it if the response is helpful, so that it can benefit other community members facing the same issue. I highly appreciate your contribution to the community.