Hi @Anonymous,

Thank you for posting your query on the Microsoft Q&A platform.

You can achieve this using Azure Data Factory. Below are a few approaches.

Approach 1: Use a Get Metadata activity to get the list of files in the folder, then use a ForEach activity to loop over them. Inside the ForEach activity, use a Copy activity to copy each file. The Copy activity should use parameterized datasets so that the current file name can be passed in on each iteration.
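To make the control flow of Approach 1 easier to picture, here is a minimal sketch in plain Python. It is not Data Factory code: `list_files`, `copy_file`, and `copy_all` are hypothetical stand-ins for the Get Metadata, Copy, and ForEach activities, and local folders stand in for the source and sink stores.

```python
import shutil
import tempfile
from pathlib import Path


def list_files(folder: Path) -> list[str]:
    """Stand-in for Get Metadata: names of the files directly inside the folder."""
    return sorted(p.name for p in folder.iterdir() if p.is_file())


def copy_file(source_dir: Path, sink_dir: Path, file_name: str) -> None:
    """Stand-in for a Copy activity whose datasets take the file name as a parameter."""
    shutil.copy2(source_dir / file_name, sink_dir / file_name)


def copy_all(source_dir: Path, sink_dir: Path) -> list[str]:
    """Stand-in for ForEach: run one parameterized copy per listed file."""
    copied = []
    for file_name in list_files(source_dir):
        copy_file(source_dir, sink_dir, file_name)
        copied.append(file_name)
    return copied


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        source, sink = Path(tmp, "source"), Path(tmp, "sink")
        source.mkdir()
        sink.mkdir()
        (source / "a.csv").write_text("1")
        (source / "b.csv").write_text("2")
        print(copy_all(source, sink))  # ['a.csv', 'b.csv']
```

The point of the parameterized `copy_file` is the same as the parameterized dataset: one definition is reused for every file, and only the file name changes per iteration.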

Please note that after each copy you should log the copied file name to a log table, and before each copy you should check whether the file has already been copied by comparing its name against that log table. You can use a Lookup activity or a Script activity to run a SQL query that checks whether the file name is present in the log table.
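The check-then-log logic can be sketched as follows. This is only an illustration under assumptions: the table name `copy_log`, its `file_name` column, and the helper functions are hypothetical, and an in-memory SQLite database stands in for whatever SQL database holds your log table.

```python
import sqlite3


def make_log(conn: sqlite3.Connection) -> None:
    """Create the hypothetical log table if it does not exist yet."""
    conn.execute("CREATE TABLE IF NOT EXISTS copy_log (file_name TEXT PRIMARY KEY)")


def already_copied(conn: sqlite3.Connection, file_name: str) -> bool:
    """Stand-in for the Lookup/Script check: is the file name in the log table?"""
    row = conn.execute(
        "SELECT 1 FROM copy_log WHERE file_name = ?", (file_name,)
    ).fetchone()
    return row is not None


def copy_if_new(conn: sqlite3.Connection, file_name: str, copy_fn) -> bool:
    """Copy the file only if it is not logged yet, then log it. Returns True if copied."""
    if already_copied(conn, file_name):
        return False
    copy_fn(file_name)
    conn.execute("INSERT INTO copy_log (file_name) VALUES (?)", (file_name,))
    conn.commit()
    return True


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    make_log(conn)
    copied = []
    for name in ["a.csv", "b.csv", "a.csv"]:
        copy_if_new(conn, name, copied.append)
    print(copied)  # ['a.csv', 'b.csv']
```

Logging only after the copy succeeds means a failed copy is retried on the next run instead of being skipped.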

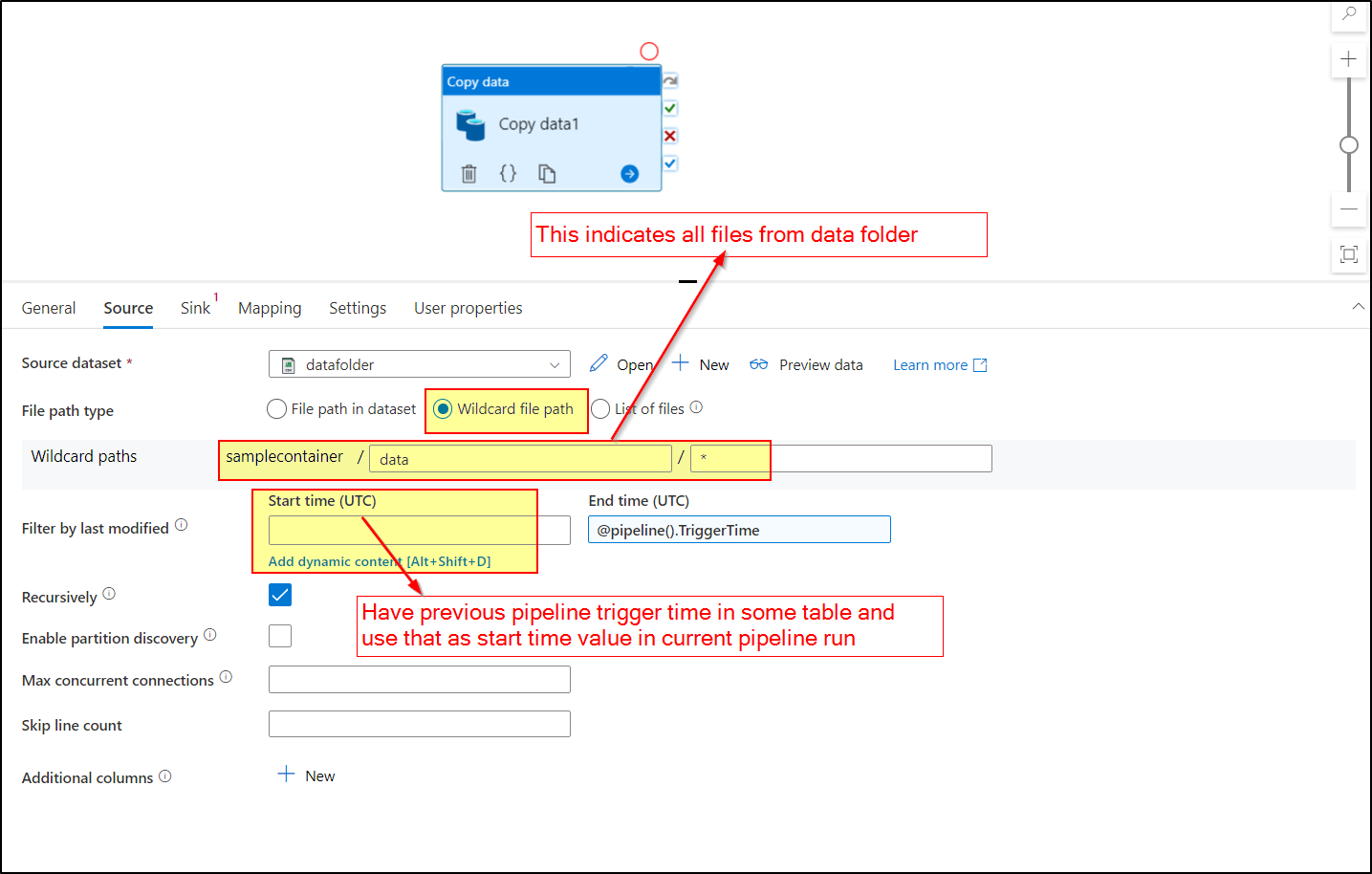

Approach 2: Use a wildcard file path in the Copy activity.

Inside the Copy activity, use a wildcard file path to point to all files, and then use the Filter by last modified field values to copy only the files whose last modified time falls within the range you specify.
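As a rough picture of what this selection does, here is a small Python sketch. It is not Data Factory code: `FileInfo` and `select_files` are hypothetical names, `fnmatchcase` only approximates the wildcard matching, and the window is assumed to include the start time and exclude the end time.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from fnmatch import fnmatchcase
from typing import Iterable, Optional


@dataclass(frozen=True)
class FileInfo:
    name: str
    last_modified: datetime


def select_files(
    files: Iterable[FileInfo],
    pattern: str,
    modified_start: Optional[datetime] = None,
    modified_end: Optional[datetime] = None,
) -> list[str]:
    """Keep files matching the wildcard whose last modified time is in [start, end)."""
    selected = []
    for f in files:
        if not fnmatchcase(f.name, pattern):
            continue  # name does not match the wildcard
        if modified_start is not None and f.last_modified < modified_start:
            continue  # modified before the window starts
        if modified_end is not None and f.last_modified >= modified_end:
            continue  # modified at or after the window ends
        selected.append(f.name)
    return selected


if __name__ == "__main__":
    utc = timezone.utc
    files = [
        FileInfo("a.csv", datetime(2024, 1, 1, tzinfo=utc)),
        FileInfo("b.csv", datetime(2024, 1, 10, tzinfo=utc)),
        FileInfo("c.txt", datetime(2024, 1, 10, tzinfo=utc)),
    ]
    last_run = datetime(2024, 1, 5, tzinfo=utc)
    print(select_files(files, "*.csv", modified_start=last_run))  # ['b.csv']
```

For incremental loads, the start of the window would typically be the time of the previous successful run, so each run picks up only new or changed files.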

Refer to the video below for a better understanding of Approach 2.

Incrementally copy new and changed files based on Last Modified Date in Azure Data Factory

Below are a few useful videos you can refer to:

Get Metadata Activity in Azure Data Factory

ForEach Activity in Azure Data Factory

Script Activity in Azure Data Factory or Azure Synapse

Hope this helps. Please let me know if you have any further queries.

---------------

Please consider hitting the Accept Answer button. Accepted answers help the community as well.