Hi,

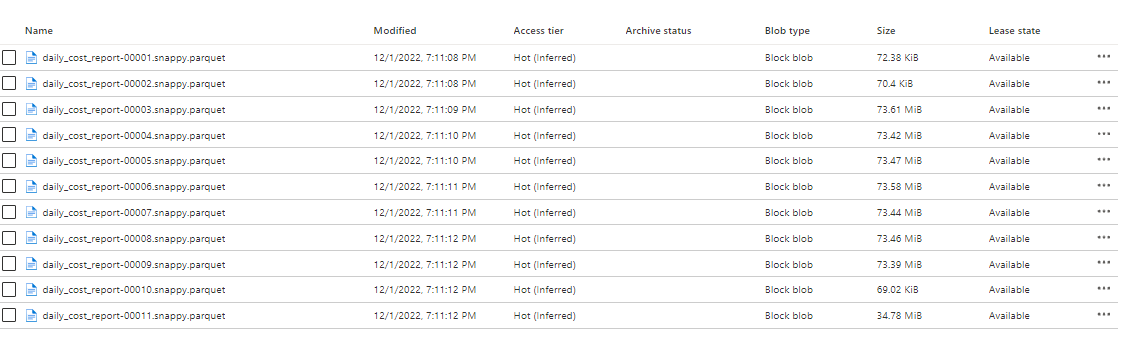

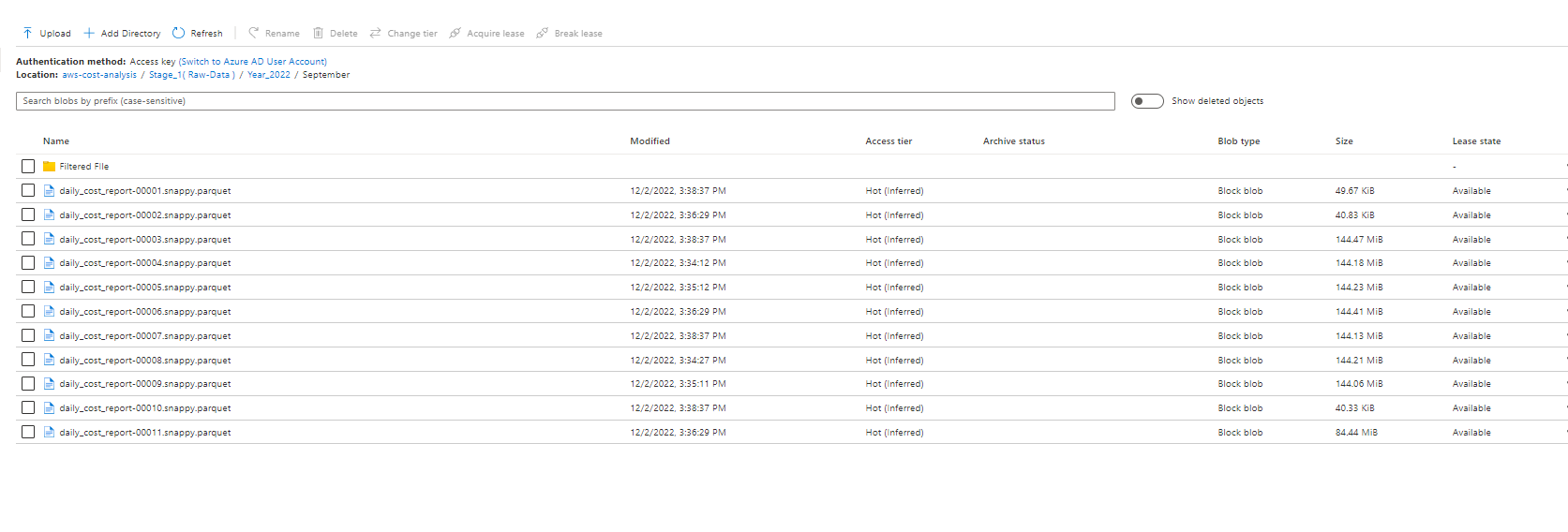

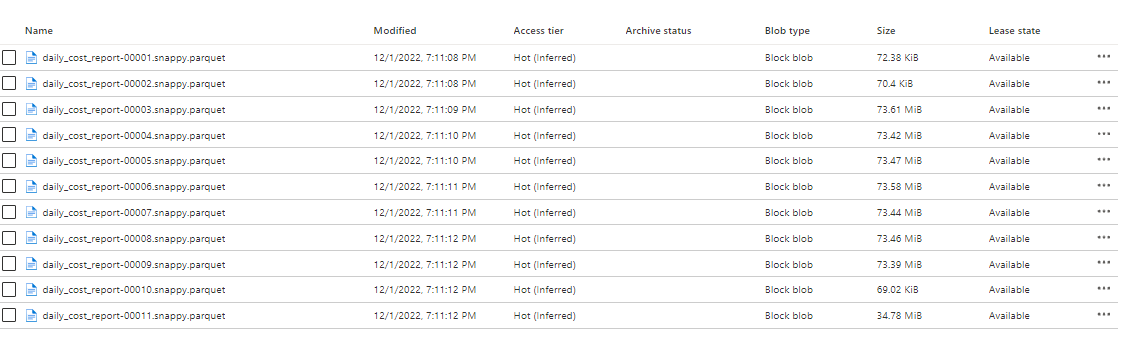

I have 11 Parquet files in the data lake.

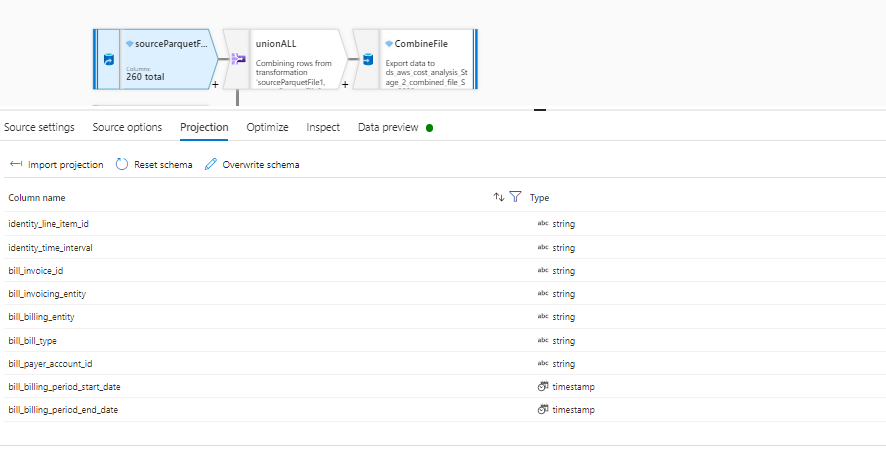

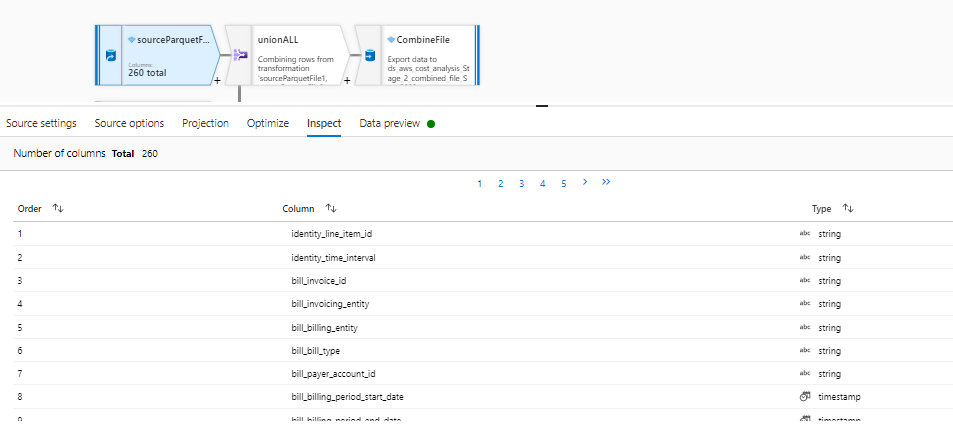

Each file has 260 columns and approximately 500k rows. I need to pull all 11 files, reduce each one to only 60 columns, and then combine them into a single file.

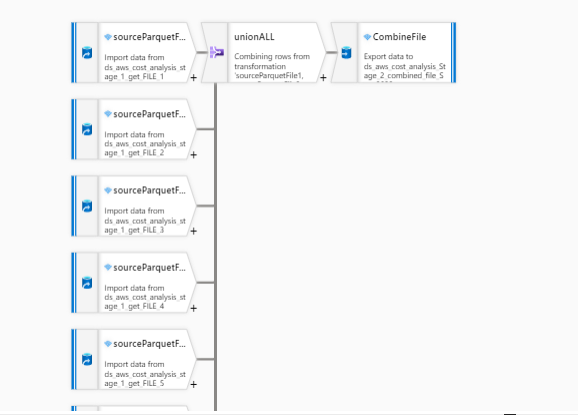

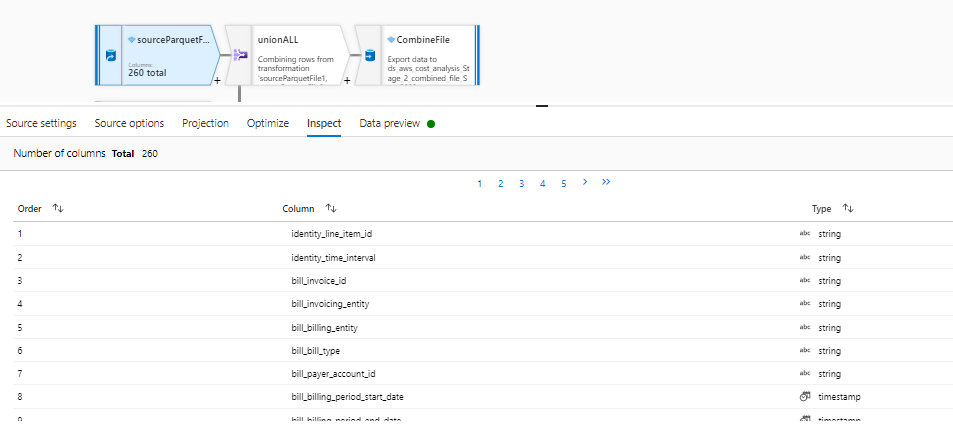

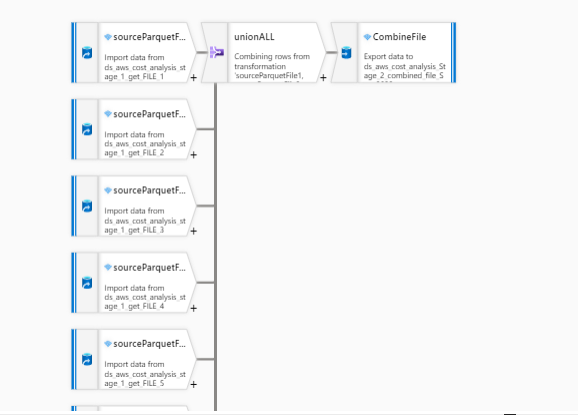

I am trying to achieve this with a mapping data flow like this:

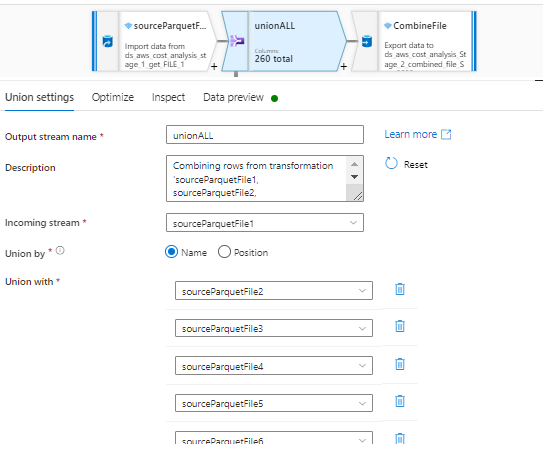

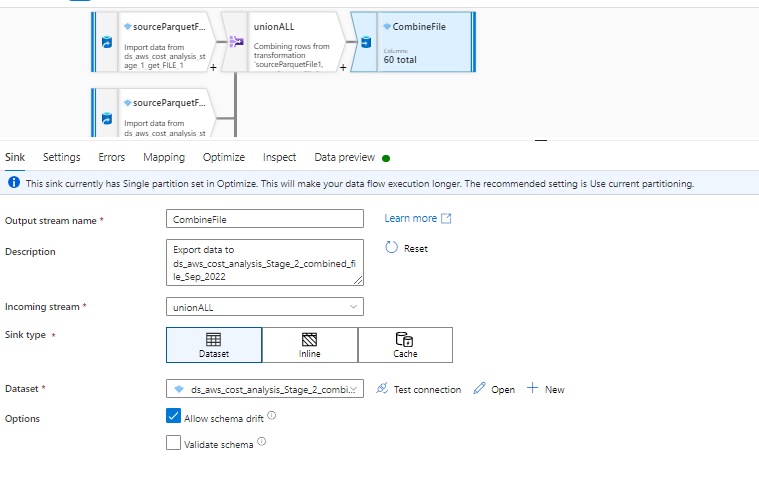

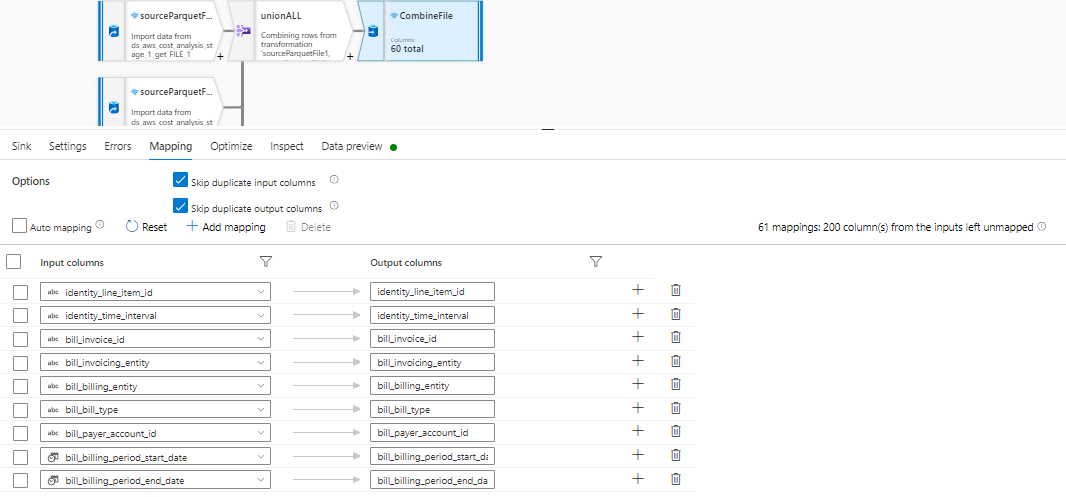

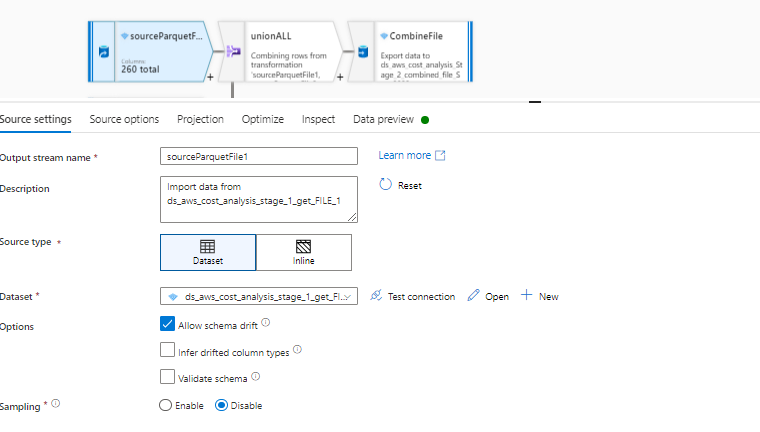

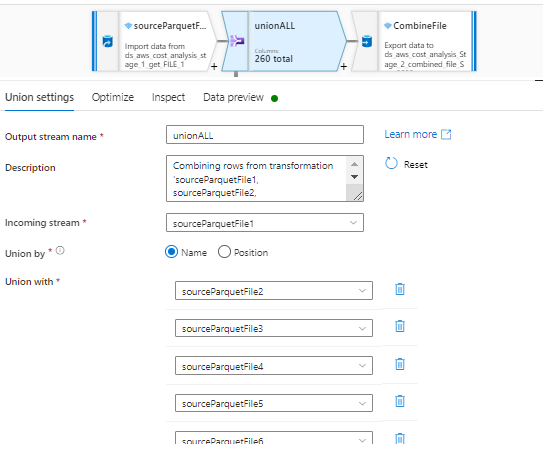

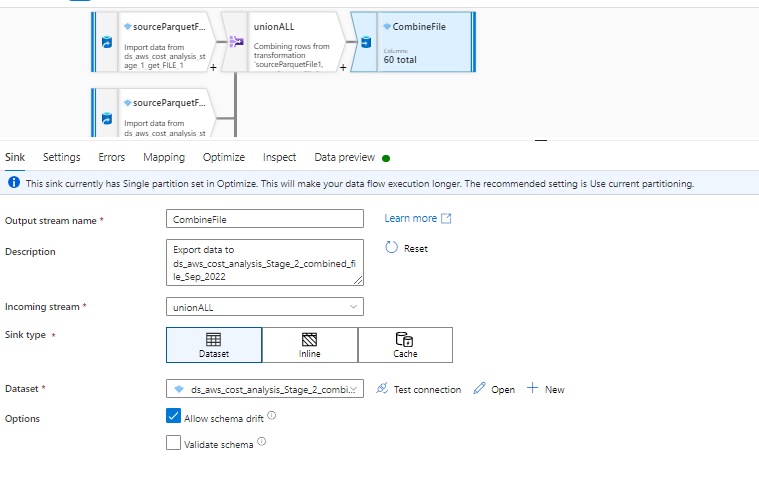

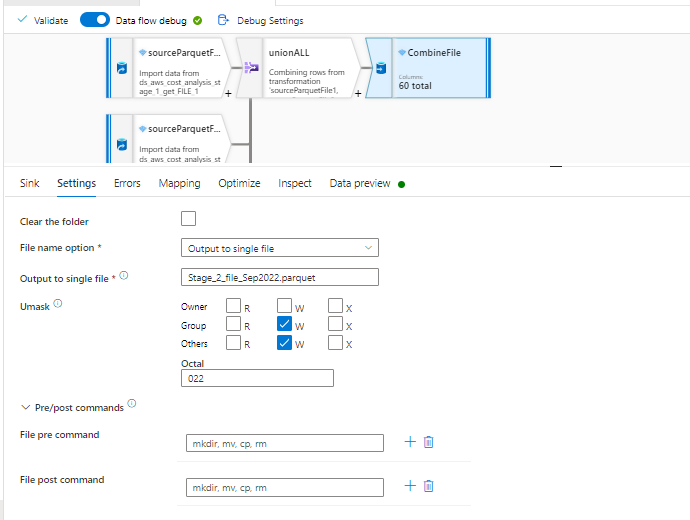

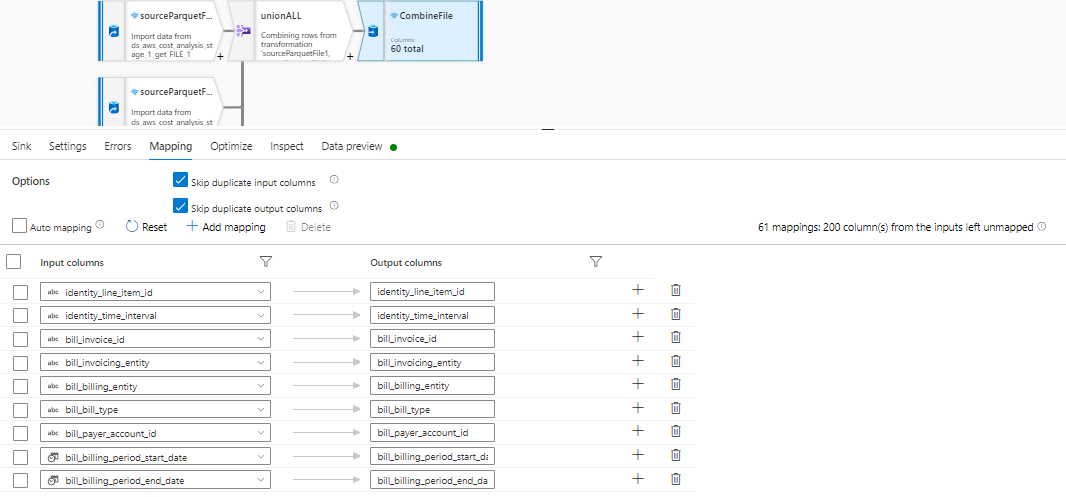

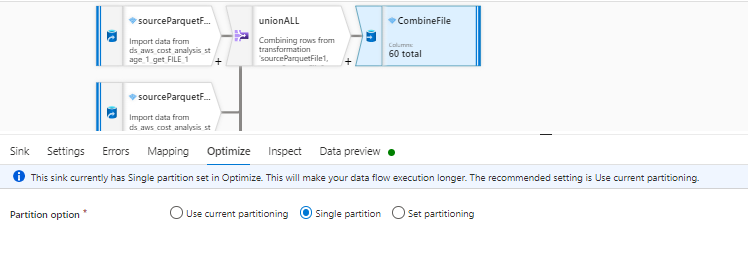

I created 11 incoming streams, one per file, and then used a UNION to union them all. After that I selected only the 60 useful columns in the mapping section (I used manual mapping), and then used a SINK with the "Output to single file" option.

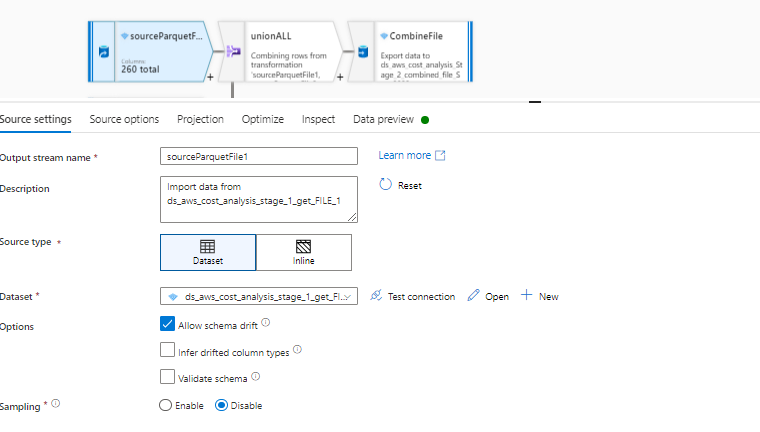

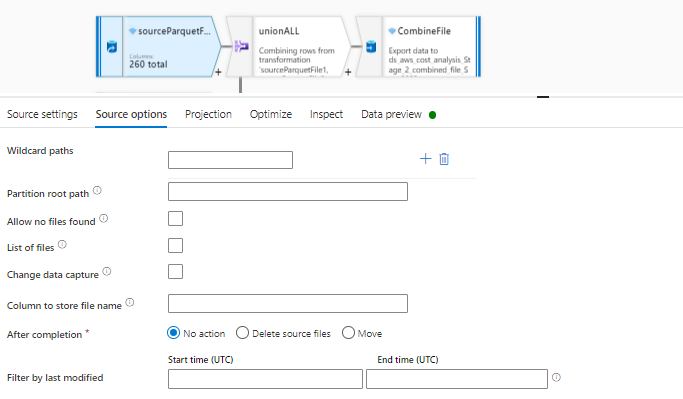

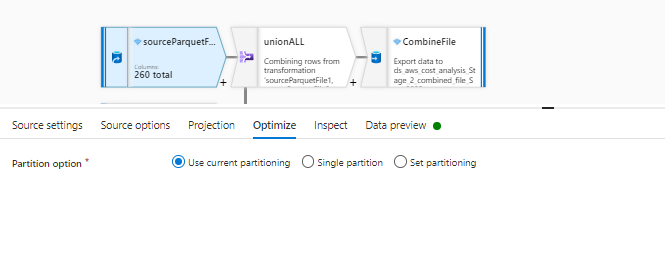

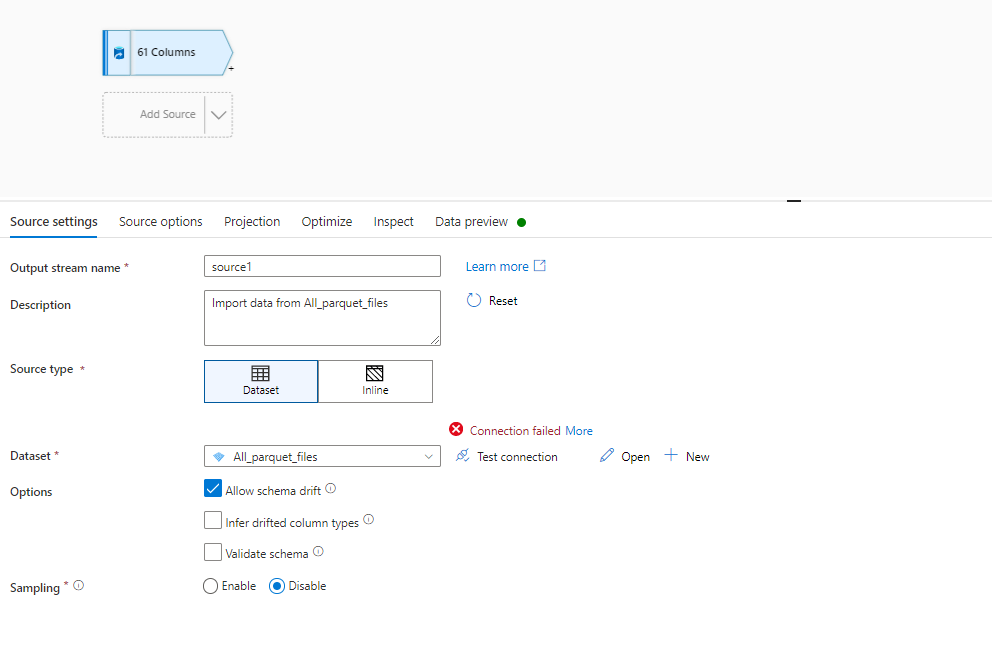

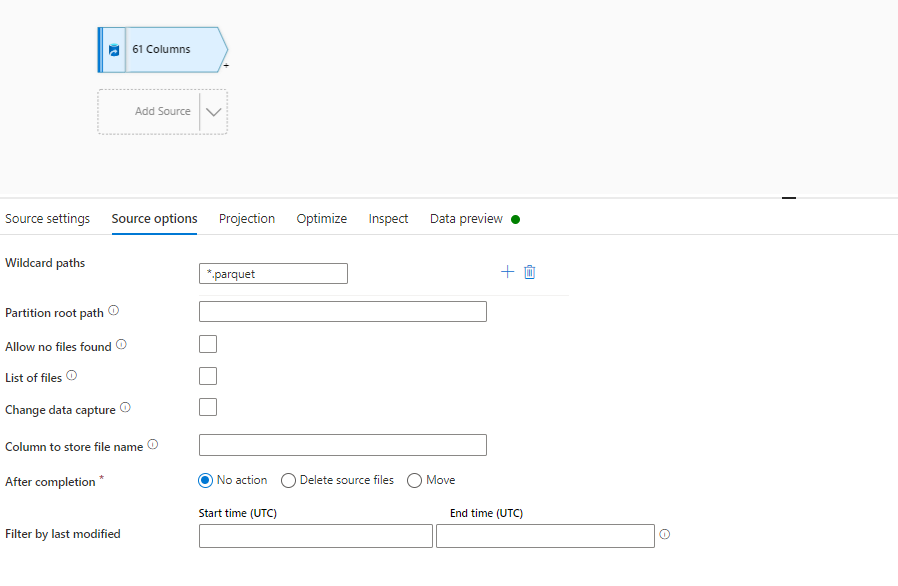

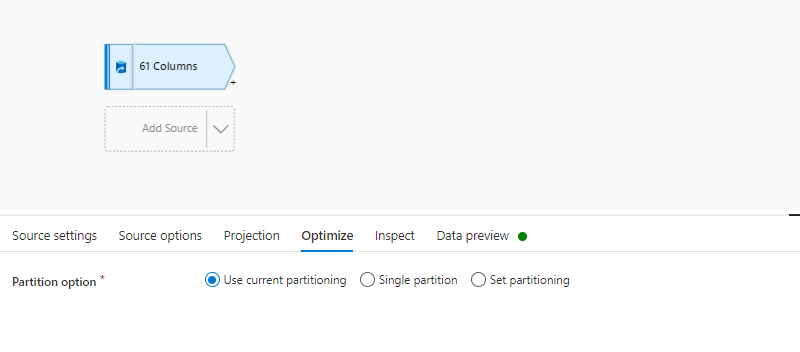

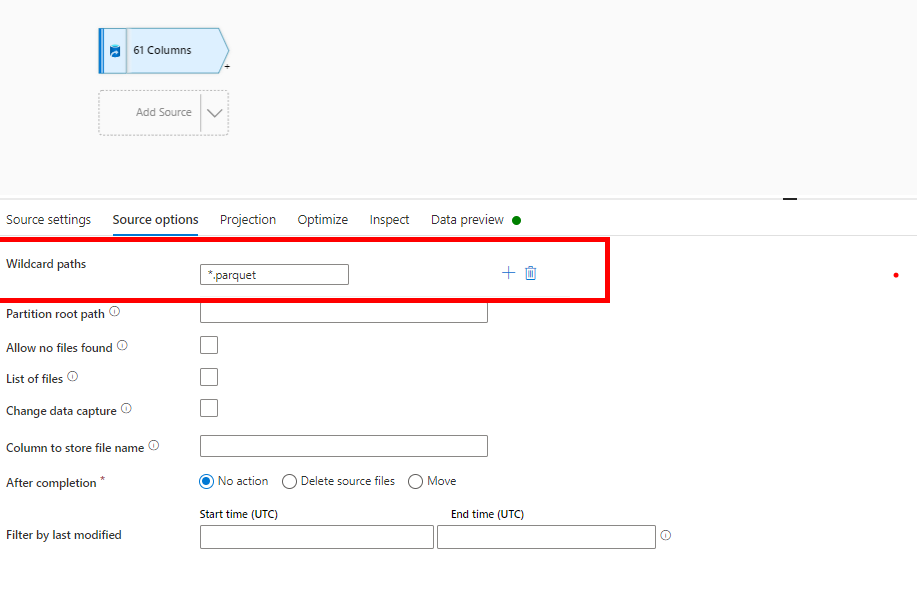

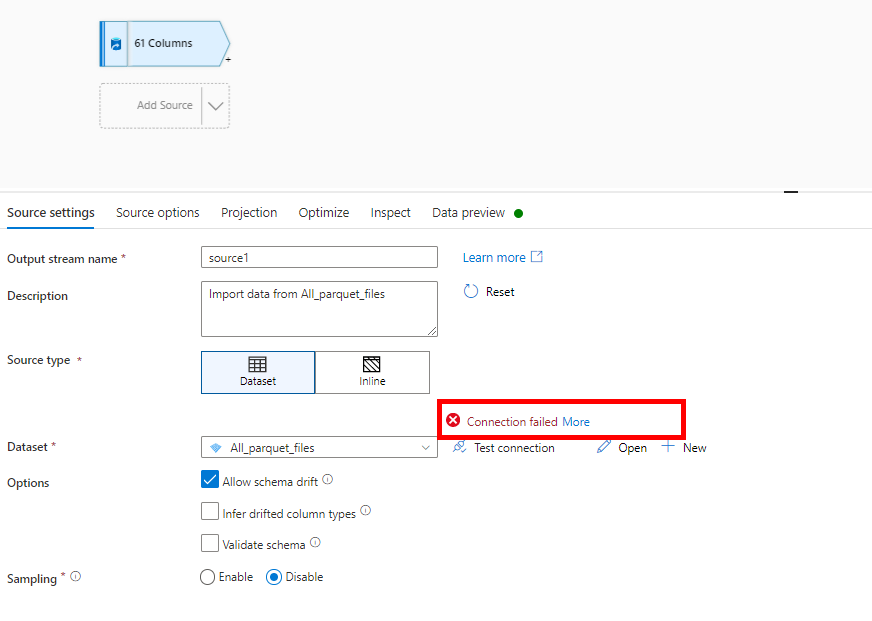

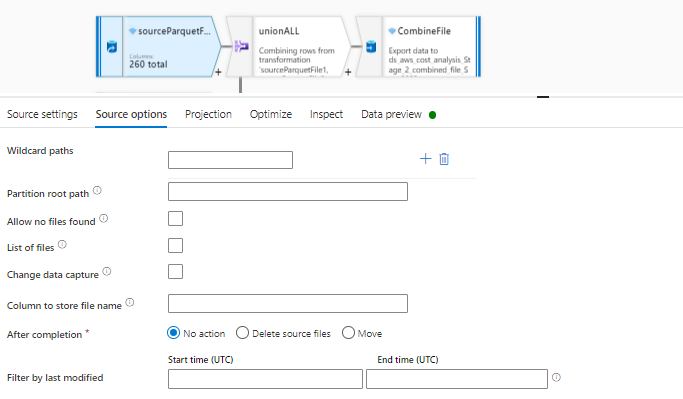

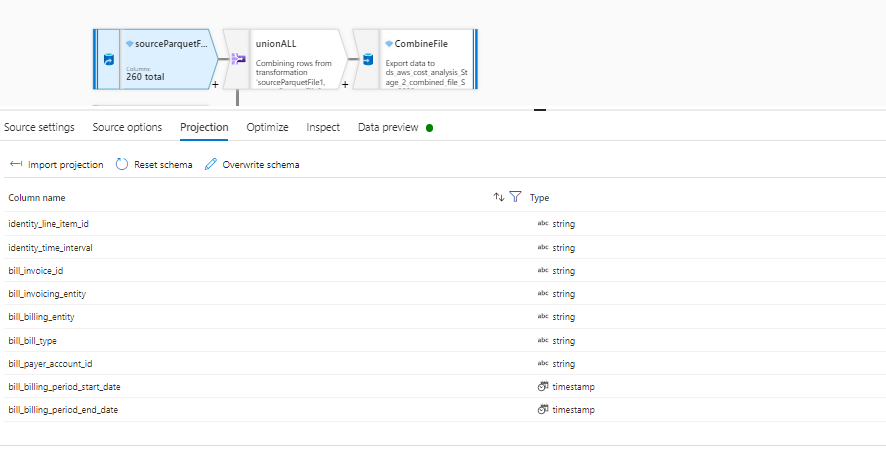

These are my settings for the incoming streams.

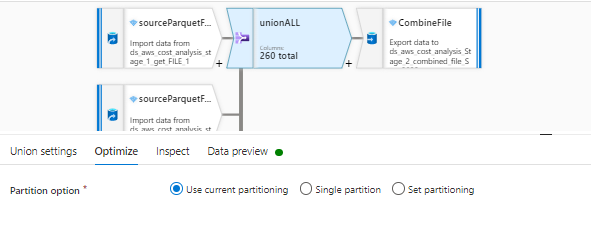

These are the settings in the UNION.

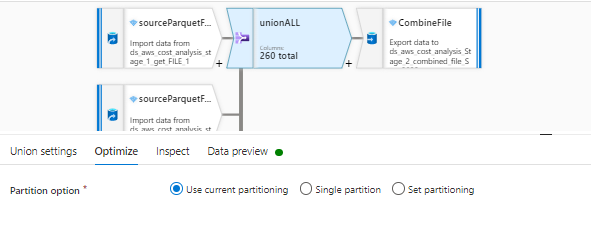

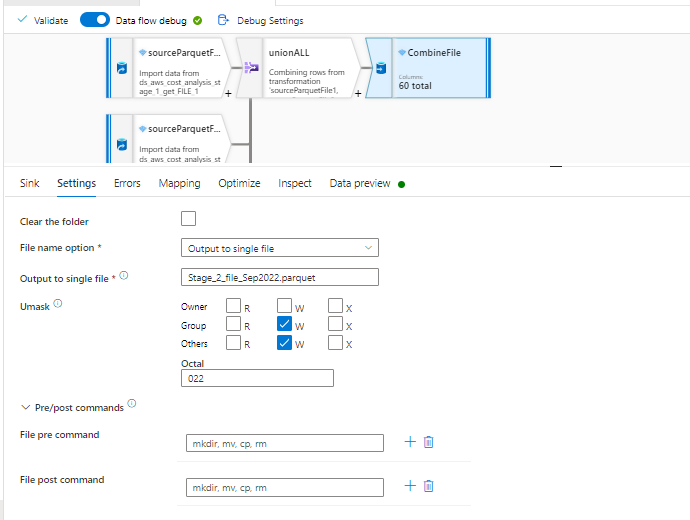

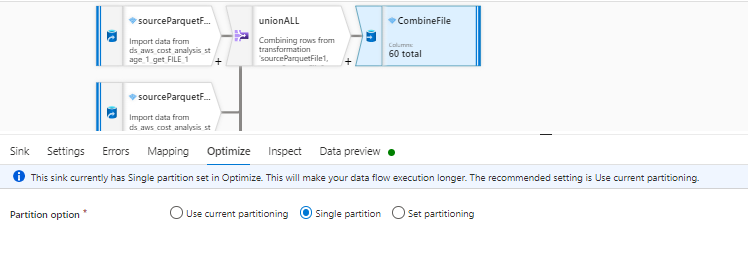

These are the settings in the SINK.

When I run this data flow, it seems to run forever, even with 64 cores configured on the Data Flow activity in the pipeline. I believe this happens because it first performs the UNION of all the files, which produces one very large dataset with 260 columns and 40M rows, and only then filters the columns on that gigantic dataset.
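To put a rough number on that reasoning, here is a back-of-the-envelope sketch that uses the figures quoted above (40M rows after the union, 260 columns versus 60). It treats "cells" (rows × columns) as a crude proxy for the data volume carried through the union. That is an assumption: real cost also depends on column types, compression, and whether the Spark engine behind the data flow already prunes unused columns on its own, so the actual saving may be smaller.

```python
# Rough cell-count comparison using the figures quoted in the post.
# "Cells" (rows x columns) is only a crude proxy for data volume.
ROWS_AFTER_UNION = 40_000_000  # row count stated in the post
ALL_COLUMNS = 260              # columns in each source file
KEPT_COLUMNS = 60              # columns actually needed


def cells(rows: int, columns: int) -> int:
    """Number of row/column values a dataset of this shape carries."""
    return rows * columns


full = cells(ROWS_AFTER_UNION, ALL_COLUMNS)       # union first, then select
trimmed = cells(ROWS_AFTER_UNION, KEPT_COLUMNS)   # select first, then union

# Prints: 10,400,000,000 vs 2,400,000,000 cells (23% of the original)
print(f"{full:,} vs {trimmed:,} cells ({trimmed / full:.0%} of the original)")
```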

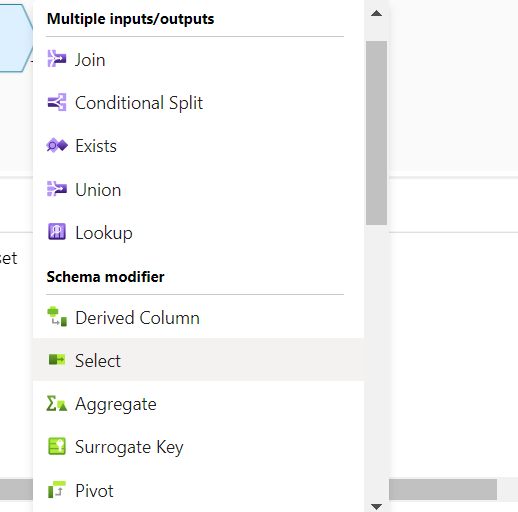

I want to change my logic: first reduce each of the 11 files to the 60 columns, and then union these 60-column datasets.
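Moving the column selection ahead of the union changes where the trimming happens, not the final result, provided every file has the same column names. The following is a minimal, engine-agnostic sketch in plain Python over in-memory rows, not Data Factory itself. The function names and sample rows are hypothetical. It shows that select-then-union and union-then-select produce identical output, while the first keeps every intermediate table at the reduced width.

```python
# Engine-agnostic sketch: rows are dicts, and each "file" is a list of rows.
# Assumes all inputs share the same column names (union by name).
# Function names and sample data are hypothetical, for illustration only.
from typing import Dict, Iterable, List

Row = Dict[str, object]


def select_columns(rows: Iterable[Row], keep: List[str]) -> List[Row]:
    """Project each row down to the columns in `keep` (like a Select step)."""
    return [{col: row[col] for col in keep} for row in rows]


def union_all(*tables: List[Row]) -> List[Row]:
    """Concatenate tables that share a schema (like a Union by name)."""
    combined: List[Row] = []
    for table in tables:
        combined.extend(table)
    return combined


def select_then_union(tables: List[List[Row]], keep: List[str]) -> List[Row]:
    # Desired order: trim each input first, so the union only carries `keep`.
    return union_all(*(select_columns(t, keep) for t in tables))


def union_then_select(tables: List[List[Row]], keep: List[str]) -> List[Row]:
    # Original order: combine full-width rows, then trim the combined result.
    return select_columns(union_all(*tables), keep)


if __name__ == "__main__":
    file_a = [{"id": 1, "name": "a", "unused": 0}, {"id": 2, "name": "b", "unused": 0}]
    file_b = [{"id": 3, "name": "c", "unused": 9}]
    keep = ["id", "name"]
    # Prints: [{'id': 1, 'name': 'a'}, {'id': 2, 'name': 'b'}, {'id': 3, 'name': 'c'}]
    print(select_then_union([file_a, file_b], keep))
```

In data flow terms, this corresponds to placing one Select step on each source stream before the Union, which is the order described above.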

Can anyone suggest how to achieve this in mapping data flows, or is there a better way to do it that is more efficient and cost-effective?

Thanks