@Ammar Mohanna: Yes, it looks like the nodes are under memory pressure. To confirm, connect to your AKS cluster and run `kubectl top nodes`, which shows the CPU and memory usage of each node. If memory is indeed short, scale up the number of nodes in the node pool so that the pods currently stuck in Pending can be scheduled and reach the Running state.
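To get a rough idea of how many nodes the workload needs before scaling, the memory arithmetic can be sketched as below. This is only a back-of-the-envelope estimate, not an AKS tool: `nodes_needed` is a hypothetical helper, and both the per-pod memory request (512 MiB) and the allocatable memory per node (about 4.5 GiB of the 7 GiB on a Standard_DS2_v2) are assumptions. The real allocatable value appears under `Allocatable` in `kubectl describe node`, and system pods take a share of it as well.

```python
import math


def nodes_needed(pod_count: int, pod_request_mib: int, allocatable_mib_per_node: int) -> int:
    """Return the minimum node count whose allocatable memory fits all pod requests."""
    if pod_request_mib > allocatable_mib_per_node:
        raise ValueError("a single pod does not fit on one node")
    # Pods cannot be split across nodes, so round down per node...
    pods_per_node = allocatable_mib_per_node // pod_request_mib
    # ...and round up the number of nodes.
    return math.ceil(pod_count / pods_per_node)


# From the question: about 10 services with 3 pods each.
pod_count = 10 * 3

# Assumptions for illustration only; replace with your own values.
pod_request_mib = 512            # hypothetical per-pod memory request
allocatable_mib_per_node = 4608  # assumed ~4.5 GiB left for pods on a DS2_v2

print(nodes_needed(pod_count, pod_request_mib, allocatable_mib_per_node))
```

With these assumed numbers, 9 pods fit on a node, so 30 pods need 4 nodes. If the node pool is smaller than the estimate for your actual requests, that is consistent with pods staying in Pending.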

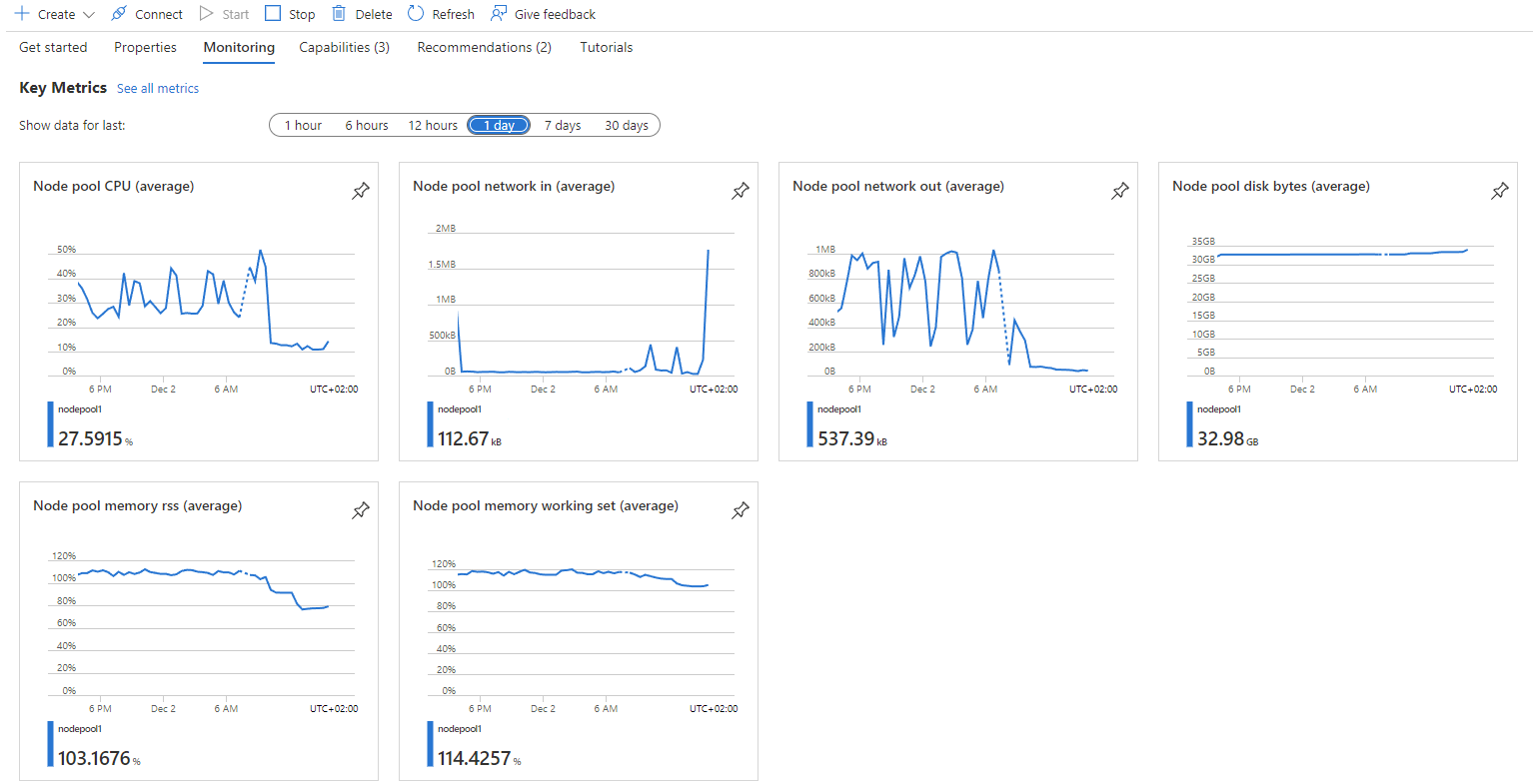

AKS deployment memory pressure - Node pool memory working set

Ammar Mohanna

- Description:

I created an AKS cluster and am adding several services to it (around 10), each configured to run 3 pods.

The node pool has the following parameters:

Node pools: 1 node pool

Kubernetes versions: 1.23.12

Node sizes: Standard_DS2_v2

- Status quo:

Some of the pods are stuck in 'Pending' status, so their API keeps responding with '503 Service Temporarily Unavailable'.

And sometimes, the pods are turning off and on constantly.

- Questions:

Am I using too many services for this size of the cluster?

Are there any suggestions or additional information that I can provide to make my question clearer?

Azure Kubernetes Service
