Hi @Dadheech, Raveesh,

Thank you for posting your query on the Microsoft Q&A platform.

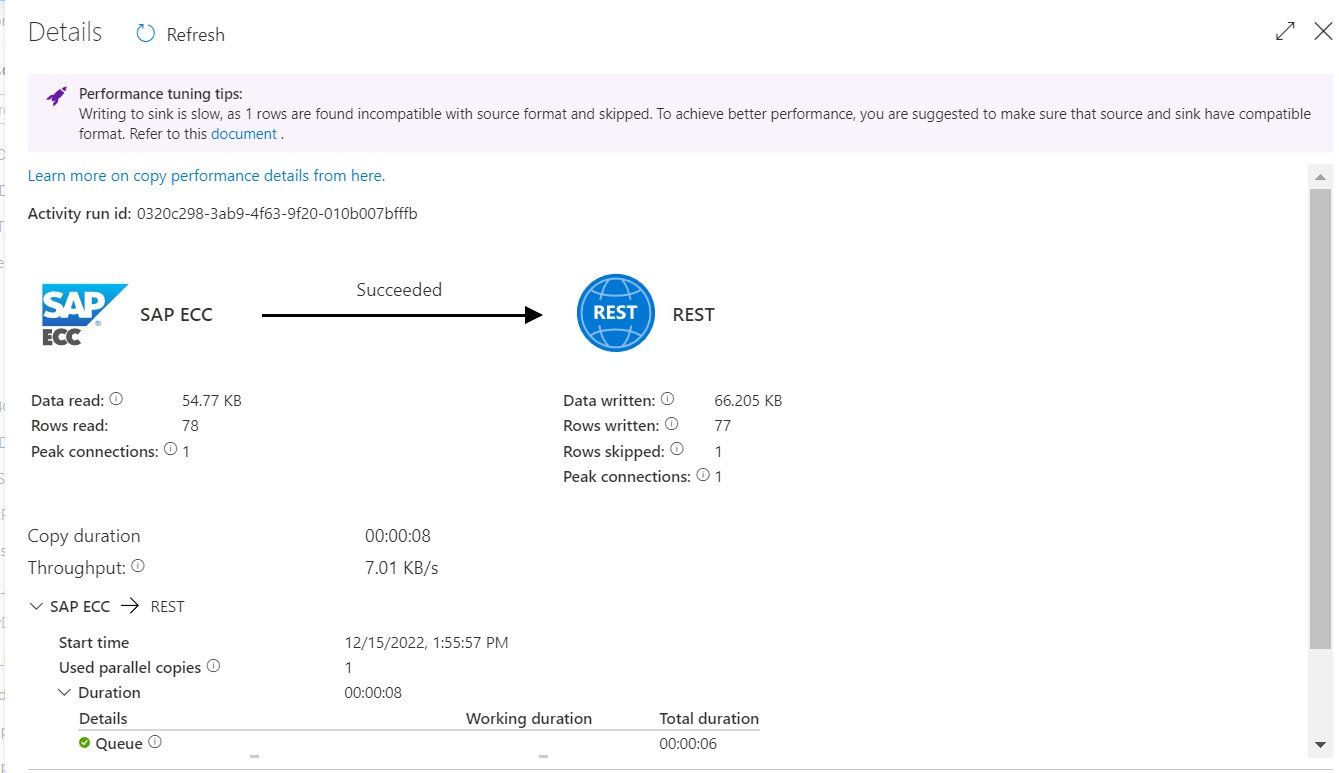

If I understand correctly, you are using an SAP ECC dataset as the source and a REST dataset as the sink, and you are unsure how the request body should be sent to the API in the sink. Please correct me if I am wrong.

Please note that the REST connector as a sink works with REST APIs that accept JSON. The data is sent as JSON in the following pattern. As needed, you can use the copy activity schema mapping to reshape the source data so that it conforms to the payload the REST API expects.

    [
        { <data object> },
        { <data object> },
        ...
    ]

So, kindly use the Mapping tab of the copy activity to reshape the data that gets sent to the API. For more details, see the documentation on the REST connector as a sink.
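To picture what the API receives, here is a rough Python sketch of the reshaping idea. It is only an illustration, not how the copy activity is implemented: the sample rows, the column names (`MATNR`, `MAKTX`), the target field names, and the `build_payload` helper are all hypothetical and stand in for your own SAP ECC columns and the fields your API expects.

```python
import json

# Hypothetical source rows, standing in for records read from SAP ECC.
source_rows = [
    {"MATNR": "1001", "MAKTX": "Bolt"},
    {"MATNR": "1002", "MAKTX": "Nut"},
]

# Hypothetical mapping: source column name -> field name expected by the API.
column_mapping = {"MATNR": "materialId", "MAKTX": "description"}


def build_payload(rows, mapping):
    """Rename columns per the mapping and return the batch as a JSON array string."""
    shaped = [{mapping[col]: row[col] for col in mapping} for row in rows]
    return json.dumps(shaped)


payload = build_payload(source_rows, column_mapping)
print(payload)
```

The point to take away is that the request body is a JSON array with one object per source row, and the mapping decides which field names appear in each object.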

Hope this helps. Please let me know if you have any further queries.

-------------

Please consider hitting the Accept Answer button. Accepted answers help the community as well.