Hi,

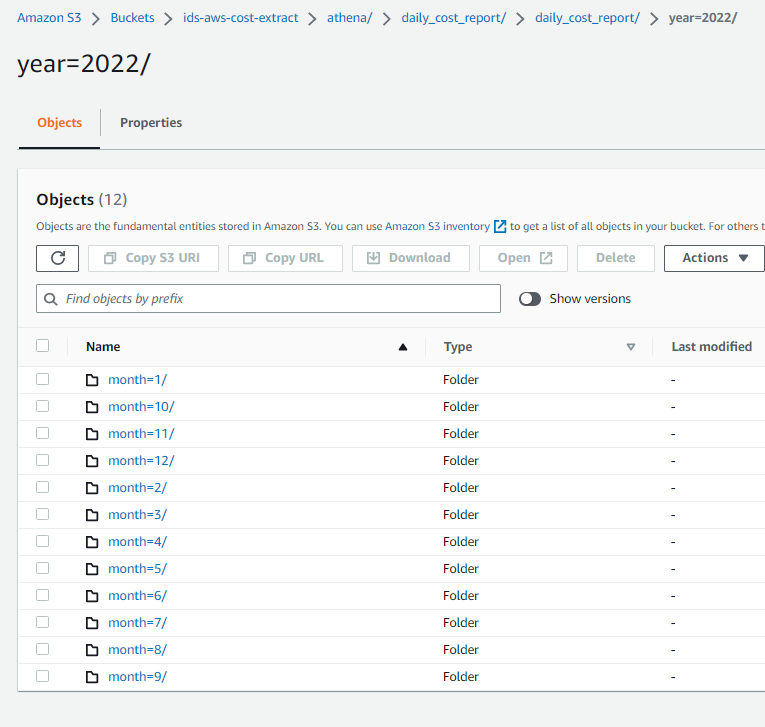

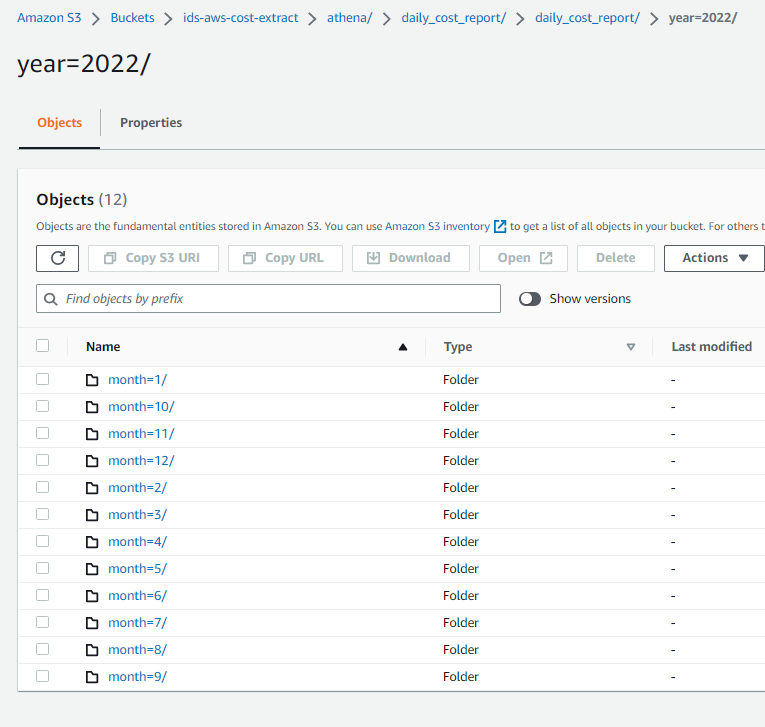

I have this hierarchy of folders in an S3 bucket.

Each folder contains Parquet files, and the file names are the same in every folder.

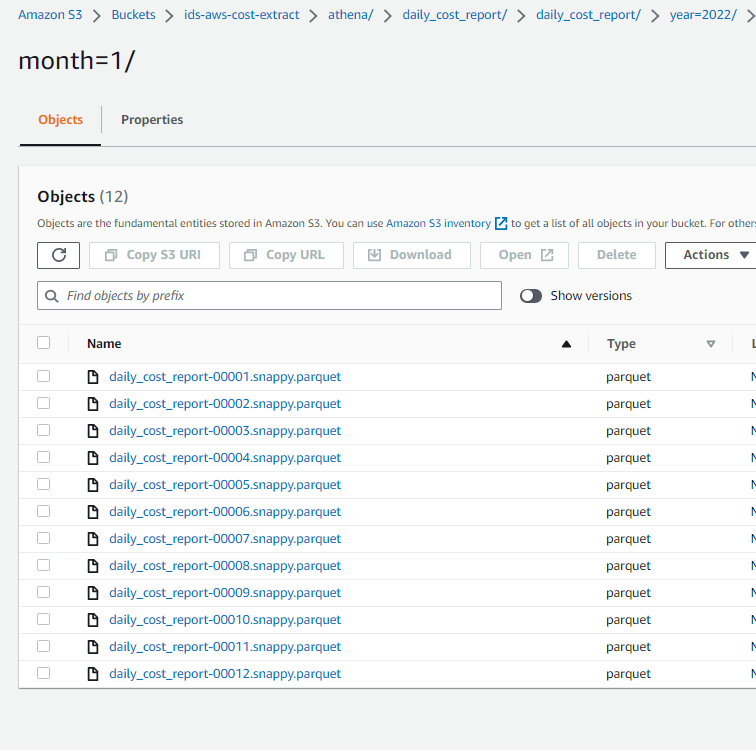

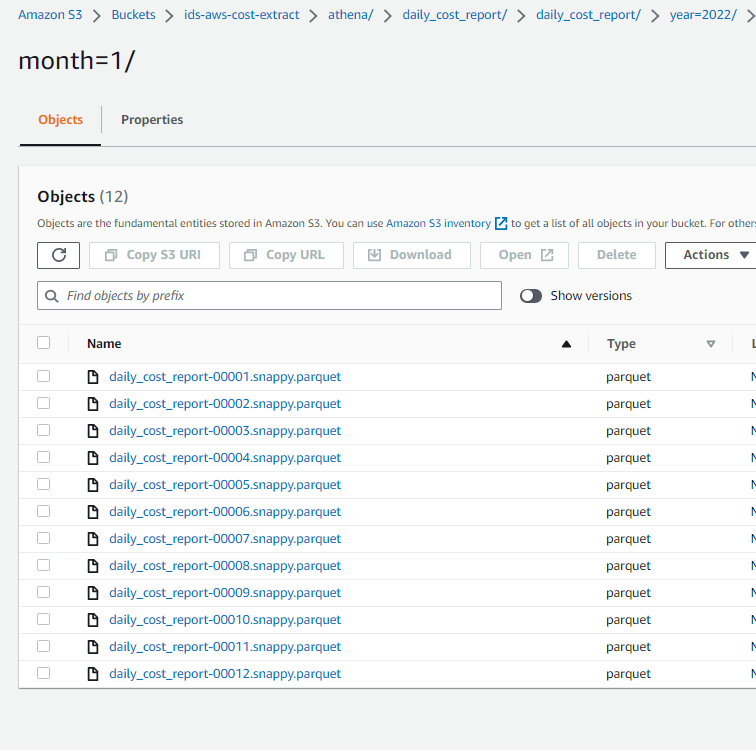

For example, the month=1 folder has these files:

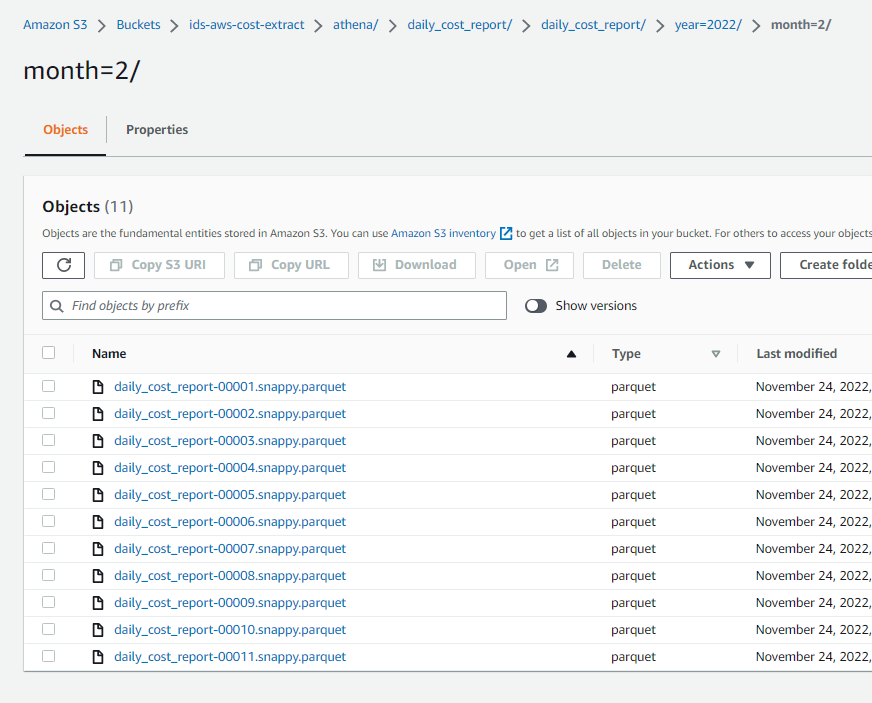

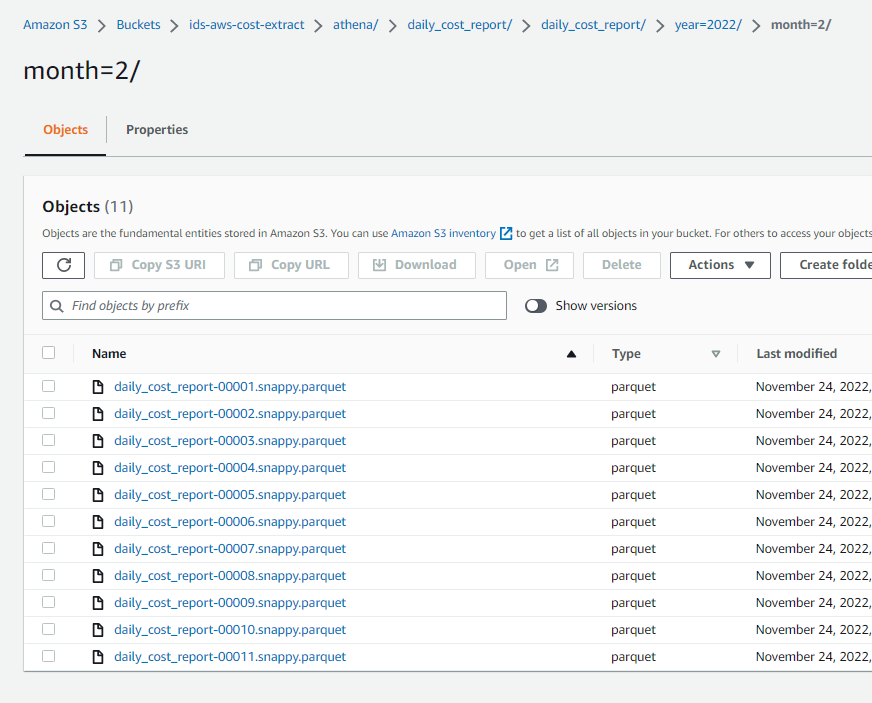

and the month=2 folder has these files:

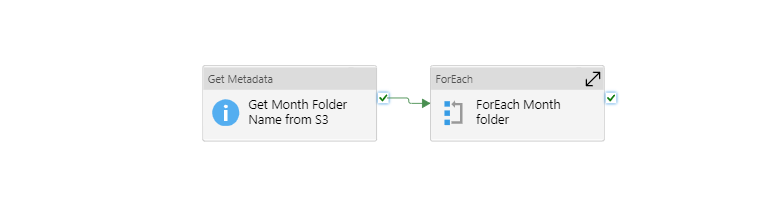

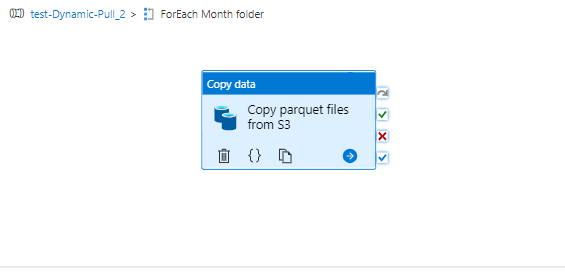

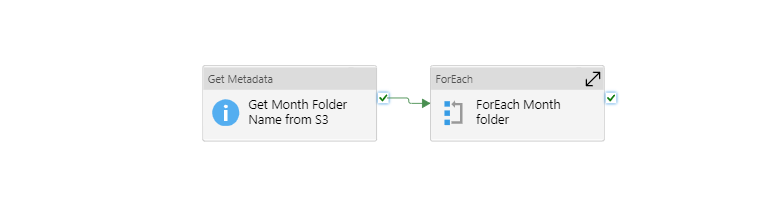

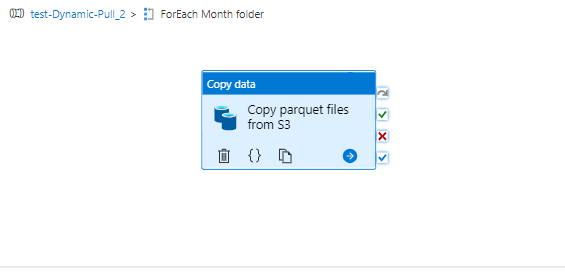

I have built this pipeline so far:

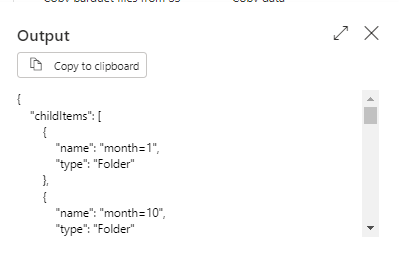

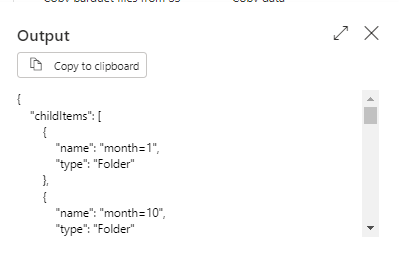

First, my Get Metadata activity grabs the folder names:

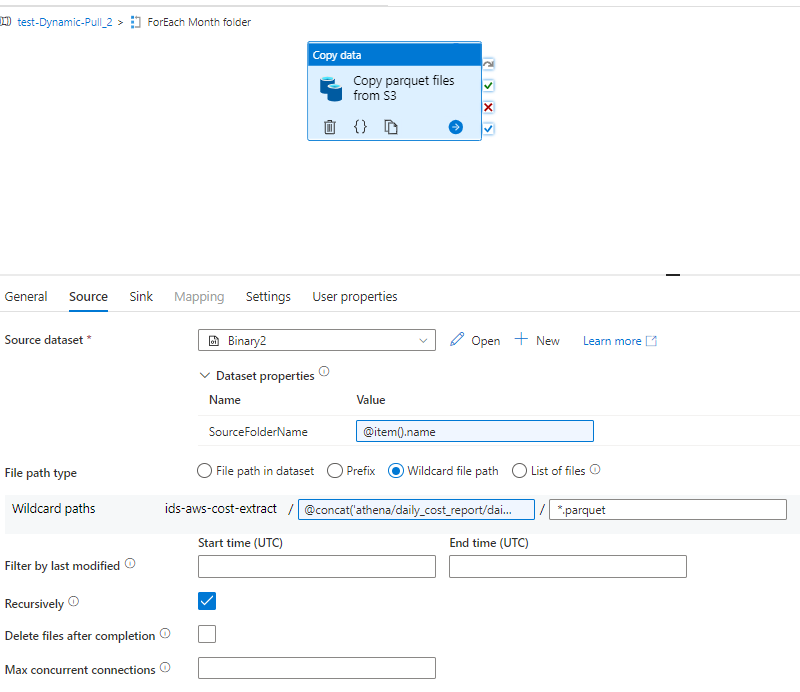

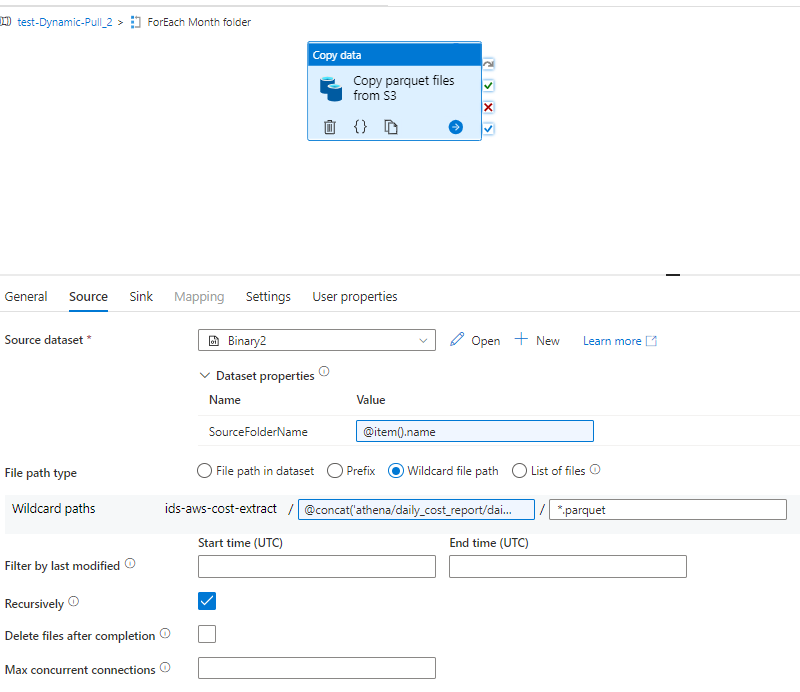

Then my ForEach activity iterates over each item returned by the Get Metadata activity. Inside the loop, a Copy activity uses the folder name in a wildcard path to copy all the Parquet files into a single folder in the data lake.

This is the dynamic expression in the wildcard path:

@concat('athena/daily_cost_report/daily_cost_report/year=2022/',item().name,'/')
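
For illustration, here is a rough Python equivalent of what that expression produces on each iteration. It assumes `item().name` is a folder name such as `month=1`; the function name `wildcard_folder_path` is hypothetical and not part of Data Factory.

```python
# Base prefix taken from the expression above.
BASE_PREFIX = "athena/daily_cost_report/daily_cost_report/year=2022/"


def wildcard_folder_path(folder_name: str) -> str:
    """Join the fixed prefix, the current folder name, and a trailing slash."""
    return f"{BASE_PREFIX}{folder_name}/"


# One path per folder returned by the metadata step (folder names assumed).
for folder in ["month=1", "month=2"]:
    print(wildcard_folder_path(folder))
```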

The Copy activity works, but because the file names are the same in every month folder, each iteration overwrites the files already in the sink.

The file names coming from the S3 bucket look like this:

daily_cost_report-00001.snappy.parquet

I want to save these files with a year and month suffix.

For example, for the January 2022 files:

daily_cost_report-00001_2022_01.snappy.parquet

daily_cost_report-00002_2022_01.snappy.parquet

daily_cost_report-00003_2022_01.snappy.parquet

For the February 2022 files:

daily_cost_report-00001_2022_02.snappy.parquet

daily_cost_report-00002_2022_02.snappy.parquet

and so on.
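
To make the naming rule concrete, here is a minimal Python sketch of the rename I am after. It is not Data Factory code. It assumes the month comes from a folder name of the form `month=N` and that the suffix goes before the first dot in the file name; the function name `suffixed_name` is hypothetical.

```python
def suffixed_name(file_name: str, year: int, month_folder: str) -> str:
    """Insert _YYYY_MM before the first dot of the file name."""
    # 'month=2' -> 2 (assumed folder naming convention)
    month = int(month_folder.split("=", 1)[1])
    # Split at the first dot so '.snappy.parquet' stays intact.
    stem, dot, extension = file_name.partition(".")
    return f"{stem}_{year}_{month:02d}{dot}{extension}"


print(suffixed_name("daily_cost_report-00001.snappy.parquet", 2022, "month=1"))
```

With this rule, files from different month folders get distinct names in the sink, so they no longer overwrite each other.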

I am not able to get the file names inside the ForEach activity. How can I achieve this?

Please suggest @MartinJaffer-MSFT