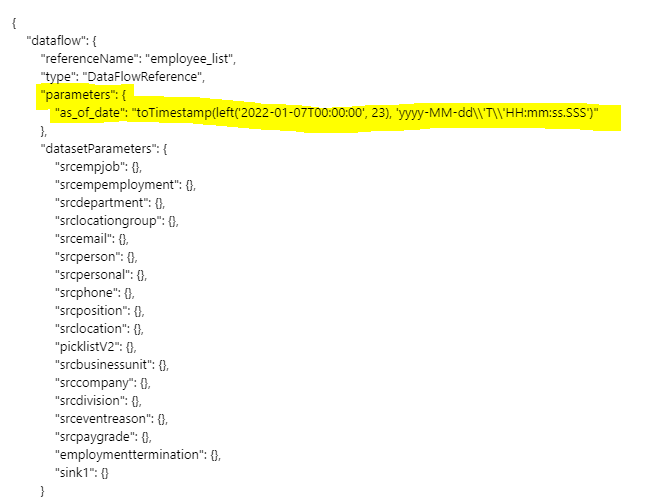

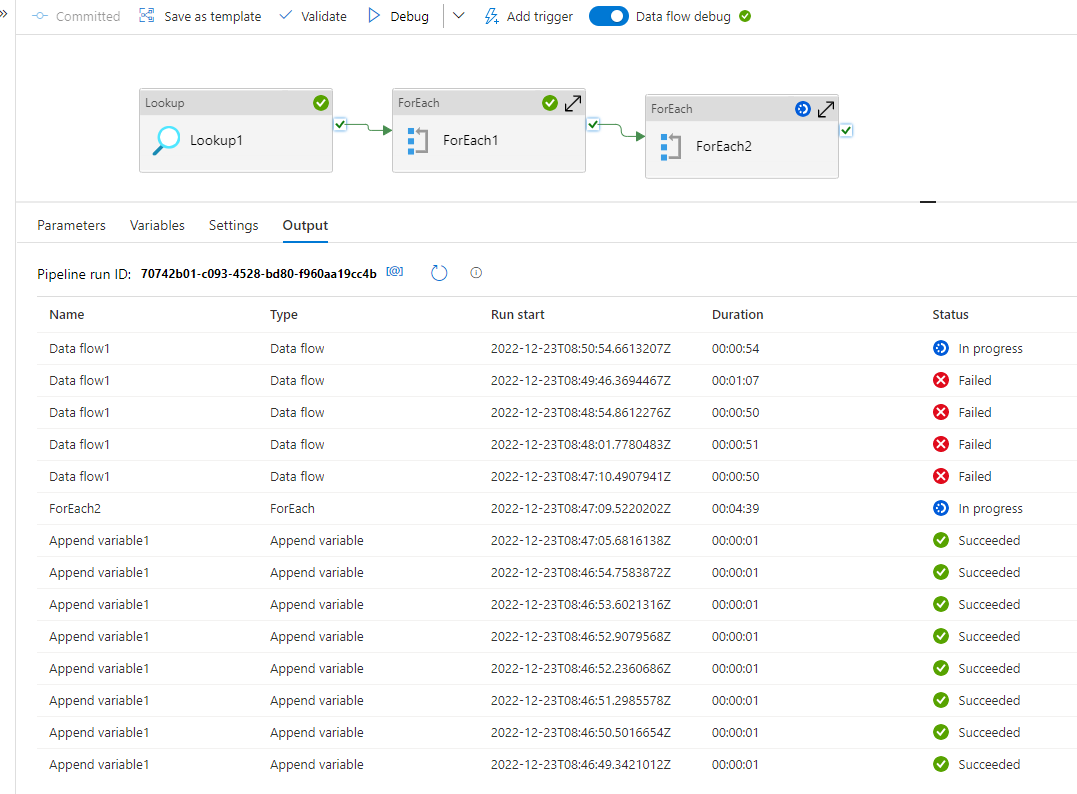

It sounds like the parameter value is sometimes either empty or cannot be converted to a valid timestamp. In your monitoring view above in ADF, hover over one of the failed data flow activities and click the input icon. Check the value of the input parameter being sent to the data flow activity to make sure it is valid.

How to set dynamic output file name in data flow

Hello.

I would like to dynamically set the name of the file to be output to the sink in the data flow, but I am getting an error.

{"message":"Job failed due to reason: at Sink 'sink1': File names cannot have empty value(s) while file name option is set as per partition. Details:","failureType":"UserError","target":"Data flow1","errorCode":"DF-Executor-InvalidPartitionFileNames"}

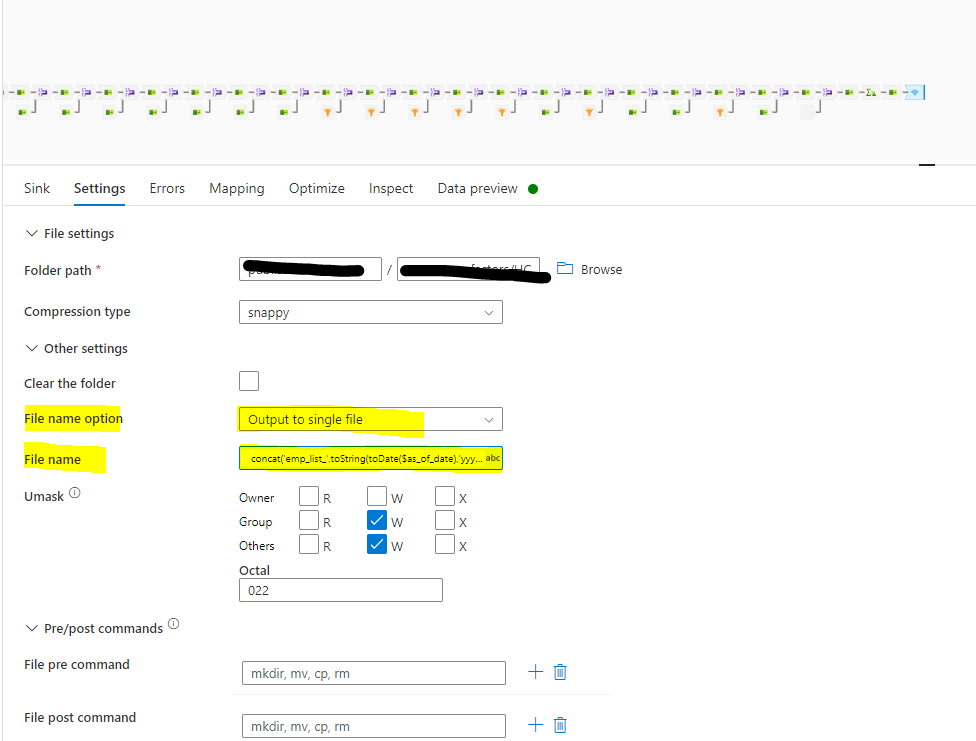

Sink settings are described below.

・Output as a single file.

・The name of the file to output is "emp_list_yyyyMMdd.parquet".

concat('emp_list_',toString(toDate($as_of_date),'yyyy'),toString(toDate($as_of_date),'MM'),toString(toDate($as_of_date),'dd'),'.parquet')

*'$as_of_date' is a parameter of the data flow.

For example, if the parameter passed is "2022-01-07T00:00:00", I want to output only one file, 'emp_list_20220107.parquet'.
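To make the intended result concrete, here is a minimal Python sketch of the same naming rule. It is only an illustration outside of the data flow: `build_file_name` is a hypothetical helper, and the `%Y%m%d` format is assumed to play the role of the `'yyyy'`, `'MM'`, `'dd'` pieces in the `concat()` expression above.

```python
from datetime import datetime


def build_file_name(as_of_date: datetime) -> str:
    """Return 'emp_list_yyyyMMdd.parquet' for the given timestamp."""
    # %Y%m%d stands in for the 'yyyy', 'MM' and 'dd' parts of the concat() expression.
    return f"emp_list_{as_of_date:%Y%m%d}.parquet"


# The example value from the question.
print(build_file_name(datetime.fromisoformat("2022-01-07T00:00:00")))
# prints "emp_list_20220107.parquet"
```

Note that the name can only be built when the timestamp itself is valid. If the parameter arrives empty or null, there is nothing to format.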

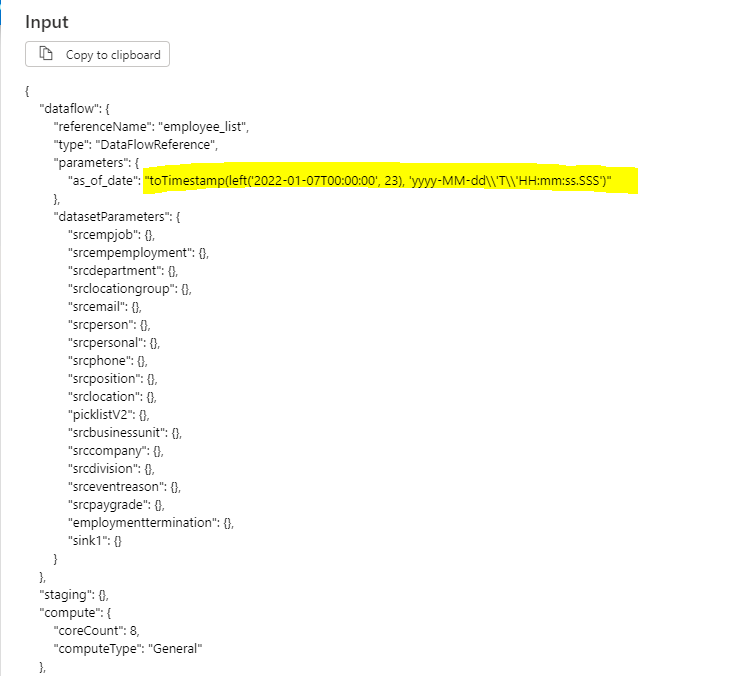

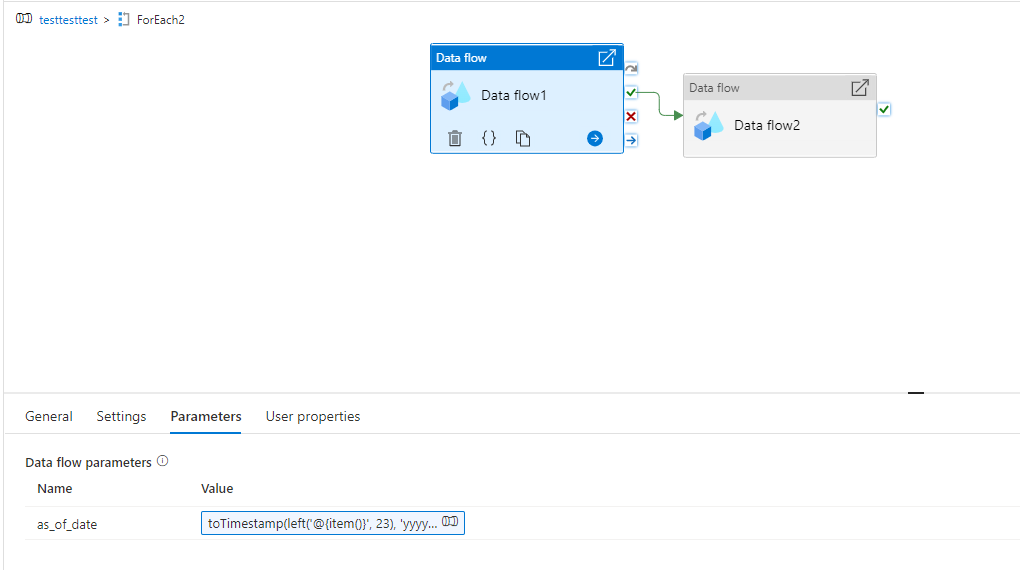

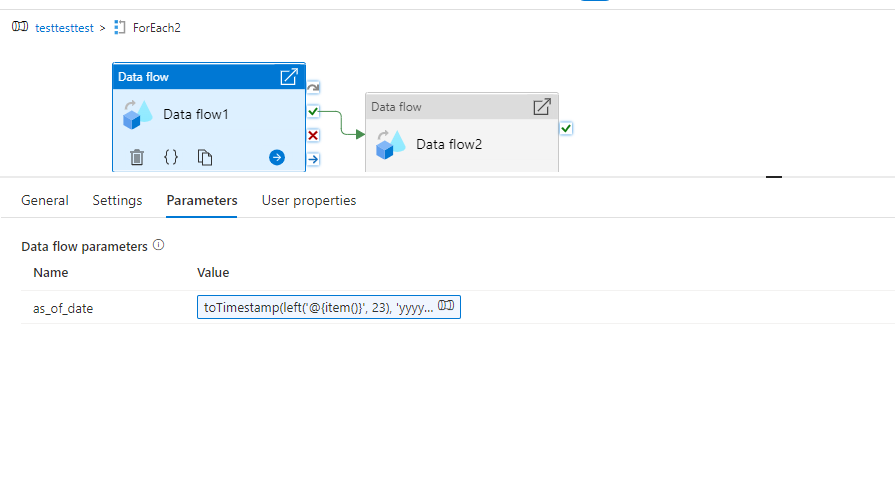

・The parameter '$as_of_date' is of type timestamp and is passed from the pipeline with the following setting:

toTimestamp(left('@{item()}', 23), 'yyyy-MM-dd\'T\'HH:mm:ss.SSS')
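One way to see how this setting could produce an empty value is to imitate it outside the data flow. The Python sketch below is an analogy only, not the data flow engine: `parse_as_of_date` is a hypothetical helper, `item[:23]` stands in for `left(..., 23)`, `%f` is a loose stand-in for `SSS`, and returning `None` on a failed parse is an assumption standing in for a null result. The sample inputs are made up for illustration.

```python
from datetime import datetime
from typing import Optional

# Stand-in for the pattern 'yyyy-MM-dd'T'HH:mm:ss.SSS' (assumption: %f ~ SSS).
PATTERN = "%Y-%m-%dT%H:%M:%S.%f"


def parse_as_of_date(item: str) -> Optional[datetime]:
    """Imitate toTimestamp(left(item, 23), pattern); None stands in for null."""
    try:
        # item[:23] keeps at most the first 23 characters, like left(item, 23).
        return datetime.strptime(item[:23], PATTERN)
    except ValueError:
        # The text does not match the pattern (or is empty).
        return None


# Hypothetical item() values: with fractional seconds, without them, and empty.
for candidate in ["2022-01-07T00:00:00.0000000Z", "2022-01-07T00:00:00", ""]:
    print(repr(candidate), "->", parse_as_of_date(candidate))
```

In this sketch, only the first value parses. The value without fractional seconds and the empty value both fail, which is the kind of input worth looking for when inspecting the parameter sent to the failed activity.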

Azure Synapse Analytics

Azure Databricks

Azure Data Factory

1 answer

MarkKromer-MSFT 5,231 Reputation points Microsoft Employee Moderator 2022-12-24T01:13:50.843+00:00