Cannot access Azure Storage File via a DataStore

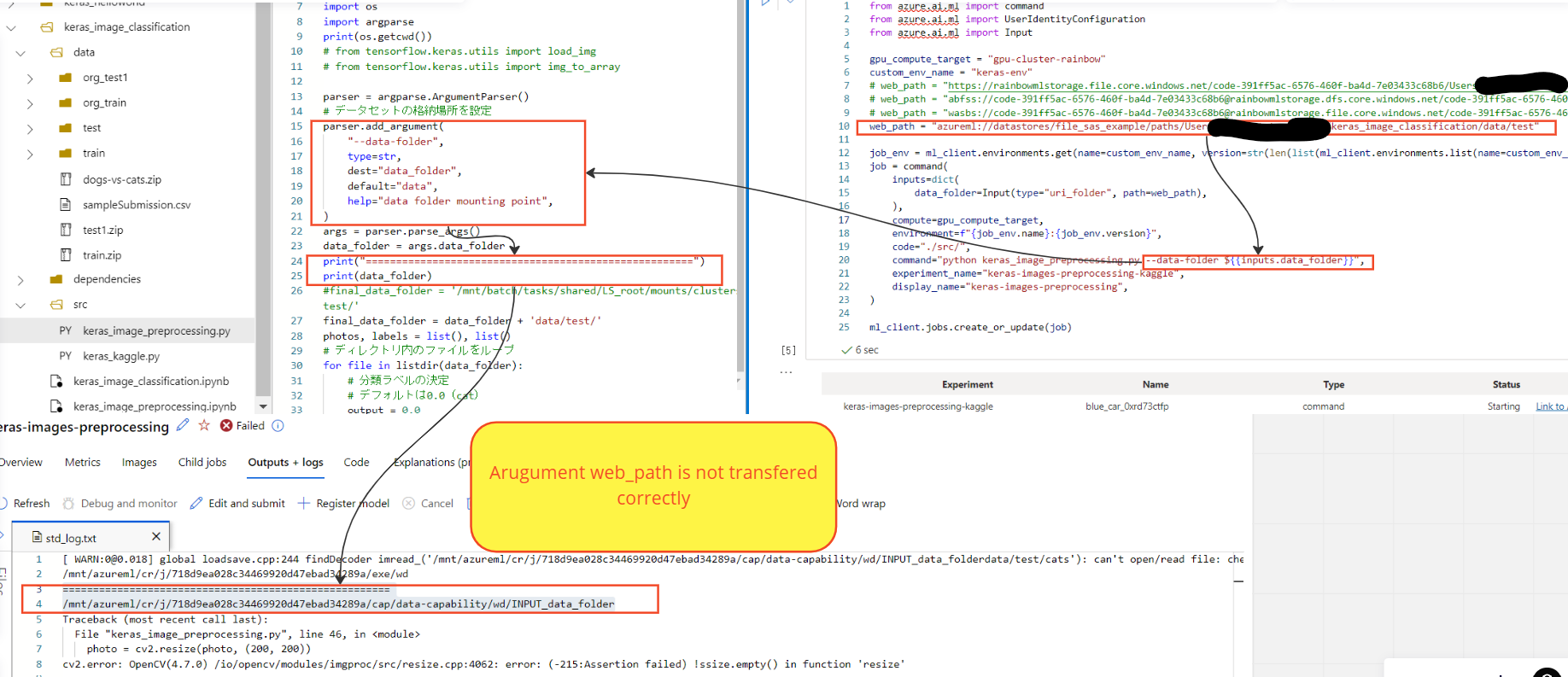

(1) Overview of Error

When I try to access an Azure Storage file share from an Azure Machine Learning notebook, I get the following error.

Attachment : 1_Error.JPG

Error Message : DataAccessError(NotFound)

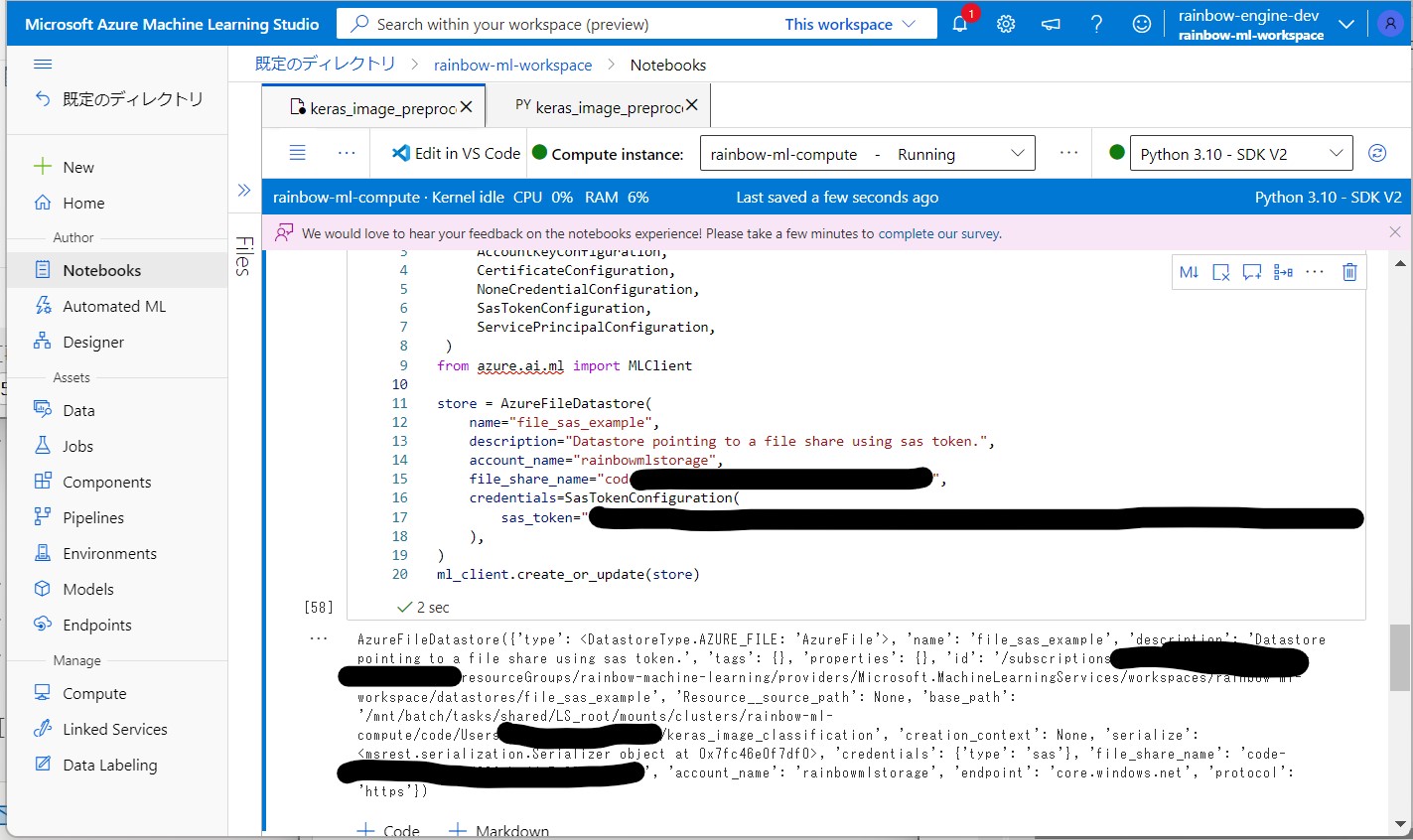

(2) Detail of my Notebook

Thanks to @romungi-MSFT in this post, I succeeded in creating an 'AzureFileDatastore' using the following piece of code.

===
from azure.ai.ml.entities import AzureFileDatastore
from azure.ai.ml.entities._credentials import (
    AccountKeyConfiguration,
    CertificateConfiguration,
    NoneCredentialConfiguration,
    SasTokenConfiguration,
    ServicePrincipalConfiguration,
)
from azure.ai.ml import MLClient

# ml_client is assumed to be an authenticated MLClient created earlier in the notebook (not shown here).

# Register a datastore that points to the file share and authenticates with a SAS token.
store = AzureFileDatastore(
    name='file_sas_example',
    description='Datastore pointing to a file share using sas token.',
    account_name='rainbowmlstorage',
    file_share_name='xxxxxxxxx',
    credentials=SasTokenConfiguration(
        sas_token='xxxxxxxxxxxxxxxxxx'
    ),
)
ml_client.create_or_update(store)
===

Attachment : 2_Succeed_creating_DataStore.JPG

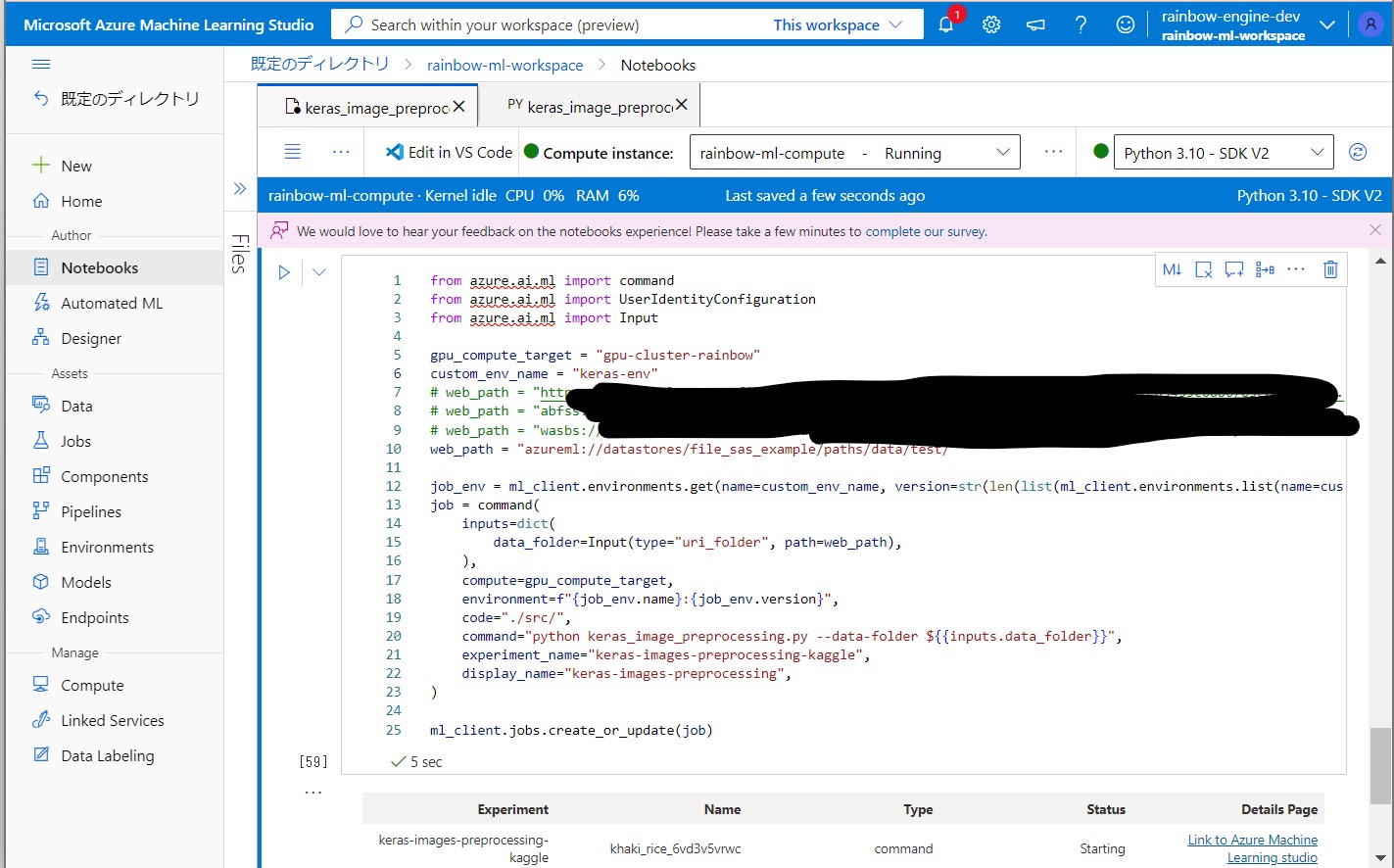

(3) Access to the data via DataStore

Finally, I get the error shown at the top when I run the following piece of code from the notebook.

===
from azure.ai.ml import command
from azure.ai.ml import UserIdentityConfiguration
from azure.ai.ml import Input

gpu_compute_target = 'gpu-cluster-rainbow'
custom_env_name = 'keras-env'

# Referred to this document: https://learn.microsoft.com/en-us/azure/machine-learning/how-to-read-write-data-v2?tabs=python
# and tried to build the path as 'azureml://datastores/(data_store_name)/paths/(path)'.
web_path = 'azureml://datastores/file_sas_example/paths/data/test/'

# Fetch the environment, using the number of registered versions as the version to get.
job_env = ml_client.environments.get(
    name=custom_env_name,
    version=str(len(list(ml_client.environments.list(name=custom_env_name)))),
)

job = command(
    inputs=dict(
        data_folder=Input(type='uri_folder', path=web_path),
    ),
    compute=gpu_compute_target,
    environment=f'{job_env.name}:{job_env.version}',
    code='./src/',
    command='python keras_image_preprocessing.py --data-folder ${{inputs.data_folder}}',
    experiment_name='keras-images-preprocessing-kaggle',
    display_name='keras-images-preprocessing',
)

ml_client.jobs.create_or_update(job)
===
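
For reference, the 'azureml://datastores/(data_store_name)/paths/(path)' format mentioned in the code comment can be sketched in plain Python. The helper names `build_datastore_uri` and `parse_datastore_uri` are hypothetical (they are not part of the azure-ai-ml SDK). This only checks the string format; it does not verify that the folder actually exists in the file share.

```python
import re
from typing import Tuple


def build_datastore_uri(datastore_name: str, path: str) -> str:
    """Build a URI of the form azureml://datastores/<name>/paths/<path>."""
    if not datastore_name or "/" in datastore_name:
        raise ValueError("datastore_name must be a non-empty name without '/'")
    # Drop a leading slash so there is exactly one '/' after 'paths'.
    return f"azureml://datastores/{datastore_name}/paths/{path.lstrip('/')}"


def parse_datastore_uri(uri: str) -> Tuple[str, str]:
    """Split a datastore URI back into (datastore_name, path)."""
    match = re.fullmatch(r"azureml://datastores/([^/]+)/paths/(.*)", uri)
    if match is None:
        raise ValueError(f"not a datastore URI: {uri!r}")
    return match.group(1), match.group(2)


# Values taken from the notebook code in this post.
web_path = build_datastore_uri("file_sas_example", "data/test/")
print(web_path)
print(parse_datastore_uri(web_path))
```

The datastore name in the URI has to match the `name` given when the datastore was created ('file_sas_example' here), and the part after `paths/` is interpreted relative to the root of the file share.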

Attachment : 3_Error_when_access_Datastore.JPG

And this is the final error message shown in "lifecycler.log":

2023-01-04T12:47:09.731127Z ERROR run_lifecycler:run_service_and_step_through_lifecycle:step_through_lifecycle: lifecycler::lifecycle: failed to start capabilities exception=CapabilityError(StartError(ErrorResponse("DATA_CAPABILITY", Response { code: "500", error: Some(Error { code: "data-capability.UriMountSession.PyFuseError", message: "DataAccessError(NotFound)", target: "UriMountSession:INPUT_data_folder", node_info: Some(NodeInfo { node_id: "tvmps_xxxxxx_p", vm_id: "xxxxxx" }), category: UserError, error_details: [ErrorDetail { key: "NonCompliantReason", value: "DataAccessError(NotFound)" }, ErrorDetail { key: "StackTrace", value: " File \"/opt/miniconda/envs/data-capability/lib/python3.7/site-packages/data_capability/capability_session.py\", line 70, in start\n (data_path, sub_data_path) = session.start()\n\n File \"/opt/miniconda/envs/data-capability/lib/python3.7/site-packages/data_capability/data_sessions.py\", line 386, in start\n options=mnt_options\n\n File \"/opt/miniconda/envs/data-capability/lib/python3.7/site-packages/azureml/dataprep/fuse/dprepfuse.py\", line 680, in rslex_uri_volume_mount\n raise e\n\n File \"/opt/miniconda/envs/data-capability/lib/python3.7/site-packages/azureml/dataprep/fuse/dprepfuse.py\", line 674, in rslex_uri_volume_mount\n mount_context = RslexDirectURIMountContext(mount_point, uri, options)\n" }], inner_error: None }) })))
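
Since that log line is hard to read, here is a minimal sketch that pulls out the key fields with a regular expression. The `log_line` string is a shortened excerpt of the line above (the stack trace is left out), and the pattern is only an assumption based on how this one line is laid out, not a documented log format.

```python
import re

# Shortened excerpt of the lifecycler.log line above (stack trace omitted).
log_line = (
    'error: Some(Error { code: "data-capability.UriMountSession.PyFuseError", '
    'message: "DataAccessError(NotFound)", '
    'target: "UriMountSession:INPUT_data_folder", '
    'node_info: Some(NodeInfo { node_id: "tvmps_xxxxxx_p", vm_id: "xxxxxx" }), '
    'category: UserError'
)

# Capture the error code, message, and target that appear next to each other.
pattern = re.compile(
    r'code: "(?P<code>[^"]+)", '
    r'message: "(?P<message>[^"]+)", '
    r'target: "(?P<target>[^"]+)"'
)

match = pattern.search(log_line)
fields = match.groupdict() if match else {}

for key, value in fields.items():
    print(f"{key}: {value}")
```

The extracted target, `UriMountSession:INPUT_data_folder`, indicates that the failure occurs while mounting the `data_folder` input, and the message is the same `DataAccessError(NotFound)` shown at the top of this post.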