Hi everyone.

I think I know what's going on here.

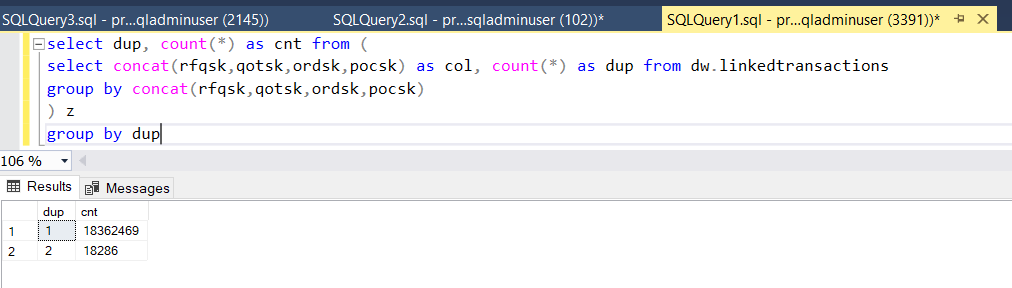

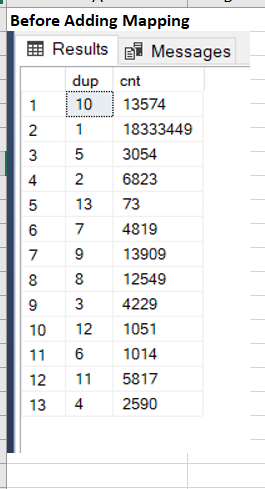

I have the same problem in my project, and I'm going crazy, but I have a clue:

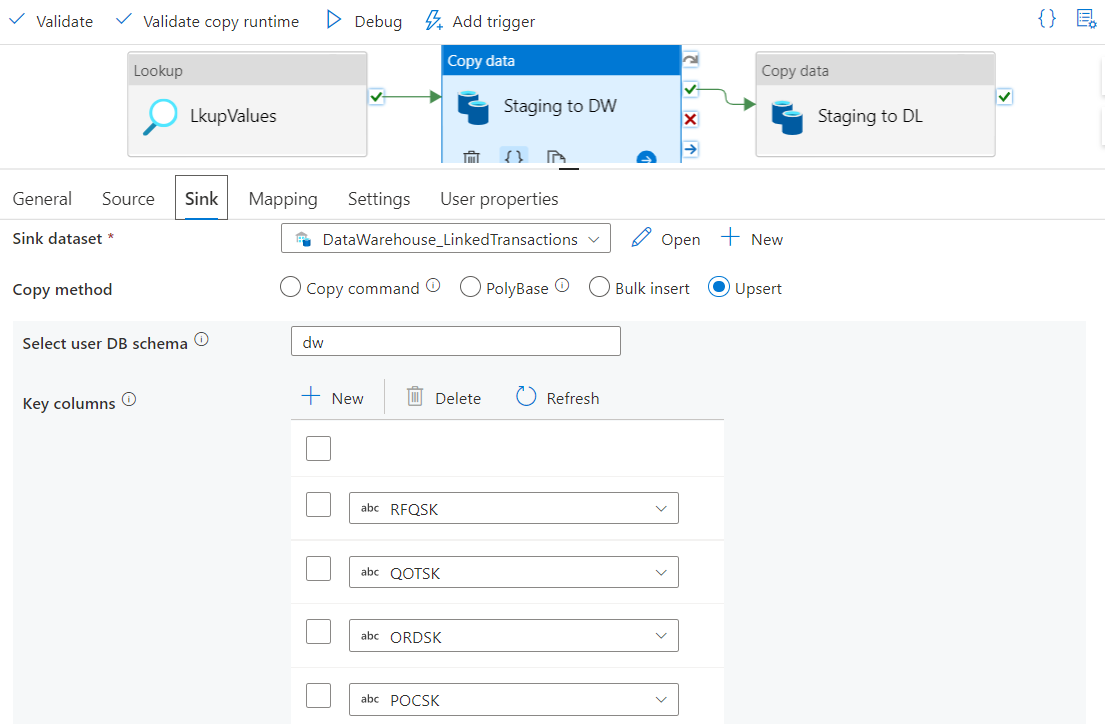

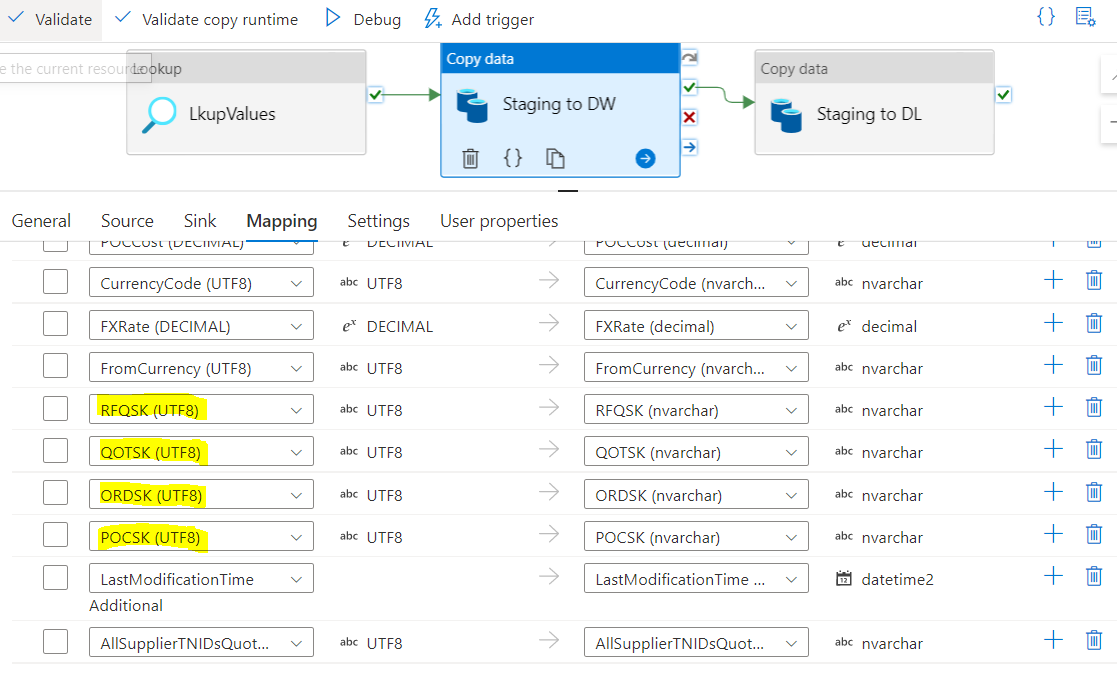

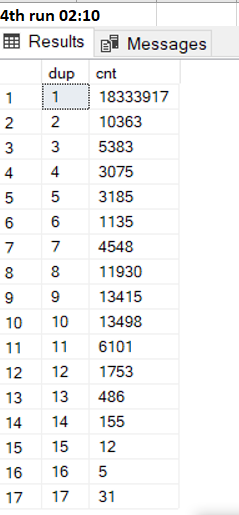

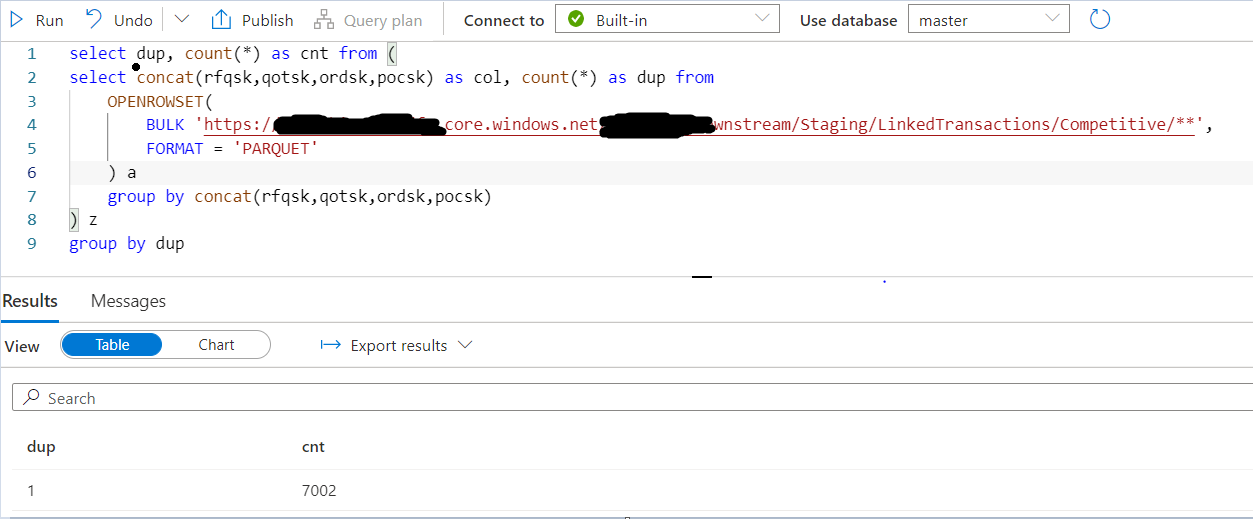

When I get duplicates at the destination but NOT at the source, it is because of the key columns: one of them may be null, so the Copy Data activity does not concatenate the key columns correctly and the existing row is never matched. This could be very common, for example, in Spark SQL.
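To illustrate the idea, here is a minimal sketch in Python with SQLite. It is not how the Copy Data activity works internally (I don't know its implementation); it only assumes that the upsert compares key columns in a way that is not null-safe. The table `target`, the columns `id`, `region`, `value`, and the helpers `upsert` and `run_twice` are all made up for this example.

```python
import sqlite3

def upsert(conn, rows, null_safe):
    # Hypothetical upsert keyed on (id, region).
    # "=" never matches NULL; SQLite's "IS" treats NULL as equal to NULL.
    op = "IS" if null_safe else "="
    for id_, region, value in rows:
        cur = conn.execute(
            f"UPDATE target SET value = ? WHERE id {op} ? AND region {op} ?",
            (value, id_, region),
        )
        if cur.rowcount == 0:
            # No existing row matched the key, so the row is inserted.
            conn.execute(
                "INSERT INTO target (id, region, value) VALUES (?, ?, ?)",
                (id_, region, value),
            )

def run_twice(null_safe):
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE target (id INTEGER, region TEXT, value INTEGER)")
    # The source has no duplicates, but one key column is null in the second row.
    source = [(1, "EU", 10), (2, None, 20)]
    upsert(conn, source, null_safe)
    upsert(conn, source, null_safe)  # second load of the same data
    return conn.execute("SELECT COUNT(*) FROM target").fetchone()[0]

print(run_twice(null_safe=False))  # 3: the row with the null key was inserted twice
print(run_twice(null_safe=True))   # 2: no duplicates
```

If this is what is happening in your pipeline, one possible workaround is to replace nulls in the key columns with a placeholder value before the copy, or to choose key columns that are never null.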

Please let me know if this is a valid solution for you.