This error message is driving me crazy!!!

Error:

{"message":"Job failed due to reason: at Source 'rawRecords': Path /DataLake-Bronze/Folder/part-00000-74f8b50e-67cf-4106-a04f-79d55b4b6243-c000.csv does not resolve to any file(s). Please make sure the file/folder exists and is not hidden. At the same time, please ensure special character is not included in file/folder name, for example, name starting with _. Details:","failureType":"UserError","target":"IncrementalLoad","errorCode":"DF-Executor-InvalidPath"}

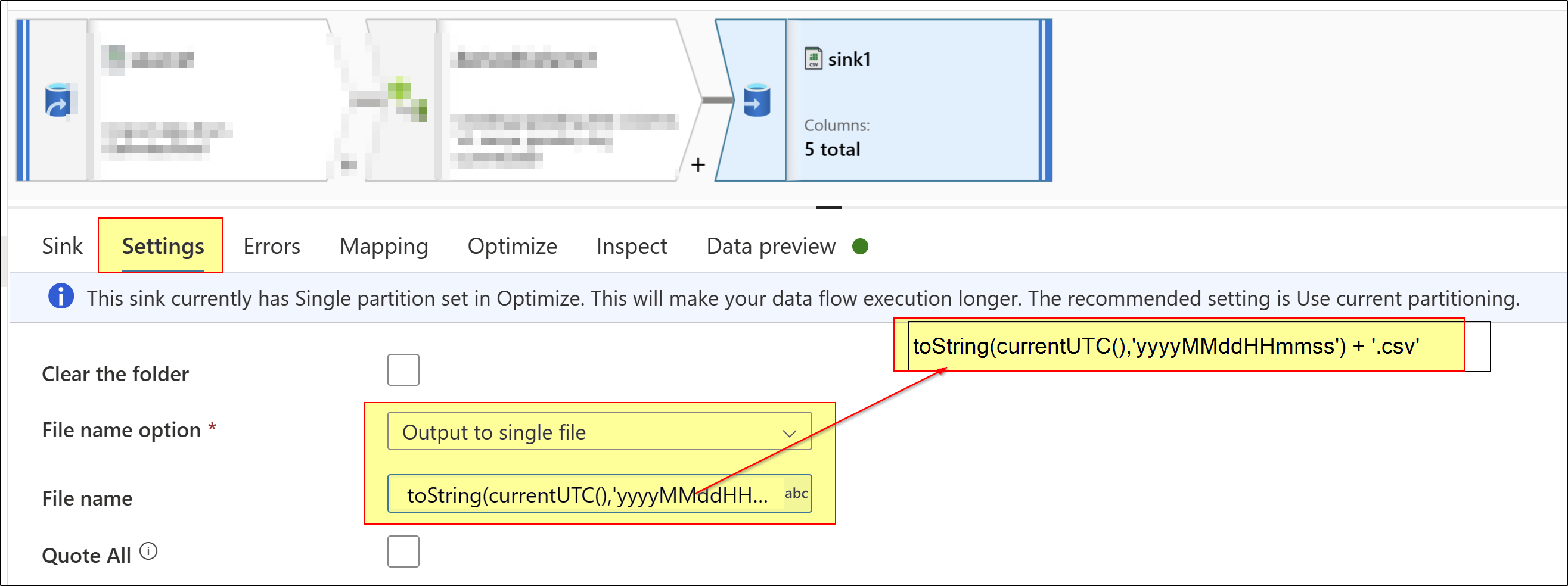

I have two pipelines. The first, P1, gets CDC records from a SQL table and loads them into the Data Lake bronze layer in .csv format. This pipeline runs a very simple data flow that consists of a Source and a Sink. I give each file a name by passing the “triggerStartTime” parameter from the pipeline.

@concat(formatDateTime(pipeline().parameters.triggerStartTime,'yyyyMMddHHmmssfff'),'.csv') (For example, files are called

P1 is run by a tumbling window trigger. Adding a file to the Data Lake bronze layer in turn fires the storage event trigger, which runs the second pipeline, P2.

The second pipeline, P2, runs a data flow that checks the records in the new file and decides whether they are new records, updates of existing records, etc. It then applies the required transformations and finally loads the new and updated records (via the Sink activity) to the Silver layer. The name of the file that has newly arrived in the bronze layer is passed from the storage event trigger to pipeline P2 and its data flow via @trigger().outputs.body.fileName

However, running pipeline P2 fails at the first step of the data flow, i.e. the Source, with the error message above. The problem is that the file name passed from the trigger to the pipeline (part-00000-74f8b50e-67cf-4106-a04f-79d55b4b6243-c000.csv) does not exist in the bronze layer, because when I load files to bronze I have already renamed them, as mentioned above.
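To make the mismatch concrete, here is a minimal Python sketch (not ADF code) of the naming rule and of a check that tells the two kinds of names apart. It assumes that `fff` in the format string means milliseconds, so a renamed file is 17 digits followed by `.csv`. The function names `bronze_file_name` and `is_renamed_file` and the sample timestamp are hypothetical and only there for illustration.

```python
import re
from datetime import datetime

def bronze_file_name(trigger_start: datetime) -> str:
    """Mimic formatDateTime(..., 'yyyyMMddHHmmssfff') + '.csv' (assumed semantics)."""
    millis = trigger_start.microsecond // 1000  # 'fff' taken to mean milliseconds
    return f"{trigger_start:%Y%m%d%H%M%S}{millis:03d}.csv"

# Names produced by the rule above: 14 date/time digits + 3 millisecond digits.
EXPECTED_NAME = re.compile(r"\d{17}\.csv")

def is_renamed_file(file_name: str) -> bool:
    """True if the name looks like one produced by the P1 naming rule."""
    return EXPECTED_NAME.fullmatch(file_name) is not None

# Hypothetical trigger start time, for illustration only.
start = datetime(2023, 1, 15, 8, 30, 0, 123000)
renamed = bronze_file_name(start)

# The name the storage event trigger actually passed to P2 (from the error).
from_trigger = "part-00000-74f8b50e-67cf-4106-a04f-79d55b4b6243-c000.csv"

print(renamed, is_renamed_file(renamed))
print(from_trigger, is_renamed_file(from_trigger))
```

The name that reaches P2 does not follow the naming rule at all, so it looks as if the trigger fired for a file with the original part-... name rather than for the renamed one.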

Any advice to help me resolve this issue would be greatly appreciated.