I have created a pipeline in Azure Data Factory (ADF) that triggers a Delta Live Tables (DLT) pipeline in Azure Databricks through a Web activity, as described in the Microsoft documentation.

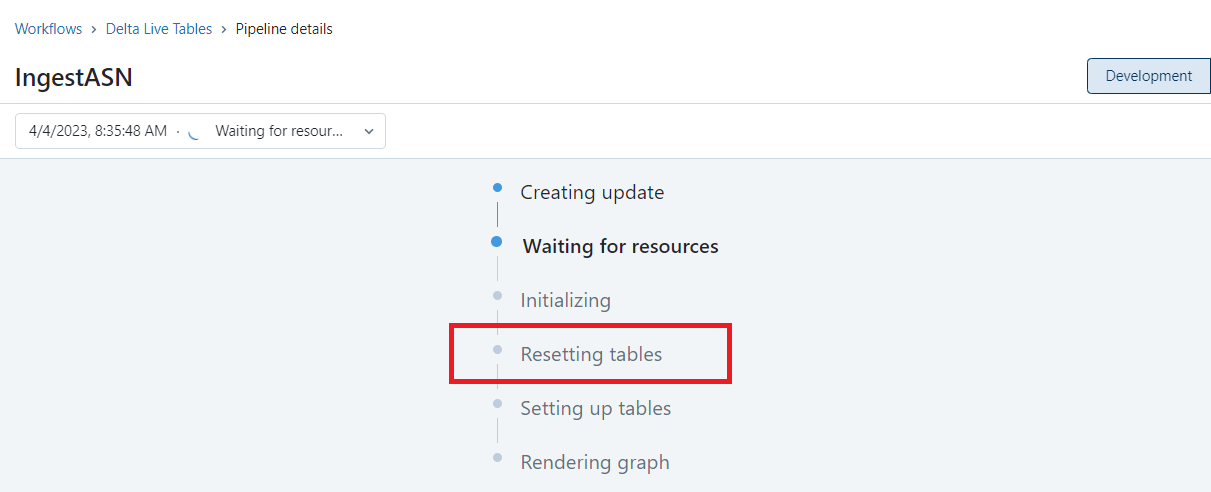

My problem is that when I trigger my DLT pipeline from ADF, it resets all of the tables, so my data is unavailable while the pipeline runs. Here's what it looks like with this extra step:

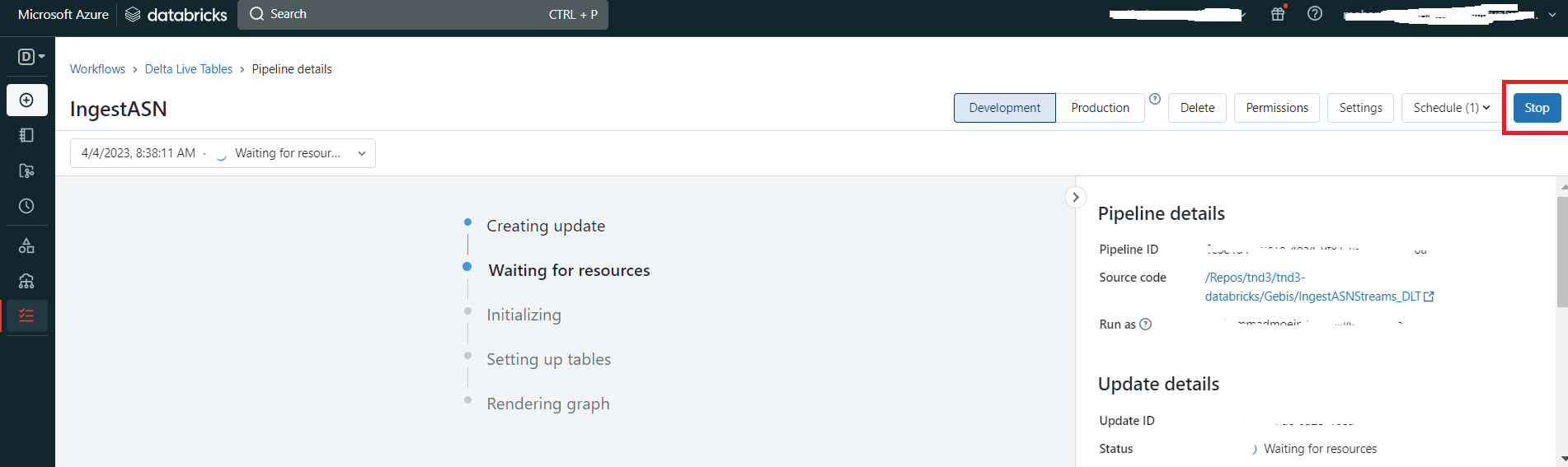

However, when I run it directly from the Databricks UI, the tables are not reset and the data stays available while the pipeline runs. Here's what it looks like:

I would like to get the same behavior from ADF that I get when I trigger the pipeline directly from the Databricks UI. I don't want this additional "resetting tables" step in my DLT pipeline when I trigger it from ADF.

Additional information: My DLT pipeline is a streaming pipeline, meaning that it should only pick up the new files that have been added to my source since the last run. That's exactly my problem: resetting the tables makes it pick up every single historical file, as if I were running the pipeline for the first time.
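
For reference, here is a minimal sketch of the kind of request a Web activity like this sends, assuming it calls the Databricks Pipelines REST API start-update endpoint (`POST /api/2.0/pipelines/{pipeline_id}/updates`). The helper name `build_start_update_request`, the workspace URL, the pipeline ID, and the token are all hypothetical placeholders, not values from my setup. The request is only built, not sent. The field to look at is `full_refresh`: when it is true, the API performs a full refresh, which would match the "resetting tables" step described above. Whether that is actually the cause here is an assumption.

```python
import json
import urllib.request


def build_start_update_request(workspace_url, pipeline_id, token, full_refresh=False):
    """Build (but do not send) a start-update request for a DLT pipeline.

    Hypothetical helper for illustration; all argument values are placeholders.
    """
    url = f"{workspace_url.rstrip('/')}/api/2.0/pipelines/{pipeline_id}/updates"
    # full_refresh=False asks for a normal incremental update;
    # full_refresh=True resets the tables and reprocesses all source data.
    body = json.dumps({"full_refresh": full_refresh}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


req = build_start_update_request(
    "https://adb-0000000000000000.0.azuredatabricks.net",  # placeholder workspace URL
    "my-pipeline-id",  # placeholder pipeline ID
    "my-token",  # placeholder access token
)
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

In ADF terms, the JSON printed on the last line corresponds to the Body of the Web activity, so that is where a `full_refresh` value would be set.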