Hi @jigsm ,

Thanks for your response and confirmation. Please follow the steps below to achieve your requirement.

Note: Please make sure that your subscription is registered with the Event Grid resource provider, as event triggers depend on Azure Event Grid.

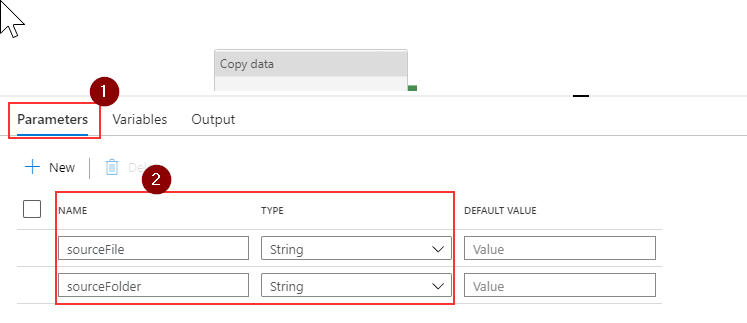

- Create pipeline parameters as shown below and leave their values empty, since you will pass those values from the trigger parameters in the next steps.

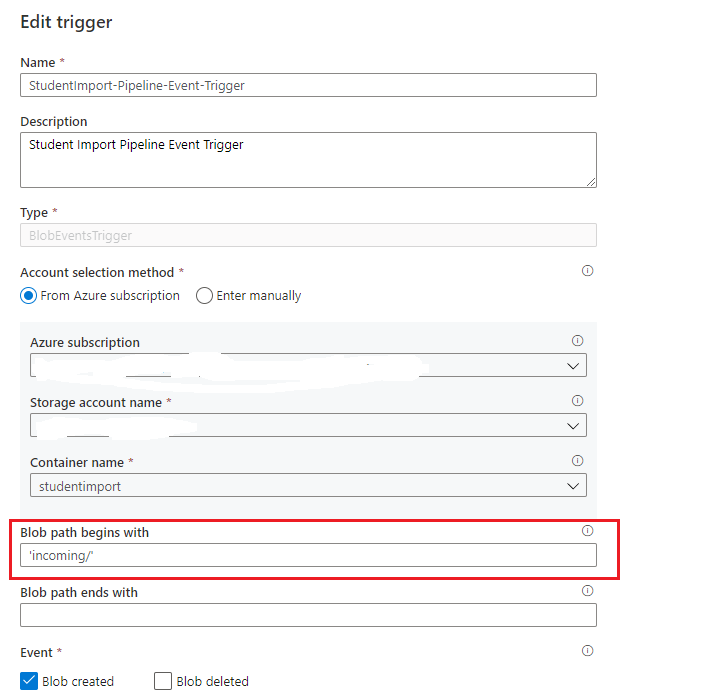

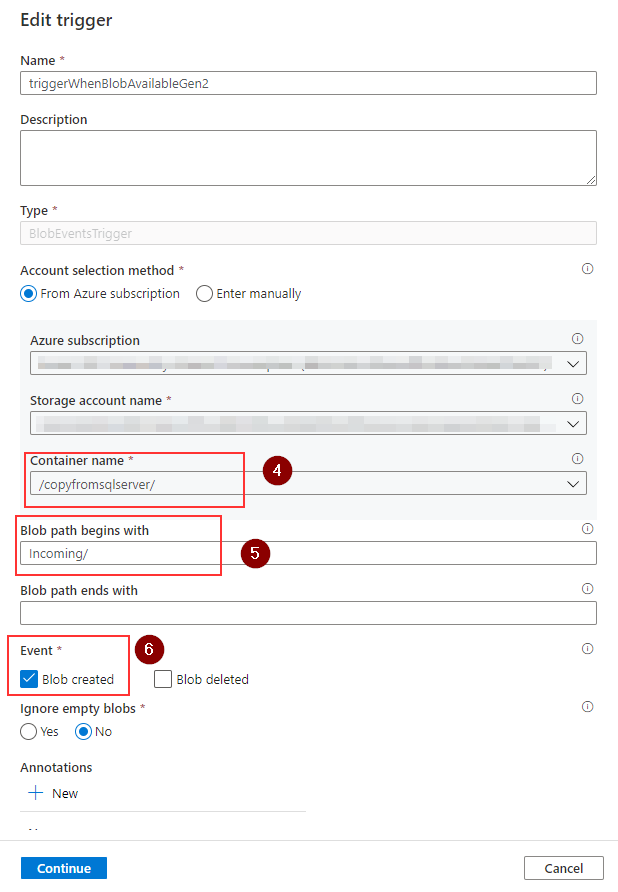

- Then create your event trigger from the pipeline as shown below (here my container name is `copyfromsqlserver`; please update it with your container name).

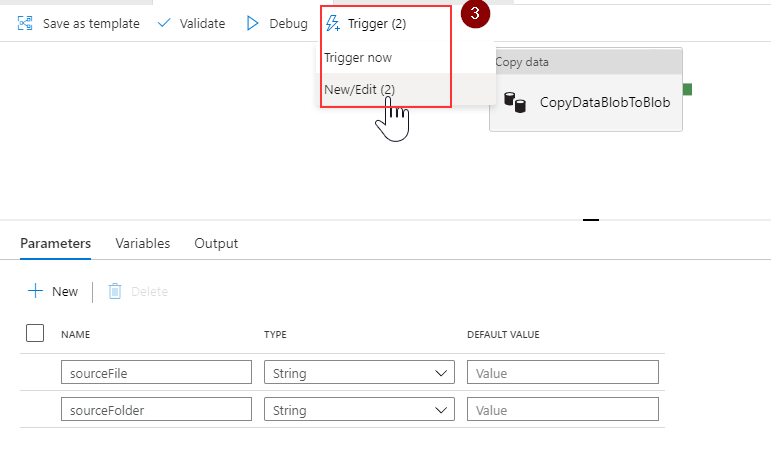

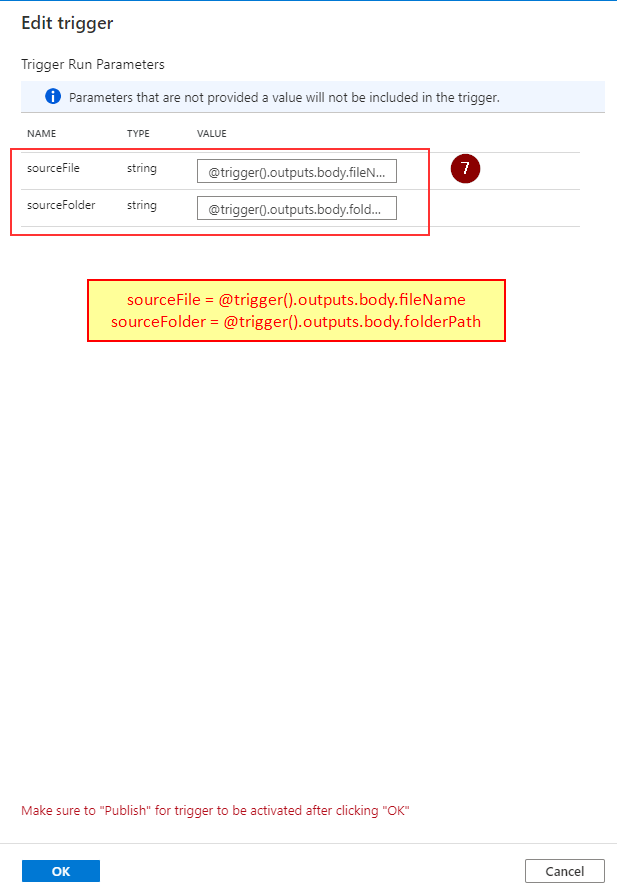

- Then create event trigger parameters that map to the pipeline parameters created earlier.

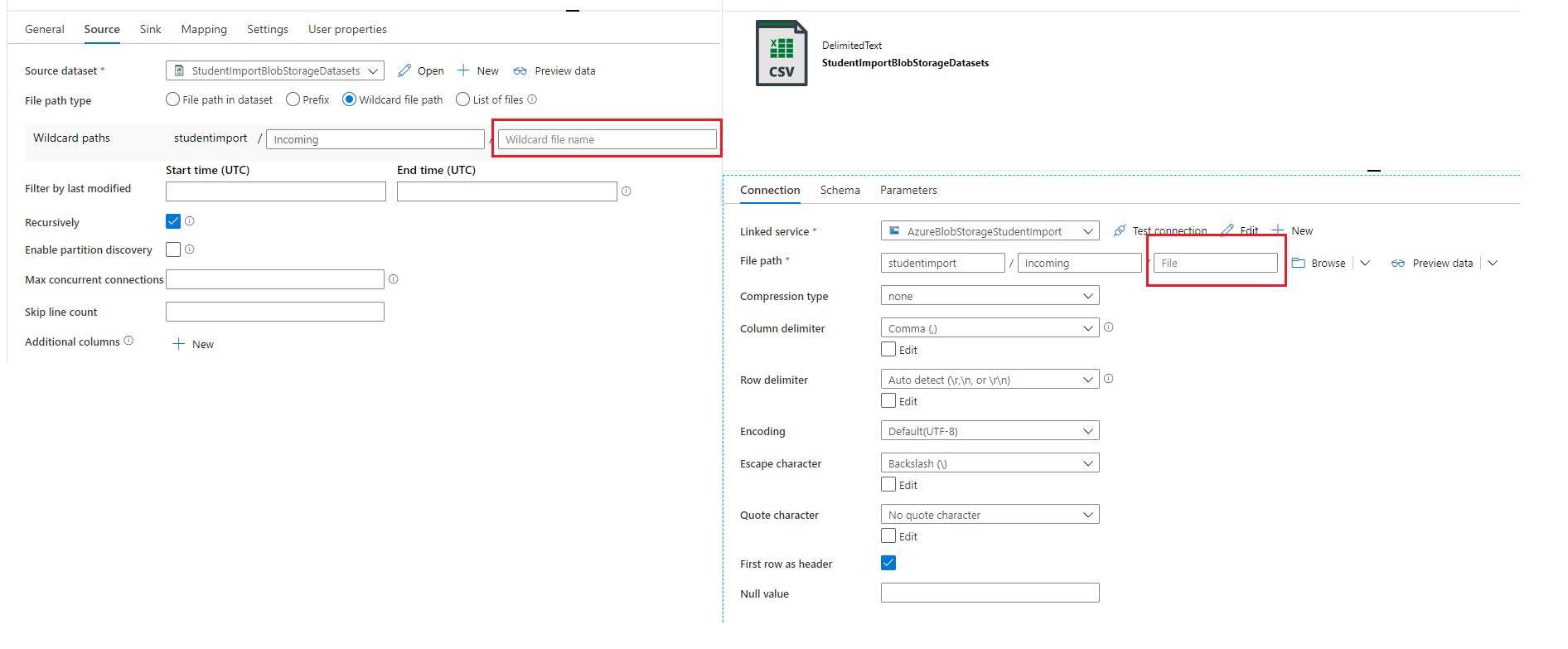

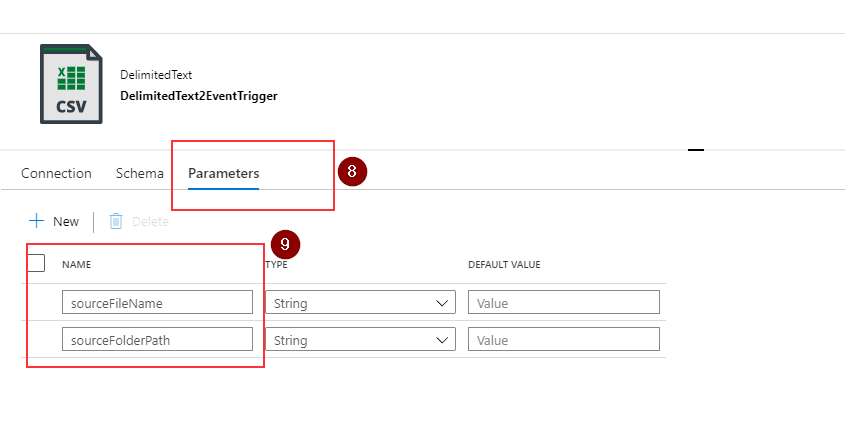
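For reference, the trigger-to-pipeline mapping can be sketched in code. This is a minimal sketch that builds a dictionary shaped like the JSON you would see in the trigger's code view, and it is not taken from the screenshots. The pipeline name, trigger name, parameter names (`sourceFolder`, `sourceFile`), and the storage scope placeholder are all assumptions; `@triggerBody().folderPath` and `@triggerBody().fileName` are the expressions a storage event trigger exposes for the blob that fired it.

```python
import json

# Hypothetical names for illustration only; replace with your own.
PIPELINE_NAME = "CopyOnBlobCreated"
CONTAINER = "copyfromsqlserver"  # container name used in this answer


def build_blob_event_trigger(pipeline_name, container, storage_scope):
    """Return a dict shaped like a Data Factory BlobEventsTrigger definition."""
    return {
        "name": "BlobCreatedTrigger",  # hypothetical trigger name
        "properties": {
            "type": "BlobEventsTrigger",
            "typeProperties": {
                # Fire only for blobs created inside this container.
                "blobPathBeginsWith": f"/{container}/blobs/",
                "ignoreEmptyBlobs": True,
                "scope": storage_scope,
                "events": ["Microsoft.Storage.BlobCreated"],
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline_name,
                        "type": "PipelineReference",
                    },
                    # Trigger parameters mapped onto the (empty) pipeline
                    # parameters; the left-hand names are assumptions.
                    "parameters": {
                        "sourceFolder": "@triggerBody().folderPath",
                        "sourceFile": "@triggerBody().fileName",
                    },
                }
            ],
        },
    }


trigger = build_blob_event_trigger(
    PIPELINE_NAME, CONTAINER, "<storage-account-resource-id>"
)
print(json.dumps(trigger, indent=2))
```

The keys under `parameters` must match the pipeline parameter names exactly, otherwise the values will not reach the pipeline.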

- Then create your copy source dataset and create dataset parameters as shown below.

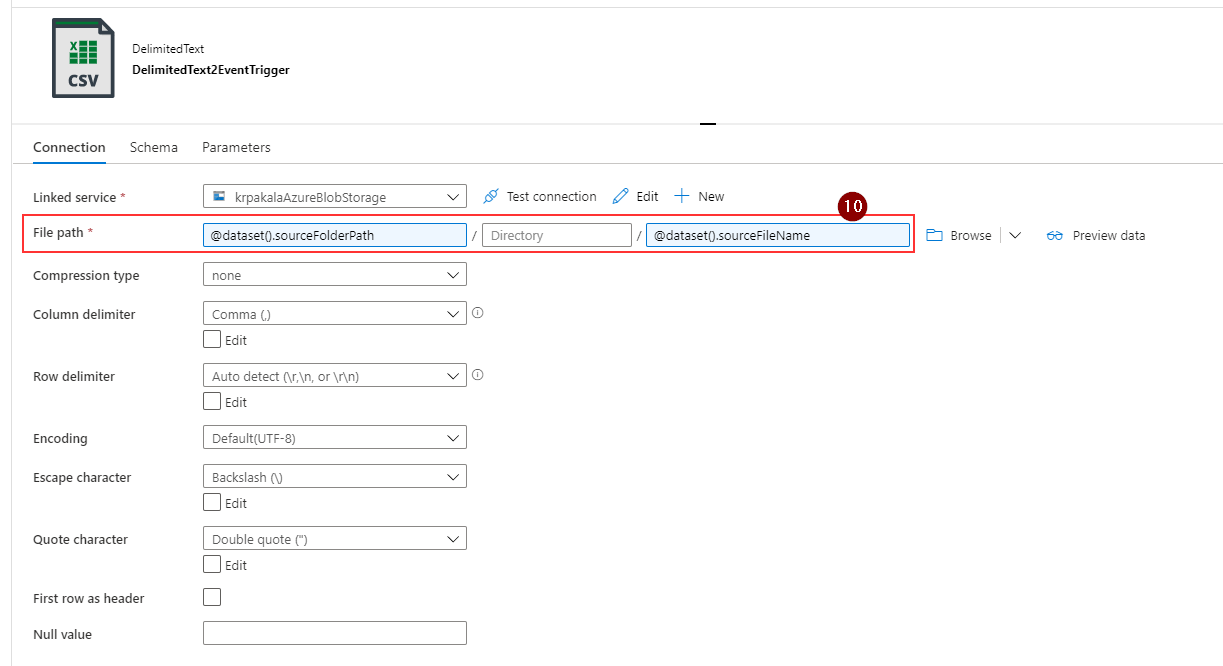

- Then use those dataset parameters inside the dataset connection settings.

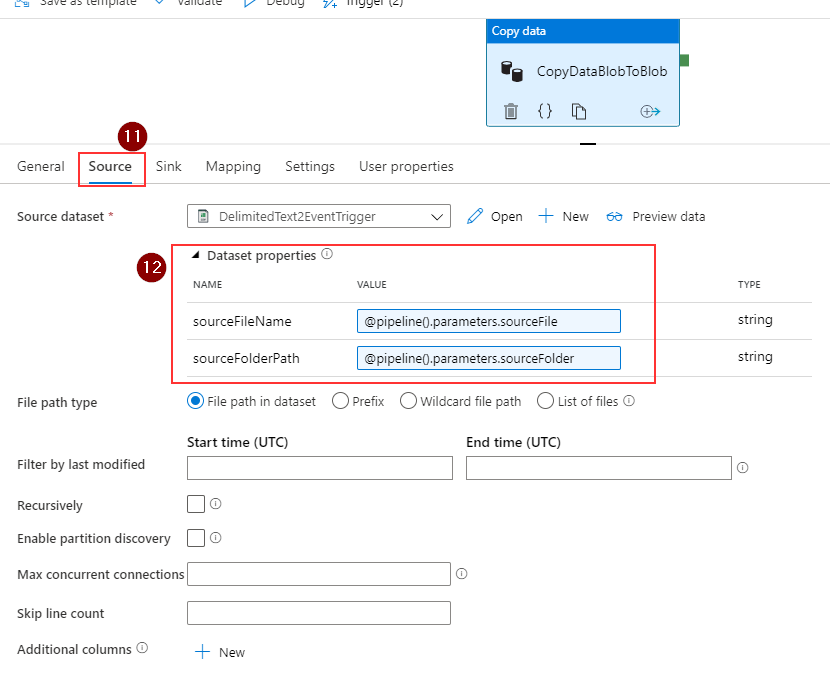

- Then map these dataset parameters to the pipeline parameters under the Copy activity's Source settings.

- Now configure your sink accordingly, publish your pipeline, and drop a blob/file in the source location, which will trigger your pipeline.
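The dataset and Copy activity wiring from the steps above can be sketched as follows. This is only an illustration that builds dictionaries shaped like the JSON in the dataset and pipeline code views. The dataset name, the `DelimitedText` format, and the parameter names (`folderPath`, `fileName`, `sourceFolder`, `sourceFile`) are assumptions, and the linked service and other location fields are omitted for brevity.

```python
import json

# Source dataset with its own parameters, referenced in the connection
# settings through @dataset() expressions. All names are hypothetical.
dataset = {
    "name": "SourceBlobDataset",
    "properties": {
        "type": "DelimitedText",  # assumption: adjust to your file format
        "parameters": {
            "folderPath": {"type": "string"},
            "fileName": {"type": "string"},
        },
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                "folderPath": {
                    "value": "@dataset().folderPath",
                    "type": "Expression",
                },
                "fileName": {
                    "value": "@dataset().fileName",
                    "type": "Expression",
                },
            }
        },
    },
}

# Copy activity source: dataset parameters are fed from the pipeline
# parameters, which in turn receive their values from the trigger.
copy_source_inputs = [
    {
        "referenceName": dataset["name"],
        "type": "DatasetReference",
        "parameters": {
            "folderPath": {
                "value": "@pipeline().parameters.sourceFolder",
                "type": "Expression",
            },
            "fileName": {
                "value": "@pipeline().parameters.sourceFile",
                "type": "Expression",
            },
        },
    }
]

print(json.dumps({"dataset": dataset, "inputs": copy_source_inputs}, indent=2))
```

After the first triggered run, it is worth checking the run's input values to confirm how the folder path from the trigger maps onto the container and directory fields of your dataset.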

Hope this helps. If you have any further queries, do let me know.

Thank you

----------

Please consider clicking "Accept Answer" and "Upvote" on the post that helps you, as it can be beneficial to other community members.