@Steven Pretswell I have reproduced and found a solution.

First I created a csv file with the numbers 1...40, each number on its own line. This I use as source data. I will split these 40 numbers into 4 sets of 10.

What you described is somewhat like partitioning. In the Data Flow source, I set the partitioning to round-robin, so the partitions would be evenly sized. The contents of each are not sequential. While my source data is the range of numbers 1...40, contiguous (no holes) and ordered, I have no idea what your id's are like. This is why I chose round-robin.

Step 1

Next, to make the partitions visible, and easier to work with, I added a derived column whose contents are partitionId(column_1).

Step 2 (optional)

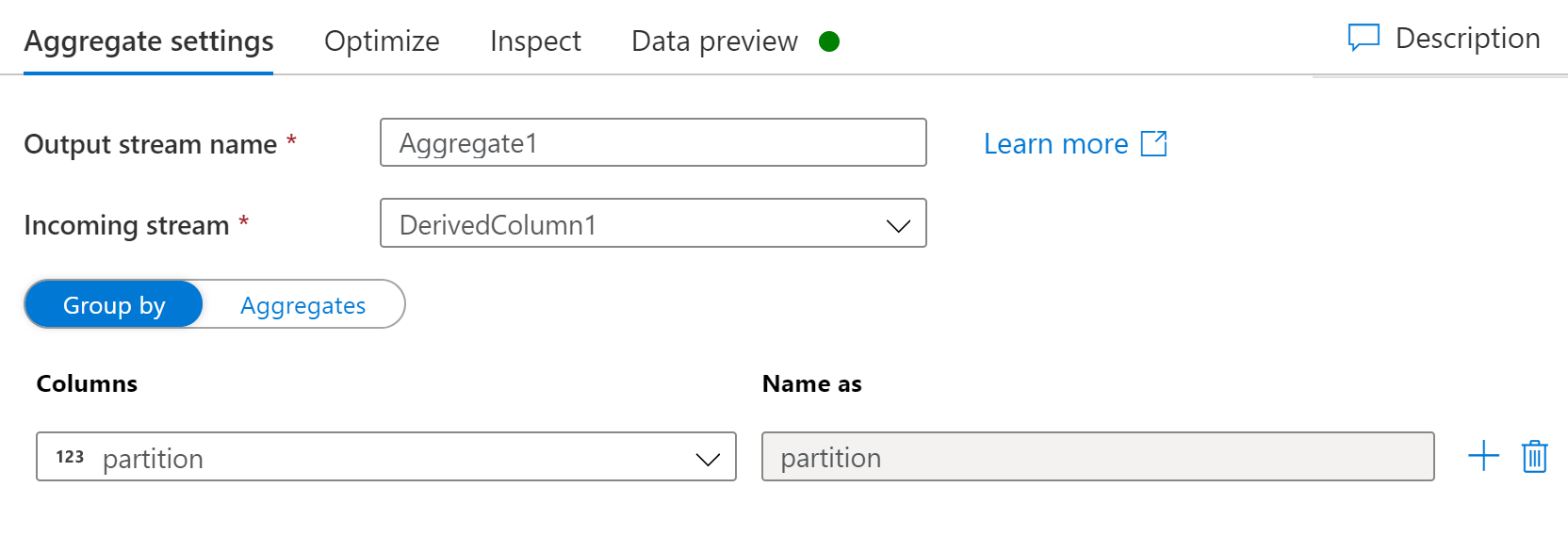

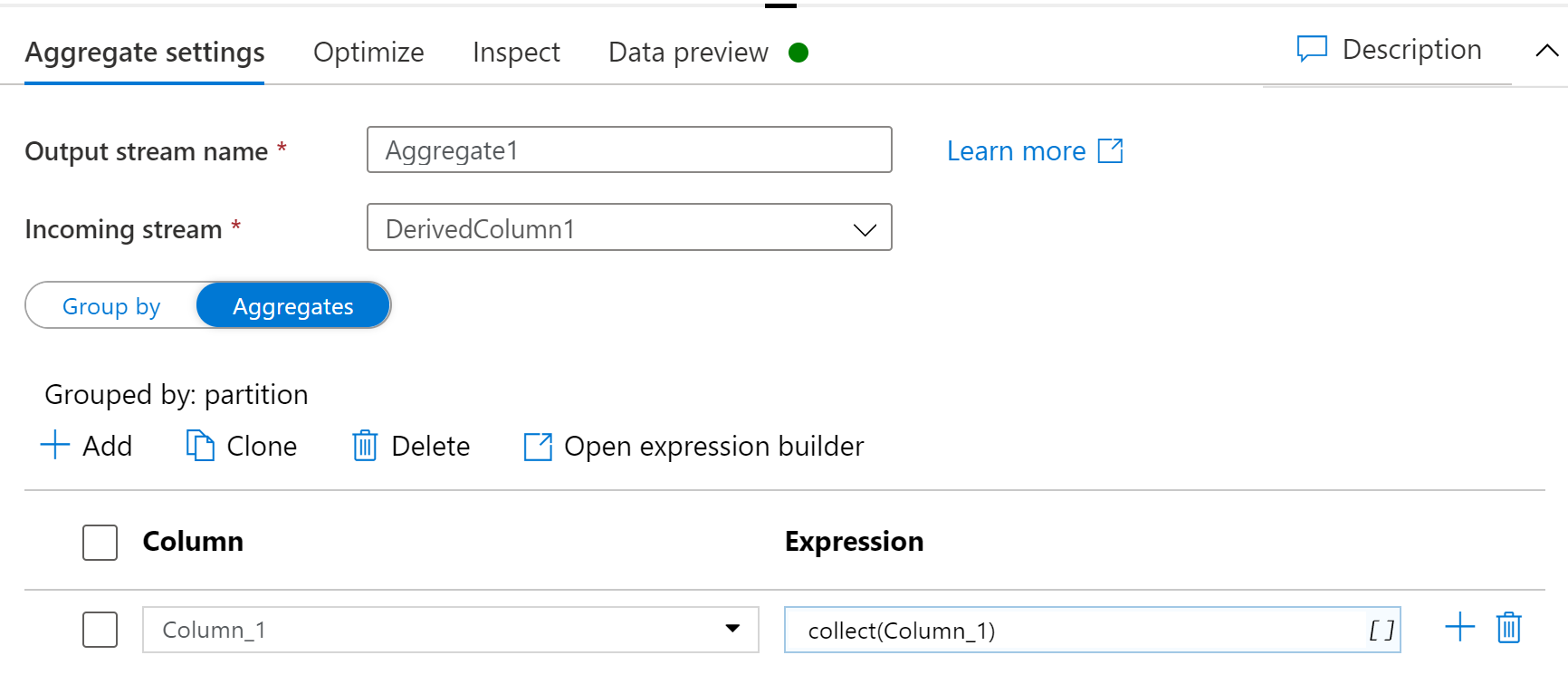

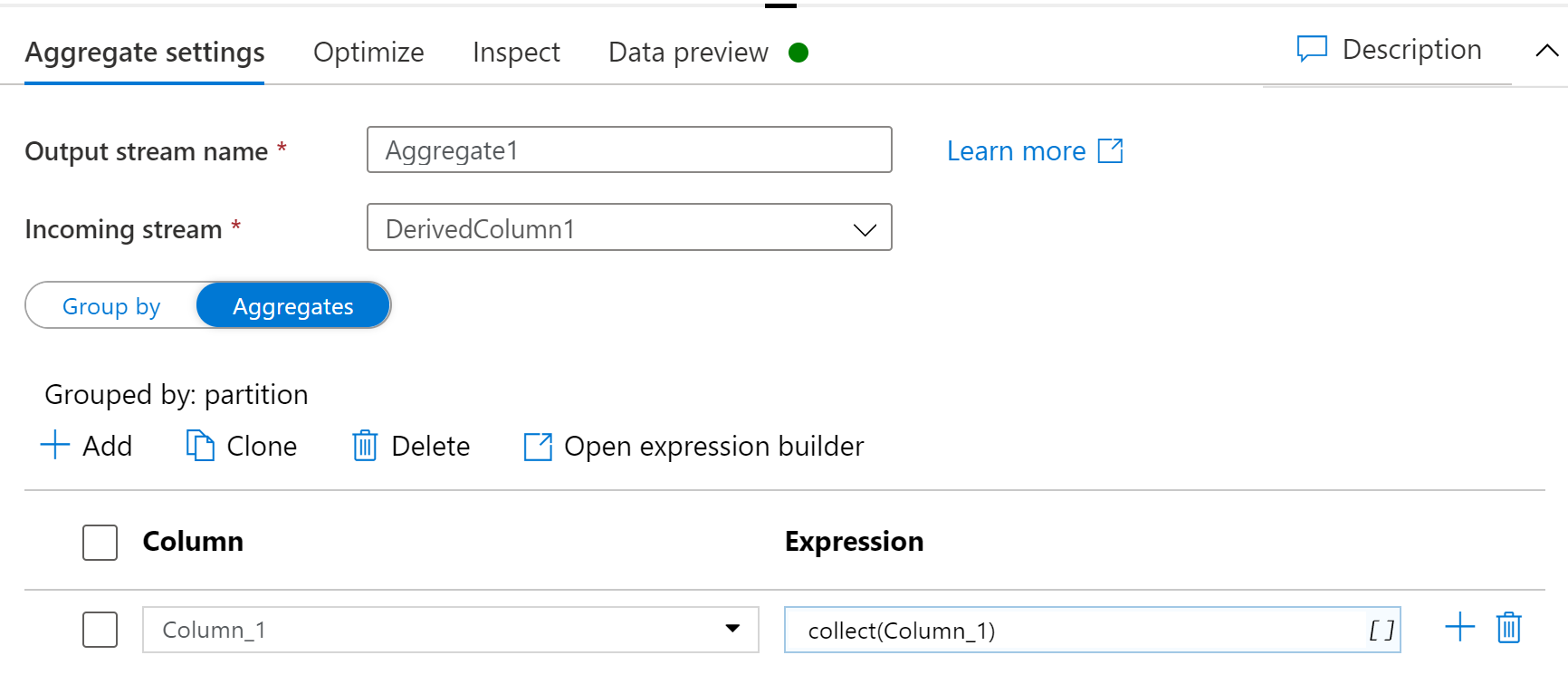

Now I want to group the rows by the partition and do collect like you suggested, but only on the contents of the group, keeping the groups separate. This is accomplished by an aggregation transformation.

Step 3 (important)

Group by: column partition, name as partition

Aggregate: column_1, collect(column_1)

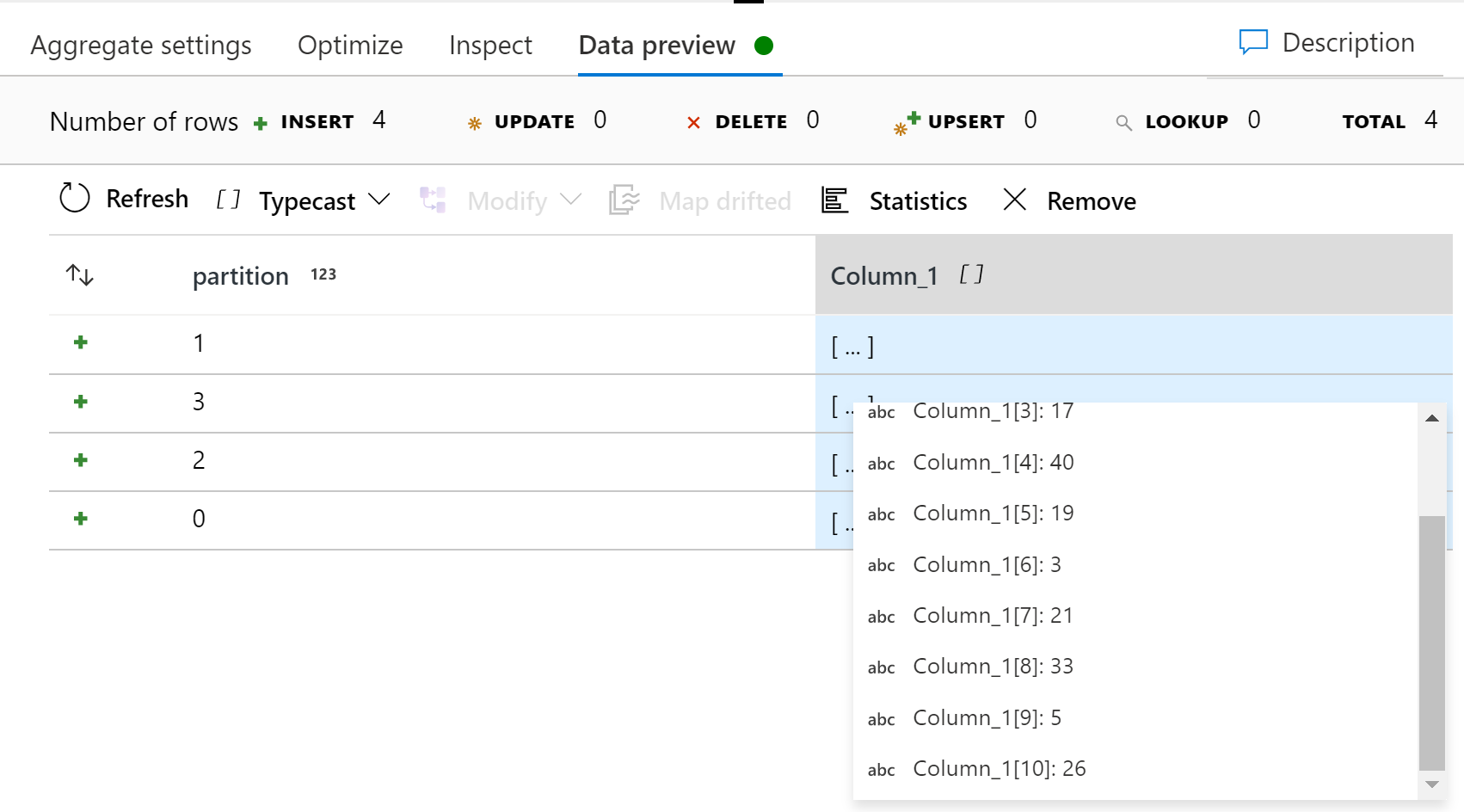

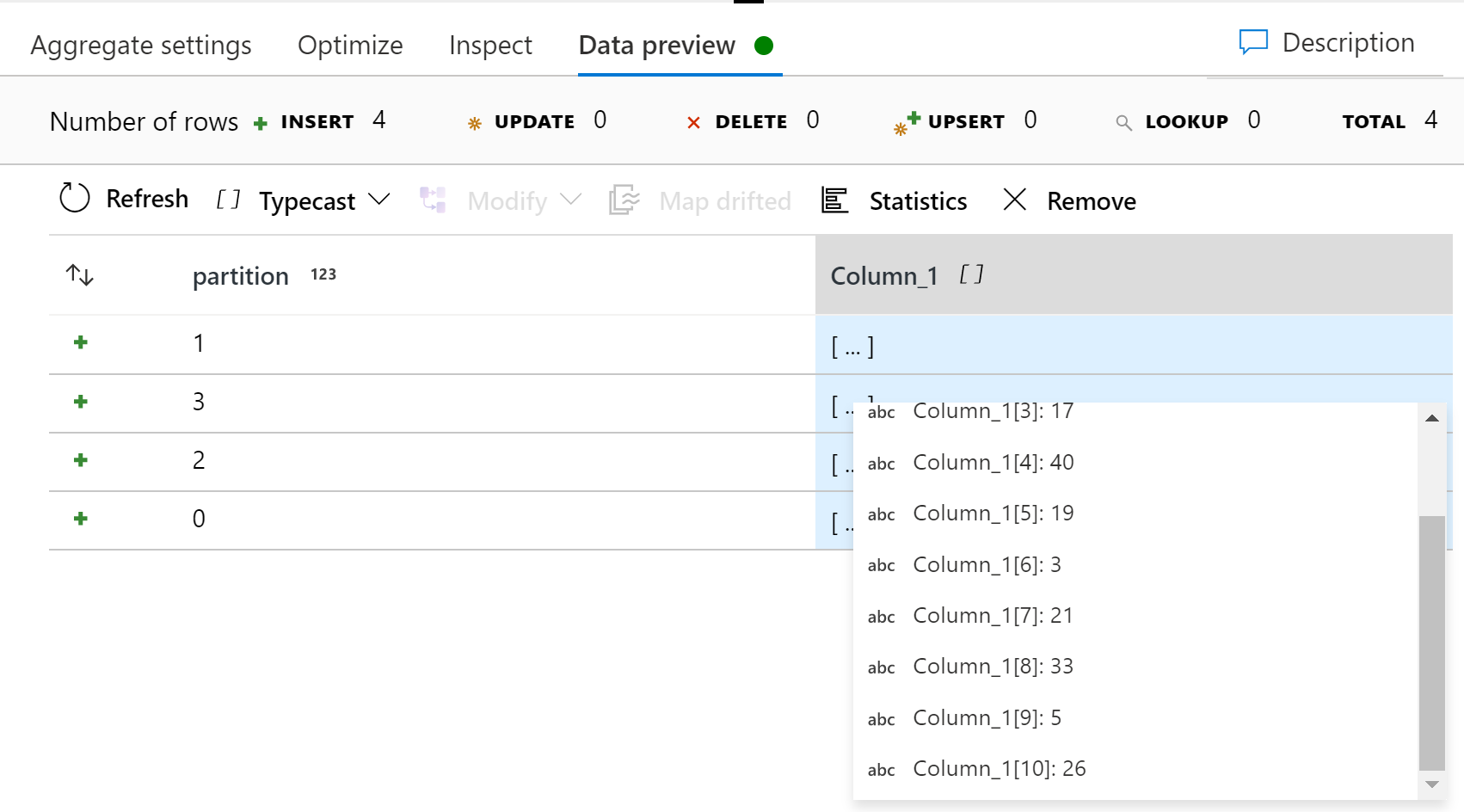

Now I preview the output of the aggregation, I see 4 rows, each a different partitionId. There is also an array in each. Peeking into the array I see 10 entries.

This is similar to the state you wish to achieve. Now to clean up, either use a select to discard the partition id, or just exclude it in the sink. Also, now that I know this works, I could clean up some more. Since partitionId was generated by a function, I can removed the derived column, and in the aggregate group by clause, use partitionId() instead of a column.