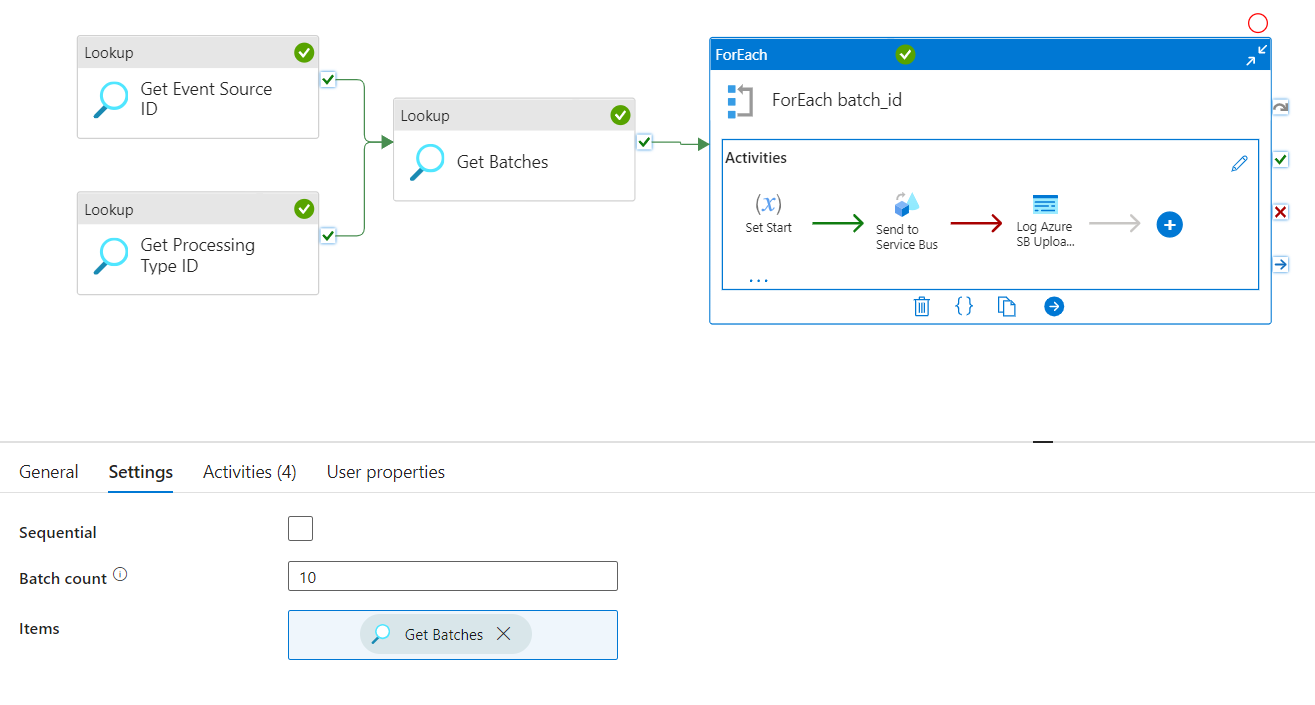

I am loading data to a Service Bus queue, using external calls in a data flow. This data flow is called by a pipeline with the call to the data flow in a loop. The loop value items are extracted by getting a distinct list of batch_id's from a column in my SQL table. The table is split into batches of around 1000.

I have set the for-each loop to have a batch count of 10 as seen in the picture above, the items are: defined as

@activity('Get Batches').output.value

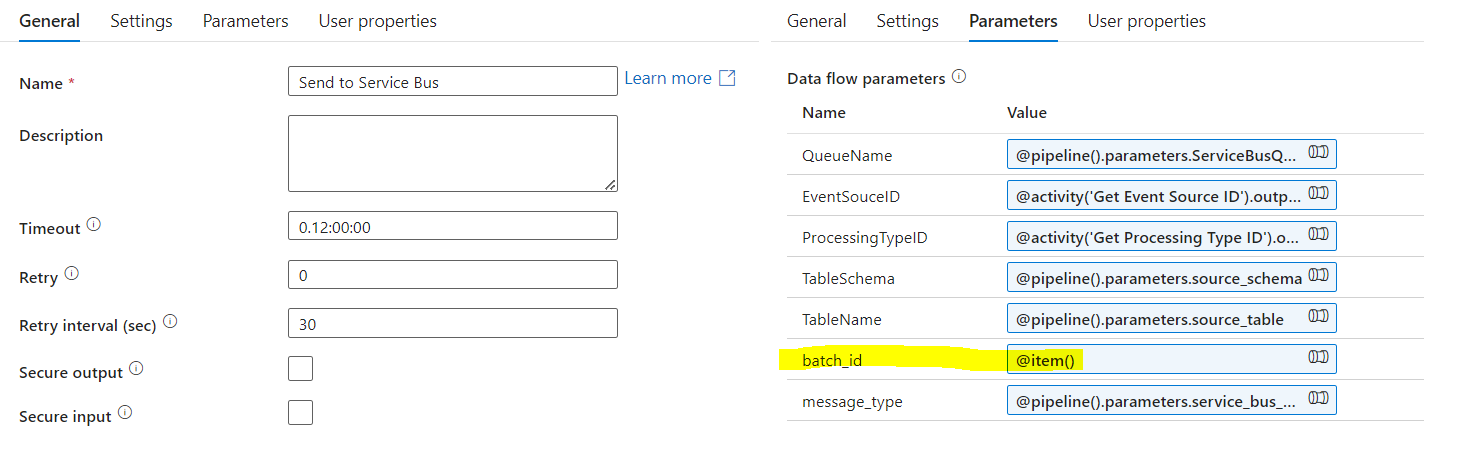

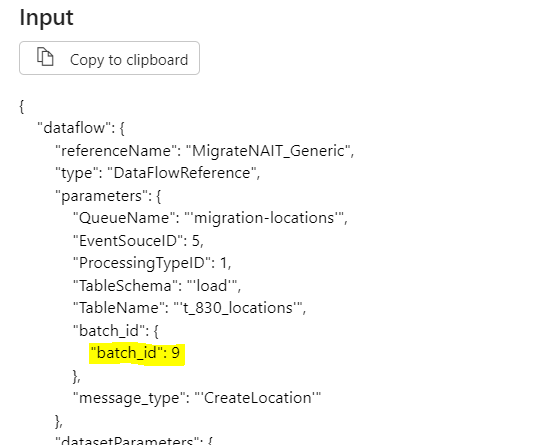

In theory this means that 10 executions of the batches can happen at once, the data flow picks up the current batch passed to it and processes the rows for that batchID_._ This is confirmed that the data flow is called this way by looking at an execution of the pipeline, where the item value is passed as the batchID:

And during execution the Send to Service Bus 1st execution Input looks like:

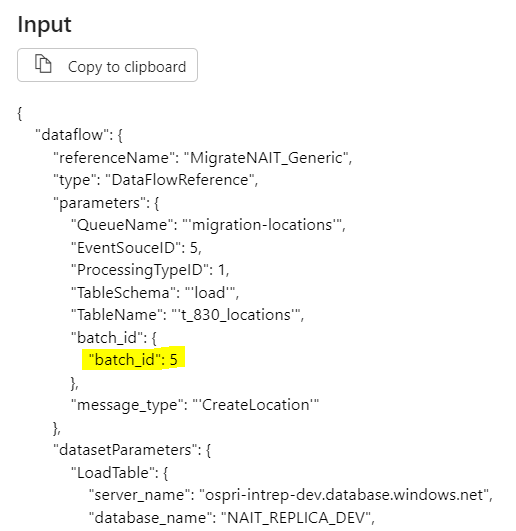

And the next Send to Service Bus execution input looks like:

The first 10 calls are for batchIds in the set. All looking good so far.

On the Data Flow itself, the Source uses this batchID to build a SQL statement, to extract the data:

concat("SELECT JSONPayload, source_lineage, lineage_id, batch_id, JSONChecksum, EventId, load_action,time_created,time_modified FROM ",$TableSchema,".",$TableName," WHERE load_action = 'insert' AND batch_id = ",toString($batch_id))

Note the batchID used. Please also note I am not stating batchId with an underscore in this description as it makes words between underlines italic.

However, when running the pipeline, even though different batchIds are passed in, the data flow executes using the exact same batch number. This means the data flow is not respecting the variables passed into it, when multiple executions happen using the foreach loop in the pipeline. This doesn't look possible, but I have confirmed this is exactly what it has done.

Is the ADF team aware of this bug? Is there a fix?