Arya Parashar - Thanks for the question and for using the MS Q&A platform.

**Problem:**

Databricks clusters use one public IP address per node (including the driver node). Azure subscriptions have public IP address limits per region. Thus, cluster creation and scale-up operations may fail if they would cause the number of public IP addresses allocated to that subscription in that region to exceed the limit. This limit also includes public IP addresses allocated for non-Databricks usage, such as custom user-defined VMs.
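To make the arithmetic concrete, here is a minimal sketch of the check described above. It is not a Databricks or Azure API; the function names and parameters (`ips_needed`, `would_exceed_limit`, `ips_in_use`, `regional_limit`) are hypothetical and only illustrate the "one public IP per node, including the driver" rule.

```python
def ips_needed(worker_nodes: int) -> int:
    """Public IPs a cluster consumes: one per worker plus one for the driver."""
    return worker_nodes + 1


def would_exceed_limit(ips_in_use: int, worker_nodes: int, regional_limit: int) -> bool:
    """Return True if launching the cluster would push the subscription past
    its regional public IP limit.

    ips_in_use is assumed to count every public IP in the region, including
    those held by non-Databricks resources such as custom VMs.
    """
    return ips_in_use + ips_needed(worker_nodes) > regional_limit


# 55 IPs in use, limit of 60: a 4-worker cluster (5 IPs) fits exactly,
# while a 5-worker cluster (6 IPs) would not.
print(would_exceed_limit(55, 4, 60))
print(would_exceed_limit(55, 5, 60))
```

You would need to look up the real values of the in-use count and the limit for your subscription and region; the sketch only shows how they combine.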

In general, clusters only consume public IP addresses while they are active. However, PublicIPCountLimitReached errors may continue to occur for a short period of time even after other clusters are terminated. This is because Databricks temporarily caches Azure resources when a cluster is terminated. Resource caching is by design, since it significantly reduces the latency of cluster startup and autoscaling in many common scenarios.

**Solution:**

If your subscription has already reached its public IP address limit for a given region, do one of the following:

- Create new clusters in a different Databricks workspace. The other workspace must be located in a region in which you have not reached your subscription's public IP address limit.

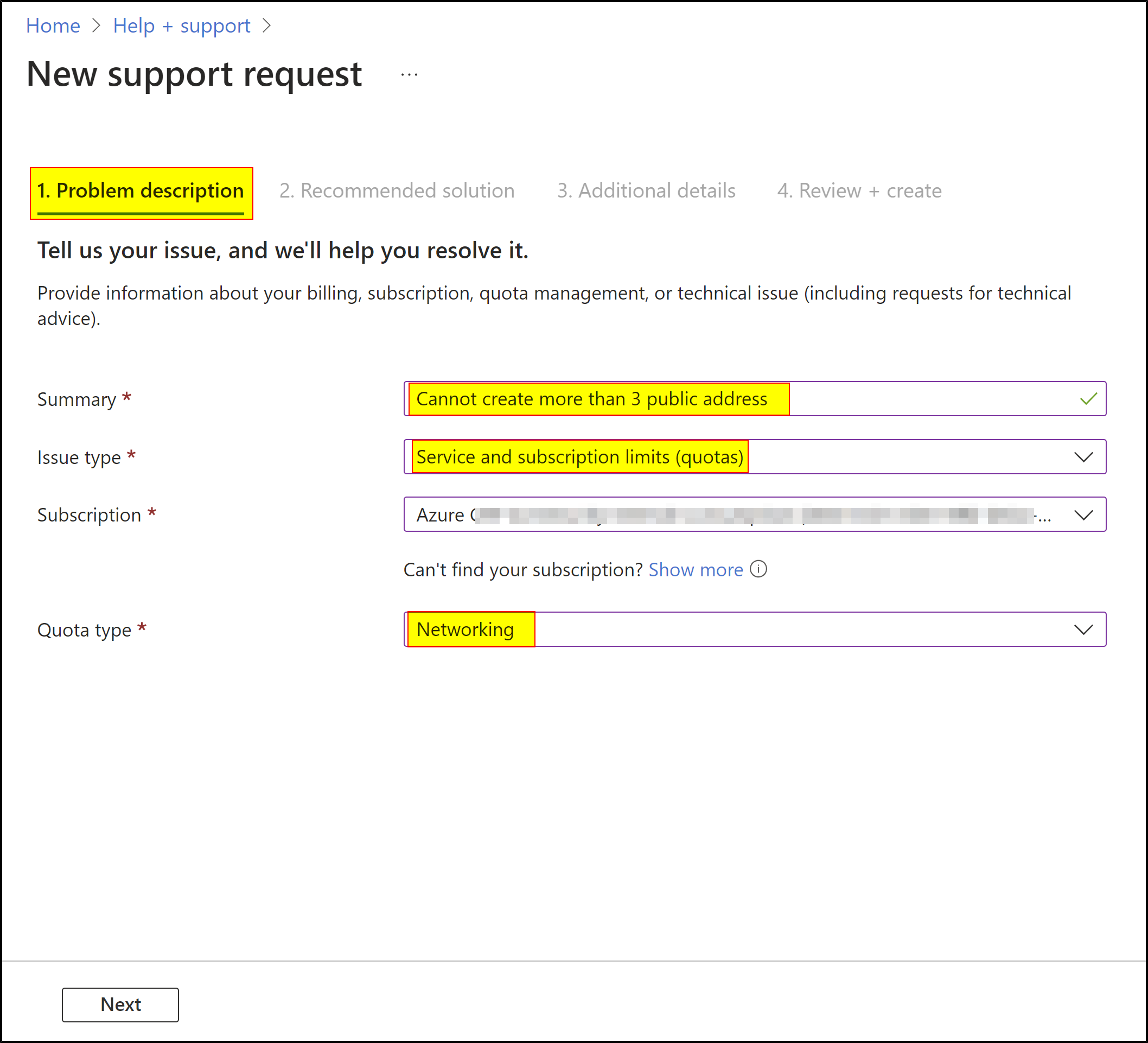

- Request an increase to your public IP address limit. Choose Quota as the Issue Type and Networking as the Quota Type.

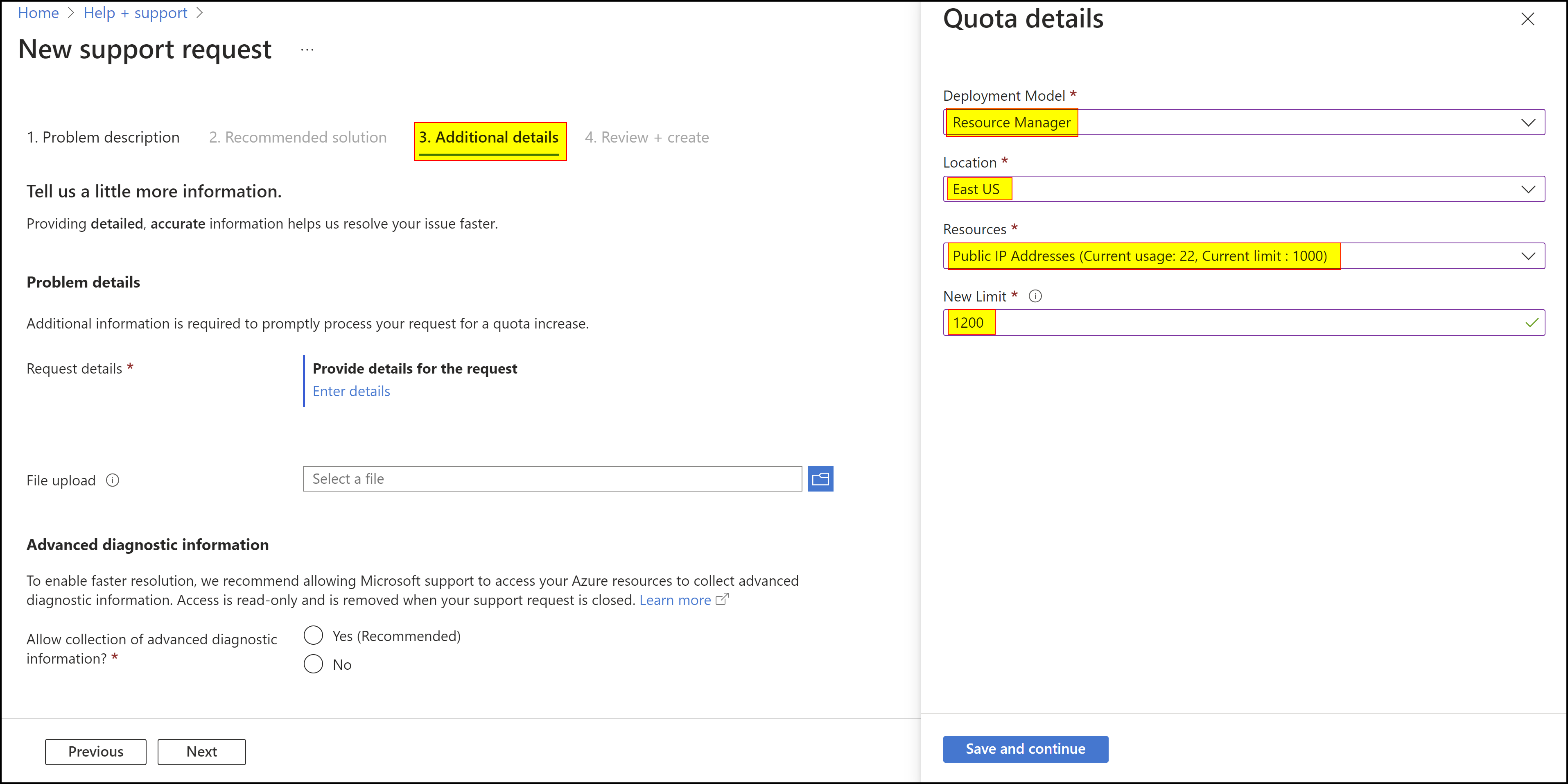

- In Details, request a Public IP Address quota increase. For example, if your limit is currently 60 and you want to create a 100-node cluster, request a limit increase to 160 (the existing 60 plus 100 for the new cluster).
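As a rough guide for sizing the request, the example above can be sketched as follows. This is only an illustration under stated assumptions: `quota_to_request` and its parameters are hypothetical names, and when the in-use count is unknown it assumes the worst case that the current limit is already fully consumed, which is what the 60 + 100 = 160 example implies.

```python
from typing import Optional


def quota_to_request(
    current_limit: int,
    cluster_nodes: int,
    ips_in_use: Optional[int] = None,
) -> int:
    """Suggest a public IP limit large enough for a new cluster.

    cluster_nodes is the total node count, driver included.
    If ips_in_use is not given, assume the current limit is fully used.
    """
    if ips_in_use is None:
        ips_in_use = current_limit
    # Never suggest a value below the limit you already have.
    return max(current_limit, ips_in_use + cluster_nodes)


# The example from the answer: limit of 60, 100-node cluster.
print(quota_to_request(60, 100))  # 160
```

If you know how many addresses are actually in use in the region, pass that as `ips_in_use` to get a tighter number; for instance, with only 20 in use the same cluster would need a limit of 120.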

For more details, refer to Issue: Cloud provider launch failure while setting up the cluster (PublicIPCountLimitReached).

Hope this helps. Do let us know if you have any further queries.