Hello,

I am seeking a way to transform a Dynamic SQL Statement that generates Dynamic Pivots, and write the same functionality in ADF.

The scenario is : Pivoting Accounting Data

There are many schedules with up to 7 different accounts for each schedule. I want to pivot the details from the GL details into a Generic table.

The generic table will look like :

CREATE TABLE GLSchedulesPivots (

ADFInsertDate datetime,

GLDetailBeginDate Date,

GLDetailEndDate Date,

CompanyID int,

ScheduleNumber int,

Control NVARCHAR(30),

Account1 money,

Account2 money,

Account3 money,

Account4 money,

Account5 money,

Account6 money,

Account7 money,

Total money -- Sum of the above 6 Accounts

)

Some example schedules might look like the following

| ScheduleNumber|CompanyID|Account1|Account2| Account3|Account4|Account5|Account6|Account7|

| -------- | -------- | -------- | -------- | -------- | -------- | -------- | -------- | -------- |

|50|1|22701|22702|22703|22704|22705|22706|22707|

|21|1|44801|44802|44803|44804|44805|44806|44807|

(I hope the MD for the table above comes through after posting it was showing the MD code rather than the table...)

I've written some Dynamic SQL code that would find all the different Schedules and create a Dymaic SQL statement to Execute with the EXECUTE(@sqlStatement) command:

something similar to :

SET @SqlExpression = '

select

companyid,

control,

'+@pivotAggCol +',

'+@pivotAggColCOALESCE +' as Total

FROM

(

Select

GL.companyid,

GL.control ,

GL.accountnumber,

GL.postingamount

FROM

GLJEDetail as GL

WHERE

GL.companyID = '+@companyid+'

and gl.accountingdate BETWEEN '''+@ScheduleStartDate+''' AND '''+@ScheduleEndDate+'''

) as SourceTable

PIVOT

(

SUM(postingamount)

FOR accountnumber in ('+@pivotAggCol+')

) as PivotTable'

So in my Stored Proc where I have my POC running, the above code sits in a Cursor ( I know, I know.. but its really best fit for this case...anyways ) and finds the Accounts for each of the different Schedules.

We see some parameters in the code above as

DECLARE @pivotAggCol nvarchar(200)

DECLARE @pivotAggColCOALESCE (nvarchar(200)

The aforementioned cursor could prepare the variables to be (in a more dynamic manner than in this example)

--Simplified for the example

SET @pivotAggCol = '22701,22702,22703,22704,22705,22706,22707'

SET @pivotAggColCOALESCE = '22701+22702+22703+22704+22705+22706+22707'

So the resulting Executing SQL Statement would dynamically create the pivot as :

SET @SqlExpression = '

select

companyid,

control,

'+ '22701,22702,22703,22704,22705,22706,22707' +',

'+ '22701+22702+22703+22704+22705+22706+22707' +' as Total

FROM

(

Select

GL.companyid,

GL.control ,

GL.accountnumber,

GL.postingamount

FROM

GLJEDetail as GL

WHERE

GL.companyID = '+ 1 +'

and gl.accountingdate BETWEEN '''+2000-01-01+''' AND '''+2023-01-01+'''

) as SourceTable

PIVOT

(

SUM(postingamount)

FOR accountnumber in ('+@pivotAggCol+')

) as PivotTable'

Then the statement above would be a executed with the EXECUTE statement :

EXECUTE(@SqlExpression)

So as the schedules change, so will the dynamic query that generates the pivot. Finally after the data is generated, it deposits the data into the 'generic' table such that all pivots can be stored in one table for easy retrieval. Where the values for Account1, are the values from column '22701', and the values for Account2 are the values from the column '22702' and so on ....

Likely this is stuff you have all seen before =)

NOW My question after that enormous set up :

How can I translate this dynamic query in to ADF, I want to use the ADF Test ADF to see if its more efficient to use the pipelines rather than using the SQLServer to the work.

I can imagine a pipeline that prepares the two dynamic parameters above @pivotAggCol and @pivotAggColCOALESCE but how do I get those into the ADF Pivot ?

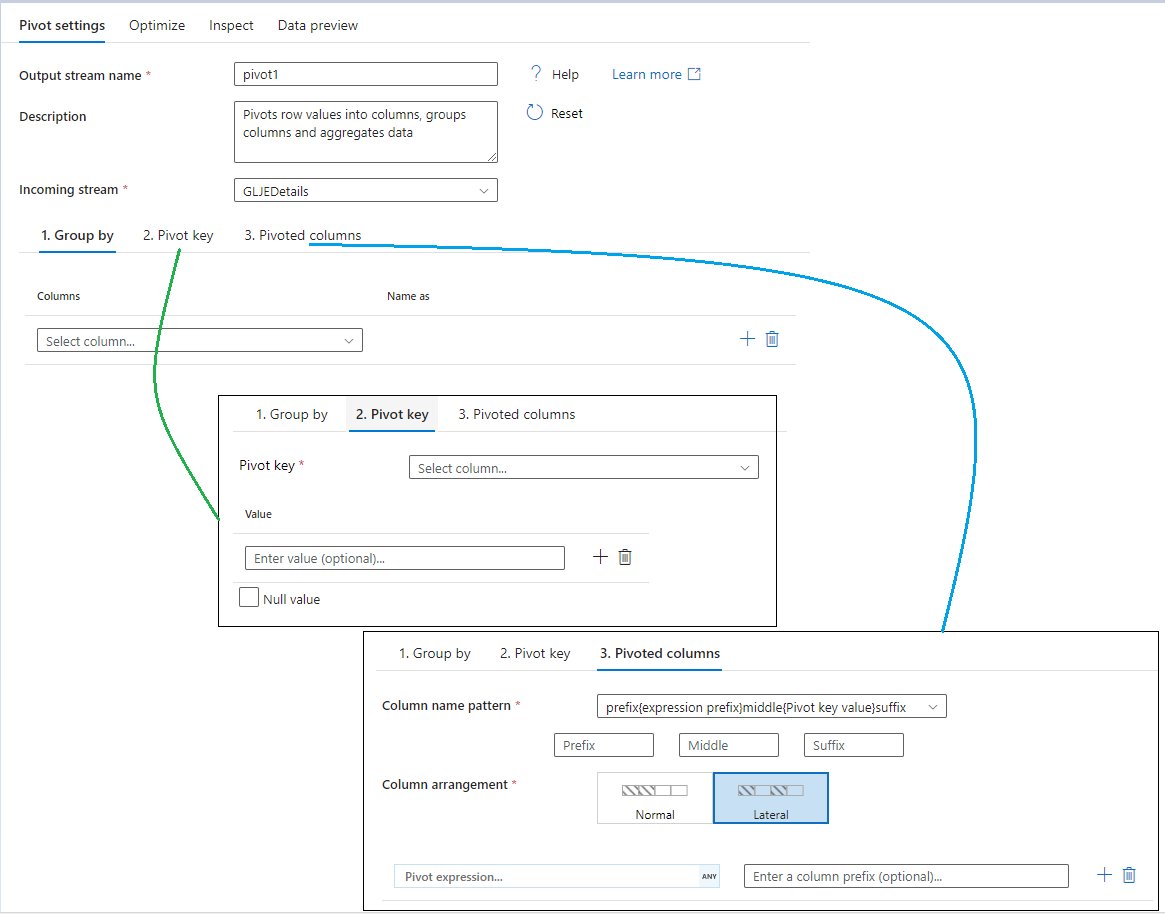

The settings for the pivot with in ADF :

I should also mention, that he dataset that I'll be attaching I cannot grab the projection from, as I feed the DataTable name in the pipeline dynamically. this way we can reuse the database connection for other things.

I could connect directly to the DataTable to get the projection but rather not if at all possible.

(Also looking at doing the same POC in Synapse, so if you have any good solutions in that area, I'm also wanting help there as well.)

Lastly Thank you all for your time.

And.... GO!