@Konstantinos Passadis,

I have a similar issue while deploying an ML model to a managed online endpoint. I'm essentially replicating this lab: https://microsoftlearning.github.io/mslearn-aml-cli/Instructions/Labs/05-deploy-managed-endpoint.html

Before deploying the model to the managed online endpoint, I completed this lab first: https://microsoftlearning.github.io/mslearn-aml-cli/Instructions/Labs/01-create-workspace.html

Please note that I am using `northeurope` as my region, not `eastus` as in the lab.

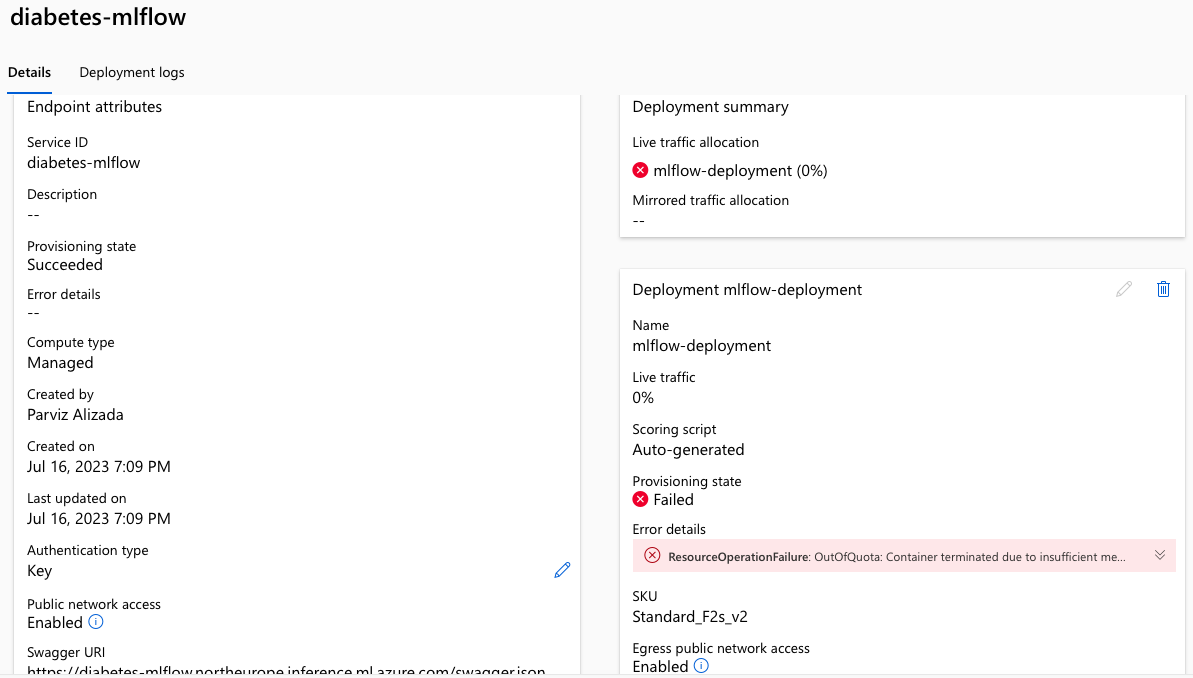

I get this error message:

**ResourceOperationFailure**: OutOfQuota: Container terminated due to insufficient memory. Please see troubleshooting guide, available here: [https://aka.ms/oe-tsg#error-outofquota](https://aka.ms/oe-tsg#error-outofquota)

Here's the deployment log:

```
Instance status:
SystemSetup: Succeeded
UserContainerImagePull: Succeeded
ModelDownload: Succeeded
UserContainerStart: InProgress

Container events:
Kind: Pod, Name: Pulling, Type: Normal, Time: 2023-07-16T18:43:33.938733Z, Message: Start pulling container image
Kind: Pod, Name: Downloading, Type: Normal, Time: 2023-07-16T18:43:34.624501Z, Message: Start downloading models
Kind: Pod, Name: Pulled, Type: Normal, Time: 2023-07-16T18:44:01.354717Z, Message: Container image is pulled successfully
Kind: Pod, Name: Downloaded, Type: Normal, Time: 2023-07-16T18:44:01.354717Z, Message: Models are downloaded successfully
Kind: Pod, Name: Created, Type: Normal, Time: 2023-07-16T18:44:01.442696Z, Message: Created container inference-server
Kind: Pod, Name: Started, Type: Normal, Time: 2023-07-16T18:44:01.522564Z, Message: Started container inference-server
Kind: Pod, Name: ReadinessProbeFailed, Type: Warning, Time: 2023-07-16T18:44:14.834845Z, Message: Readiness probe failed: HTTP probe failed with statuscode: 502
Kind: Pod, Name: LivenessProbeFailed, Type: Warning, Time: 2023-07-16T18:44:17.472304Z, Message: Liveness probe failed: HTTP probe failed with statuscode: 502
Kind: Pod, Name: ReadinessProbeFailed, Type: Warning, Time: 2023-07-16T18:44:24.829274Z, Message: Readiness probe failed: HTTP probe failed with statuscode: 502
Kind: Pod, Name: LivenessProbeFailed, Type: Warning, Time: 2023-07-16T18:44:27.472367Z, Message: Liveness probe failed: HTTP probe failed with statuscode: 502
Kind: Pod, Name: ReadinessProbeFailed, Type: Warning, Time: 2023-07-16T18:44:34.829264Z, Message: Readiness probe failed: HTTP probe failed with statuscode: 502
Kind: Pod, Name: LivenessProbeFailed, Type: Warning, Time: 2023-07-16T18:44:37.47205Z, Message: Liveness probe failed: HTTP probe failed with statuscode: 502
Kind: Pod, Name: ReadinessProbeFailed, Type: Warning, Time: 2023-07-16T18:44:44.829168Z, Message: Readiness probe failed: HTTP probe failed with statuscode: 502
Kind: Pod, Name: LivenessProbeFailed, Type: Warning, Time: 2023-07-16T18:44:47.472234Z, Message: Liveness probe failed: HTTP probe failed with statuscode: 502
Kind: Pod, Name: ReadinessProbeFailed, Type: Warning, Time: 2023-07-16T18:44:54.829435Z, Message: Readiness probe failed: HTTP probe failed with statuscode: 502
Kind: Pod, Name: LivenessProbeFailed, Type: Warning, Time: 2023-07-16T18:44:57.472191Z, Message: Liveness probe failed: HTTP probe failed with statuscode: 502
Kind: Pod, Name: ReadinessProbeFailed, Type: Warning, Time: 2023-07-16T18:45:04.829333Z, Message: Readiness probe failed: HTTP probe failed with statuscode: 502
Kind: Pod, Name: LivenessProbeFailed, Type: Warning, Time: 2023-07-16T18:45:07.472126Z, Message: Liveness probe failed: HTTP probe failed with statuscode: 502

Container logs:
2023-07-16T18:44:01,525261357+00:00 - rsyslog/run
2023-07-16T18:44:01,532164487+00:00 - nginx/run
2023-07-16T18:44:01,534186226+00:00 - gunicorn/run
2023-07-16T18:44:01,536345167+00:00 | gunicorn/run |
nginx: [warn] the "user" directive makes sense only if the master process runs with super-user privileges, ignored in /etc/nginx/nginx.conf:1
2023-07-16T18:44:01,538566509+00:00 | gunicorn/run | ###############################################
2023-07-16T18:44:01,541214659+00:00 | gunicorn/run | AzureML Container Runtime Information
2023-07-16T18:44:01,544593523+00:00 | gunicorn/run | ###############################################
2023-07-16T18:44:01,546049150+00:00 | gunicorn/run |
2023-07-16T18:44:01,548010387+00:00 | gunicorn/run |
2023-07-16T18:44:01,551370851+00:00 | gunicorn/run | AzureML image information: mlflow-ubuntu20.04-py38-cpu-inference:20230703.v1
2023-07-16T18:44:01,553508791+00:00 | gunicorn/run |
2023-07-16T18:44:01,555277525+00:00 | gunicorn/run |
2023-07-16T18:44:01,557435166+00:00 | gunicorn/run | PATH environment variable: /opt/miniconda/envs/amlenv/bin:/opt/miniconda/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
2023-07-16T18:44:01,559065596+00:00 | gunicorn/run | PYTHONPATH environment variable:
2023-07-16T18:44:01,560937932+00:00 | gunicorn/run |
2023-07-16T18:44:02,580313724+00:00 | gunicorn/run | CONDAPATH environment variable: /opt/miniconda
conda environments:
base /opt/miniconda
amlenv /opt/miniconda/envs/amlenv
2023-07-16T18:44:03,174895376+00:00 | gunicorn/run |
2023-07-16T18:44:03,176698311+00:00 | gunicorn/run | Pip Dependencies (before dynamic installation)
azure-core==1.27.1
azure-identity==1.13.0
azureml-inference-server-http==0.8.4
cachetools==5.3.1
certifi==2023.5.7
cffi==1.15.1
charset-normalizer==3.1.0
click==8.1.3
cryptography==41.0.1
Flask==2.2.5
Flask-Cors==3.0.10
google-api-core==2.11.1
google-auth==2.21.0
googleapis-common-protos==1.59.1
gunicorn==20.1.0
idna==3.4
importlib-metadata==6.7.0
inference-schema==1.5.1
itsdangerous==2.1.2
Jinja2==3.1.2
MarkupSafe==2.1.3
msal==1.22.0
msal-extensions==1.0.0
opencensus==0.11.2
opencensus-context==0.1.3
opencensus-ext-azure==1.1.9
portalocker==2.7.0
protobuf==4.23.3
psutil==5.9.5
pyasn1==0.5.0
pyasn1-modules==0.3.0
pycparser==2.21
pydantic==1.10.10
PyJWT==2.7.0
python-dateutil==2.8.2
pytz==2023.3
requests==2.31.0
rsa==4.9
six==1.16.0
typing_extensions==4.7.1
urllib3==1.26.16
Werkzeug==2.3.6
wrapt==1.12.1
zipp==3.15.0
2023-07-16T18:44:04,316759286+00:00 | gunicorn/run |
2023-07-16T18:44:04,318542920+00:00 | gunicorn/run | Entry script directory: /var/mlflow_resources/.
2023-07-16T18:44:04,320315654+00:00 | gunicorn/run |
2023-07-16T18:44:04,322045186+00:00 | gunicorn/run | ###############################################
2023-07-16T18:44:04,323794620+00:00 | gunicorn/run | Dynamic Python Package Installation
2023-07-16T18:44:04,325553753+00:00 | gunicorn/run | ###############################################
2023-07-16T18:44:04,327255785+00:00 | gunicorn/run |
2023-07-16T18:44:04,329473427+00:00 | gunicorn/run | Updating conda environment from /var/azureml-app/azureml-models/sample-mlflow-sklearn-model/1/model/conda.yaml !
Retrieving notices: ...working... done
./run: line 148: 62 Killed conda env create -n userenv -f "${CONDA_FILENAME}"
Collecting package metadata (repodata.json): ...working... Error occurred. Sleeping to send error logs.
2023-07-16T18:45:08,494105751+00:00 - gunicorn/finish 95 0
2023-07-16T18:45:08,495996485+00:00 - Exit code 95 is not normal. Killing image.
```
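The key lines in this log are the repeated probe failures, the `Killed` line for `conda env create`, and exit code 95. A minimal Python sketch for pulling those signals out of a pasted log follows; `summarize_deployment_log` is a hypothetical helper, and reading a shell `Killed` message as a SIGKILL (often the Linux OOM killer) is an interpretation, not something the log states.

```python
import re
from collections import Counter


def summarize_deployment_log(log_text: str) -> dict:
    """Hypothetical helper: extract failure signals from a deployment log."""
    # Count probe-failure events by name, e.g. ReadinessProbeFailed
    probe_failures = Counter(re.findall(r"Name: (\w+ProbeFailed)", log_text))
    # The shell prints "<pid> Killed <command>" when a child gets SIGKILL,
    # which is what the OOM killer sends when memory runs out
    killed = re.findall(r"\d+ Killed\s+(.+)", log_text)
    # Exit code reported by the container runner before it gives up
    exit_match = re.search(r"Exit code (\d+) is not normal", log_text)
    return {
        "probe_failures": dict(probe_failures),
        "killed_commands": [cmd.strip() for cmd in killed],
        "exit_code": int(exit_match.group(1)) if exit_match else None,
    }


# Excerpt copied from the log above
sample = """Kind: Pod, Name: ReadinessProbeFailed, Type: Warning, Time: 2023-07-16T18:44:14.834845Z, Message: Readiness probe failed: HTTP probe failed with statuscode: 502
Kind: Pod, Name: LivenessProbeFailed, Type: Warning, Time: 2023-07-16T18:44:17.472304Z, Message: Liveness probe failed: HTTP probe failed with statuscode: 502
./run: line 148: 62 Killed conda env create -n userenv -f "${CONDA_FILENAME}"
2023-07-16T18:45:08,495996485+00:00 - Exit code 95 is not normal. Killing image."""

print(summarize_deployment_log(sample))
```

Read this way, the 502 probe failures look like a symptom: the inference server never came up because the dynamic conda environment build was killed first.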

I'm using the `Standard_F2s_v2` SKU for the endpoint.

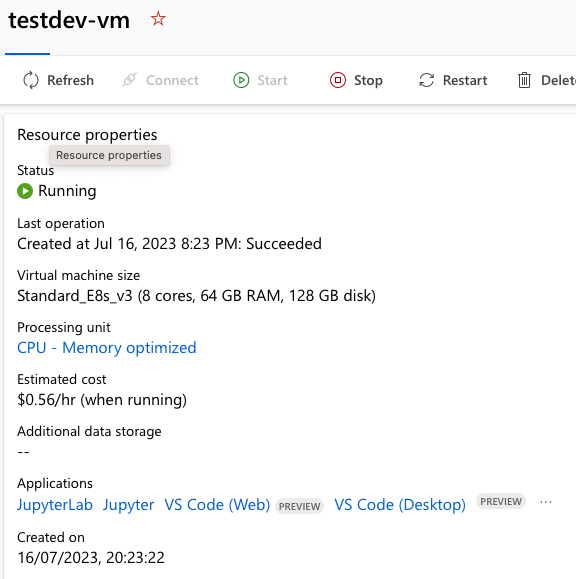

My compute VM is Standard_E8s_v3 (8 cores, 64 GB RAM, 128 GB disk).
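The deployment container runs on the endpoint's `instance_type`, not on the compute VM, so the memory that matters here is that of `Standard_F2s_v2` (2 vCPUs, 4 GiB RAM) rather than the 64 GB of the `Standard_E8s_v3`. The Python sketch below compares a few VM sizes. The candidate list, the `smallest_sku_for` helper, and the 8 GiB requirement are all illustrative assumptions; check the list of SKUs supported for managed online endpoints in your region before relying on any of them.

```python
from typing import NamedTuple, Optional


class VmSize(NamedTuple):
    name: str
    vcpus: int
    memory_gib: float


# Assumed candidates (vCPUs, GiB RAM) from the Azure VM size docs;
# availability for managed online endpoints is not verified here.
CANDIDATE_SKUS = [
    VmSize("Standard_F2s_v2", 2, 4),
    VmSize("Standard_DS2_v2", 2, 7),
    VmSize("Standard_F4s_v2", 4, 8),
    VmSize("Standard_DS3_v2", 4, 14),
    VmSize("Standard_E2s_v3", 2, 16),
]


def smallest_sku_for(memory_gib_needed: float,
                     max_vcpus: Optional[int] = None) -> Optional[VmSize]:
    """Return the candidate with the least RAM that meets the requirement.

    max_vcpus models a tight core quota: sizes above it are skipped.
    """
    fits = [
        sku for sku in CANDIDATE_SKUS
        if sku.memory_gib >= memory_gib_needed
        and (max_vcpus is None or sku.vcpus <= max_vcpus)
    ]
    # Prefer less memory first, then fewer vCPUs
    return min(fits, key=lambda s: (s.memory_gib, s.vcpus), default=None)


# Hypothetical requirement: 8 GiB for the conda build plus the model
print(smallest_sku_for(8).name)               # Standard_F4s_v2
print(smallest_sku_for(8, max_vcpus=2).name)  # Standard_E2s_v3
```

The `max_vcpus` filter is there because core quota, not memory, is often the limiting factor: a memory-optimized 2-vCPU size can fit where a 4-vCPU size would exceed the quota.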

Given this configuration, what would you recommend? If I need to request a quota increase for the endpoint SKU, how can I do that?
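When sizing a quota request, note that Azure Machine Learning documents reserving about 20% extra capacity for upgrades on managed online endpoint deployments (its example: 10 instances of a 4-core SKU need quota for 48 cores). The Python sketch below assumes that 20% figure and assumes the reserve is rounded up to whole instances. `cores_quota_needed` is a hypothetical helper, not part of any Azure SDK.

```python
def cores_quota_needed(instance_count: int, vcpus_per_instance: int,
                       reserve_percent: int = 20) -> int:
    """Estimate the vCPU quota a deployment needs, including the upgrade reserve.

    Assumption: the reserve is rounded up to a whole number of instances.
    """
    # Integer ceiling of instance_count * (100 + reserve_percent) / 100,
    # avoiding floating-point rounding surprises
    instances_with_reserve = -(-instance_count * (100 + reserve_percent) // 100)
    return instances_with_reserve * vcpus_per_instance


print(cores_quota_needed(10, 4))  # 48, matching the documented example
print(cores_quota_needed(1, 2))   # 4, e.g. one 2-vCPU instance
```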