@Saif Alramahi, thank you for posting this question on Microsoft Q&A. I understand that you are trying to send ADF logs to specific tables in a Log Analytics workspace.

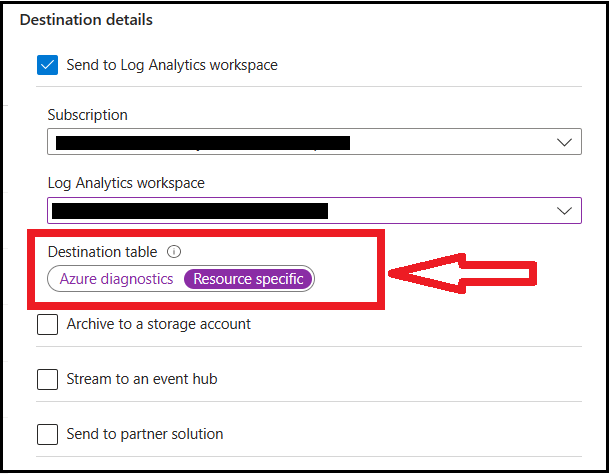

By default, Diagnostic settings offer only limited options for choosing the destination table when you use the "Send to Log Analytics workspace" option, as shown below:

For more details on the available options, please see Send to Log Analytics workspace.

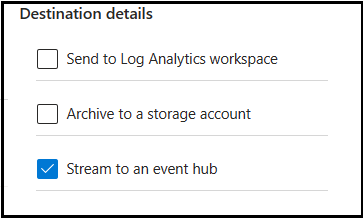

Coming to your requirement, you would need a custom solution to categorize logs into specific custom tables. For this, the "Stream to an event hub" option is a better choice than forwarding logs to the LA workspace directly.

With that in place, you can use an Azure Function with an Event Hub trigger and add logic that writes the logs to a custom table as they are forwarded. For more details, see Azure Event Hubs trigger for Azure Functions.

Azure Functions is a serverless solution that lets you write less code and maintain less infrastructure. With this approach, only the write to the destination needs to be coded; polling and triggering are handled by Event Hub and the Function App's trigger.
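As a rough illustration of the routing logic such a function might contain, here is a minimal Python sketch. It assumes the Event Hub message body is JSON with a top-level `records` array and that each record carries a `category` field such as `PipelineRuns`, `ActivityRuns` or `TriggerRuns`. The custom table names, the helper names and the `write` callback are hypothetical placeholders; the actual write to Log Analytics is left out and would need to be supplied by you.

```python
import json
from collections import defaultdict
from typing import Callable, Dict, List

# Hypothetical mapping from ADF log category to a custom table name.
# These table names are placeholders chosen for illustration only.
CATEGORY_TO_TABLE: Dict[str, str] = {
    "PipelineRuns": "AdfPipelineRuns_CL",
    "ActivityRuns": "AdfActivityRuns_CL",
    "TriggerRuns": "AdfTriggerRuns_CL",
}
DEFAULT_TABLE = "AdfOther_CL"  # catch-all for unmapped categories


def group_records_by_table(event_body: str) -> Dict[str, List[dict]]:
    """Split one Event Hub message body into per-table batches."""
    payload = json.loads(event_body)
    batches: Dict[str, List[dict]] = defaultdict(list)
    # Assumption: log entries sit in a top-level "records" array.
    for record in payload.get("records", []):
        table = CATEGORY_TO_TABLE.get(record.get("category"), DEFAULT_TABLE)
        batches[table].append(record)
    return dict(batches)


def handle_event(event_body: str,
                 write: Callable[[str, List[dict]], None]) -> None:
    """Route the records, then hand each batch to the supplied writer."""
    for table, rows in group_records_by_table(event_body).items():
        write(table, rows)
```

Inside a Function App, the Event Hub trigger handler would decode the incoming event body to a string and pass it to `handle_event`, with `write` being whatever ingestion call you choose for your custom tables. Keeping the routing separate from the write makes it easy to test the mapping locally without any Azure resources.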

Alternatively, if the logs are already available in the LA workspace (by using the default option of streaming logs to LA in Diagnostic settings), you can write custom functions to query only what you need. For details, see Use a function in LA Workspace.

Functions behave much like querying a custom log table, and they can be updated as requirements change. This is the easiest, almost maintenance-free way to achieve the goal in question: if the schema of the data streamed from ADF changes in the future, the data will still be in LA and only the LA function code might need to change. With Event Hub or other custom solutions, however, you will have to make sure that such changes are also reflected in the custom solution.

Hope this helps. If you have any questions, please let us know.

If the answer helped, please click Accept answer so that it can help others in the community looking for help on similar topics.