Hello @Nikos Fotiou !

Welcome to Microsoft QnA!

I understand you are having trouble mounting your NFS volume and the mount fails with exit status 32.

Some suggestions (source: https://stackoverflow.com/questions/34113569/kubernetes-nfs-volume-mount-fail-with-exit-status-32):

1. On RHEL/CentOS-based hosts, run the following on every master and worker node:

sudo yum install nfs-utils -y

2. On Debian/Ubuntu-based hosts, run the following on every K8s node:

sudo apt-get install -y nfs-common

3. Do one of the following:

a. Allow non-root users to mount NFS (on the server).

or

b. In the PersistentVolume spec, add:

mountOptions:

**- nfsvers=4.1**
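To show where `mountOptions` sits inside a PersistentVolume, here is a minimal sketch that writes an example manifest to a file. The PV name, capacity, server address, and export path are hypothetical placeholders, not values from your cluster, so replace them before applying anything.

```shell
#!/bin/sh
# Sketch: write a PersistentVolume manifest that pins the NFS protocol version.
# The PV name, size, server address, and export path are hypothetical placeholders.
manifest="${MANIFEST:-nfs-pv.yaml}"

cat > "$manifest" <<'EOF'
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv-example        # hypothetical name
spec:
  capacity:
    storage: 10Gi             # hypothetical size
  accessModes:
    - ReadWriteMany
  mountOptions:
    - nfsvers=4.1             # the mount option suggested above
  nfs:
    server: 10.0.0.4          # hypothetical NFS server address
    path: /export/data        # hypothetical export path
EOF

echo "Wrote $manifest; apply it with: kubectl apply -f $manifest"
```

After editing the placeholders, the file can be applied with `kubectl apply -f nfs-pv.yaml`.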

Also (source: https://learn.microsoft.com/en-us/troubleshoot/azure/azure-kubernetes/mounting-azure-blob-storage-container-fail#nfs-error2):

NFS 3.0 error 2: Exit status 32, access denied by server while mounting

Cause: AKS's VNET and subnet aren't allowed for the storage account

If the storage account's network is limited to selected networks, but the VNET and subnet of the AKS cluster aren't added to the selected networks, the mounting operation will fail.

Solution: Allow AKS's VNET and subnet for the storage account

- Identify the node that hosts the faulty pod by running the following command:

kubectl get pod <pod-name> -n <namespace> -o wide

Check the NODE column in the command output.

Go to the AKS cluster in the Azure portal, select Properties > Infrastructure resource group, access the VMSS associated with the node, and then check the Virtual network/subnet to identify the VNET and subnet.

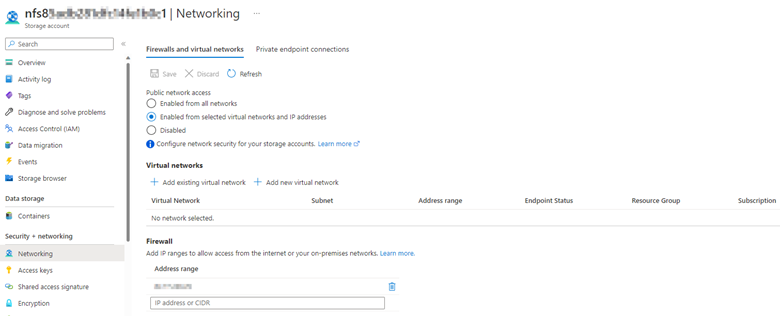

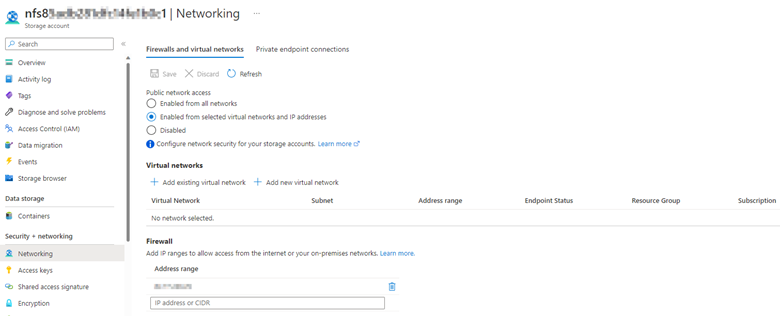

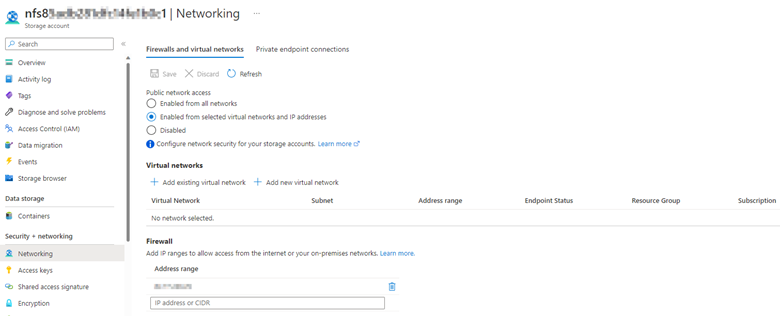
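If you prefer the command line to the portal, the same lookup can probably be done with `kubectl` and the Azure CLI. This is only a sketch: the function names are hypothetical helpers I made up for illustration, and it assumes both tools are installed and logged in to the right subscription and cluster.

```shell
#!/bin/sh
# Sketch: CLI lookup of the node, the infrastructure resource group, and the subnet.
# The function names are hypothetical helpers; all arguments are supplied by the caller.

# Print the node that hosts a pod.
# Usage: pod_node <pod-name> <namespace>
pod_node() {
  kubectl get pod "$1" -n "$2" -o jsonpath='{.spec.nodeName}'
}

# Print the infrastructure (node) resource group of an AKS cluster.
# Usage: node_resource_group <resource-group> <cluster-name>
node_resource_group() {
  az aks show --resource-group "$1" --name "$2" \
    --query nodeResourceGroup --output tsv
}

# Print the subnet resource ID used by a VMSS (the ID contains the VNET name).
# Usage: vmss_subnet_id <node-resource-group> <vmss-name>
vmss_subnet_id() {
  az vmss show --resource-group "$1" --name "$2" \
    --query 'virtualMachineProfile.networkProfile.networkInterfaceConfigurations[0].ipConfigurations[0].subnet.id' \
    --output tsv
}
```

The VMSS names in the infrastructure resource group can be listed with `az vmss list --resource-group <node-resource-group> --query "[].name" --output tsv`.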

- Access the storage account in the Azure portal, and then select Networking. If Public network access is set to Enabled from selected virtual networks or Disabled, and the connectivity isn't through a private endpoint, check if the VNET and subnet of the AKS cluster are allowed under Firewalls and virtual networks.

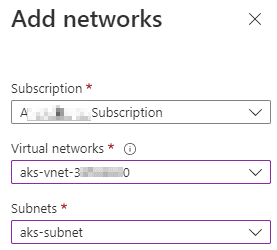

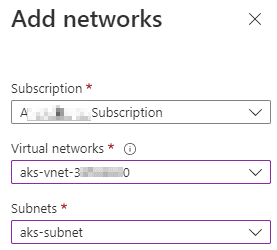

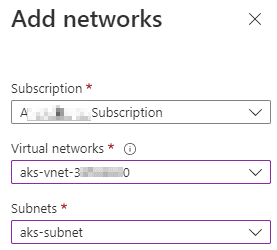

If the VNET and subnet of the AKS cluster aren't added, select Add existing virtual network. On the Add networks page, type the VNET and subnet of the AKS cluster, and then select Add > Save.

It may take a few moments for the changes to take effect. After the VNET and subnet are added, check if the pod status changes from ContainerCreating to Running.
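The portal steps above also have a rough Azure CLI equivalent. Treat this as a sketch under assumptions: the function names are hypothetical, and it assumes the `Microsoft.Storage` service endpoint is already enabled on the subnet (the portal normally handles that for you, the CLI may not).

```shell
#!/bin/sh
# Sketch: allow the AKS subnet on the storage account firewall, then watch the pod.
# The function names are hypothetical helpers; all arguments are supplied by the caller.

# Add a virtual network rule for the given subnet resource ID.
# Usage: allow_subnet <storage-resource-group> <storage-account> <subnet-resource-id>
allow_subnet() {
  az storage account network-rule add \
    --resource-group "$1" --account-name "$2" --subnet "$3"
}

# Watch the pod until its STATUS changes (stop with Ctrl+C).
# Usage: watch_pod <pod-name> <namespace>
watch_pod() {
  kubectl get pod "$1" -n "$2" --watch
}
```

When `--subnet` is given a full resource ID, `--vnet-name` is not needed; if you pass only the subnet name, add `--vnet-name <vnet-name>` as well.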

If these suggestions do not help, please share some logs or your general configuration so we can dig deeper!
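In case it is useful, the kind of output that usually helps with a mount failure could be gathered with something like the sketch below. The function name is a hypothetical helper, and it assumes `kubectl` already points at the affected cluster.

```shell
#!/bin/sh
# Sketch: collect pod, event, and volume details for a failing mount.
# The function name is a hypothetical helper.
# Usage: collect_mount_diagnostics <pod-name> <namespace>
collect_mount_diagnostics() {
  pod="$1"
  ns="$2"
  echo "== describe pod =="
  kubectl describe pod "$pod" -n "$ns"
  echo "== events for the pod =="
  kubectl get events -n "$ns" \
    --field-selector "involvedObject.name=$pod" --sort-by=.lastTimestamp
  echo "== persistent volumes and claims =="
  kubectl get pv
  kubectl get pvc -n "$ns"
}
```

For example, `collect_mount_diagnostics <pod-name> <namespace> > diagnostics.txt` saves everything to one file; please review it for sensitive values before posting.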

I hope this helps!

Kindly mark the answer as Accepted and Upvote in case it helped!

Regards